For years, the NLP Lab empowered healthcare teams with a no-code platform to develop and manage AI in a secure environment. It enabled organizations to create domain-specific models, label data, and comply with regulations without needing software engineers.

Now, evolving demands are pushing that foundation to its limits. Requirements like full auditability, multimodal review, and private LLM workflows are increasingly common in RFPs and audit checklists. Scanned referrals, DICOM images, and LLM-generated summaries now share workflows, with regulators expecting unified oversight across them.

NLP Lab wasn’t designed for this scale, oversight, or data complexity. Its successor, Generative AI Lab, addresses these challenges by integrating local LLMs, multi-format labeling, versioned assets, and policy-driven review in a single, secure platform.

The following sections explore how the Generative AI Lab meets modern enterprise needs and why healthcare leaders are adopting it.

What made NLP Lab indispensable?

NLP Lab empowered healthcare teams to securely process unstructured data in AI workflows. Its core value boiled down to three strengths:

- No-code labeling and tuning: Domain experts could tag data, fine-tune models, and review results in a visual interface, selecting from 6,600-plus healthcare models without writing code.

- Compliance and audit built in: Role-based access, multi-factor authentication, and append-only logs helped teams meet the requirements of the Health Insurance Portability and Accountability Act (HIPAA) and the General Data Protection Regulation (GDPR).

- Flexible and economical deployment: One license covers cloud, on-premises, and air-gapped environments, keeping data local and costs predictable.

These advantages made NLP Lab the trusted starting point for regulated AI, but expanding data formats and stricter oversight now call for something broader.

Advancing AI: How Generative AI Lab Meets Healthcare Challenges

In 2025, a wave of new rules and budget realities shapes how regulated enterprises build and govern AI.

Population-level audits replace sample checks

The Centers for Medicare & Medicaid Services (CMS) now plans to audit every eligible Medicare Advantage contract each year. Meanwhile, the Securities and Exchange Commission (SEC) requires listed firms to file a Form 8-K within four business days of determining that a cybersecurity incident is material. These rules expand scrutiny from small samples to entire populations.

Review teams, especially in healthcare, need platforms that link every claim, trade, or disclosure to the exact sentence, scan, or log entry that supports it, and that capture reviewer sign-off in a tamper-proof record.

Regulations move faster than release cycles

With CMS adding 29 payment categories and removing more than 2,000 ICD-10 codes in version 28 of the Hierarchical Condition Category (HCC) model, coders and risk teams must adapt quickly to stay compliant.

A condition that was billable last quarter may no longer qualify, and new splits require coders to follow updated logic. To keep up, domain experts need no-code tools to update prompts and rules without waiting for engineering. Without that flexibility, teams risk submission errors and delayed reporting.

Governance now requires visibility and control

Security teams need clear proof of how models handle protected health information. Clinicians and legal reviewers expect direct access to refine prompts and update models.

To meet these needs, organizations are shifting to self-hosted LLMs, versioned assets, and append-only logs that support governed, no-code workflows within their own infrastructure.

Evidence spans far more than structured text

Clinical notes, scanned PDFs, images, and LLM-generated summaries all serve as evidence, and regulatory guidance now expects full traceability across all of them. Many teams still rely on separate tools for each format: one system handles PDF redaction, another labels images, and a third manages text annotation. This fragmented setup adds cost, complexity, and audit risk.

A unified platform that handles all formats within a single workflow simplifies compliance and ensures that no evidence is overlooked.

AI budgets are under pressure

With rising demands for traceability and audit readiness, regulated teams now expect AI tools to run securely on-prem, deliver explainable results, and maintain predictable costs. In response, many organizations are shifting to compact, task-specific models that run on local infrastructure, reducing spend while keeping sensitive data in-house.

As expectations around cost, compliance, and oversight continue to grow, Generative AI Lab extends the foundation NLP Lab laid.

Generative AI Lab: Designed for Advanced Enterprise Demands

The NLP Lab simplified AI deployment by eliminating coding needs, enabling teams to work with data in place. The Generative AI Lab preserves that accessibility while introducing advanced governance, automation, and multimodal capabilities to meet today’s enterprise demands.

Audit-ready logging

NLP Lab kept a basic record of who labeled what and when. That worked for internal tracking, but it wasn’t built for external audits. Generative AI Lab introduces audit logs that can’t be quietly changed or overwritten. You can log every user or system action and stream the data to an Elastic-compatible Security Information and Event Management (SIEM) system, giving auditors a tamper-proof log on demand.
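One common way to make a log tamper-evident is to hash-chain its entries: each entry stores the hash of the one before it, so editing an earlier record breaks the chain from that point on. The Python sketch below illustrates that general technique only; it is not Generative AI Lab's implementation, and the `HashChainedLog` class, its fields, and the sample actors are hypothetical. Timestamps are omitted to keep the output deterministic.

```python
import hashlib
import json


class HashChainedLog:
    """Append-only log where each entry commits to the hash of the entry before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # Canonical JSON (sorted keys) so identical content always yields the same hash
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, actor, action, target):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"actor": actor, "action": action, "target": target, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": self._digest(body)})

    def verify(self):
        """Recompute every hash; edited or reordered entries break the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainedLog()
log.append("annotator_17", "label", "task-42")
log.append("reviewer_3", "approve", "task-42")
print(log.verify())  # True

log.entries[0]["actor"] = "someone_else"  # a "quiet" edit to history
print(log.verify())  # False
```

A hash chain makes edits detectable rather than impossible. In practice it is paired with write-once storage or an external copy, such as a SIEM, so that nobody can silently rewrite the entire chain.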

Private, predictable LLM workloads

NLP Lab can pre-annotate with Spark NLP and, when needed, send zero-shot prompts to third-party LLM services. That option delivers quick wins but raises token fees and data-residency concerns.

Generative AI Lab ships an on-prem prompt engine that processes text inside your environment by default. External connectors stay off until compliance approves them, keeping costs and privacy under local control.

Central governance for models and prompts

NLP Lab stores models, rules, and prompts within individual projects, which gives teams flexibility but little cross-project visibility. Generative AI Lab introduces an enterprise Models Hub where every asset is versioned, searchable, and protected by role-based access, enabling security officers to trace lineage and roll back if necessary.

Built-in evaluation workflows

NLP Lab relies on exports and spreadsheets for model scoring, a workable method that adds manual steps and scattered evidence. Generative AI Lab adds project types for LLM evaluation and side-by-side comparisons, allowing domain experts to grade responses and view accuracy dashboards without leaving the platform.

Continuous testing and active learning

NLP Lab lets users retrain models when new data arrives, but bias and robustness checks require outside tools. Generative AI Lab integrates LangTest to run automated test suites, then launches data-augmentation and active-learning loops when reviewers resolve low-confidence cases, keeping models aligned with evolving policies while limiting manual effort.
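Routing low-confidence cases to reviewers resembles least-confidence sampling, a common active-learning strategy. The sketch below assumes each prediction carries a confidence score. The `Prediction` type, the 0.7 threshold, the review budget, and the label names are illustrative assumptions, not Generative AI Lab settings.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    doc_id: str
    label: str
    confidence: float  # model probability for its predicted label (0.0 to 1.0)


def select_for_review(preds, threshold=0.7, budget=50):
    """Least-confidence sampling: queue the model's least certain predictions first."""
    uncertain = [p for p in preds if p.confidence < threshold]
    uncertain.sort(key=lambda p: p.confidence)
    return uncertain[:budget]


def merge_corrections(training_labels, corrections):
    """Fold reviewer-corrected labels (doc_id -> label) into the training set."""
    updated = dict(training_labels)
    updated.update(corrections)
    return updated


preds = [
    Prediction("note-1", "ADVERSE_EVENT", 0.95),
    Prediction("note-2", "ADVERSE_EVENT", 0.52),
    Prediction("note-3", "NO_EVENT", 0.61),
    Prediction("note-4", "NO_EVENT", 0.88),
]
queue = select_for_review(preds)
print([p.doc_id for p in queue])  # ['note-2', 'note-3']

# A reviewer overturns note-2; the corrected labels feed the next retraining run.
training = merge_corrections(
    {"note-1": "ADVERSE_EVENT"},
    {"note-2": "NO_EVENT", "note-3": "NO_EVENT"},
)
```

Spending reviewer time on the least certain predictions is what lets each correction teach the model more than a randomly chosen label would.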

Ready-made multimodal templates

NLP Lab focuses on text annotation and basic image labeling, which means scanned forms or handwriting need custom setups. Generative AI Lab adds templates for scanned PDFs with OCR, bounding-box annotation, handwriting detection, and healthcare accelerators such as HCC and CPT coding, so teams can start specialized workflows in minutes instead of weeks.

Generative AI Lab elevates the familiar NLP Lab’s no-code capabilities into a comprehensive platform that meets current demands for scale, governance, and cost control. The use cases below highlight the transformative business gains enterprises can obtain through this strategic upgrade.

Real-time, audit-ready evidence

The Generative AI Lab streams every user and system event into an append-only Elasticsearch index that lives in your virtual private cloud, ensuring complete and immediate traceability for regulatory compliance.

For instance, a compliance officer can filter the log and export a tamper-evident file in under an hour, freeing staff from the time-consuming task of merging logs and reducing the likelihood of missing a critical entry.

Run LLMs on-prem and keep costs predictable

With the Generative AI Lab, the built-in prompt engine runs on your local GPUs, ensuring that protected health information (PHI) remains behind the firewall. You can leave cloud connectors off until security signs off, allowing finance to forecast LLM expenses like any other internal workload and reducing the chance of unexpected token fees.

Govern models and prompts from one source of truth

The platform’s role-based Models Hub stores every prompt, rule, and model with a full version history, ensuring consistent governance across teams and use cases. When guidelines change, your lead clinicians can publish an update, and audit teams can still reference earlier versions for year-over-year analysis. This clear change control can shorten approvals and limit policy drift.

Choose LLM providers with hard data

Built-in evaluation projects enable domain experts to score outputs from multiple models and view accuracy dashboards within the same interface. For instance, procurement teams can compare performance and cost before signing a contract, helping you negotiate from a stronger position and plan long-term ownership costs.
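A side-by-side evaluation ultimately reduces to a few numbers per model. This hypothetical sketch computes accuracy from expert grades and cost per correct answer. The model names, counts, and costs are placeholders rather than benchmark results, and ranking by accuracy before cost is only one possible policy.

```python
from dataclasses import dataclass


@dataclass
class GradedRun:
    model: str
    correct: int     # responses graded correct by domain experts
    total: int       # responses graded in the evaluation project
    cost_usd: float  # spend for the evaluated batch


def summarize(runs):
    """Return (model, accuracy, cost per correct answer), best accuracy first, cheaper first on ties."""
    rows = []
    for r in runs:
        accuracy = r.correct / r.total
        cost_per_correct = r.cost_usd / r.correct if r.correct else float("inf")
        rows.append((r.model, accuracy, cost_per_correct))
    rows.sort(key=lambda row: (-row[1], row[2]))
    return rows


# Placeholder numbers for illustration only.
runs = [
    GradedRun("model-a", correct=180, total=200, cost_usd=36.0),
    GradedRun("model-b", correct=176, total=200, cost_usd=8.8),
    GradedRun("model-c", correct=180, total=200, cost_usd=45.0),
]
for model, acc, cpc in summarize(runs):
    print(f"{model}: accuracy {acc:.1%}, ${cpc:.2f} per correct answer")
```

Here model-b is far cheaper per correct answer but two points less accurate; whether that trade is acceptable is a procurement decision the numbers inform rather than make.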

Keep quality high with scheduled tests and active learning

Generative AI Lab runs LangTest suites to check bias and robustness on a schedule you set. When reviewers correct low-confidence cases, the platform can retrain the model in the background, helping maintain accuracy and fairness.

Launch multimodal projects in weeks, not months

Ready-made templates handle scanned PDFs, handwriting, and OCR bounding boxes. An insurance team, for example, can build a claims-triage proof of concept in a few hours and move to production in weeks, saving custom development time and bringing automation value forward.

Automate risk-adjustment coding with linked evidence

HCC templates help extract ICD-10 codes, map them to HCC categories, and suggest Risk Adjustment Factor (RAF) deltas while keeping the source text linked for audit readiness. Senior coders can review high-impact cases in a side-by-side view, ensuring accurate submissions. This evidence-driven approach can improve risk-adjusted revenue and lower the chance of claw-backs during audits.
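The core arithmetic behind a RAF delta can be sketched as follows, under heavy simplifying assumptions: the code-to-HCC mappings and coefficients are placeholders rather than real CMS-HCC values, and real scoring also applies HCC hierarchies, demographic factors, interaction terms, and normalization. The sketch shows the shape of the calculation, not a working risk model.

```python
# Placeholder mappings and weights for illustration only; NOT real CMS-HCC values.
ICD10_TO_HCC = {
    "CODE_A": "HCC_X",
    "CODE_B": "HCC_Y",
    "CODE_C": "HCC_Y",  # several codes can roll up to the same HCC
}
HCC_COEFFICIENT = {"HCC_X": 0.30, "HCC_Y": 0.15}


def raf_score(icd10_codes):
    """Sum coefficients of the distinct HCCs the codes map to.

    Simplified: ignores hierarchies, demographics, interactions, and normalization.
    Codes without a mapping contribute nothing.
    """
    hccs = {ICD10_TO_HCC[c] for c in icd10_codes if c in ICD10_TO_HCC}
    return sum(HCC_COEFFICIENT[h] for h in hccs)


def raf_delta(submitted, suggested):
    """Score change if the suggested codes were added to the already-submitted ones."""
    return raf_score(set(submitted) | set(suggested)) - raf_score(submitted)


print(round(raf_delta(["CODE_B"], ["CODE_A"]), 2))  # 0.3
print(round(raf_delta(["CODE_B"], ["CODE_C"]), 2))  # 0.0
```

Because `CODE_C` rolls up to an HCC that is already captured, suggesting it adds nothing to the score; surfacing that distinction is what lets senior coders concentrate on the cases with real impact.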

Scale operations without adding headcount

Your team can process hundreds of thousands of documents without hiring more annotators by using bulk task assignment, background imports, and GPU-ready cloud images. This helps increase throughput while keeping labor costs steady, turning workload spikes into manageable compute spend.

Real-world impact: How healthcare leaders are using Generative AI Lab

The challenge

FDA pharmacovigilance analysts needed to spot opioid-related adverse events hidden in free-text discharge summaries. Manual review took weeks and offered limited traceability, leaving leadership with a slow, resource-heavy process and no audit-ready evidence.

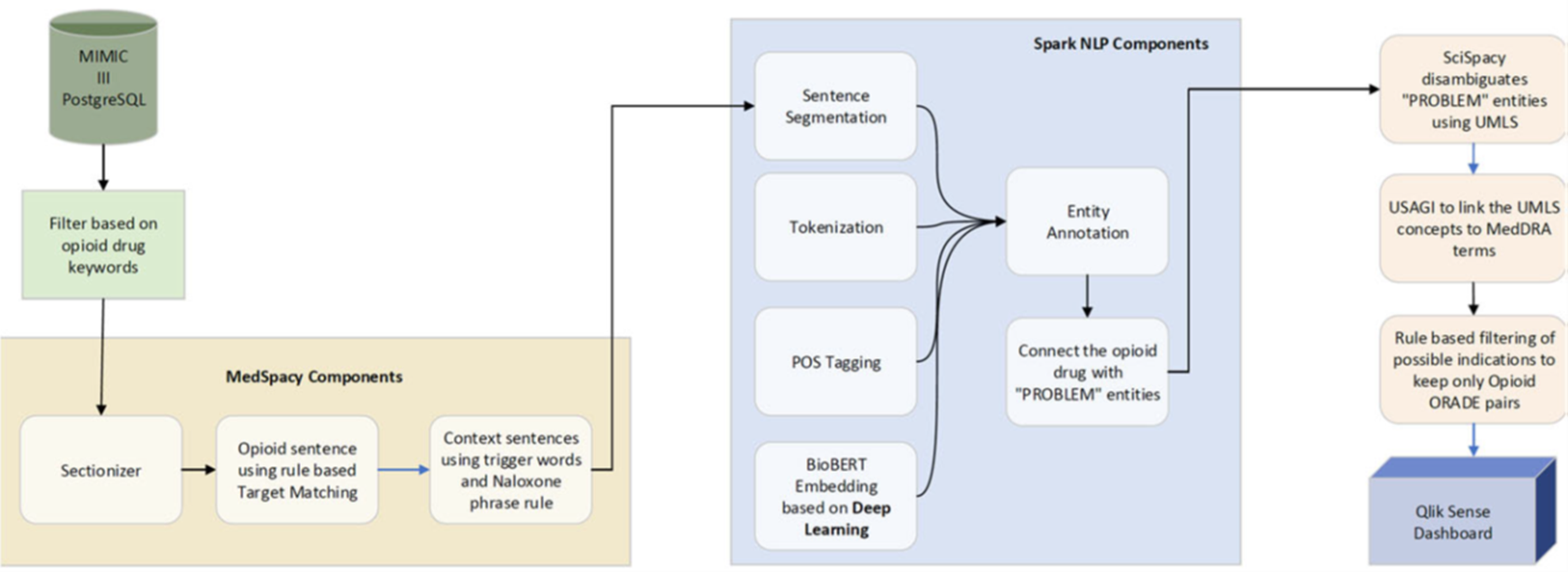

The approach

Teams loaded forty-seven discharge summaries into Generative AI Lab, applied rule triggers, and fine-tuned a clinical model to extract drug names, adverse-event terms, and trigger phrases.

Findings were mapped to SNOMED CT and RxNorm, and every annotation and model change landed in the platform’s append-only log. An interactive dashboard then combined coded data with original text for quick review.

The business impact

Analysts condensed fifty pages of narrative into thirty validated drug-event pairs, surfacing known toxicities and potential new signals. Leadership gained an auditable evidence chain without adding headcount, and protected data never left agency infrastructure. Hospitals now reuse the same workflow to flag chemotherapy toxicities, and payers apply it to detect high-risk prescribing.

What comes next for compliance-driven AI programs

Regulatory oversight is intensifying, and the need to work across structured and unstructured formats is now standard. Simultaneously, teams are being asked to do more with fewer resources, without compromising accuracy or audit readiness.

Generative AI Lab helps meet this challenge by providing a secure, no-code platform that scales across various use cases and formats, while maintaining full control over sensitive data.

You can manage clinical notes, scanned documents, and imaging data in one unified workspace, applying consistent policies and capturing every reviewer’s action with an append-only audit trail. Active and transfer learning enable teams to continually improve models as they work, reducing the burden on engineering and shortening delivery cycles.

Join our upcoming webinar to see how healthcare teams are using Generative AI Lab to de-identify patient data across formats while maintaining full privacy controls and audit readiness.

If you’re evaluating how this approach could support your compliance goals, you can also schedule a custom demo tailored to your environment and operational priorities.

Frequently Asked Questions

How is data kept private when LLMs are involved?

All bundled LLMs run within your infrastructure, whether on-premises, in a private cloud, or in an air-gapped environment. Data stays local unless you manually enable an external connector. Inference activity is recorded in the audit trail, and models are governed in the Models Hub with role-based access control.

Can we train models without using external LLM APIs?

Yes. The platform supports zero-shot prompting, active learning, and fine-tuning using local models and internal compute resources. There is no need for external APIs or token-based billing.

Can we use Generative AI Lab for Medicare Risk Adjustment and coding support?

Yes. Version 7 includes HCC coding templates, automatic ICD-to-HCC mapping, RAF triage fields, and audit-ready logs. An optional Databricks integration can pre-annotate charts and send high-value cases for human validation, helping prepare for RADV audits.