What is the purpose of integrating medical LLMs for patient journeys?

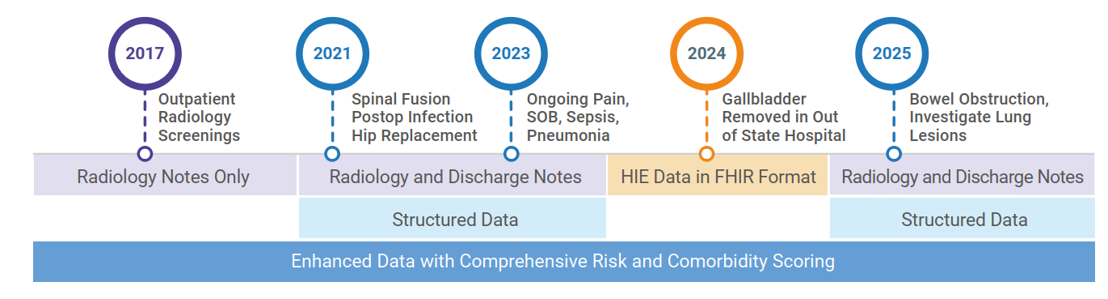

Integrating Document Understanding, Reasoning, and Conversational Medical LLMs (Large Language Models) enables healthcare organizations to construct longitudinal, context-rich patient journeys. This integration supports better clinical decision-making, accurate cohort building, and scalable healthcare data analysis. John Snow Labs has developed a robust solution that combines multiple modalities of patient data, supports natural language querying, and delivers clinically relevant answers, all within the organization’s secure perimeter.

How does document understanding contribute to patient journey modeling?

Document understanding is the foundation of the system. It ingests structured, semi-structured, and unstructured data, including:

- PDFs and scanned documents

- Free-text notes

- FHIR messages

- Tabular data
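
To show what "ingesting" heterogeneous sources can mean in practice, here is a minimal sketch, assuming every input is flattened into one common fact record before any NLP or reasoning runs. It covers only two of the four formats: a FHIR Condition resource and a CSV table. PDFs, scans, and free-text notes would first need OCR and entity extraction, which is not shown. `ClinicalFact`, `from_fhir_condition`, `from_csv`, the CSV column names, and the placeholder code system are all hypothetical illustrations, not John Snow Labs APIs.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClinicalFact:
    """One format-independent fact; every ingestion path produces these."""
    patient_id: str
    concept_text: str
    code_system: Optional[str]
    code: Optional[str]
    date: Optional[str]
    source: str  # provenance: where the fact came from


def from_fhir_condition(resource_json: str) -> ClinicalFact:
    """Flatten a FHIR Condition resource (JSON string) into a ClinicalFact."""
    res = json.loads(resource_json)
    if res.get("resourceType") != "Condition":
        raise ValueError("expected a FHIR Condition resource")
    code = res.get("code", {})
    coding = (code.get("coding") or [{}])[0]  # first coding, if any
    return ClinicalFact(
        patient_id=res["subject"]["reference"].split("/")[-1],  # "Patient/p42" -> "p42"
        concept_text=coding.get("display") or code.get("text", ""),
        code_system=coding.get("system"),
        code=coding.get("code"),
        date=res.get("onsetDateTime"),
        source=f"fhir:Condition/{res.get('id', '?')}",
    )


def from_csv(text: str) -> list[ClinicalFact]:
    """Parse a table with hypothetical columns patient_id, diagnosis, date."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        ClinicalFact(row["patient_id"], row["diagnosis"], None, None,
                     row["date"], f"csv:row{i}")
        for i, row in enumerate(reader, start=1)
    ]


# Usage with made-up records (the system URL and code are placeholders).
fhir_json = json.dumps({
    "resourceType": "Condition",
    "id": "c1",
    "subject": {"reference": "Patient/p42"},
    "code": {"coding": [{"system": "http://example.org/codes",
                         "code": "X1", "display": "Atrial fibrillation"}]},
    "onsetDateTime": "2021-03-04",
})
facts = [from_fhir_condition(fhir_json)]
facts += from_csv("patient_id,diagnosis,date\np42,left hip pain,2022-01-10\n")
for fact in facts:
    print(fact)
```

Keeping a `source` field on every fact from the start is what later makes provenance-backed answers possible.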

Using state-of-the-art NLP models and LLMs, John Snow Labs extracts over 400 types of medical entities, such as:

- Symptoms, diagnoses, and medications

- Tumor characteristics and psychological abnormalities

- Social determinants of health

These entities are contextualized with assertion status (e.g., confirmed, negated, hypothetical), temporal information, and source provenance. Medical terminology mapping is handled by an AI-enabled terminology server that uses semantic matching to map extracted terms to codes in SNOMED CT, ICD-10, LOINC, and RxNorm.
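
The sketch below illustrates what a contextualized entity might carry and how assertion status changes what reaches the patient timeline. Two things are assumptions. First, the three-word vocabulary with placeholder codes stands in for real SNOMED CT, ICD-10, LOINC, and RxNorm content. Second, Python's `difflib` string similarity is swapped in for the embedding-based semantic matching a terminology server would actually use. `Entity`, `Assertion`, `VOCAB`, and `map_to_code` are hypothetical names.

```python
import difflib
from dataclasses import dataclass
from enum import Enum


class Assertion(Enum):
    PRESENT = "present"
    ABSENT = "absent"              # negated, e.g. "denies chest pain"
    HYPOTHETICAL = "hypothetical"  # e.g. "return if fever develops"


@dataclass
class Entity:
    text: str
    label: str                  # entity type, e.g. "Symptom"
    assertion: Assertion
    date: str                   # temporal anchor (ISO 8601)
    doc_id: str                 # provenance: source document
    char_span: tuple[int, int]  # provenance: character offsets in that document


# Hypothetical mini-vocabulary: preferred term -> placeholder code.
VOCAB = {
    "atrial fibrillation": "CODE-001",
    "hip pain": "CODE-002",
    "type 2 diabetes mellitus": "CODE-003",
}


def map_to_code(text: str, cutoff: float = 0.6):
    """Return (preferred_term, code) for the closest vocabulary term, or None.

    difflib similarity is a stand-in for true semantic (embedding) matching.
    """
    match = difflib.get_close_matches(text.lower(), list(VOCAB), n=1, cutoff=cutoff)
    if not match:
        return None
    return match[0], VOCAB[match[0]]


entities = [
    Entity("atrial fibrilation", "Diagnosis", Assertion.PRESENT,
           "2021-03-04", "note-17", (112, 130)),  # misspelled in the source note
    Entity("chest pain", "Symptom", Assertion.ABSENT,
           "2021-03-04", "note-17", (45, 55)),
]

# Only affirmed findings feed the timeline; negated ones are kept for audit only.
confirmed = [e for e in entities if e.assertion is Assertion.PRESENT]
for e in confirmed:
    print(e.text, "->", map_to_code(e.text), "from", e.doc_id, e.char_span)
```

Note that the misspelled "atrial fibrilation" still resolves to the intended term, which is the practical point of tolerant matching over exact string lookup.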

Why is reasoning essential in longitudinal patient modeling?

Patient data is messy, redundant, and distributed across multiple encounters and data systems. Reasoning models resolve inconsistencies, deduplicate facts, and infer information that is not stated explicitly. For example:

- Consolidating “hip pain,” “left hip pain,” and “pain” into a single event

- Preferring newer, more accurate diagnoses over older, outdated ones

- Inferring clinical status from biometric data (e.g., calculating BMI or risk scores)
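
The three reasoning steps listed above can be approximated with simple rules, which helps make clear what the models are doing. This is a toy sketch under stated assumptions, not the production logic: consolidation here merges mentions from the same encounter when one term is a substring of another, "newer wins" is a plain max-by-date, and the BMI categories use the standard WHO adult cut-offs. `Mention`, `consolidate`, `latest_diagnosis`, `bmi`, and `bmi_category` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Mention:
    text: str
    encounter: str
    on: date


def consolidate(mentions: list[Mention]) -> list[Mention]:
    """Fold less specific mentions into more specific ones within an encounter.

    Toy heuristic: "pain" and "hip pain" both fold into "left hip pain".
    """
    kept: list[Mention] = []
    # Longest (most specific) first, so shorter mentions fold into it.
    for m in sorted(mentions, key=lambda m: len(m.text), reverse=True):
        if not any(m.encounter == k.encounter and m.text in k.text for k in kept):
            kept.append(m)
    return kept


def latest_diagnosis(diagnoses: list[tuple[date, str]]) -> str:
    """Prefer the most recent diagnosis over older, possibly outdated ones."""
    return max(diagnoses, key=lambda d: d[0])[1]


def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index = weight in kg divided by height in metres squared."""
    return weight_kg / height_m ** 2


def bmi_category(value: float) -> str:
    """WHO adult BMI categories."""
    if value < 18.5:
        return "underweight"
    if value < 25:
        return "normal"
    if value < 30:
        return "overweight"
    return "obese"


visit = [Mention("pain", "enc-1", date(2023, 5, 2)),
         Mention("left hip pain", "enc-1", date(2023, 5, 2)),
         Mention("hip pain", "enc-1", date(2023, 5, 2))]
events = consolidate(visit)
dx = latest_diagnosis([(date(2019, 1, 5), "suspected rheumatoid arthritis"),
                       (date(2023, 5, 2), "osteoarthritis of left hip")])
value = bmi(95.0, 1.75)
print([m.text for m in events], dx, round(value, 1), bmi_category(value))
```

Rules like these break down quickly on real records (e.g., "pain" in one note may refer to a different site), which is why the article relies on trained reasoning models rather than hand-written heuristics.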

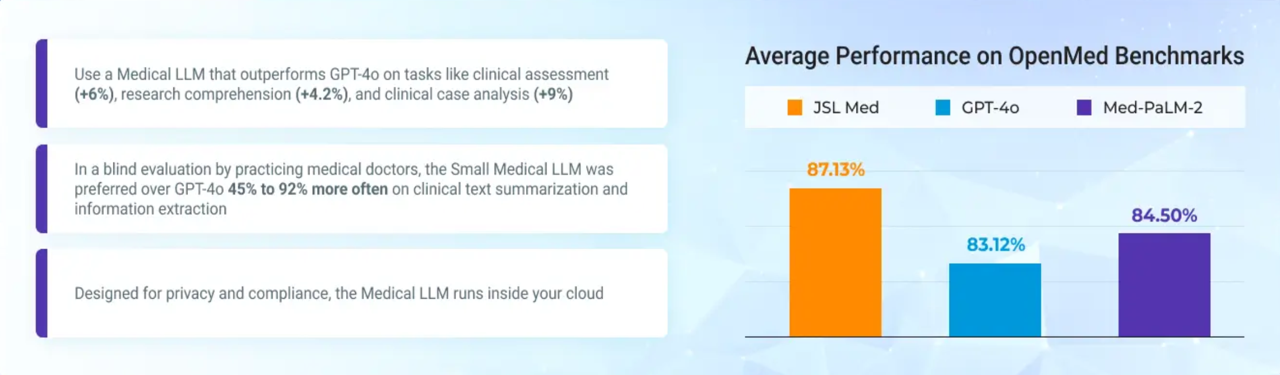

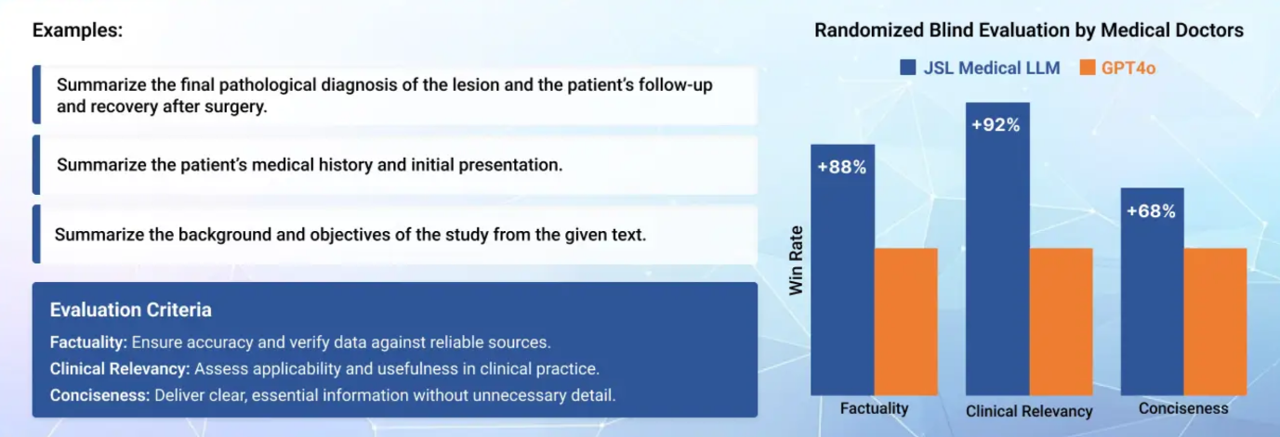

John Snow Labs’ Medical Reasoning LLMs are trained on over 62,000 clinical reasoning traces and integrate medical decision trees. These models:

- Provide transparent chains of thought

- Support uncertainty quantification

- Enable multi-step inference

On the OpenMed, BigBench-Hard, and Math500 benchmarks, they outperform general-purpose LLMs such as GPT-4o, Qwen2.5, and DeepSeek R1.
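
The list above mentions uncertainty quantification. One widely used technique is self-consistency: sample several reasoning chains for the same question and measure how much their final answers agree. The sketch below assumes the sampled final answers are already available as strings (the model call is not shown) and is not necessarily how John Snow Labs' models quantify uncertainty. `answer_with_confidence` is a hypothetical name.

```python
import math
from collections import Counter


def answer_with_confidence(samples: list[str]) -> tuple[str, float, float]:
    """Return (majority answer, agreement rate, normalized answer entropy).

    Agreement near 1 and spread near 0 mean the sampled chains concur.
    """
    if not samples:
        raise ValueError("need at least one sampled answer")
    counts = Counter(s.strip().lower() for s in samples)
    answer, votes = counts.most_common(1)[0]
    n = len(samples)
    agreement = votes / n
    # Shannon entropy of the answer distribution, scaled to [0, 1].
    probs = [c / n for c in counts.values()]
    entropy = sum(-p * math.log(p) for p in probs)
    k = len(counts)
    spread = entropy / math.log(k) if k > 1 else 0.0
    return answer, agreement, spread


# Five hypothetical final answers from independently sampled reasoning chains.
samples = ["anticoagulate", "anticoagulate", "Anticoagulate ",
           "rate control only", "anticoagulate"]
answer, agreement, spread = answer_with_confidence(samples)
print(answer, agreement, round(spread, 2))
```

A downstream application could, for example, route answers with low agreement to a clinician for review instead of presenting them directly.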

How do conversational LLMs facilitate cohort building and clinical Q&A?

Once the data has been normalized, enriched with inferred facts, and loaded into a high-quality OHDSI OMOP data model, users can query the system in natural language. For instance:

Q: “What drugs are prescribed after an atrial fibrillation diagnosis?”

This query is interpreted semantically, mapped to the correct concepts by the terminology server, and executed by optimized SQL agents. The process ensures:

- Accurate results using validated queries

- Consistency across repeated runs

- Sub-second response times over massive datasets

This is not a generic text-to-SQL problem. It’s an agentic workflow where the model plans the steps, selects from validated queries, and explains its choices.
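
To make "selects from validated queries" concrete, here is a minimal sketch that runs the atrial fibrillation example against a trimmed, in-memory version of two OMOP CDM tables (`condition_occurrence` and `drug_exposure`, using their standard column names). It assumes the LLM planning step has already turned the question into an intent plus a condition name; that step is not shown. The template library, the `answer` function, and the concept IDs (1001, 2001, 2002) are hypothetical placeholders, not real OMOP concept IDs.

```python
import sqlite3

# Hypothetical library of validated, parameterized queries keyed by intent.
VALIDATED_QUERIES = {
    "drugs_after_condition": """
        SELECT d.drug_concept_id, COUNT(DISTINCT d.person_id) AS n_patients
        FROM condition_occurrence c
        JOIN drug_exposure d ON d.person_id = c.person_id
        WHERE c.condition_concept_id = ?
          AND d.drug_exposure_start_date > c.condition_start_date
        GROUP BY d.drug_concept_id
        ORDER BY n_patients DESC, d.drug_concept_id
    """,
}

# Hypothetical concept IDs standing in for terminology-server lookups.
CONCEPTS = {"atrial fibrillation": 1001}


def answer(intent: str, condition: str, conn: sqlite3.Connection) -> list[tuple]:
    """Run a vetted SQL template instead of free-form generated SQL."""
    sql = VALIDATED_QUERIES[intent]           # KeyError -> unsupported question
    concept_id = CONCEPTS[condition.lower()]  # resolved concept, not raw text
    return conn.execute(sql, (concept_id,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE condition_occurrence (
        person_id INTEGER, condition_concept_id INTEGER, condition_start_date TEXT);
    CREATE TABLE drug_exposure (
        person_id INTEGER, drug_concept_id INTEGER, drug_exposure_start_date TEXT);
    INSERT INTO condition_occurrence VALUES (1, 1001, '2022-01-10'), (2, 1001, '2022-06-01');
    INSERT INTO drug_exposure VALUES
        (1, 2001, '2022-01-15'), (2, 2001, '2022-06-03'),
        (2, 2002, '2022-05-01'), (1, 2002, '2022-02-01');
""")
rows = answer("drugs_after_condition", "Atrial fibrillation", conn)
print(rows)  # (drug_concept_id, number of patients) pairs
```

Because the SQL text is fixed and only the parameter varies, repeated runs of the same question return the same rows. This illustrates the consistency property listed above in a way that free-form text-to-SQL generation cannot guarantee.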

What sets John Snow Labs’ platform apart for enterprise deployment?

- On-premise first: All models run within the customer’s infrastructure, ensuring compliance and data sovereignty.

- Transparent pricing: Priced per server per year, not per token, supporting large-scale workloads cost-effectively.

- Continuous innovation: Model updates every two weeks for more than eight years, keeping users at the forefront of medical AI.

- Universal license: A single key unlocks all the tools (Healthcare NLP, Medical LLMs, Reasoning Models, and Terminology Server).

What are the practical use cases of this integration?

- Oncology: Multi-year, multi-visit patient summaries

- Psychiatry: Real-time decision support and risk profiling

- Clinical Trials: Patient matching based on complex inclusion/exclusion criteria

- Population Health: Identifying care gaps via screening and lab test coverage
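
The population health use case reduces to a set difference: patients who have a condition minus patients with a recent qualifying test. Below is a minimal sketch of that computation. The condition, test name, and 365-day window are a hypothetical rule chosen for illustration; real screening intervals come from clinical guidelines. `care_gaps` is a hypothetical name.

```python
from datetime import date, timedelta


def care_gaps(conditions, labs, condition, test, window_days, today):
    """Return (patients with `condition` but no recent `test`, coverage rate).

    conditions: iterable of (patient_id, condition_name)
    labs:       iterable of (patient_id, test_name, result_date)
    """
    cutoff = today - timedelta(days=window_days)
    eligible = {pid for pid, cond in conditions if cond == condition}
    covered = {pid for pid, name, day in labs if name == test and day >= cutoff}
    gaps = sorted(eligible - covered)
    coverage = 1 - len(gaps) / len(eligible) if eligible else 1.0
    return gaps, coverage


conditions = [("p1", "diabetes"), ("p2", "diabetes"), ("p3", "hypertension")]
labs = [("p1", "HbA1c", date(2024, 3, 1)),
        ("p2", "HbA1c", date(2022, 11, 20))]  # too old to count
gaps, coverage = care_gaps(conditions, labs, "diabetes", "HbA1c",
                           window_days=365, today=date(2024, 6, 30))
print(gaps, coverage)
```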

FAQs

What data formats are supported for ingestion? Structured (CSV, FHIR, HL7), semi-structured (PDF, XML), and unstructured (notes, discharge summaries).

How does the platform handle terminology updates? The embedded AI terminology server is regularly updated and supports semantic mapping across standard vocabularies.

Can this system scale across millions of patient records? Yes. The system is optimized for sub-second response times even on datasets with billions of clinical events.

How is patient privacy maintained? Data never leaves the organization’s secure perimeter. All processing happens on-premises or in a private cloud.

Is the platform explainable? Yes. Every answer includes provenance, confidence scores, and a chain-of-thought rationale.

Supplementary Q&A

How are long patient timelines managed without overwhelming context windows? Instead of feeding the LLM entire patient histories, the data is pre-processed, indexed, and structured in OMOP. The LLM queries this indexed store and retrieves only what a question needs, which sidesteps context-window limits.
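
The idea can be illustrated with a toy index: events are grouped by concept once, and a question pulls only the relevant slice into the prompt. This sketch uses an in-memory dictionary as a stand-in for the OMOP-backed store the answer describes. `build_index`, `compact_context`, and the `max_rows` cut-off are hypothetical.

```python
from collections import defaultdict


def build_index(events):
    """Index (iso_date, concept, detail) events by concept, sorted by date."""
    index = defaultdict(list)
    for day, concept, detail in events:
        index[concept].append((day, detail))
    for rows in index.values():
        rows.sort()  # ISO dates sort chronologically as strings
    return index


def compact_context(index, concepts, max_rows=3):
    """Build a short prompt context holding only the question's concepts."""
    lines = []
    for concept in concepts:
        rows = index.get(concept, [])  # .get() does not add keys to a defaultdict
        lines.append(f"{concept}: {len(rows)} events")
        for day, detail in rows[-max_rows:]:  # most recent events only
            lines.append(f"  {day} {detail}")
    return "\n".join(lines)


# A hypothetical timeline: a year of routine vitals plus a few lab results.
timeline = [(f"2015-{m:02d}-01", "blood pressure", f"{120 + m} mmHg systolic")
            for m in range(1, 13)]
timeline += [("2021-04-02", "hba1c", "7.9 %"),
             ("2019-02-11", "hba1c", "8.4 %"),
             ("2023-08-30", "hba1c", "7.1 %")]

context = compact_context(build_index(timeline), ["hba1c"])
print(context)
```

For a question about HbA1c trends, the prompt receives a few lines instead of the whole history, and that footprint stays small however long the timeline grows.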

Can non-technical users build cohorts? Yes. Through the conversational UI, clinicians can ask natural language questions without knowing SQL, data schemas, or terminologies. The system handles the translation.

How does the platform adapt to new use cases? With the universal license, users can mix and match components as needed. The modular architecture supports rapid integration of new models and data types.