Our Commitment to Responsible AI

We build healthcare AI that’s safe, compliant, and transparent – certified through our partnership with Pacific AI.

John Snow Labs’ AI Governance Framework conforms to 200+ laws, regulations, and industry standards worldwide.

threat modeling, controls, and continuous evaluation

robustness, red-teaming, and clinical guardrails

IP-safe training and use; protected content handling

gated advancement from design → deployment → retirement

data-minimization, access control, and de-identification

defined triggers, SLAs, RCAs, and customer notice

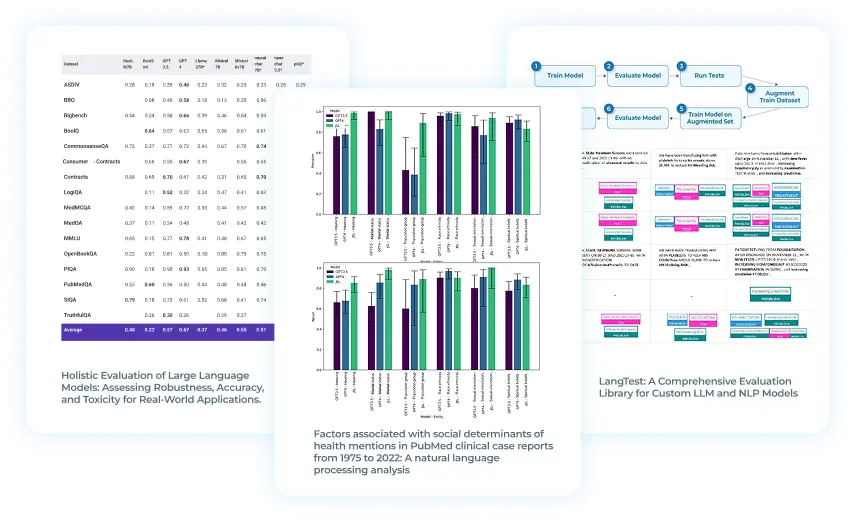

model cards, benchmarks, and decision traces

pre-deployment bias testing; ongoing monitoring

permitted/prohibited use cases; abuse prevention

Customer-hosted models: JSL LLMs and SLMs deploy on-premises, in private cloud, or in air-gapped settings. No external “model-as-a-service” is required.

Zero data sharing: Products like Generative AI Lab support fully isolated deployments, with audit trails and RBAC.

Data residency & sovereignty: Local deployments help meet residency rules and sector-specific requirements.

Privacy-preserving pipelines: Reference architectures for DICOM and metadata de-identification with OCR, PHI detection, obfuscation, and full audit logging.
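As a rough illustration of the detect, obfuscate, and audit steps named in the last item, here is a minimal Python sketch. It is not John Snow Labs' API or reference architecture: the field names in `PHI_KEYS`, the date pattern, and the `pseudonym` and `deidentify` helpers are hypothetical, OCR is omitted, and a real pipeline would use far broader PHI detection than a single regular expression.

```python
import hashlib
import re
from datetime import datetime, timezone

# Hypothetical set of metadata keys treated as direct identifiers.
PHI_KEYS = {"PatientName", "PatientID", "PatientBirthDate"}

# Illustrative pattern only: ISO-style dates such as 2024-01-31 in free text.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def pseudonym(value: str, salt: str) -> str:
    """Return a stable token derived from a salted SHA-256 hash of the value."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "ANON-" + digest[:12]


def deidentify(metadata: dict, salt: str) -> tuple[dict, list]:
    """Obfuscate identifier fields, mask dates in text, and record each action."""
    clean, audit = {}, []
    for key, value in metadata.items():
        if key in PHI_KEYS:
            clean[key] = pseudonym(str(value), salt)
            action = "pseudonymized"
        elif isinstance(value, str):
            masked, count = DATE_PATTERN.subn("[DATE]", value)
            clean[key] = masked
            action = "masked_dates" if count else "kept"
        else:
            # Non-text values are passed through unchanged in this sketch.
            clean[key] = value
            action = "kept"
        audit.append({
            "field": key,
            "action": action,
            "at": datetime.now(timezone.utc).isoformat(),
        })
    return clean, audit


# Example record with made-up values.
record = {
    "PatientName": "Jane Doe",
    "Modality": "CT",
    "StudyDescription": "Follow-up after 2024-01-31 scan",
}
clean, audit = deidentify(record, salt="demo-salt")
```

The audit list holds one entry per field, so a reviewer can see what was changed without access to the original values. The same salt yields the same token for a given patient, which keeps records linkable across studies.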

Explore how our Pacific AI-certified governance, transparent benchmarking, privacy-first deployments, and fairness testing can accelerate your AI roadmap.