The healthcare industry faces a growing challenge in handling prior authorization denials, which cost the U.S. healthcare system approximately $31 billion annually [1]. These denials lead to significant revenue loss for hospitals, averaging $5-10 million per facility each year. Despite the potential for recovery, 30-40% of denials remain unprocessed due to limited resources and manual inefficiencies.

With the advent of generative AI and large language models (LLMs), the landscape of healthcare chatbots has been transformed. John Snow Labs, in collaboration with AWS, has introduced a novel approach to productizing healthcare chatbots using the Medical LLM-as-a-Judge. This innovative model not only streamlines the prior authorization process but also enhances decision accuracy through AI-driven evaluations.

This blog explores how John Snow Labs’ Medical LLM-as-a-Judge is productizing healthcare chatbots, focusing on enhancing prior authorization workflows and improving decision-making accuracy.

The Problem: Manual Processing of Prior Authorization Denials

Manual processing of prior authorization denials is labor-intensive and prone to errors. Nurses and utilization managers often spend countless hours sifting through insurance letters, clinical notes, and policy documents to determine why a claim was denied and whether it can be appealed. This manual effort is not only time-consuming but also financially draining.

Key Challenges:

- High Administrative Costs:
  - The U.S. healthcare system spends $31 billion annually on prior authorization processes [1].

- Revenue Loss Due to Denials:
  - Average hospital losses due to denied claims range between $5-10 million annually.

- Recoverable Denials Go Unprocessed:
  - Approximately 30-40% of denials are recoverable but remain unaddressed due to limited staffing.

- Resource Constraints:
  - Utilization nurses are often overwhelmed with hundreds of denials, each requiring a detailed analysis and response.

The Solution: LLM-as-a-Judge

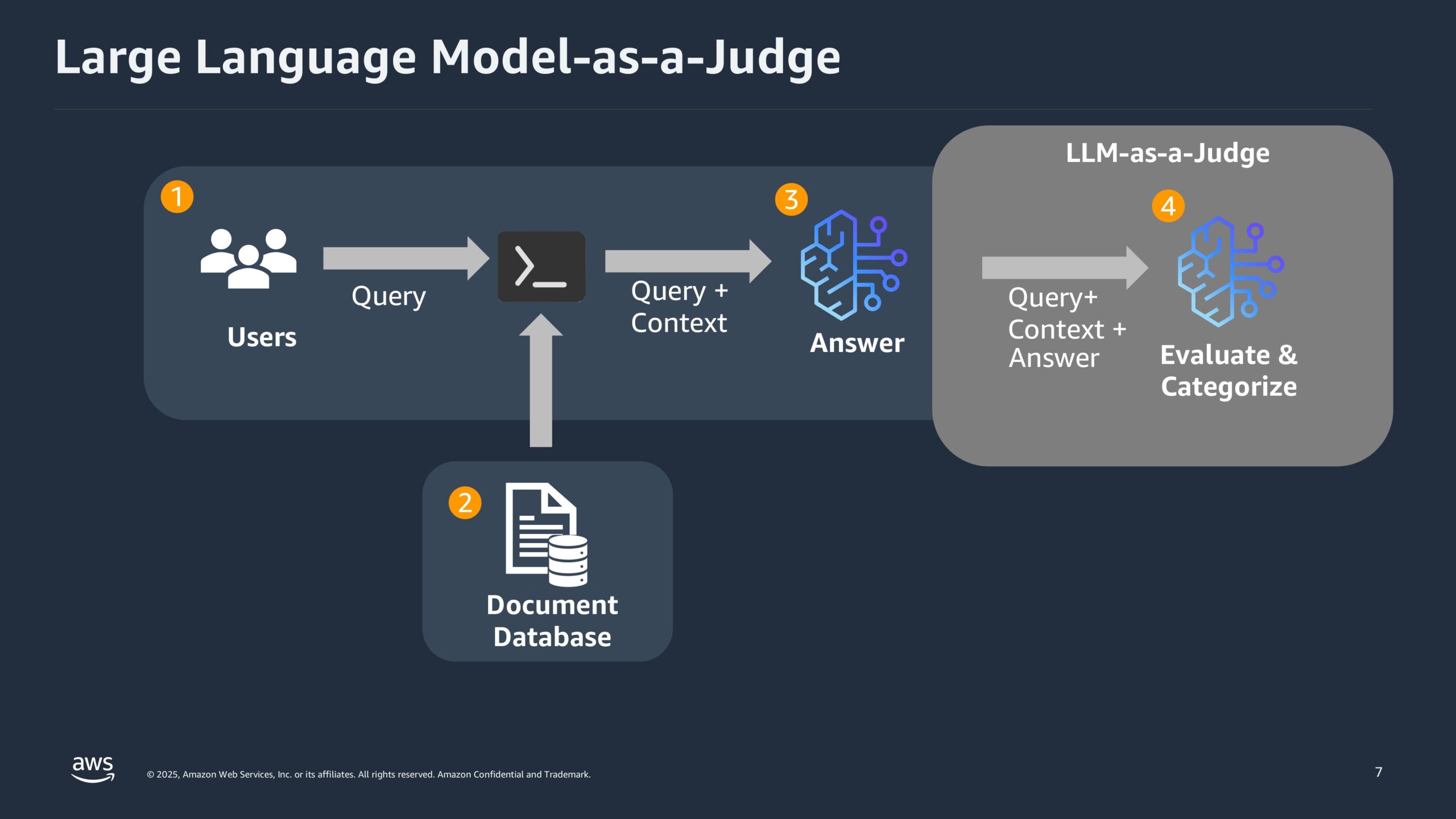

John Snow Labs’ Medical LLM-as-a-Judge offers a powerful approach to addressing the challenges of prior authorization. The model functions as a virtual assistant that analyzes denial cases, categorizes them based on appeal likelihood, and provides guidance on the next steps.

How It Works:

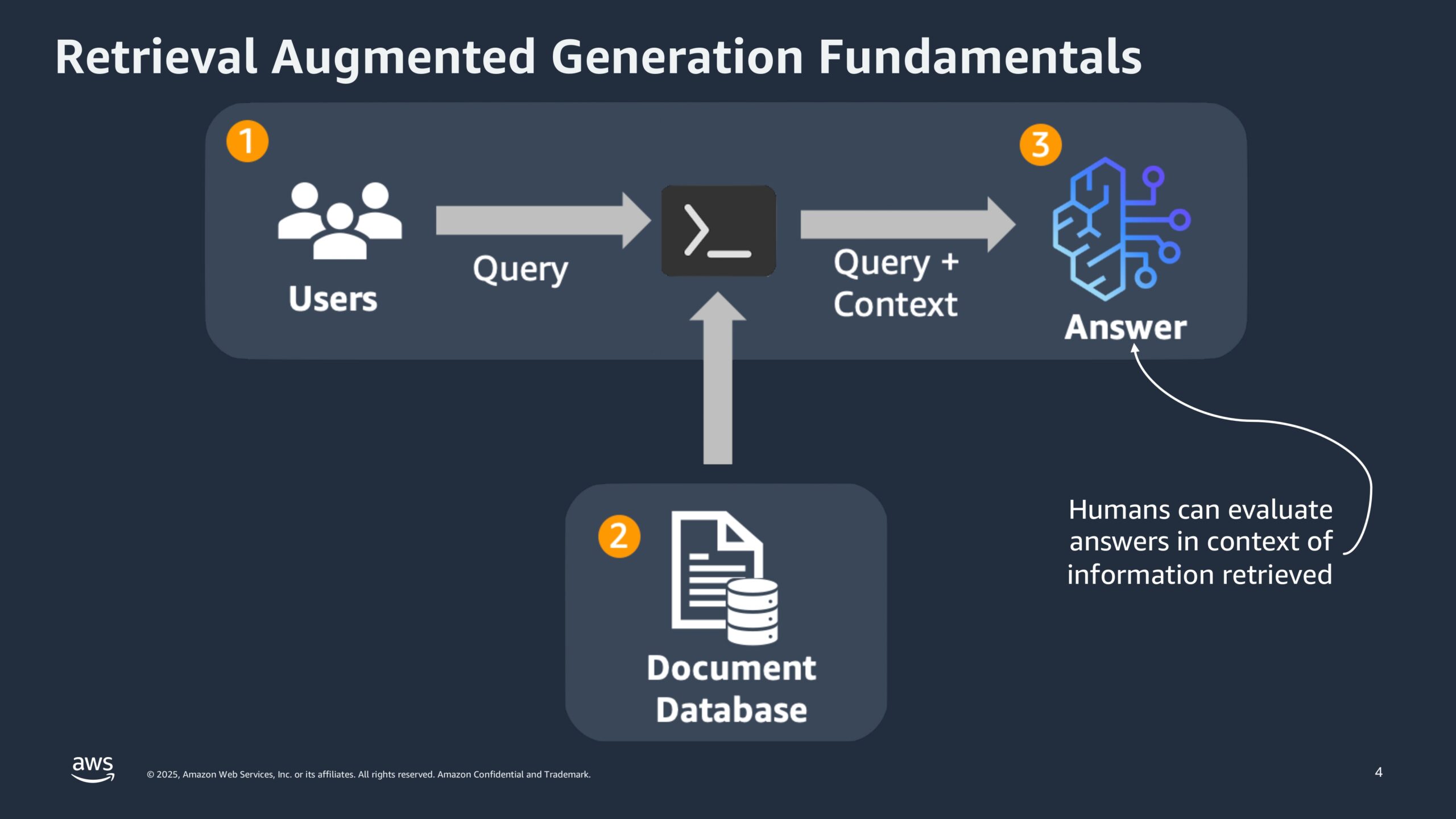

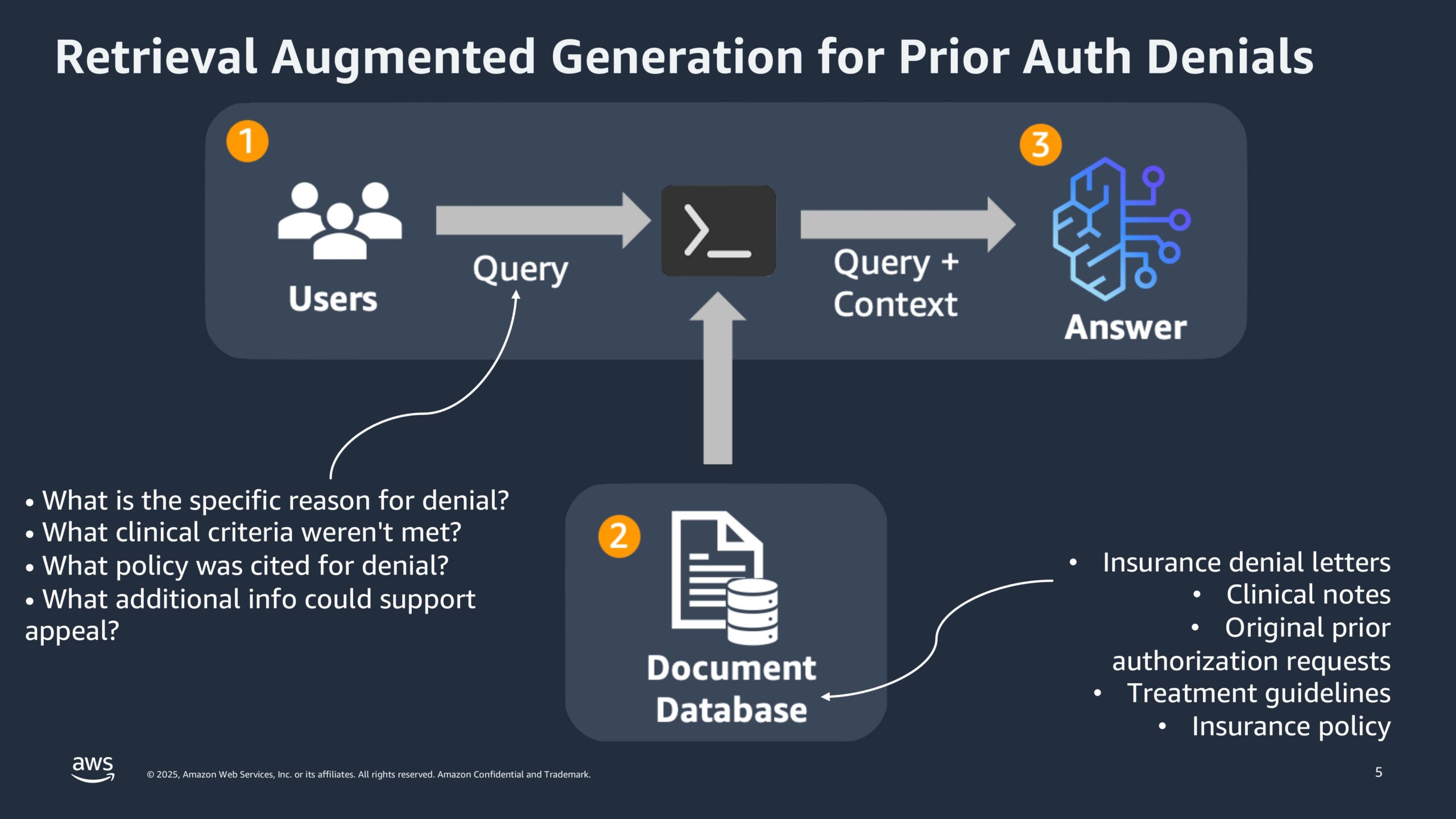

The process starts with Data Retrieval, using Retrieval Augmented Generation (RAG) to pull relevant details from insurance letters, clinical notes, and policy documents. Semantic search ensures it finds the most meaningful information, not just surface matches.
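The retrieval step can be pictured as ranking passages by similarity to a question and keeping the best matches. The sketch below is only an illustration: `toy_embed` and `retrieve` are hypothetical names, and the bag-of-words vector is a stand-in for a learned embedding model, which is what would actually make the search semantic rather than lexical.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model (assumption): term counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, passages, top_k=2, embed=toy_embed):
    """Return up to top_k passages most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(p)), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop passages with no similarity at all instead of padding the result.
    return [p for score, p in scored[:top_k] if score > 0]
```

Swapping `toy_embed` for a real embedding function leaves the ranking logic unchanged, which is why the embedding is passed in as a parameter.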

Next comes AI Evaluation, where the Medical LLM analyzes the data and scores each case based on relevance, accuracy, and logic. These scores guide the Categorization step, which sorts denials into two groups: cases with a high chance of appeal that need little physician input, and complex ones that require further review.
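As a rough sketch of how three scores could drive the two-way split, the example below assumes scores on a 0 to 1 scale and a single cutoff of 0.8. Both are illustrative assumptions, as are the names `JudgeScores` and `categorize`; the source does not state the real scale or thresholds.

```python
from dataclasses import dataclass


@dataclass
class JudgeScores:
    """Scores assigned by the judge model; the 0-1 scale is an assumption."""
    relevance: float
    accuracy: float
    logic: float


def categorize(scores, threshold=0.8):
    """Sort a denial into one of the two groups described above.

    A case counts as a likely appeal only if every score clears the
    threshold, so one weak dimension is enough to route it to review.
    """
    weakest = min(scores.relevance, scores.accuracy, scores.logic)
    if weakest >= threshold:
        return "high_appeal_likelihood"
    return "needs_further_review"
```

Using the weakest score rather than the average is a deliberately conservative choice here: a confident but poorly grounded case still ends up in front of a reviewer.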

Finally, in the Human-in-the-Loop stage, utilization nurses review and validate the system’s transparent, traceable outputs, combining AI speed with expert oversight to improve appeal decisions.

Key Technology Stack

The system relies on integrating John Snow Labs’ Medical LLM with AWS Bedrock to leverage a scalable and efficient framework.

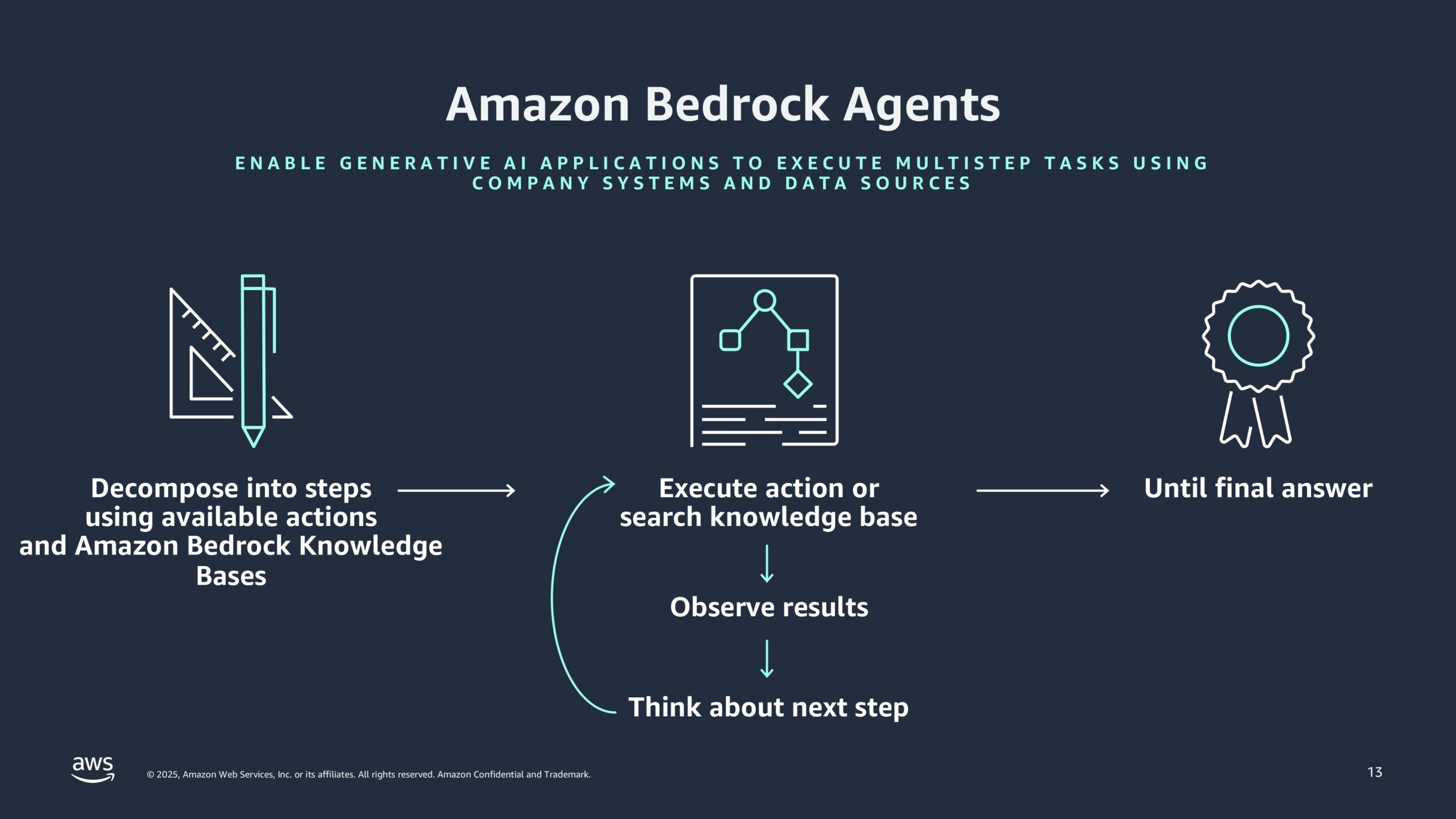

AWS Bedrock acts as the backbone, allowing direct integration of the LLM while handling multi-step tasks through agent orchestration. It also enforces responsible AI practices using built-in Guardrails, which help ensure data privacy and prevent misuse.

To improve accuracy, the system uses Retrieval Augmented Generation (RAG). Instead of relying only on the model’s internal knowledge, RAG pulls in real data to produce answers that are traceable and grounded in facts, which reduces the risk of hallucinated responses.

For deployment, Amazon SageMaker takes charge. It supports real-time model execution, especially for tasks like handling authorization denials, and adjusts resources automatically based on demand. With continuous monitoring, SageMaker helps keep performance steady and reliable.
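For readers who want to see what calling a real-time endpoint looks like, here is a minimal sketch built on the SageMaker runtime `invoke_endpoint` call. The endpoint name, the request fields (`denial_letter`, `evidence`), and the JSON response shape are all assumptions for illustration; the actual contract depends on how the model is packaged.

```python
import json


def score_denial(runtime_client, endpoint_name, denial_text, evidence):
    """Send one denial case to a real-time endpoint and parse the JSON reply.

    runtime_client is expected to behave like
    boto3.client("sagemaker-runtime"); it is passed in so the function
    can be exercised without AWS credentials.
    """
    # Hypothetical payload schema, not the documented model contract.
    payload = {"denial_letter": denial_text, "evidence": evidence}
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    # The response body is a stream; read it fully before decoding.
    return json.loads(response["Body"].read())
```

In a live setup the client would be created once with `boto3.client("sagemaker-runtime")` and reused across requests.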

How Is LLM‑as‑a‑Judge Already Being Used in Healthcare?

The system was built to tackle some of the most time-consuming and error-prone tasks in healthcare administration, beginning with Prior Authorization Denial Management. By automatically reading and interpreting denial letters, it quickly sorts each case into two categories: those that can be fast-tracked for resubmission and those that require deeper review. This saves time and effort for utilization nurses, freeing them up to focus on the more complex cases where their judgment really matters.

Next, the system supports Insurance Claim Validation, acting as a checkpoint to confirm that the clinical data submitted matches the insurer’s coverage rules. This step helps prevent one of the most common problems in claims processing: denials caused by missing or incomplete documentation. With this safeguard in place, the likelihood of reimbursement issues drops significantly.
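The simplest form of this checkpoint is a completeness check run before submission. The sketch below assumes the insurer's rules can be reduced to a list of required fields; the field names and the `missing_documentation` helper are hypothetical, and real coverage rules are considerably richer.

```python
def missing_documentation(submitted, required_fields):
    """Return the required fields that are absent or blank in a claim."""
    missing = []
    for field in required_fields:
        value = submitted.get(field)
        # Treat None and whitespace-only strings as missing.
        if value is None or not str(value).strip():
            missing.append(field)
    return missing


claim = {
    "diagnosis_code": "M54.5",
    "conservative_therapy_notes": "",
    "ordering_physician": "Dr. Example",
}
required = ["diagnosis_code", "conservative_therapy_notes", "imaging_order"]
gaps = missing_documentation(claim, required)
```

A claim with a non-empty `gaps` list would be held back for completion rather than sent on to the insurer.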

Finally, at the foundation of it all is Compliance and Documentation. Every decision the system makes is recorded, creating a clear trail that shows why an action was taken. This not only supports internal oversight but also makes audits and appeals much smoother, offering straightforward, evidence-backed explanations when questions come up.
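One common way to build such a trail is an append-only log with one JSON record per decision. The record layout below is an assumption made for illustration, not the product's actual schema, and `audit_record` and `append_audit` are hypothetical helpers.

```python
import json
from datetime import datetime, timezone


def audit_record(case_id, category, scores, evidence_ids, reviewer=None, now=None):
    """Serialize one decision, with its supporting evidence, as a JSON line."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    record = {
        "case_id": case_id,
        "category": category,
        "scores": scores,
        "evidence_ids": list(evidence_ids),  # which documents backed the decision
        "reviewer": reviewer,                # stays None until a nurse signs off
        "timestamp": timestamp,
    }
    return json.dumps(record, sort_keys=True)


def append_audit(path, line):
    """Append a record to the log file; existing entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(line + "\n")
```

Keeping the evidence identifiers next to the scores is what lets an auditor trace a decision back to the documents behind it.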

Altogether, these components work side by side to reduce administrative burden, lower denial rates, and make processes more transparent, helping healthcare teams focus less on paperwork and more on patient care.

What Benefits Does LLM‑as‑a‑Judge Bring to Prior Authorization?

1. Efficiency Gains:
   - Reduces manual processing time from hours to minutes.
   - Automatically ranks cases based on appeal potential.

2. Accuracy and Transparency:
   - Minimizes human error by providing evidence-backed decisions.
   - Scores are generated based on the actual content retrieved, reducing hallucinations.

3. Improved Workflow:
   - Integrates seamlessly with existing EHR and insurance management systems.
   - Supports multi-agent orchestration, allowing complex queries to be broken into manageable steps.

Implementation and Scalability

Deploying the LLM-as-a-Judge solution requires configuring AWS Bedrock to integrate with hospital data systems. John Snow Labs models are accessed through the AWS Model Catalog, allowing healthcare providers to select, deploy, and manage models efficiently.

Best Practices for Implementation:

The first step is to Start with Pilot Projects, particularly in departments that experience high denial rates. By focusing initial efforts on areas where the solution can have the most immediate impact, providers can refine the system before rolling it out on a larger scale.

Next, it’s important to Use Multi-Agent Collaboration. This approach allows the system to break down complex queries into manageable parts, ensuring that responses are thorough and cover all aspects of the issue at hand. It’s a way to enhance the AI’s ability to deal with intricate cases and provide comprehensive insights.
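To make the idea concrete, here is a toy sketch of a coordinator that hands one denial case to several specialist agents and merges their findings. The agent names, the case fields, and the `orchestrate` function are all hypothetical; in a real deployment each agent would call a model or a retrieval step rather than format a string.

```python
def denial_agent(case):
    """Handles the sub-question: why was the request denied?"""
    return f"Denial reason: {case['denial_reason']}"


def policy_agent(case):
    """Handles the sub-question: which policy clause applies?"""
    return f"Policy clause: {case['policy_ref']}"


def clinical_agent(case):
    """Handles the sub-question: what does the chart document?"""
    return "Clinical findings: " + ", ".join(case["findings"])


def orchestrate(case, agents):
    """Run each specialist on the case and combine the partial answers."""
    findings = {name: agent(case) for name, agent in agents.items()}
    summary = " | ".join(findings[name] for name in agents)
    return {"findings": findings, "summary": summary}


agents = {"denial": denial_agent, "policy": policy_agent, "clinical": clinical_agent}
case = {
    "denial_reason": "insufficient documentation",
    "policy_ref": "imaging policy, section 4",
    "findings": ["six weeks of physical therapy", "persistent pain"],
}
result = orchestrate(case, agents)
```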

Finally, the system should Integrate Human Feedback. Nurses, who are on the frontlines of denial management, can validate the scores generated by the system and provide real-world feedback. This not only improves the accuracy of the results but also keeps the system aligned with practical, on-the-ground realities. By continuously updating the system with human insights, the solution becomes more adaptable and responsive over time.
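A simple way to start using nurse feedback is to track how often reviewers agree with the system's category and to collect the cases where they do not. The sketch below assumes each review is stored as a (case id, system category, nurse category) triple; that layout and the `review_feedback` helper are illustrative only.

```python
def review_feedback(reviews):
    """Summarize nurse validation of system categories.

    reviews: iterable of (case_id, system_category, nurse_category).
    Returns the agreement rate and the case ids where the nurse disagreed.
    """
    reviews = list(reviews)
    if not reviews:
        return {"agreement_rate": None, "disagreements": []}
    disagreements = [cid for cid, system, nurse in reviews if system != nurse]
    rate = (len(reviews) - len(disagreements)) / len(reviews)
    return {"agreement_rate": rate, "disagreements": disagreements}


feedback = review_feedback([
    ("case-1", "high_appeal_likelihood", "high_appeal_likelihood"),
    ("case-2", "high_appeal_likelihood", "needs_further_review"),
    ("case-3", "needs_further_review", "needs_further_review"),
    ("case-4", "needs_further_review", "needs_further_review"),
])
```

The disagreement list is the useful part in practice: those cases are the natural candidates for adjusting thresholds or prompts.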

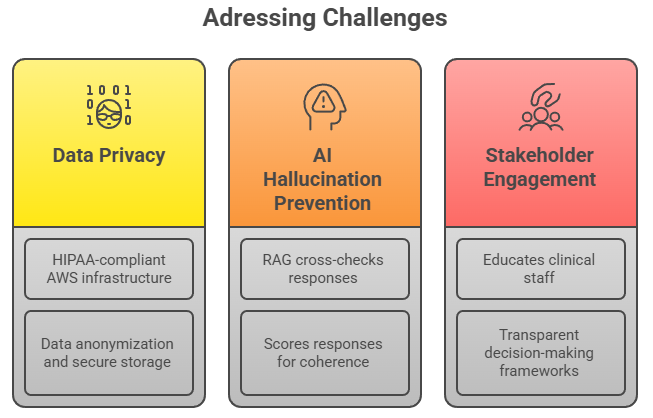

Addressing Challenges

Tackling real-world problems in healthcare requires more than just smart technology; it demands careful planning and thoughtful execution.

The first challenge is Data Privacy and Compliance. Since patient information is sensitive and heavily regulated, the system runs on HIPAA-compliant AWS infrastructure. It also uses data anonymization techniques and secure storage methods to make sure that patient records remain protected at all times, whether in transit or at rest.
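To give a feel for what anonymization involves, the sketch below masks a few obvious identifiers with regular expressions. It is a toy: the patterns, the `MRN` format, and the `redact` helper are assumptions, and pattern matching of this kind falls well short of HIPAA de-identification, which needs dedicated tooling and review.

```python
import re

# Illustrative patterns only; real identifiers come in many more formats.
PATTERNS = {
    "[SSN]": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "[PHONE]": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "[DATE]": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
    "[MRN]": re.compile(r"\bMRN[:\s]*\d+\b"),  # hypothetical record-number format
}


def redact(text):
    """Replace each matched identifier with a placeholder tag."""
    for placeholder, pattern in PATTERNS.items():
        text = pattern.sub(placeholder, text)
    return text


note = "MRN: 12345, DOB 04/12/1961, phone 555-123-4567, SSN 123-45-6789"
clean = redact(note)
```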

Next comes AI Hallucination Prevention, a key concern when relying on language models for decision support. To keep answers grounded in reality, the system integrates Retrieval Augmented Generation (RAG), which checks all responses against original source material. Before anything is finalized, answers are scored for clarity and relevance, helping ensure that what the AI delivers is both trustworthy and accurate.
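One lightweight way to check a response against source material is to flag any sentence whose wording is poorly covered by the retrieved passages. The sketch below uses plain token overlap with an assumed cutoff of 0.6; it is a crude proxy for the model-based scoring described above, and `unsupported_sentences` is a hypothetical helper.

```python
import re


def tokens(text):
    """Lowercased word and number tokens as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def unsupported_sentences(answer, sources, min_overlap=0.6):
    """Return answer sentences that no source passage covers well enough."""
    source_tokens = [tokens(s) for s in sources]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = tokens(sentence)
        if not words:
            continue
        # Best share of the sentence's tokens found in any single source.
        best = max(
            (len(words & st) / len(words) for st in source_tokens),
            default=0.0,
        )
        if best < min_overlap:
            flagged.append(sentence)
    return flagged
```

Flagged sentences would go back for regeneration or to a reviewer instead of being passed along as-is.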

The third piece is Stakeholder Engagement. For AI tools to make a real impact, clinical staff need to understand how to work with them. That’s why the system includes built-in training and support to help users interpret AI-generated scores. It also offers clear and transparent frameworks that explain how decisions are made, building confidence in the technology and making collaboration between human and machine smoother.

By addressing these three challenges head-on, the system creates an environment where innovation can thrive without losing sight of what matters most: safety, accuracy, and trust.

Conclusion

Productizing healthcare chatbots with John Snow Labs’ Medical LLM-as-a-Judge represents a leap forward in handling prior authorization denials. By automating the evaluation process and minimizing manual effort, healthcare providers can focus on improving patient care rather than battling administrative bottlenecks.

By leveraging AWS Bedrock and John Snow Labs’ advanced LLM capabilities, the healthcare sector can not only enhance operational efficiency but also significantly reduce revenue loss due to claim denials.

For more information on deploying healthcare chatbots with John Snow Labs’ Medical LLM-as-a-Judge, watch the video.
