What is data governance in healthcare AI?

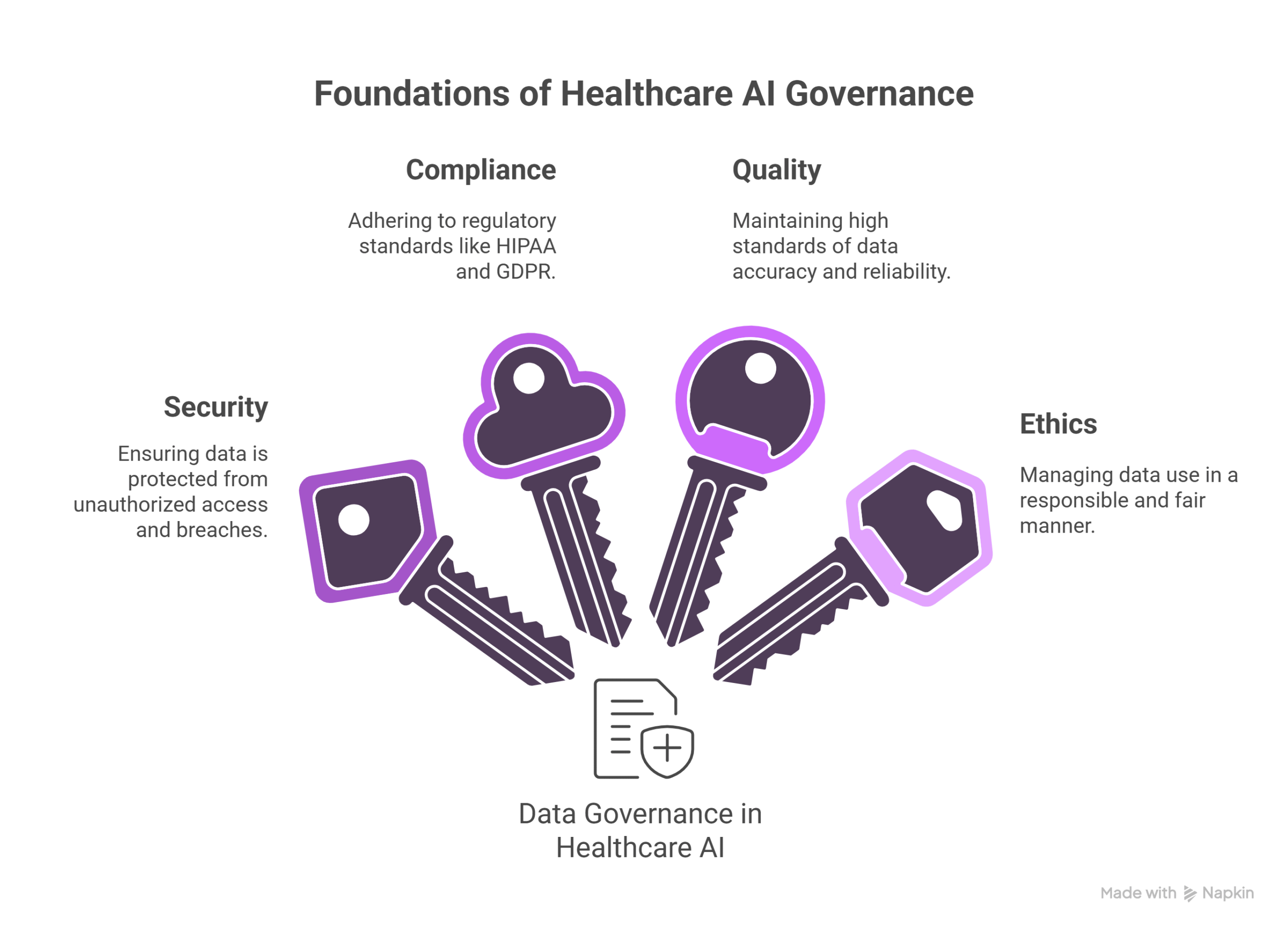

Data governance in healthcare AI refers to the frameworks, policies, and practices that ensure data used for developing and deploying artificial intelligence is secure, compliant, high-quality, and ethically managed. As AI becomes more integrated into clinical workflows, robust governance is essential for maintaining trust, ensuring privacy, and maximizing innovation.

At John Snow Labs, every product, whether it's Healthcare NLP, the Generative AI Lab, or the Medical Reasoning LLM, is built with strict data governance as a foundational requirement. All tools comply with HIPAA and GDPR requirements and can be deployed securely in any environment.

Why is data governance critical in healthcare AI?

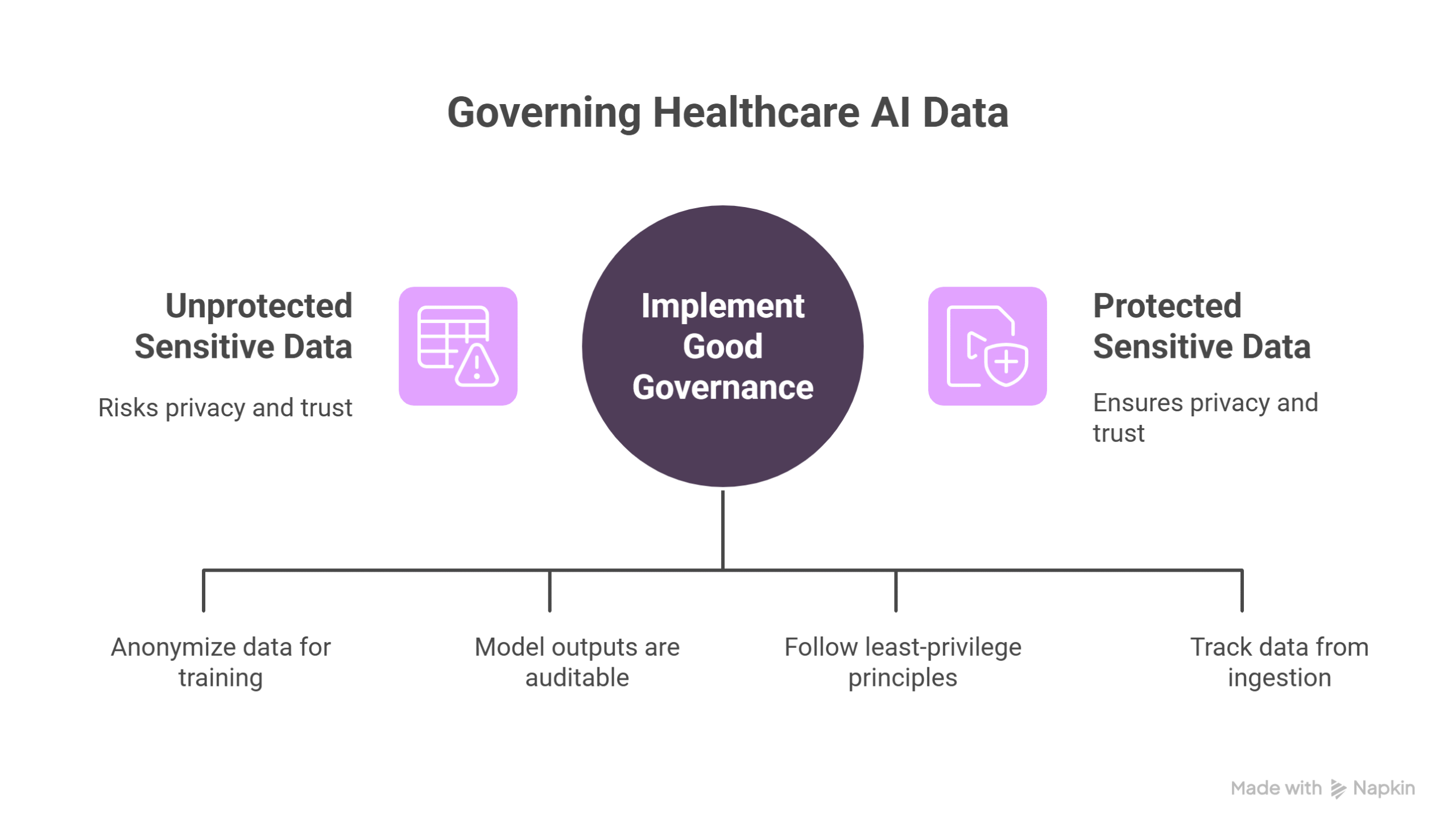

Healthcare AI depends on vast volumes of sensitive information: electronic health records, medical images, pathology reports, and patient-generated data. Without proper governance, using this data risks privacy breaches, model bias, regulatory penalties, and diminished public trust.

Good governance ensures that:

- Only de-identified or anonymized data is used for training and inference.

- Model outputs are traceable, auditable, and explainable.

- Access is restricted and logged, following least-privilege principles.

- Data lineage is clear from ingestion through model deployment.
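The "restricted and logged" access principle above can be illustrated with a small deny-by-default check. This is a minimal sketch, not a John Snow Labs API: the role names, the `action` strings, and the `AccessController` class are hypothetical, and a production system would persist the log rather than keep it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission map: each role gets only the actions it needs.
ROLE_PERMISSIONS = {
    "annotator": {"read:deidentified"},
    "data_scientist": {"read:deidentified", "train:model"},
    "privacy_officer": {"read:deidentified", "read:phi", "read:audit_log"},
}


@dataclass
class AccessController:
    role_permissions: dict
    log: list = field(default_factory=list)

    def check(self, user: str, role: str, action: str) -> bool:
        # Deny by default: an unknown role gets an empty permission set.
        allowed = action in self.role_permissions.get(role, set())
        # Every decision, allowed or denied, is appended to the access log.
        self.log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed


ac = AccessController(ROLE_PERMISSIONS)
print(ac.check("alice", "data_scientist", "train:model"))  # True
print(ac.check("alice", "data_scientist", "read:phi"))     # False
```

Logging denials as well as grants matters for audits: a pattern of refused requests is often the first sign of a misconfigured role or misuse.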

For example, as outlined in our blog post on elevating compliance and auditability in the Generative AI Lab, the platform provides full versioning, traceability, and audit logging at every step of the AI lifecycle.
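One common way to make an audit trail tamper-evident is to chain entries by hash, so that editing any past record breaks verification. The sketch below illustrates that general technique under our own assumptions; it is not how the Generative AI Lab implements audit logging, and the `AuditLog` class and event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which each entry stores the hash of the entry before it."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(event: dict, prev_hash: str) -> str:
        payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._hash(event, prev_hash)
        self.entries.append({"event": event, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every hash; any edited event or broken link fails the check.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._hash(entry["event"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"type": "dataset_version", "dataset": "notes_v1", "rows": 1200})
log.append({"type": "model_trained", "model": "ner_v3", "dataset": "notes_v1"})
print(log.verify())  # True
log.entries[0]["event"]["rows"] = 999  # tamper with history
print(log.verify())  # False
```

Because the model-training event names the dataset version it used, the same log also records lineage from data to model.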

How can healthcare AI innovate without compromising privacy?

Innovation and privacy are not opposing goals; they can be mutually reinforcing when supported by the right tools and processes. For instance, federated learning allows models to train on decentralized data without ever transferring it. De-identification techniques protect individual-level data while preserving aggregate insights. Role-based access controls ensure the right people have the right level of access and nothing more.
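To make the federated learning idea concrete, here is a minimal sketch of federated averaging (FedAvg-style) on a toy one-feature linear model. Each hypothetical hospital runs gradient descent on its own records, and only the model weights and a sample count are sent to the coordinator. The data, learning rate, and round counts are illustrative assumptions; real deployments add secure aggregation and much larger models.

```python
def local_update(weights, data, lr=0.02, epochs=50):
    """Gradient descent on one site's private data for y ~ w*x + b (mean squared error)."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    # Only the updated weights and the sample count leave the site.
    return (w, b), n


def federated_average(updates):
    """Average site weights, weighted by how many samples each site trained on."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


# Hypothetical records held privately at three hospitals (true relation: y = 2x + 1).
sites = [
    [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0]],
    [(x, 2 * x + 1) for x in [3.0, 4.0]],
    [(x, 2 * x + 1) for x in [1.5, 2.5, 3.5, 0.5]],
]

global_weights = (0.0, 0.0)
for _ in range(30):  # communication rounds
    updates = [local_update(global_weights, data) for data in sites]
    global_weights = federated_average(updates)

print(global_weights)  # close to (2.0, 1.0)
```

The coordinator never sees an `(x, y)` pair; it only combines parameters, which is what lets the shared model improve without centralizing patient data.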

John Snow Labs supports these approaches through on-premise deployment options, customizable de-identification pipelines, and full integration with audit and validation workflows. This enables organizations to safely accelerate development of AI systems that improve patient outcomes, streamline operations, and support regulatory compliance.

What best practices support responsible data use in AI development?

Key best practices include:

- De-identification at scale: Using advanced Named Entity Recognition (NER) and obfuscation to meet HIPAA and GDPR standards.

- Human-in-the-loop validation: Ensuring real-time oversight of model performance and data usage.

- Auditability and version control: Tracking every model change, dataset update, and validation cycle.

- Model explainability: Leveraging interpretable models and visualizations to ensure outputs are understandable and defensible.
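The de-identification practice above relies on NER to find identifiers and then masks or obfuscates them. The sketch below shows only the masking step, with simple regular expressions standing in for a trained NER model. It is an assumption-laden illustration, not John Snow Labs' pipeline: the patterns are hypothetical and would miss names, addresses, and many other identifiers that a real model must catch.

```python
import re

# Hypothetical patterns standing in for NER; real de-identification needs trained models.
PHI_PATTERNS = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b"),
}


def deidentify(text: str) -> tuple[str, list[dict]]:
    """Replace matched identifiers with entity tags and report what was masked.

    Assumes matches from different patterns do not overlap.
    """
    findings = []
    for label, pattern in PHI_PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append({"label": label, "start": match.start(), "end": match.end()})
    # Replace from the end of the text backwards so earlier offsets stay valid.
    for f in sorted(findings, key=lambda f: f["start"], reverse=True):
        text = text[: f["start"]] + f"<{f['label']}>" + text[f["end"]:]
    return text, findings


note = "Patient MRN: 12345678 seen on 03/14/2024; call 555-123-4567 with results."
masked, found = deidentify(note)
print(masked)  # Patient <MRN> seen on <DATE>; call <PHONE> with results.
```

Returning the findings alongside the masked text gives reviewers and auditors a record of exactly which spans were removed.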

FAQs

How does John Snow Labs ensure data privacy in model development?

All models can be trained, fine-tuned, and run within private environments, with built-in de-identification and audit logs. No data ever needs to leave your firewall.

Can AI models be explainable and compliant at the same time?

Yes. John Snow Labs’ tools support explainable AI through transparent pipelines, labeled data tracking, and customizable validation reports.

What role does HITL play in data governance?

Human-in-the-loop (HITL) processes allow experts to monitor, review, and override AI decisions when needed, improving safety, fairness, and compliance.
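A simple HITL pattern routes low-confidence predictions to an expert and records who made each final decision. This minimal sketch assumes a single confidence threshold (0.9 here, chosen arbitrarily); the `Prediction` class, labels, and reviewer IDs are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float
    final_label: Optional[str] = None
    decided_by: Optional[str] = None


def route(predictions, threshold=0.9):
    """Auto-accept confident predictions; queue the rest for expert review."""
    review_queue = []
    for p in predictions:
        if p.confidence >= threshold:
            p.final_label, p.decided_by = p.label, "model"
        else:
            review_queue.append(p)
    return review_queue


def review(prediction, reviewer, corrected_label=None):
    """An expert either confirms the model's label or overrides it."""
    prediction.final_label = corrected_label or prediction.label
    prediction.decided_by = reviewer


preds = [
    Prediction("note-1", "DIABETES", 0.97),
    Prediction("note-2", "HYPERTENSION", 0.62),
]
queue = route(preds)
review(queue[0], reviewer="dr_lee", corrected_label="NO_FINDING")
for p in preds:
    print(p.item_id, p.final_label, p.decided_by)
# note-1 DIABETES model
# note-2 NO_FINDING dr_lee
```

Keeping `decided_by` on every record makes overrides auditable, and the rate of overrides is itself a useful signal for retraining.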

Are open-source models safe to use for healthcare data?

Open-source models must always be evaluated for bias, explainability, and data provenance. John Snow Labs offers commercially supported alternatives tailored for healthcare, with robust governance built-in.

Supplementary Q&A

How does data governance affect model performance?

Well-governed data improves model quality by ensuring accuracy, consistency, and representativeness. It also reduces risk by preventing use of biased, low-quality, or non-compliant datasets.
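Accuracy, consistency, and representativeness can be turned into automated checks that run before a dataset is approved for training. The gate below is a minimal sketch under our own assumptions: the field names, the allowed-value vocabulary, the age bands, and the 10% minimum share are all hypothetical.

```python
from collections import Counter


def quality_report(records, required, allowed_values, group_field, expected_groups, min_share=0.1):
    """Return human-readable issues; an empty list means the dataset passed."""
    issues = []
    for i, r in enumerate(records):
        # Completeness: required fields must be present and non-empty.
        for f in required:
            if r.get(f) in (None, ""):
                issues.append(f"record {i}: missing {f}")
        # Consistency: coded fields must use the agreed vocabulary.
        for f, allowed in allowed_values.items():
            if f in r and r[f] not in allowed:
                issues.append(f"record {i}: unexpected {f}={r[f]!r}")
    # Representativeness: each expected group should make up at least min_share of records.
    counts = Counter(r.get(group_field) for r in records)
    total = len(records)
    for group in expected_groups:
        share = counts[group] / total if total else 0.0
        if share < min_share:
            issues.append(f"group {group!r} under-represented: {share:.0%}")
    return issues


records = [
    {"age_band": "18-40", "dose_unit": "mg", "diagnosis": "E11"},
    {"age_band": "41-65", "dose_unit": "mcg", "diagnosis": "I10"},
    {"age_band": "41-65", "dose_unit": "MG", "diagnosis": ""},
]
issues = quality_report(
    records,
    required=["age_band", "dose_unit", "diagnosis"],
    allowed_values={"dose_unit": {"mg", "mcg"}},
    group_field="age_band",
    expected_groups=["18-40", "41-65", "65+"],
)
for issue in issues:
    print(issue)
```

Listing `expected_groups` explicitly matters: a group that is entirely absent from the data would otherwise never be flagged.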

Can governance frameworks scale across teams and departments?

Yes. With centralized platforms like the Generative AI Lab, governance policies can be codified into workflows, keeping practices consistent across use cases, teams, and regions.
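"Codifying" a policy can be as simple as writing it once as data and checking every project against it automatically. This is a generic policy-as-code sketch, not Generative AI Lab configuration; the policy keys, project fields, and region names are hypothetical.

```python
# Hypothetical organization-wide policy, defined once and applied to every project.
POLICY = {
    "require_deidentification": True,
    "require_human_review": True,
    "allowed_regions": {"us-east", "eu-west"},
}


def policy_violations(project: dict, policy: dict = POLICY) -> list[str]:
    """Return the rules a project breaks; an empty list means it may proceed."""
    violations = []
    if policy["require_deidentification"] and not project.get("deidentified", False):
        violations.append("data has not been de-identified")
    if policy["require_human_review"] and not project.get("human_reviewed", False):
        violations.append("outputs have not passed human review")
    if project.get("region") not in policy["allowed_regions"]:
        violations.append(f"region {project.get('region')!r} is not approved")
    return violations


projects = [
    {"name": "radiology-ner", "deidentified": True, "human_reviewed": True, "region": "eu-west"},
    {"name": "claims-coding", "deidentified": False, "human_reviewed": True, "region": "ap-south"},
]
for p in projects:
    v = policy_violations(p)
    print(p["name"], "OK" if not v else v)
```

Because every team runs the same check, changing a rule in `POLICY` changes it everywhere at once.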

How should organizations start building a data governance framework for AI?

Begin by inventorying your data assets, defining access policies, and identifying compliance requirements. Then select tools, like Spark NLP or the Generative AI Lab, that support traceability, HITL validation, and secure deployment from the outset.
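The first step, an inventory that links each data asset to its access policy and compliance requirements, can start as a simple structured list. In this sketch the asset names, owners, and the rule "personal health data in the US or EU triggers a HIPAA or GDPR review" are simplifying assumptions; actual regulatory applicability needs legal review.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataAsset:
    name: str
    owner: str
    personal_health_data: bool
    jurisdictions: set
    access_policy: Optional[str] = None


def inventory_gaps(asset: DataAsset):
    """Flag compliance reviews to schedule and missing governance controls."""
    reviews = []
    # Simplified triggers; they flag a review, not a legal determination.
    if asset.personal_health_data and "US" in asset.jurisdictions:
        reviews.append("HIPAA review")
    if asset.personal_health_data and "EU" in asset.jurisdictions:
        reviews.append("GDPR review")
    gaps = [] if asset.access_policy else ["no access policy defined"]
    return reviews, gaps


assets = [
    DataAsset("ehr_notes_2023", "clinical-informatics", True, {"US"}, "deid-only:data_science"),
    DataAsset("eu_trial_imaging", "research", True, {"EU"}),
    DataAsset("drug_reference_tables", "pharmacy", False, {"US", "EU"}, "public-read"),
]
for a in assets:
    print(a.name, *inventory_gaps(a))
```

Even a list this small shows where to start: assets with reviews pending or no access policy are the first candidates for governance work.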