Generative AI for Cross-Analysis of Family Medical History and Current Health Indicators

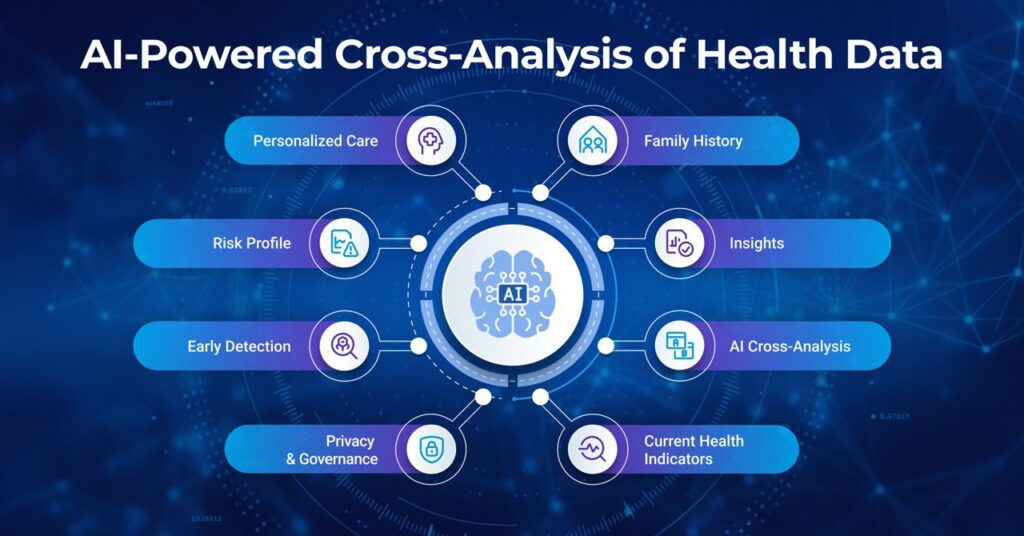

Hospitals often depend on two streams of information that rarely connect: family medical history and current health indicators. When the two are combined, risks become clearer, interventions happen earlier, and care plans become more personalized.

The blocker is not a lack of information; it is scattered systems, messy documentation, and short visits that leave little time to assemble the whole picture. The solution is not more data but smarter cross-analysis of health data: connecting family risk with present signals and presenting the result clearly so clinicians can act in real time.

This is where generative AI in healthcare proves valuable. By reading disparate notes and labs, it can align timelines, recognize relationships in a patient’s pedigree, and summarize insights for clinicians. When merged with today’s indicators, a detailed family history shifts care from reactive to proactive, facilitating earlier diagnosis, stronger prevention, and more personalized care plans.

This article explains how healthcare-specific large language models (LLMs) and diagnosis-focused AI can turn fragmented patient records into actionable intelligence for safer, faster, and more proactive care.

Why Hospitals and Clinics Should Connect Family History with Current Data

When assessing a patient’s health, family medical history remains one of the best-established risk predictors. It guides when to start screening, when to discuss genetic counseling, and how closely to monitor borderline trends.

When you add today’s signals, such as low-density lipoprotein (LDL) levels over the years, hemoglobin A1c (HbA1c) trends, blood pressure logs, symptom notes, and even wearable data on sleep or heart rate variability (HRV), the picture of risk becomes much clearer. Workups can start sooner, and plans become specific rather than generic. This type of cross-analysis of health data enables earlier detection and more personalized care plans.

Healthcare-specific large language models make this practical at the point of care. They read narrative notes and lab panels, build timelines, recognize family relationships and ages at onset, and summarize the essentials for each visit.

When the question turns to diagnosis, the model augments rather than replaces clinical judgment. It surfaces patterns and gaps so clinicians can decide faster and with more context. This is where generative AI in healthcare adds value: supporting patient safety, early intervention, and preventive care.

Closing the Data Gap With AI

Most healthcare organizations still struggle with healthcare data silos and electronic health record (EHR) limitations. Family history is often captured in free-text intake notes or scanned PDFs, whereas current indicators live in lab systems, imaging archives, and device feeds. These fragments rarely align during a short visit, so correlations between pedigree risk and today’s vitals are easy to miss. This data gap leads to delayed diagnosis and slower prevention.

Predictive healthcare AI helps close this gap. Generative models can read across structured fields and unstructured notes, extracting information about who in the family had which condition and the age at onset, and then aligning those facts with laboratory results, vital signs, and symptoms. Instead of forcing clinicians into a scavenger hunt through the chart, the system provides a concise, reviewable summary that highlights what matters.
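To make the extraction step concrete, here is a minimal sketch of the kind of structured record a model might produce for each family-history statement. It uses a small, hypothetical regular-expression extractor (`extract_family_history`, `FamilyHistoryFact`) as a stand-in for an LLM; it understands only a few sentence shapes and is meant to show the target structure, not a production approach.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FamilyHistoryFact:
    """Hypothetical structured record for one family-history statement."""
    relative: str                  # e.g. "maternal aunt"
    condition: str                 # e.g. "colon cancer"
    age_at_onset: Optional[int]    # None when the note does not state an age
    negated: bool = False          # True for "no family history of ..."


# Small illustrative vocabulary; real notes use far more relationship terms.
RELATIVE = r"((?:maternal|paternal) (?:aunt|uncle|grandmother|grandfather)|mother|father|sister|brother)"
POSITIVE = re.compile(RELATIVE + r"\s+(?:with|had|has)\s+(.+?)(?:\s+at\s+(?:age\s+)?(\d{1,3}))?$")
NEGATIVE = re.compile(r"no family history of\s+(.+)$")


def extract_family_history(note: str) -> List[FamilyHistoryFact]:
    """Rule-based stand-in for an LLM extractor: at most one fact per sentence."""
    facts = []
    for sentence in re.split(r"[.;\n]", note):
        s = sentence.strip().lower()
        negative = NEGATIVE.match(s)
        if negative:
            facts.append(FamilyHistoryFact("any relative", negative.group(1).strip(), None, negated=True))
            continue
        positive = POSITIVE.match(s)
        if positive:
            age = int(positive.group(3)) if positive.group(3) else None
            facts.append(FamilyHistoryFact(positive.group(1), positive.group(2).strip(), age))
    return facts


facts = extract_family_history("Maternal aunt with colon cancer at 42. No family history of stroke.")
for fact in facts:
    print(fact)
```

Keeping negated statements as explicit records matters: "no family history of stroke" is information a clinician can use, not an absence of data.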

However, doing this safely and repeatedly requires strong governance. Platforms should support human-in-the-loop (HITL) review and role-based access control (RBAC), along with audit trails and deployments that keep protected health information (PHI) under enterprise control. Tools such as the Generative AI Lab can help put these safeguards in place.
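A minimal sketch of how RBAC and a HITL gate can fit together, assuming a hypothetical two-role policy (`clinician`, `analyst`) and a hypothetical `AISummary` object that is not released until an authorized person approves it. Real platforms would load policies from an identity provider and log every decision.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Permission(Enum):
    VIEW_PHI = "view_phi"
    VIEW_DEIDENTIFIED = "view_deidentified"
    APPROVE_AI_OUTPUT = "approve_ai_output"


# Hypothetical policy: which roles hold which permissions.
ROLE_PERMISSIONS = {
    "clinician": {Permission.VIEW_PHI, Permission.VIEW_DEIDENTIFIED, Permission.APPROVE_AI_OUTPUT},
    "analyst": {Permission.VIEW_DEIDENTIFIED},
}


def has_permission(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles get an empty permission set."""
    return permission in ROLE_PERMISSIONS.get(role, set())


@dataclass
class AISummary:
    patient_id: str
    text: str
    approved_by: Optional[str] = None

    def approve(self, user: str, role: str) -> None:
        # HITL gate: only roles allowed to approve AI output can release a summary.
        if not has_permission(role, Permission.APPROVE_AI_OUTPUT):
            raise PermissionError(f"role {role!r} may not approve AI output")
        self.approved_by = user

    @property
    def released(self) -> bool:
        return self.approved_by is not None


summary = AISummary("patient-001", "Family history and lipid trend suggest cardiology review.")
summary.approve("dr.lee", "clinician")
print(summary.released)
```

Placing the permission check on the object being released means no code path can mark a summary as reviewed without passing it.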

It’s just as important to use the right inputs. High-quality healthcare datasets, like those in the Healthcare Data Library, cut down noise, make results more reliable, and help teams see value faster.

How Generative AI Transforms Health Data into Insights

Generative AI in healthcare consolidates structured EHR fields, lab results, imaging reports, symptom notes, and wearable device streams into a unified view of multimodal healthcare data. It can process both free text and discrete values while taking longitudinal context into account. Built on high-quality healthcare datasets, these systems give clinicians faster, deeper insights with less manual effort. For practical examples, explore Generative AI in Healthcare.

In practice, four core capabilities drive value:

1. Understands Messy Clinical Text: It parses intake notes, consult letters, and discharge summaries, extracting relationships such as “maternal aunt,” “colon cancer at 42,” or “no family history of stroke,” and links them back to the patient’s chart.

2. Builds a Cohesive Timeline: Family history events are displayed alongside labs, vitals, imaging, and medications in a single, structured view. Trends become clearer, and outliers are easier to spot and address.

3. Answers Focused Questions: The model supports diagnosis by handling prompts, such as determining eligibility for an earlier colonoscopy based on family history and current indicators, or assessing whether sufficient information exists for a genetic counseling referral.

4. Works Across Modalities: When imaging is central, a vision-language model (such as VLM-24B) pairs scan findings with textual records, thereby connecting image insights with a patient’s medical history.
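The timeline capability can be pictured as merging several event streams into one chronologically sorted list. The sketch below assumes a hypothetical `TimelineEvent` type and illustrative values; a real system would also normalize units and map labels to clinical terminologies.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List


@dataclass(frozen=True)
class TimelineEvent:
    when: date
    source: str     # e.g. "lab", "vital", "family_history"
    label: str
    value: str = ""


def build_timeline(*streams: Iterable[TimelineEvent]) -> List[TimelineEvent]:
    """Merge any number of event streams into one chronological list."""
    merged = [event for stream in streams for event in stream]
    # Sort by date first; ties are broken by source so the order is deterministic.
    return sorted(merged, key=lambda e: (e.when, e.source))


# Illustrative values only.
labs = [
    TimelineEvent(date(2024, 1, 15), "lab", "LDL", "160 mg/dL"),
    TimelineEvent(date(2022, 11, 2), "lab", "LDL", "130 mg/dL"),
]
family = [TimelineEvent(date(2023, 5, 10), "family_history", "maternal aunt: colon cancer", "onset 42")]

for event in build_timeline(labs, family):
    print(event.when, event.source, event.label, event.value)
```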

Beyond imaging, wearables provide early signals that become meaningful in context. A gradual increase in resting heart rate and drop in HRV may look minor on their own, but when combined with a sibling’s sudden cardiac death, the risk profile changes significantly. This illustrates the power of cross-analysis of health data, where value comes from integrated interpretation rather than isolated data points.
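The combined interpretation in this example can be sketched as a simple rule: estimate the trend of each wearable signal with a least-squares slope, and raise a review flag only when both trends are adverse and the family history is positive. The weekly-average inputs and the thresholds (`hr_rise_per_week`, `hrv_drop_per_week`) are hypothetical placeholders, not clinical cut-offs.

```python
from typing import Sequence


def ols_slope(values: Sequence[float]) -> float:
    """Least-squares slope per sample, treating samples as equally spaced (x = 0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def cardiac_review_flag(
    weekly_resting_hr: Sequence[float],
    weekly_hrv: Sequence[float],
    sibling_sudden_cardiac_death: bool,
    hr_rise_per_week: float = 0.5,     # hypothetical threshold, bpm per week
    hrv_drop_per_week: float = -0.5,   # hypothetical threshold, ms per week
) -> bool:
    """Flag for clinician review only when both trends are adverse AND family history is positive."""
    rising_hr = ols_slope(weekly_resting_hr) > hr_rise_per_week
    falling_hrv = ols_slope(weekly_hrv) < hrv_drop_per_week
    return sibling_sudden_cardiac_death and rising_hr and falling_hrv


hr = [60, 61, 62, 63, 64]     # illustrative weekly averages
hrv = [50, 48, 46, 44, 42]
print(cardiac_review_flag(hr, hrv, sibling_sudden_cardiac_death=True))
print(cardiac_review_flag(hr, hrv, sibling_sudden_cardiac_death=False))
```

Requiring all three conditions mirrors the point above: neither modest trend triggers anything on its own, but together with the family history they prompt a closer look.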

Early Detection and Personalized Care in Action

Generative AI turns fragmented signals into earlier insights, aiding early disease detection, diagnosis, and personalized care during real visits while keeping clinical judgment central.

Here is how it works in practice:

- Hereditary Cardiac Risk: Imagine a patient in their mid-30s whose resting heart rate has been gradually increasing over the past six months while their heart rate variability continues to decline. They have also started noticing palpitations during exercise. Combined with a sibling’s sudden cardiac death, these signals change the risk picture. An AI system may flag the connection early and suggest a cardiology evaluation or a referral for genetic testing. The doctor still makes the call, but the technology surfaces the pattern so the workup can be more focused.

- Oncology Triage: In busy clinics, important family history details are often buried in notes. Primary-care tools that scan charts and surface risk patterns can prompt timely cancer screenings. This helps patients get tested earlier without replacing the clinician’s decision-making role.

- Metabolic Risk: A family history of type 2 diabetes, combined with subtle upticks in fasting glucose and triglycerides, signals higher metabolic risk. By linking family context with current lab results, AI supports early counseling and a tighter cadence for HbA1c monitoring, which can improve adherence because patients understand the “why.”

- Inherited Cancer Syndromes: If a parent had early-onset colon cancer, screening protocols may change in both age and modality. Generative models help keep these details structured and tied to clinical orders, while flagging missing pieces of family history so future visits are better informed.
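As an illustration of tying family history to a concrete screening decision, the sketch below encodes one widely cited rule for colorectal cancer screening: when a first-degree relative was diagnosed before 60, or two or more first-degree relatives are affected, colonoscopy begins at 40 or 10 years before the youngest relative’s diagnosis, whichever is earlier. This is a simplified assumption for demonstration only; other cases are deferred to the usual pathway, and any real rule set must come from the guideline your organization follows and be reviewed by clinicians. The function names are hypothetical.

```python
from typing import List, Optional, Tuple

FIRST_DEGREE = {"mother", "father", "sister", "brother", "son", "daughter"}

# (relative, condition, age_at_diagnosis); age is None when the history is incomplete.
History = List[Tuple[str, str, Optional[int]]]


def colonoscopy_start_age(history: History) -> Optional[int]:
    """Suggested start age under the simplified rule, or None when the rule does not apply."""
    ages = [
        age
        for relative, condition, age in history
        if relative in FIRST_DEGREE and condition == "colorectal cancer" and age is not None
    ]
    if not ages:
        return None
    youngest = min(ages)
    if len(ages) >= 2 or youngest < 60:
        return min(40, youngest - 10)
    return None


def missing_onset_ages(history: History) -> List[str]:
    """Relatives with a recorded condition but no age at diagnosis: gaps to fill at the next visit."""
    return [relative for relative, _condition, age in history if age is None]


print(colonoscopy_start_age([("mother", "colorectal cancer", 45)]))
print(missing_onset_ages([("father", "colorectal cancer", None)]))
```

The second helper reflects the other point in the bullet above: an incomplete entry cannot drive the rule, so it is surfaced as a gap instead of being silently ignored.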

Building the Technical and Governance Foundation for AI

Strong outcomes begin with reliable inputs. Leveraging high-quality healthcare datasets and consistently mapping them to clinical terminologies ensures that models learn from stable signals rather than noise.

Equally important is governance in healthcare AI. Hospitals and clinics require platforms that support human review, RBAC, audit trails, and secure deployments to keep PHI secure within organizational boundaries. Enterprise tools, such as the Generative AI Lab, can provide these safeguards, but the principle is broader: governance must be designed into every project from the outset.
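One way to make an audit trail tamper-evident is to chain entries with hashes, so that editing or reordering a past entry breaks verification. The sketch below uses only Python’s standard library; the `AuditLog` class and its fields are hypothetical, and a production system would also write entries to append-only storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, str]) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only log; each entry records the hash of the one before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, user: str, action: str, resource: str) -> Dict[str, str]:
        body = {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing or reordering past entries breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("dr.lee", "view_ai_summary", "Patient/123")
log.append("dr.lee", "approve_ai_summary", "Patient/123")
print(log.verify())
```

Hash chaining makes tampering detectable, not impossible; it complements, rather than replaces, access controls on the log itself.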

Privacy should be built in from day one. Before centralizing family history with current indicators, remove identifiers across text, PDFs, tables, and imaging files. Automated de-identification can streamline this process, support compliance, and reduce manual burden. In the United States, HIPAA accepts two de-identification approaches: Safe Harbor and Expert Determination. Organizations should document which method they use and maintain a defensible audit trail.
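A minimal sketch of rule-based redaction for a handful of identifier types: emails, US-style phone numbers, numeric dates, and `MRN:`-prefixed record numbers. This falls far short of Safe Harbor, which covers 18 identifier categories including names and geographic subdivisions; names in particular usually require model-based detection rather than regular expressions. The patterns are assumptions chosen for illustration.

```python
import re

# Illustrative patterns for a few identifier types only. HIPAA Safe Harbor lists
# 18 identifier categories (names, geographic subdivisions, and more) not covered here.
PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b")),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
    ("MRN", re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE)),
]


def redact(text: str) -> str:
    """Replace each match with a bracketed type label, applying patterns in order."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Seen 03/14/2024, MRN: 884213, call 555-123-4567 or jdoe@example.org."))
```

Replacing identifiers with type labels, rather than deleting them, keeps the text readable for analysts and makes it easy to audit what was removed.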

Plan for interoperability and traceability as projects scale. Health systems should expect to exchange HL7 v2 messages, FHIR resources, DICOM images, CSV files, and device data streams. Keeping clear data lineage enables quick audits or rollbacks. Aligning payloads with the United States Core Data for Interoperability (USCDI) standard helps keep downstream data exchange predictable and compliant.
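To show what a standards-based payload can look like, the sketch below builds a FHIR R4 `FamilyMemberHistory` resource as plain JSON. The patient ID and values are hypothetical; the relationship uses the HL7 v3 RoleCode system (`MAUNT` for maternal aunt), and onset age is expressed as a UCUM quantity in years. Real resources should be validated against the profiles your exchange partners require.

```python
import json


def family_member_history(patient_id: str, relationship_code: str, relationship_display: str,
                          condition_text: str, onset_age_years: int) -> dict:
    """Build a minimal FHIR R4 FamilyMemberHistory resource as a plain dict."""
    return {
        "resourceType": "FamilyMemberHistory",
        "status": "completed",
        "patient": {"reference": f"Patient/{patient_id}"},
        "relationship": {
            "coding": [{
                "system": "http://terminology.hl7.org/CodeSystem/v3-RoleCode",
                "code": relationship_code,
                "display": relationship_display,
            }]
        },
        "condition": [{
            # Free text only here; a real payload would usually also carry a coded concept.
            "code": {"text": condition_text},
            "onsetAge": {
                "value": onset_age_years,
                "unit": "years",
                "system": "http://unitsofmeasure.org",
                "code": "a",  # UCUM code for year
            },
        }],
    }


resource = family_member_history("example-123", "MAUNT", "maternal aunt", "Colon cancer", 42)
print(json.dumps(resource, indent=2))
```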

Safeguarding Privacy and Ethics in Cross-Analysis

When applying generative AI to family and current health data, healthcare data privacy and ethical AI in healthcare must remain central. Governance practices, such as HITL review and access control, set the foundation, but organizations must also align with formal regulatory standards to ensure HIPAA-compliant AI and GDPR-compliant deployments.

In the United States, HIPAA outlines two de-identification approaches: the Safe Harbor and Expert Determination methods. Both paths are valid, provided the choice is documented and evidence is retained in an audit trail.

In the United Kingdom and the European Union, health data is special-category data under the UK GDPR and the EU GDPR. Organizations must establish a lawful basis under Article 6 and meet a specific condition under Article 9, often with additional safeguards, such as a Data Protection Impact Assessment (DPIA), for high-risk use cases.

Ethical safeguards go beyond compliance. Bias prevention and fairness are essential to ethical AI in healthcare. This includes monitoring outputs across patient subgroups, retraining with representative data, and ensuring human oversight when AI influences triage or outreach lists. Reference frameworks such as the NIST AI Risk Management Framework provide practical guidance on implementing these principles in daily operations.
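Monitoring outputs across patient subgroups can start with something as simple as comparing flag rates. The sketch below computes per-group rates and the ratio of the lowest to the highest; the input format and any alert threshold you apply to the ratio are assumptions. Equal flag rates do not prove fairness when underlying risk differs between groups, so this check complements, rather than replaces, comparing error rates against chart review.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flag_rates_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """records: (subgroup, was_flagged) pairs. Returns the share flagged per subgroup."""
    counts = defaultdict(lambda: [0, 0])  # subgroup -> [flagged, total]
    for group, flagged in records:
        counts[group][0] += int(flagged)
        counts[group][1] += 1
    return {group: flagged / total for group, (flagged, total) in counts.items()}


def min_max_ratio(rates: Dict[str, float]) -> float:
    """Lowest subgroup rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


rates = flag_rates_by_group([("A", True), ("A", False), ("B", True), ("B", True)])
print(rates, min_max_ratio(rates))
```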

Together, regulatory compliance and ethical design ensure that generative AI strengthens trust while protecting patients as it integrates family history and current health indicators into clinical workflows.

Practical Steps to Start Implementing This Approach

To put these principles into action, let’s examine the steps organizations can take:

- Start with Focused Use Cases: Select scenarios where combining family medical history with current indicators can demonstrate clear value, for example, hereditary cardiovascular risk or breast/colon cancer screening. Define outcome metrics in advance, such as reduced referral times, more accurate test ordering, or improved patient adherence. This makes implementing generative AI in healthcare easier by proving value in a controlled scope.

- Build a Secure Data Sandbox: Ingest de-identified clinical notes, labs, vitals, imaging reports, and registry data. Maintain lineage, provenance, and access controls from the start. Establishing a clean vocabulary and terminology layer early prevents rework later and lays a foundation for broader healthcare AI adoption.

- Prototype with a Healthcare LLM: Begin with practical tasks like extracting family-history details, summarizing notes, and generating short, patient-ready risk explanations. Keep prompts auditable, and validate outputs with clinicians to ensure clinical reliability.

- Add Multimodal Reasoning: If imaging is critical, implement workflows that allow a model to read scans and reports together, ensuring findings are linked to the context of lab and family history. Extend the same approach to wearables or device data where relevant.

- Operationalize De-Identification: Standardize PHI removal across text, tables, PDFs, and DICOM so analysts can safely explore patterns. Automated de-identification accelerates approval cycles and supports compliance.

- Clinically Validate Before Scaling: Run a prospective pilot with chart review. Measure real-world outcome improvements, not just model accuracy. Follow reporting frameworks such as CONSORT-AI (for trials of AI interventions) and TRIPOD+AI (for prediction models) so validation is reported rigorously, and create an internal playbook that lets other teams confidently reuse validated workflows.
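For the chart-review step of a pilot, agreement between AI flags and reviewer judgments can be summarized with standard confusion-matrix metrics. This minimal sketch assumes paired boolean labels per patient and a hypothetical function name; it measures model agreement only, so outcome measures such as referral times still need to be tracked separately.

```python
import math
from typing import Dict, Sequence


def _ratio(num: int, den: int) -> float:
    return num / den if den else math.nan  # undefined when the denominator is zero


def agreement_with_chart_review(ai_flags: Sequence[bool], reviewer_labels: Sequence[bool]) -> Dict[str, float]:
    """Compare AI flags with chart-review labels, one pair per patient."""
    if len(ai_flags) != len(reviewer_labels):
        raise ValueError("inputs must have the same length")
    pairs = list(zip(ai_flags, reviewer_labels))
    tp = sum(1 for ai, ref in pairs if ai and ref)
    fp = sum(1 for ai, ref in pairs if ai and not ref)
    fn = sum(1 for ai, ref in pairs if not ai and ref)
    tn = sum(1 for ai, ref in pairs if not ai and not ref)
    return {
        "sensitivity": _ratio(tp, tp + fn),   # share of reviewer-confirmed cases the AI flagged
        "specificity": _ratio(tn, tn + fp),   # share of reviewer negatives the AI left unflagged
        "ppv": _ratio(tp, tp + fp),           # share of AI flags the reviewer confirmed
    }


print(agreement_with_chart_review([True, True, False, False], [True, False, True, False]))
```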

Key Takeaway: Smarter Cross-Analysis

Generative AI gives healthcare teams a dependable way to connect family history with current health status. The payoff is earlier risk detection, clearer diagnostic support, and more personalized care plans.

With the proper guardrails (de-identification, human review, auditability, and privacy-first deployment), you can move faster while strengthening patient trust. Start small. Prove value. Scale with confidence.

John Snow Labs offers healthcare-specific LLMs, de-identification software, and the Generative AI Lab to help you safely implement cross-analysis of family and health data.

Schedule a demo today to explore how your organization can adopt generative AI for earlier detection and better prevention.

FAQs

1. How does generative AI improve the use of family medical history in healthcare?

It extracts key details from unstructured text (e.g., relatives, conditions, ages at onset), builds timelines, links those to current indicators, and answers focused clinical questions.

2. What types of current health indicators can be cross-analyzed with AI?

Common examples include labs such as lipids and HbA1c; vitals such as blood pressure and weight; medication histories; symptom notes; and wearable metrics such as sleep and resting heart rate.

3. How is patient data kept private and HIPAA-compliant?

Remove identifiers using a documented HIPAA de-identification method, either Safe Harbor or Expert Determination, before data is used for analysis. Pair this with least-privilege access, immutable audit logs, and deployments that keep PHI inside your network.

4. Can AI predict disease risks earlier than traditional methods?

AI doesn’t replace clinicians, but it can surface patterns across family history and current signals that prompt earlier workups and more targeted screening.

5. What are the first steps for hospitals and clinics to adopt this approach?

Choose a narrow use case and set outcome metrics. After that, build a secure de-identified sandbox and prototype with a healthcare LLM. You can also add multimodal reasoning where relevant. Finally, run a prospective pilot before scaling.