In the evolving landscape of healthcare and life sciences, innovation alone is no longer enough. Executive leadership across hospitals, payers, and pharma must now answer a more complex question: Can this AI solution drive measurable impact while meeting the rigorous demands of regulatory compliance?

For a growing number of organizations, large language models (LLMs) promise to transform workflows, from documentation and diagnosis support to risk adjustment and quality measurement. Yet without governance, these same models risk becoming unscalable liabilities. The stakes are high: HIPAA, GDPR, and FDA oversight now extend well beyond data storage and touch every layer of the AI lifecycle, from training inputs to prompt engineering.

The rising tide of regulation

Agencies across the globe are formalizing expectations around AI and LLMs. The FDA’s AI/ML Action Plan calls for health AI systems to incorporate lifecycle monitoring, including real-world performance analysis and human oversight. The EU AI Act mandates strict governance controls for high-risk applications, a category that includes healthcare. In parallel, HIPAA’s evolving interpretation increasingly includes model transparency and the traceability of decisions involving protected health information.

For executive teams, the message is clear: trust in AI is no longer earned solely through accuracy; it must be underpinned by accountability.

Governance as the foundation of deployment

A robust LLM governance framework offers more than just peace of mind. It is the infrastructure that enables sustainable AI adoption. With Generative AI Lab, John Snow Labs operationalizes governance across the entire AI stack:

Through role-based workflows, leadership teams can ensure that only approved personnel fine-tune or modify deployed models. Every change is logged, time-stamped, and reviewable, satisfying not just IT policy but also GDPR, GxP, and HIPAA audit standards. This becomes especially critical when AI is deployed across multiple departments or business units.
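For technical readers, the idea of a role-gated, time-stamped, reviewable change log can be illustrated with a minimal sketch. This is not Generative AI Lab's implementation or API; the role names, the `AuditLog` class, and the hash-chaining scheme are all assumptions chosen for illustration. Each entry stores the hash of the previous one, so a later edit to any record can be detected on review.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role names for illustration only.
ROLES_ALLOWED_TO_MODIFY = {"ml_engineer", "model_admin"}


def _digest(entry: dict) -> str:
    """Stable SHA-256 over an entry's fields (keys sorted for determinism)."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, action: str, model_id: str) -> bool:
        """Log an attempted change and return whether the role permits it.

        Denied attempts are logged too, so reviewers see them.
        """
        allowed = role in ROLES_ALLOWED_TO_MODIFY
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "model_id": model_id,
            "allowed": allowed,
            # Link to the previous entry so edits to history are detectable.
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = _digest(entry)
        self.entries.append(entry)
        return allowed

    def verify(self) -> bool:
        """Recompute the hash chain; False means some entry was altered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("alice", "ml_engineer", "fine_tune", "clinical-ner-v2")  # permitted
log.record("bob", "analyst", "fine_tune", "clinical-ner-v2")        # denied, still logged
```

In a production setting the log would live in append-only storage with its own access controls; the in-memory list here only demonstrates the record-and-verify pattern.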

Human-in-the-loop (HITL) validation is deeply embedded, allowing clinical experts to verify outputs, assess hallucination risk, and score fairness, creating a defensible record of human oversight. Unlike open-source stacks that treat HITL as an afterthought, John Snow Labs has made it a native capability, refined through years of work in clinical NLP and de-identification.

A framework that anticipates what’s next

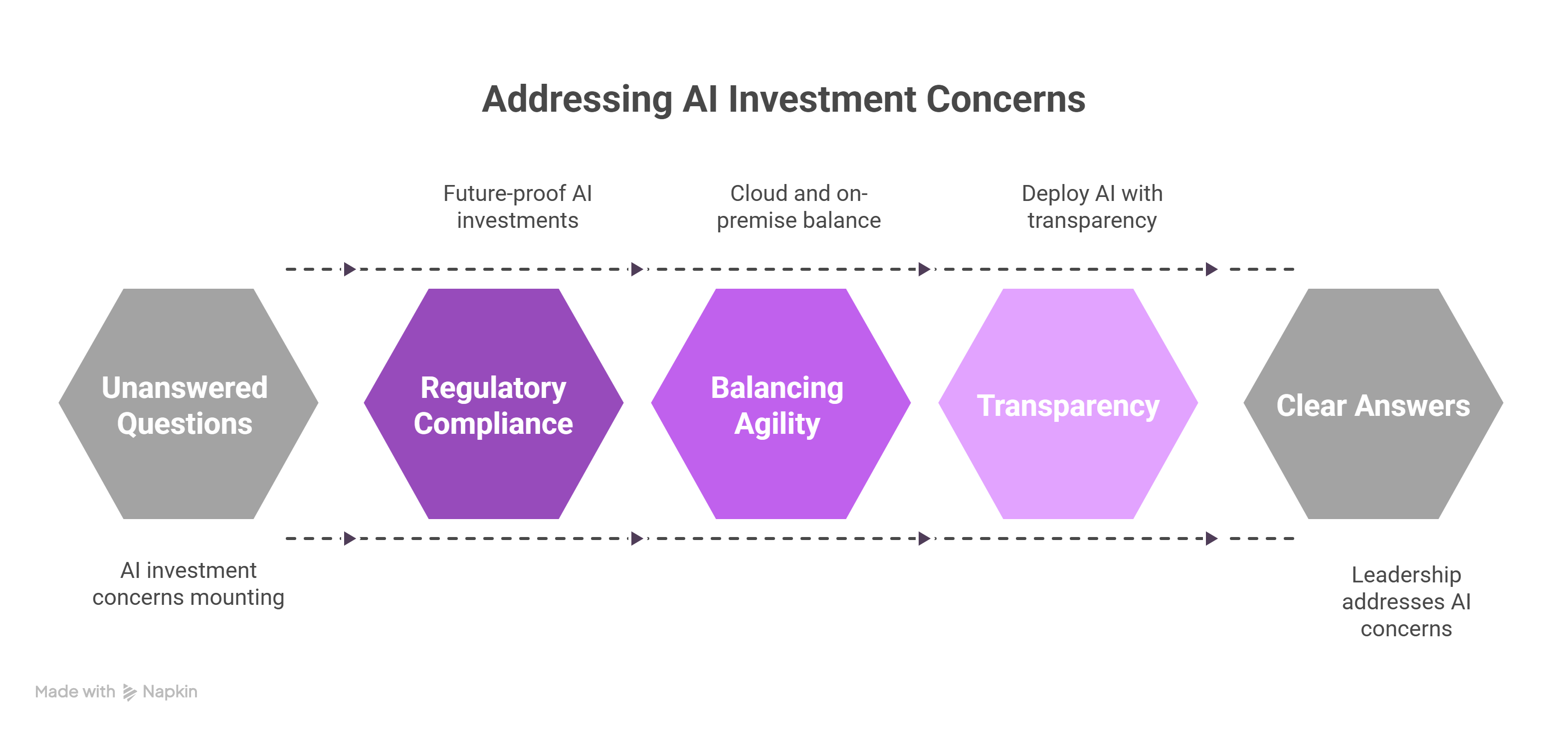

Executives face mounting questions: How do we future-proof our AI investments in the face of evolving regulations? How do we balance cloud-based agility with on-premise control? What’s the cost, not only in dollars but in organizational trust, of deploying AI without transparency?

These aren’t abstract concerns. They reflect what leadership teams must answer in boardrooms, summit panels, and regulatory filings. And they’re exactly the questions John Snow Labs is built to address.

An invitation to lead with confidence

Healthcare AI no longer lives in the lab; it lives in clinical notes, risk scores, and treatment decisions. For innovation to drive sustainable value, it must be governed. John Snow Labs offers more than tools; it offers a framework for confidence, built on years of clinical expertise, compliance alignment, and domain-specific engineering.

If your team is charting the course for compliant, scalable AI, you may want to explore how Generative AI Lab can help transform governance from a barrier into a business advantage.