Why must medical‑AI systems be engineered for compliance and beyond?

The EU AI Act classifies many healthcare AI systems, especially diagnostics, clinical decision support and device‑embedded AI, as high‑risk, with extensive obligations around data governance, transparency, human oversight and ongoing monitoring.

For organisations deploying medical‑AI, compliance is not simply a tick‑box; it is foundational to product viability, market access, and trust. Without building for compliance from the beginning, systems risk regulatory rejection, delayed roll‑out or disqualification from key European markets.

But engineering for survival means going beyond compliance: designing systems that are resilient, trustworthy, auditable and aligned with established quality frameworks.

What are the core obligations under the EU AI Act for healthcare AI?

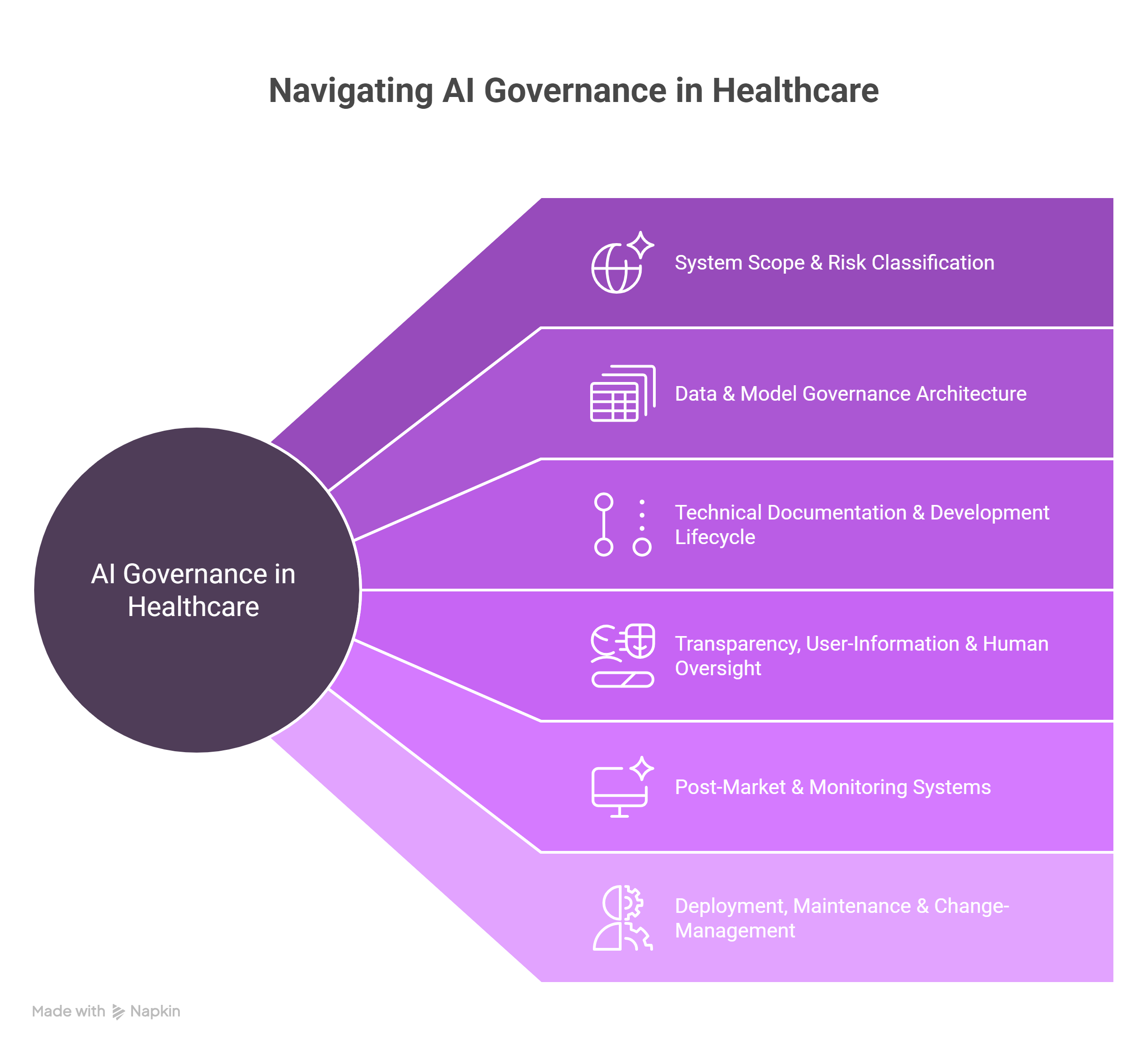

To engineer a medical‑AI system that survives, developers must account for several layered obligations:

- Risk‑classification: AI systems for medical purposes are often classified as high‑risk.

- Data governance and quality: Systems must rely on high‑quality, representative data.

- Technical documentation: Detailed records of system design, performance, robustness, mitigation of bias, etc.

- Transparency and human oversight: Users (clinicians and patients) must be informed of the system’s purpose, capabilities and limitations, and human oversight mechanisms must be implemented.

- Post‑market monitoring and incident reporting: Ongoing performance evaluation, logging, processes for serious incident handling.

- Interplay with existing regulation: Systems may also fall under EU MDR/EU IVDR or other medical‑device laws, requiring integrated compliance efforts.
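As one illustration of the human oversight obligation listed above, the following minimal Python sketch queues every AI suggestion for clinician review and records whether the clinician accepted or overrode it. All names here (`Suggestion`, `Decision`, `triage`, `sign_off`, `REVIEW_THRESHOLD`) are hypothetical and chosen for illustration; the threshold value is an assumption, not something prescribed by the AI Act.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: a real value would come from clinical validation.
REVIEW_THRESHOLD = 0.90


@dataclass
class Suggestion:
    patient_ref: str
    finding: str
    confidence: float
    model_version: str


@dataclass
class Decision:
    suggestion: Suggestion
    status: str  # "pending_review", "signed_off" or "overridden"
    priority: str = "routine"
    clinician_id: Optional[str] = None
    final_finding: Optional[str] = None


def triage(suggestion: Suggestion) -> Decision:
    """Queue every AI suggestion for review; low confidence raises priority."""
    priority = "routine" if suggestion.confidence >= REVIEW_THRESHOLD else "urgent"
    return Decision(suggestion=suggestion, status="pending_review", priority=priority)


def sign_off(decision: Decision, clinician_id: str,
             final_finding: Optional[str] = None) -> Decision:
    """Record the clinician's decision; a differing finding counts as an override."""
    if not clinician_id:
        raise ValueError("a named clinician must own the decision")
    accepted = final_finding is None or final_finding == decision.suggestion.finding
    decision.clinician_id = clinician_id
    decision.final_finding = decision.suggestion.finding if accepted else final_finding
    decision.status = "signed_off" if accepted else "overridden"
    return decision


if __name__ == "__main__":
    s = Suggestion("patient-001", "suspected pneumonia", 0.72, "1.4.0")
    d = sign_off(triage(s), clinician_id="dr-example", final_finding="no acute findings")
    print(d.status, d.priority, d.suggestion.model_version)
```

The design choice worth noting is that no suggestion becomes a final finding without a named clinician, and the model version travels with each decision so that overrides can later be analysed per release.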

How to engineer a compliant medical‑AI system: architecture, process and governance

Here is a structured engineering playbook for building a medical‑AI system that meets, and goes beyond, the EU AI Act requirements:

- Define system scope and risk classification
  - Map the clinical use‑case: is it decision support, autonomous diagnosis, triage or image interpretation?
  - Determine whether the system qualifies as “high‑risk” under the AI Act (likely yes in healthcare).
  - Align with device classification (MDR/IVDR) to understand which conformity pathways apply.

- Design data and model governance architecture
  - Build pipelines that ingest diverse clinical modalities (EHR text, images, labs, genomics) with traceability.
  - Document data provenance, representativeness, completeness and bias‑mitigation strategies.
  - Establish model versioning, change control, audit logs and human‑in‑the‑loop steps.

- Technical documentation and development lifecycle
  - Maintain the equivalent of a design history file: requirement specs, architecture diagrams, risk‑management evidence.
  - Demonstrate model robustness: performance metrics, adversarial testing, robustness to drift.
  - Integrate explainability features that show why the model produced a given output, and link each explanation to data provenance.

- Transparency, user information and human oversight
  - Provide clinician users with clear instructions for use, limitations and performance characteristics.
  - Embed human‑in‑the‑loop controls: override options, alerts, escalation workflows.
  - Ensure the user interface clearly flags AI‑supported suggestions.

- Post‑market and monitoring systems
  - Establish continuous performance tracking: key metrics, drift detection, error analyses.
  - Log serious incidents and define how they are handled and reported.
  - Establish a documented process for model updates.
  - Define governance committees for oversight: data/AI ethics board, safety board.

- Deployment, maintenance and change management
  - Tie version control and change management into documentation. Changes to model or data must trigger re‑assessment.
  - Deploy in controlled environments (private cloud, on‑premise) with appropriate security and role‑based access.
  - Ensure integration with clinical workflow (EHR, RIS, PACS) while maintaining clear separation of AI model outputs and decision ownership.
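To make the audit‑log and change‑control steps above more concrete, here is a minimal Python sketch of a tamper‑evident (hash‑chained) audit log, plus a release check that flags re‑assessment whenever the model or training‑data fingerprint changes. This is an illustrative assumption about how such a mechanism could look, not a description of any specific product; `AuditLog`, `register_release` and the dictionary keys `model_sha256` and `dataset_sha256` are hypothetical names.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash everything except the stored hash itself, with stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, event: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was edited or the chain was broken."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


def register_release(log: AuditLog, current: dict, candidate: dict) -> bool:
    """Log a release; flag re-assessment if model or dataset fingerprints changed."""
    changed = [key for key in ("model_sha256", "dataset_sha256")
               if current.get(key) != candidate.get(key)]
    needs_reassessment = bool(changed)
    log.append("release_registered", {
        "version": candidate["version"],
        "changed": changed,
        "needs_reassessment": needs_reassessment,
    })
    return needs_reassessment


if __name__ == "__main__":
    log = AuditLog()
    v1 = {"version": "1.0.0", "model_sha256": "aaa", "dataset_sha256": "ddd"}
    v2 = {"version": "1.1.0", "model_sha256": "bbb", "dataset_sha256": "ddd"}
    print(register_release(log, v1, v2), log.verify())
```

In a real deployment the log would live in write‑once storage and the fingerprints would be actual SHA‑256 digests of the model artefact and dataset manifest; the sketch only shows the chaining and the re‑assessment trigger.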

How John Snow Labs supports engineering healthcare AI for EU compliance

John Snow Labs offers a suite of capabilities and infrastructure designed to help healthcare AI providers navigate this regulatory environment. By leveraging these tools, healthcare AI organizations can shift from ad‑hoc compliance to embedded regulatory alignment.

- Healthcare‑specific NLP & data pipelines: These provide clean, curated clinical text, entity‑extraction outputs, data provenance and audit logs, addressing key data governance obligations.

- Generative AI Lab & model orchestration: A no‑code/low‑code platform enabling pipeline chaining, model lifecycle control, human‑in‑the‑loop integration and change‑history management, facilitating transparency and oversight.

- Secure deployment options and audit‑ready architecture: On‑premise or private‑cloud installations with logging, role‑based access and environment controls aligned with high‑risk healthcare AI use‑cases.

- Risk‑management and documentation frameworks: With experience in clinical NLP and medical‑AI productization, we support clients in building the documentation, version control, performance monitoring and governance frameworks required under EU regulation.

- Change‑control and versioning pipelines: When model changes are required (for example, after guideline updates or retraining), John Snow Labs platforms support tracing, rollback, impact assessment and audit logs.

Why go beyond compliance and embed trust‑by‑design?

Compliance alone is necessary but not sufficient. Building systems that succeed in practice and remain viable over time requires:

- Scalability and adaptability: Clinical practice evolves rapidly; your AI must support ongoing updates without breaking compliance.

- Trust and clinician adoption: Transparent, explainable systems foster clinician trust. Higher adoption in turn improves safety and yields usage data that feeds back into performance.

- Interoperability: Alignment with standards (HL7 FHIR, DICOM, etc.), metadata tracking and system integration avoid data silos.

- Continuous learning and auditing: Systems must be set up for feedback loops, error detection and improvement. This aligns with evolving regulatory expectations around monitoring.

- Market differentiation: Compliance becomes a baseline. Trustworthiness, auditability, and integration become competitive advantages in healthcare product markets.
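The continuous monitoring item above can be sketched with a simple drift check. The example below computes the Population Stability Index (PSI) between a reference sample (for example, validation‑time model scores) and a live sample. PSI is one common drift statistic, chosen here for illustration; the function names and the 0.10 / 0.25 cut‑offs are a widely used rule of thumb and an assumption, not regulatory thresholds.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index using equal-width bins over the reference range."""
    if not reference or not live:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to the edge bins
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p = proportions(reference)
    live_p = proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


def drift_status(value: float) -> str:
    """Map a PSI value to an action using rule-of-thumb cut-offs (assumed)."""
    if value < 0.10:
        return "stable"
    if value < 0.25:
        return "investigate"
    return "escalate"


if __name__ == "__main__":
    reference_scores = [i / 100 for i in range(100)]     # roughly uniform on [0, 1)
    live_scores = [0.5 + i / 200 for i in range(100)]    # shifted towards high scores
    value = psi(reference_scores, live_scores)
    print(round(value, 2), drift_status(value))
```

An "escalate" result would typically feed the incident and model‑update processes rather than trigger automatic retraining, so that changes remain under human and documentary control.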

What happens if you don’t engineer for survival?

Failing to embed compliance and resilience can result in:

- Market exclusion in the EU, since placing a high‑risk AI system on the market without the required conformity assessment may be prohibited or heavily restricted.

- Regulatory enforcement risk: large fines and reputational damage.

- Slow time‑to‑market due to rework under regulatory scrutiny, with increased cost and missed innovation windows.

- Low clinician trust, poor adoption, limited real‑world impact and possible legal liability if oversight is insufficient.

Conclusion: Engineering now for tomorrow’s regulation and clinical reality

The EU AI Act marks a paradigm shift for medical‑AI systems: compliance is indispensable, but survival in the market demands much more. Healthcare AI vendors and product teams must embed governance, transparency, human‑in‑the‑loop oversight, continuous monitoring and version‑control architectures from day one.

John Snow Labs helps organizations transition from regulatory fear to operational engineering. By engineering for compliance and resilience, organizations can launch AI systems that not only meet regulatory obligations, but gain clinician trust, deliver real‑world value and survive as products over time.

FAQs

Q: If an AI system is already CE‑marked under MDR, does it automatically comply with the EU AI Act?

A: Not necessarily. While MDR CE‑marking addresses safety and performance, the AI Act adds specific obligations around transparency, data governance, human oversight, incident logging and change‑management.

Q: When do the EU AI Act obligations become applicable for high‑risk medical AI systems?

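A: The regulation entered into force on 1 August 2024. Under the Act as adopted, obligations for high‑risk systems apply 24 to 36 months later depending on the type of system: from 2 August 2026 in general, and from 2 August 2027 for AI that is part of products already covered by EU product legislation such as the MDR and IVDR.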

Q: Does building for compliance hinder innovation?

A: On the contrary, it creates a foundation for trustworthy AI, faster market entry, fewer regulatory delays and stronger clinician and user adoption.