Why is transparency essential in health AI systems?

Artificial Intelligence (AI) is reshaping healthcare by enabling scalable diagnostics, decision support, and operational efficiency. Yet, adoption depends on one critical factor: trust. Clinicians, patients, and regulators need assurance that AI models are reliable, safe, and explainable. Transparency measures are the foundation of that trust, ensuring that AI-driven insights can be understood, validated, and integrated into clinical care.

Transparency in health AI is not only an ethical requirement but also a regulatory one. Frameworks like the FDA’s Good Machine Learning Practice (GMLP) guiding principles and the European Union’s AI Act emphasize explainability, auditability, and fairness. Without these measures, AI adoption risks stalling amid uncertainty and a lack of accountability.

What does transparency mean in the context of health AI?

Transparency in health AI refers to making the design, functioning, and decision-making of AI systems understandable to stakeholders. It involves:

- Explainability: Providing human-interpretable insights into how predictions or recommendations are generated.

- Auditability: Ensuring that AI workflows, from data input to output, are traceable for compliance and validation.

- Data Provenance: Documenting the source, quality, and handling of data used to train and validate models.

- Bias Detection and Mitigation: Making visible the steps taken to identify and reduce inequities in model outputs.

In healthcare, transparency must serve two audiences: clinical experts, who need enough detail to validate AI outputs, and non-experts such as patients, administrators, and regulators, who need clarity without overwhelming technical jargon.
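As a rough illustration of the data provenance item above, the sketch below records where a dataset came from and pins its exact contents with a SHA-256 checksum, so that a later audit can confirm the same bytes were used. The `ProvenanceRecord` fields, the dataset name, and the sample rows are all hypothetical and chosen only for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance entry; field names are illustrative assumptions."""
    dataset_name: str
    source: str
    collected: str
    preprocessing: tuple
    sha256: str  # checksum of the exact bytes used for training or validation


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the dataset bytes."""
    return hashlib.sha256(data).hexdigest()


# Made-up rows standing in for a real, de-identified export
raw = b"patient_id,age,label\n001,54,1\n002,61,0\n"

record = ProvenanceRecord(
    dataset_name="example-cohort",
    source="hypothetical hospital export",
    collected="2024-01",
    preprocessing=("de-identified", "deduplicated"),
    sha256=fingerprint(raw),
)

# Later, an auditor re-hashes the file and compares it with the record
print(fingerprint(raw) == record.sha256)
```

A checksum only shows that the data has not changed since it was recorded; it says nothing about data quality, which still needs to be documented separately.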

How can transparency be embedded into AI model development?

Building transparency starts at the design stage and extends through deployment. Key measures include:

- Model Documentation: Using tools like model cards and datasheets to describe training data, validation processes, and intended use cases.

- Validation Protocols: Conducting internal and external validations across diverse populations to ensure generalizability.

- Version Control and Traceability: Tracking model iterations and maintaining logs of changes for accountability.

- Performance Reporting: Publishing clinically relevant metrics such as sensitivity, specificity, and fairness indicators.

By embedding these practices, developers ensure that transparency is not an afterthought but a core design principle.
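The documentation and performance-reporting measures above can be sketched together: a minimal model card that carries sensitivity and specificity broken down by patient subgroup. This is an assumption-laden illustration rather than a standard schema; the `ModelCard` fields, the group labels, and the toy predictions are all invented for the example.

```python
import json
from dataclasses import asdict, dataclass, field


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sens = tp / (tp + fn) if (tp + fn) else None
    spec = tn / (tn + fp) if (tn + fp) else None
    return sens, spec


def metrics_by_group(y_true, y_pred, groups):
    """Compute sensitivity and specificity separately for each subgroup."""
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        sens, spec = sensitivity_specificity(
            [y_true[i] for i in idx], [y_pred[i] for i in idx]
        )
        report[g] = {"n": len(idx), "sensitivity": sens, "specificity": spec}
    return report


@dataclass
class ModelCard:
    """Hypothetical minimal model card; not a standard schema."""
    name: str
    version: str
    intended_use: str
    training_data: str
    limitations: list = field(default_factory=list)
    subgroup_metrics: dict = field(default_factory=dict)

    def to_json(self):
        return json.dumps(asdict(self), indent=2)


# Toy, made-up labels purely for illustration
y_true = [1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

card = ModelCard(
    name="example-classifier",
    version="0.1.0",
    intended_use="Illustration only; not for clinical use.",
    training_data="Hypothetical dataset description goes here.",
    limitations=["Toy data; metrics are not meaningful."],
    subgroup_metrics=metrics_by_group(y_true, y_pred, groups),
)
print(card.to_json())
```

In this toy data, group A has sensitivity 0.5 and specificity 1.0 while group B has the reverse. Reporting metrics per subgroup is what makes that kind of gap visible, since an aggregate figure would hide it.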

What role does explainable AI (XAI) play in healthcare?

Explainable AI (XAI) provides insight into how AI systems arrive at specific decisions. In healthcare, this is vital for:

- Clinical Validation: Radiologists or oncologists can review AI-generated heatmaps or extracted entities to confirm outputs.

- Error Analysis: Understanding why a model failed helps refine it for future use.

- Patient Communication: Transparent reasoning enables clinicians to explain AI-supported decisions to patients, improving trust and shared decision-making.

For example, saliency maps in imaging AI highlight which regions influenced predictions, while NLP pipelines can provide highlighted text spans to support extracted entities.
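To make the text-span idea concrete, here is a minimal sketch of how extracted entities might be shown alongside the exact text that supports them. It assumes an upstream extractor that returns character offsets; the `EntitySpan` type, the `span_for` stand-in for that extractor, and the `[[text|LABEL]]` markup are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EntitySpan:
    start: int  # character offset where the entity begins (inclusive)
    end: int    # character offset where the entity ends (exclusive)
    label: str  # entity type, e.g. "DRUG"


def span_for(text, phrase, label):
    """Stand-in for a real extractor: locate a phrase and return its span."""
    start = text.index(phrase)
    return EntitySpan(start, start + len(phrase), label)


def highlight(text, spans):
    """Wrap each span as [[text|LABEL]] so a reviewer sees the supporting text."""
    out, cursor = [], 0
    for s in sorted(spans, key=lambda s: s.start):
        if s.start < cursor:
            raise ValueError("overlapping spans are not supported in this sketch")
        out.append(text[cursor:s.start])
        out.append(f"[[{text[s.start:s.end]}|{s.label}]]")
        cursor = s.end
    out.append(text[cursor:])
    return "".join(out)


note = "Patient started metformin for type 2 diabetes."
spans = [
    span_for(note, "metformin", "DRUG"),
    span_for(note, "type 2 diabetes", "CONDITION"),
]
print(highlight(note, spans))
# Patient started [[metformin|DRUG]] for [[type 2 diabetes|CONDITION]].
```

Keeping offsets rather than copied strings lets a reviewer trace each entity back to its exact position in the source note.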

How does transparency support regulatory compliance?

Transparency measures align closely with healthcare regulatory requirements:

- FDA’s GMLP calls for clear documentation of training and testing data.

- HIPAA and GDPR demand secure, auditable data handling.

- The EU AI Act classifies many healthcare AI systems, such as AI-based medical devices, as high-risk, requiring transparency, traceability, and human oversight.

Transparent AI systems help meet these compliance requirements and can also make audits smoother and faster, reducing organizational risk.

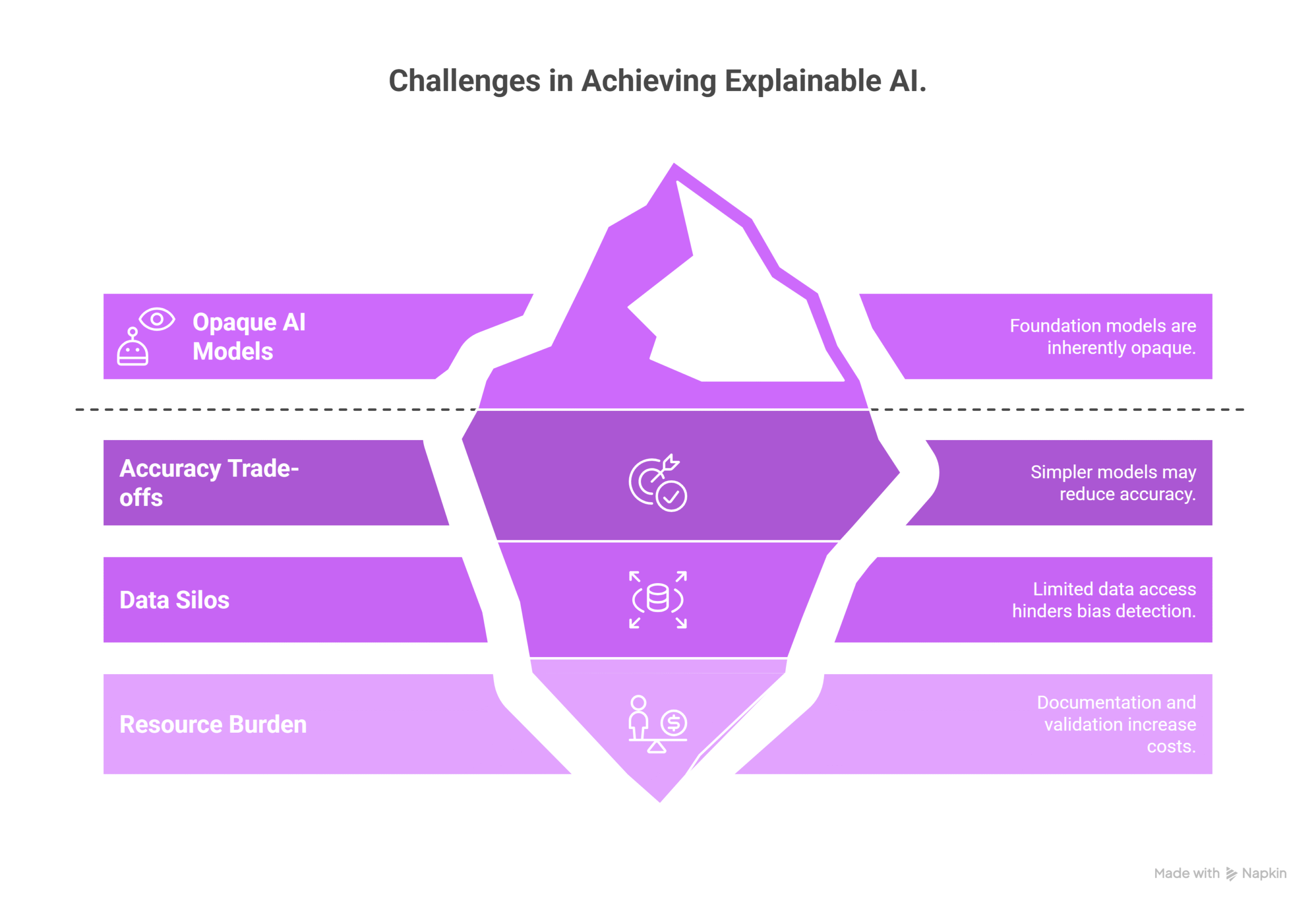

What are the challenges in implementing transparency?

Despite its importance, transparency in health AI faces several hurdles:

- Complex Models: Foundation models and deep neural networks are inherently opaque.

- Trade-offs: Simplifying models for explainability may reduce accuracy.

- Data Silos: Limited access to diverse datasets makes bias detection difficult.

- Resource Burden: Documentation and validation add cost and time.

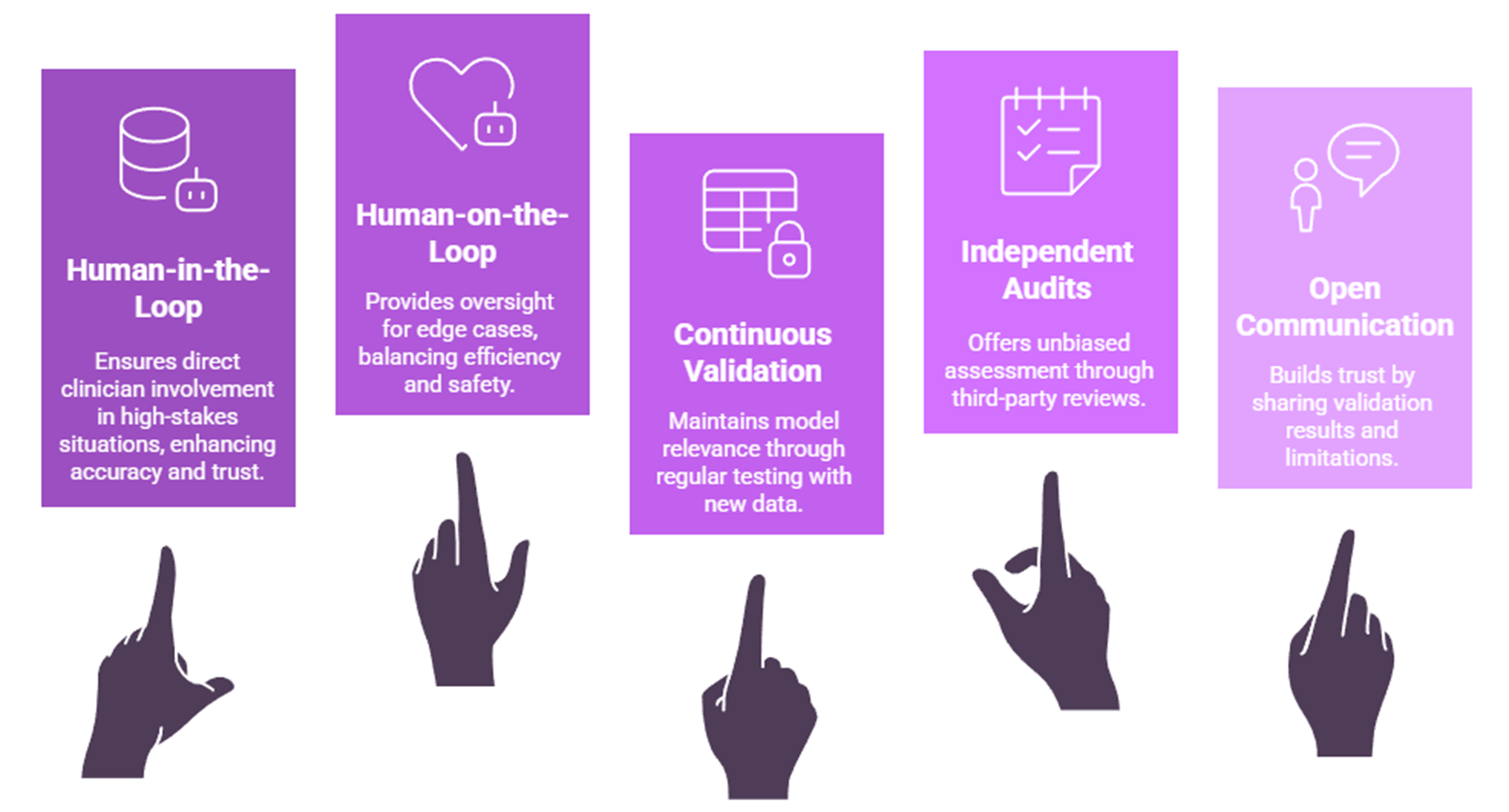

What best practices can organizations adopt for trustworthy health AI?

Organizations building or deploying health AI can adopt:

- Human-in-the-Loop (HITL) Approaches: Clinicians directly review every AI output in a given workflow, which suits high-stakes situations.

- Human-on-the-Loop (HOTL) Approaches: Clinicians act as supervisors, providing oversight and intervening only when necessary, for example on edge cases.

- Continuous Validation: Regularly testing models against new patient data to ensure relevance.

- Independent Audits: Leveraging third-party reviews for unbiased assessment.

- Open Communication: Sharing validation results and limitations with stakeholders.
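The difference between the HITL and HOTL approaches listed above can be sketched as a simple routing rule. The example assumes the model reports a confidence score and that the workflow's mode is set by policy; the `Prediction` type and the 0.90 escalation threshold are hypothetical values for illustration, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model-reported score in [0, 1]


def needs_clinician_review(pred, mode, escalation_threshold=0.90):
    """Decide whether a prediction is queued for clinician review.

    HITL: every output in the workflow is reviewed.
    HOTL: only outputs below the (assumed) confidence threshold are escalated.
    """
    if mode == "HITL":
        return True
    if mode == "HOTL":
        return pred.confidence < escalation_threshold
    raise ValueError(f"unknown oversight mode: {mode!r}")


routine = Prediction("c-001", "negative", 0.97)
edge_case = Prediction("c-002", "positive", 0.62)

print(needs_clinician_review(routine, "HITL"))    # True
print(needs_clinician_review(routine, "HOTL"))    # False
print(needs_clinician_review(edge_case, "HOTL"))  # True
```

In practice, the escalation criteria would likely combine more than a single score, and the threshold itself would need validation, since model confidence is not always well calibrated.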

What does the future of transparent health AI look like?

The future points toward standardized frameworks for transparency and explainability in health AI. Emerging trends include:

- Federated Learning and Privacy-Preserving AI: Supporting transparency while protecting patient data.

- Interactive Explainability Tools: Allowing clinicians to probe AI reasoning dynamically.

- Regulatory-Grade Audit Trails: Automated, immutable records of model decisions and data usage.
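One common way to approximate the audit-trail idea above is a hash chain, where each log entry stores the hash of the previous one so that later edits become detectable. The sketch below is a minimal in-memory illustration under that assumption; the `AuditTrail` class and the event fields are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json


def _digest(record):
    """Hash a record deterministically by serializing it with sorted keys."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditTrail:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, event):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"index": len(self.entries), "event": event, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Return True only if every entry's hash and back-link are consistent."""
        prev_hash = self.GENESIS
        for i, entry in enumerate(self.entries):
            body = {
                "index": entry["index"],
                "event": entry["event"],
                "prev_hash": entry["prev_hash"],
            }
            if (
                entry["index"] != i
                or entry["prev_hash"] != prev_hash
                or entry["hash"] != _digest(body)
            ):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append({"model": "example-classifier", "version": "0.1.0",
              "case_id": "c-001", "output": "positive"})
trail.append({"model": "example-classifier", "version": "0.1.0",
              "case_id": "c-002", "output": "negative"})
print(trail.verify())  # True

# Simulate tampering with a past decision
trail.entries[0]["event"]["output"] = "negative"
print(trail.verify())  # False
```

A hash chain makes the log tamper-evident rather than truly immutable: changes can still be made, but they no longer pass verification.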

John Snow Labs is actively developing explainable, auditable NLP and LLM pipelines to meet these needs, helping healthcare organizations deploy trustworthy AI at scale.

Why does transparency matter for the future of health AI?

Transparency is the bridge between innovation and adoption. Without it, even the most accurate AI models risk being sidelined due to lack of trust. By prioritizing explainability, auditability, and fairness, healthcare organizations can safely harness AI’s potential while ensuring accountability.

John Snow Labs provides transparent, domain-specific AI solutions that balance innovation with compliance. From NLP pipelines to domain-specific large language models, our systems are built with explainability at their core.

Interested in deploying trustworthy AI? Explore our Healthcare AI offerings or contact us to discuss how we can support your organization.

FAQs

What is transparency in health AI?

Transparency means making AI systems understandable and auditable, including their data sources, reasoning, and outputs.

Why is transparency important in healthcare AI?

It builds trust with clinicians, patients, and regulators, ensuring safe adoption and compliance with laws.

How do explainability tools work in health AI?

They highlight the reasoning behind outputs, such as marking text spans in NLP or visual regions in imaging AI.

Can transparency reduce AI bias?

Yes, it can help. By documenting datasets and publishing fairness metrics, organizations can detect biases in model outputs and take steps to mitigate them.

Does transparency slow down AI development?

It adds steps, but transparency can ultimately accelerate adoption by reducing resistance and helping meet regulatory requirements.

Supplementary Q&A

How does HITL support transparency?

Human-in-the-loop workflows allow clinicians to validate and refine AI outputs, creating a feedback loop that improves accuracy and accountability.

What is the difference between human-in-the-loop (HITL) and human-on-the-loop (HOTL)?

In Human-in-the-Loop (HITL), humans participate directly in critical AI processes, making decisions and refining outputs at key points. In Human-on-the-Loop (HOTL), a human supervises an already functioning system and intervenes only when necessary. HITL is hands-on and suits high-stakes or complex situations, whereas HOTL is supervisory and suits mature systems that handle high volumes of routine tasks.

What are model cards and datasheets in AI?

They are documentation tools that describe a model’s purpose, training data, performance, and limitations, supporting informed deployment.

Is transparency only relevant for regulators?

No. Patients and clinicians also benefit from clear explanations of AI outputs, improving care quality and shared decision-making.