Many people I know started reading about and learning machine learning after seeing its power in the evolution of face recognition. Everyone wonders how machine learning can help with face recognition. Does it also need training datasets? What do I need to know to understand these techniques and the procedures behind face recognition?

China and Face Recognition

In China, facial recognition is becoming one of the most important sectors to invest in. As local governments adopt surveillance strategies, China’s State Council has declared that by 2030, investments in the Artificial Intelligence industry may reach $150 billion.

SenseTime and Megvii are leading Chinese companies working in artificial intelligence and face recognition. Their work was markedly boosted by the government's and the police's need for surveillance technologies.

Do I need training datasets in the face recognition process?

Very large training datasets are not always as good as most people think. The proper size of a training dataset varies from case to case, depending on many factors. If your data has too many variables, you may be tempted to run the model on all of them, which can lead to poor accuracy. You may also find many correlated variables. All these factors are risks that could have a negative impact on your project.
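One practical way to spot the correlated variables mentioned above is to check the pairwise correlation matrix before training. This is a minimal sketch that assumes NumPy and synthetic data. The helper name `correlated_pairs` and the 0.9 threshold are hypothetical choices made for illustration.

```python
import numpy as np

def correlated_pairs(X, threshold=0.9):
    """Return (i, j, r) for feature pairs whose absolute Pearson correlation exceeds threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns of X are the variables
    n_features = corr.shape[0]
    pairs = []
    for i in range(n_features):
        for j in range(i + 1, n_features):
            if abs(corr[i, j]) > threshold:
                pairs.append((i, j, float(corr[i, j])))
    return pairs

rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = rng.normal(size=200)
# Column 2 is almost a scaled copy of column 0, so it adds little new information.
X = np.column_stack([a, b, 2.0 * a + 0.01 * rng.normal(size=200)])
print(correlated_pairs(X))
```

A flagged pair is a candidate for dropping one variable, or for merging both with a technique such as PCA.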

It is clear now that determining how much training data your project needs is not an easy task, and it does not always go smoothly when you are the sole decision-maker. Consider looking for specialized data scientists who can help you with this work. Determining the right amount of data and providing you with precise, clean data could save you 65% of your time. John Snow Labs is one of those successful companies, with a team of data experts drawn from 17 countries. 80% of the team hold an MSc, and 33% hold a PhD. The diversity of the team enables it to handle data projects in more than 20 different languages.

To handle and mitigate these risks, you need a basic understanding of neural networks, dimensionality reduction, eigenvectors, and Principal Component Analysis (PCA).

Let us first look at what PCA is.

PCA

PCA is a statistical method that aims to make data visualization and exploration simpler. It extracts a smaller number of uncorrelated variables (principal components) from the large number of variables found in big datasets.

The main goal is to capture the maximum amount of variance with the fewest principal components.
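The idea can be sketched in a few lines. The steps are to center the data, compute its covariance matrix, and take the eigenvectors with the largest eigenvalues as the principal components. This is a minimal illustration assuming NumPy and synthetic data. The function name `pca` is hypothetical and not a specific library's API.

```python
import numpy as np

def pca(X, n_components):
    """Project rows of X onto the top principal components.

    Returns the projected data, the components (one per row), and the
    fraction of total variance each kept component explains.
    """
    X_centered = X - X.mean(axis=0)               # PCA works on mean-centered data
    cov = np.cov(X_centered, rowvar=False)        # covariance between variables
    eigvals, eigvecs = np.linalg.eigh(cov)        # eigh: cov is symmetric; values come back ascending
    order = np.argsort(eigvals)[::-1]             # sort by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    components = eigvecs[:, :n_components].T
    explained = eigvals[:n_components] / eigvals.sum()
    return X_centered @ components.T, components, explained

rng = np.random.default_rng(42)
t = rng.normal(size=500)
# Three variables driven mostly by a single hidden factor t, plus a little noise.
X = np.column_stack([t, 0.5 * t, -t]) + 0.05 * rng.normal(size=(500, 3))
Z, comps, ratio = pca(X, n_components=2)
print(Z.shape, ratio)
```

Here the first component alone explains almost all the variance, and the projected columns of `Z` are uncorrelated with each other.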

PCA for images

Understanding how machine learning works for face recognition is exciting.

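Let us imagine that we have a square image of 400×400 pixels. This image is represented as a 400×400 matrix, where each element holds the intensity value of one pixel. To apply PCA to a collection of such images, each matrix is usually flattened into a vector of 400 × 400 = 160,000 values.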
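In code, an image is just a 2-D array of intensities. Stacking the flattened images row by row gives the data matrix that PCA works on. This sketch assumes NumPy, and the random 8-bit images are a hypothetical stand-in for real photos.

```python
import numpy as np

HEIGHT, WIDTH = 400, 400

rng = np.random.default_rng(0)
# Hypothetical grayscale images: each pixel is an intensity in 0..255.
images = rng.integers(0, 256, size=(10, HEIGHT, WIDTH), dtype=np.uint8)

print(images[0].shape)        # one image is a 400 x 400 matrix
print(images[0][0, 0])        # intensity of the top-left pixel

# Flatten each matrix into one row vector, so the set of images becomes a 2-D data matrix.
data = images.reshape(len(images), -1).astype(np.float64)
print(data.shape)
```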

Now, if you have an image that needs to be recognized, a machine learning algorithm can compare it with known faces by measuring the differences between them.

The process performs much better if you apply PCA first and calculate the differences in the ‘transformed’ space, that is, between the images’ coordinates along the principal components.
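A well-known way to do this is the "eigenfaces" approach. It works in four steps:

1. Fit PCA on training faces.
2. Project every image onto the top components.
3. Measure differences between those coordinates.
4. Label a new image with the identity of its nearest neighbor.

The article does not name a specific method, so treat this as one illustrative option. The sketch assumes NumPy and tiny synthetic 8×8 "faces". The names `project` and `recognize`, the 5 kept components, and the noise level are all hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(1)
n_people, shots, n_pixels = 3, 5, 64           # tiny 8x8 "images" keep the sketch fast

# Hypothetical training set: each person is a prototype image plus noise.
prototypes = rng.uniform(0, 255, size=(n_people, n_pixels))
train = np.vstack([p + rng.normal(0, 10, size=(shots, n_pixels)) for p in prototypes])
labels = np.repeat(np.arange(n_people), shots)

# Fit PCA on the training images (SVD of the mean-centered data matrix).
mean_face = train.mean(axis=0)
_, _, vt = np.linalg.svd(train - mean_face, full_matrices=False)
components = vt[:5]                              # keep the top 5 principal components

def project(x):
    """Coordinates of image(s) x in the 'transformed' PCA space."""
    return (x - mean_face) @ components.T

train_coords = project(train)

def recognize(image):
    """Return the label of the nearest training image in PCA space."""
    dists = np.linalg.norm(train_coords - project(image), axis=1)
    return int(labels[np.argmin(dists)])

query = prototypes[2] + rng.normal(0, 10, size=n_pixels)   # a new "photo" of person 2
print(recognize(query))
```

Comparing 5 coordinates instead of 64 raw pixels is cheaper and filters out much of the pixel noise. Real systems use far larger images and more components.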

PCA is also important in fields other than face recognition, such as image compression, neuroscience, and computer graphics. If you are not sure what a transformation means, the next paragraph about eigenvalues and eigenvectors will give you a simple idea of it.

Eigenvalues and Eigenvectors

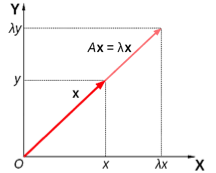

An eigenvector is expressed by a real nonzero eigenvalue, that points in a direction that is stretched by a transformation.

Figure 1: Interpupillary Distance (IPD)

On the other side, the eigenvalue is the factor by which it is stretched.

A negative eigenvalue means that the direction is reversed.
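A small numerical check makes these definitions concrete. For each eigenvector `v` of a matrix `A`, applying `A` gives the same vector scaled by its eigenvalue. This sketch assumes NumPy and uses an arbitrary 2×2 matrix chosen so that one eigenvalue is negative.

```python
import numpy as np

# An example transformation; its eigenvalues are 3 and -1.
A = np.array([[1.0, 2.0],
              [2.0, 1.0]])

eigvals, eigvecs = np.linalg.eig(A)
for lam, v in zip(eigvals, eigvecs.T):        # eigenvectors are the columns of eigvecs
    # A @ v points along v, scaled by lam; for lam = -1 the direction is reversed.
    print(lam, v, A @ v, np.allclose(A @ v, lam * v))
```

The eigenvector along (1, 1) is stretched by a factor of 3. The one along (1, -1) keeps its length but is flipped, because its eigenvalue is -1.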

A typical application of eigenvalues and eigenvectors is image compression.

Discarding the less significant eigenvectors (those with the smallest eigenvalues) and keeping only the most significant ones is an effective way to decrease the storage size of an image (image compression).
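The compression idea can be sketched by treating the image's rows as samples and keeping only the top `k` eigenvectors of their covariance. Instead of every pixel, we store the `k` coefficients per row, the `k` eigenvectors, and the column means. This is a hypothetical illustration assuming NumPy. The helper names `compress` and `decompress` and the synthetic smooth image are made up for the example.

```python
import numpy as np

def compress(img, k):
    """Keep only the k most significant eigenvectors of the column covariance."""
    mean = img.mean(axis=0)
    centered = img - mean
    cov = centered.T @ centered / (img.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)               # ascending order
    basis = eigvecs[:, np.argsort(eigvals)[::-1][:k]]    # top-k eigenvectors (W x k)
    coords = centered @ basis                            # H x k coefficients
    return coords, basis, mean

def decompress(coords, basis, mean):
    """Rebuild an approximation of the image from the kept eigenvectors."""
    return coords @ basis.T + mean

x = np.linspace(0, np.pi, 64)
# Hypothetical smooth 64x64 grayscale image built from two simple patterns.
img = 100 * np.outer(np.sin(x), np.cos(x)) + 50 * np.outer(x, np.ones(64))
coords, basis, mean = compress(img, k=2)
restored = decompress(coords, basis, mean)

stored = coords.size + basis.size + mean.size
print(img.size, stored, np.abs(img - restored).max())
```

This synthetic image has very little structure, so 2 eigenvectors rebuild it almost exactly from 320 stored numbers instead of 4096. Real photos need more eigenvectors, and the reconstruction becomes approximate.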

Dimensionality Reduction

This topic is definitely one of the most interesting ones. It is remarkable that there are algorithms able to reduce the number of features by choosing the most important ones that still represent the entire dataset. One advantage that authors point out is that these algorithms can improve the results of classification tasks.
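As one concrete example of dimensionality reduction, PCA can report how many components are needed to keep a chosen share of the variance. Strictly speaking, PCA builds new combined features rather than selecting a subset of the original ones. This sketch assumes NumPy and synthetic data. The function name `components_for_variance` and the 95% target are hypothetical choices.

```python
import numpy as np

def components_for_variance(X, target=0.95):
    """Smallest number of principal components whose cumulative explained variance reaches target."""
    X_centered = X - X.mean(axis=0)
    # Squared singular values of the centered data are proportional to each component's variance.
    s = np.linalg.svd(X_centered, compute_uv=False)
    ratios = s**2 / np.sum(s**2)
    return int(np.searchsorted(np.cumsum(ratios), target) + 1)

rng = np.random.default_rng(7)
latent = rng.normal(size=(300, 3))                         # 3 hidden factors
mixing = rng.normal(size=(3, 50))
X = latent @ mixing + 0.01 * rng.normal(size=(300, 50))    # 50 observed features
print(components_for_variance(X))
```

Although the data has 50 columns, they are driven by only 3 hidden factors, so a handful of components represents almost all of it.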

Vector Basis Transformation

To design a neural network that recognizes faces, you may need to transform all the pixels into a new basis. The new basis should describe specific fine details of the face (e.g., the interpupillary distance (IPD)).
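A change of basis rewrites the same pixel vector as coordinates along new basis vectors. With an orthonormal basis, the transformation is a matrix product and can be undone exactly. This sketch assumes NumPy. The random orthonormal basis is hypothetical; in practice the basis would be learned from data, for example as PCA components.

```python
import numpy as np

rng = np.random.default_rng(3)
n_pixels = 16                                   # a tiny 4x4 image, flattened

# Hypothetical orthonormal basis: the columns of Q from a QR decomposition.
Q, _ = np.linalg.qr(rng.normal(size=(n_pixels, n_pixels)))

pixels = rng.uniform(0, 255, size=n_pixels)     # image in the standard pixel basis
coords = Q.T @ pixels                           # same image expressed in the new basis
back = Q @ coords                               # transform back to pixel values

print(np.allclose(back, pixels))
```

Because `Q` is orthonormal, its inverse is simply its transpose, and the transformation preserves the vector's length.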

The machine learning process for neural networks aims to focus on the set of basis vectors related to the most important facial landmarks.
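To make "landmark-based details" concrete, the IPD can be computed directly from landmark coordinates. This sketch assumes a landmark detector has already produced pixel coordinates. The dictionary keys, the coordinate values, and the helper names `distance` and `landmark_features` are hypothetical. Dividing the IPD by another facial distance gives a feature that does not change when the image is scaled.

```python
import math

def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def landmark_features(lm):
    """Return (IPD in pixels, IPD relative to the eyes-to-chin distance)."""
    ipd = distance(lm["left_pupil"], lm["right_pupil"])
    mid_eyes = ((lm["left_pupil"][0] + lm["right_pupil"][0]) / 2,
                (lm["left_pupil"][1] + lm["right_pupil"][1]) / 2)
    return ipd, ipd / distance(mid_eyes, lm["chin"])

# Hypothetical landmark coordinates (x, y) in pixels.
face = {"left_pupil": (120.0, 200.0), "right_pupil": (180.0, 200.0), "chin": (150.0, 320.0)}
print(landmark_features(face))   # (60.0, 0.5)
```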

If you are not sure what exactly neural networks are, read the next paragraph.

Artificial Neural Network for Machine Learning

An artificial neural network is a set of machine learning algorithms that models data through a graph of mathematical units that simulate how neurons in the human nervous system work.
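Each artificial neuron computes a weighted sum of its inputs plus a bias, then applies an activation function. Neurons are connected in layers. The tiny network below shows this for the classic XOR problem, which a single neuron cannot solve. It is a minimal sketch assuming NumPy and a simple step activation. The weights are set by hand for illustration, whereas a real network would learn them from training data.

```python
import numpy as np

def step(z):
    """Threshold activation: the neuron 'fires' (1) when its input is positive."""
    return (z > 0).astype(float)

# Hand-set weights for a 2-2-1 network: hidden neuron 1 acts like OR, hidden neuron 2 like AND.
W_hidden = np.array([[1.0, 1.0],
                     [1.0, 1.0]])   # rows: inputs x1, x2; columns: hidden neurons
b_hidden = np.array([-0.5, -1.5])
W_out = np.array([1.0, -1.0])       # output fires for "OR and not AND", i.e. XOR
b_out = -0.5

def forward(x):
    """Propagate inputs through the hidden layer, then the output neuron."""
    hidden = step(x @ W_hidden + b_hidden)
    return step(hidden @ W_out + b_out)

inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(forward(inputs))   # [0. 1. 1. 0.]
```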
