In large language model (LLM) evaluation, a single error, such as a hallucination in a drug interaction summary, can cause real problems. It may lead to compliance issues or delays in clinical trials. In these cases, context is important because it explains why an output was flagged.

Consider this example: You review a model’s output on clinical trial data. You find a flagged entity, such as an incorrect dosage reference. Your annotation tool requires you to enter the reason in a short meta field. The full explanation, such as the check against PubChem data or the conflict with trial arm KLP-112, becomes hard to share. This information gets lost when you pass the annotation to fine-tuning teams or auditors, which wastes time. In pharmaceutical or healthcare operations, it may add hours of extra work per batch of evaluations.

Generative AI Lab 7.4 addresses this problem with two main features: long-form comments for annotations at the chunk level and tooltips for XML configurations. These features reduce common friction points, so evaluators spend less time on basic tasks and more on improving models for important decisions. Examples include error-free summaries in large labs like Roche or evaluations of secure prompts in operations like DocuSign.

If you work on LLM evaluation and comparison projects, such as tagging outputs like hallucinations or entities in generated text, these features will help. They make the process smoother. Below, we explain each feature with details on how it works in your workflow.

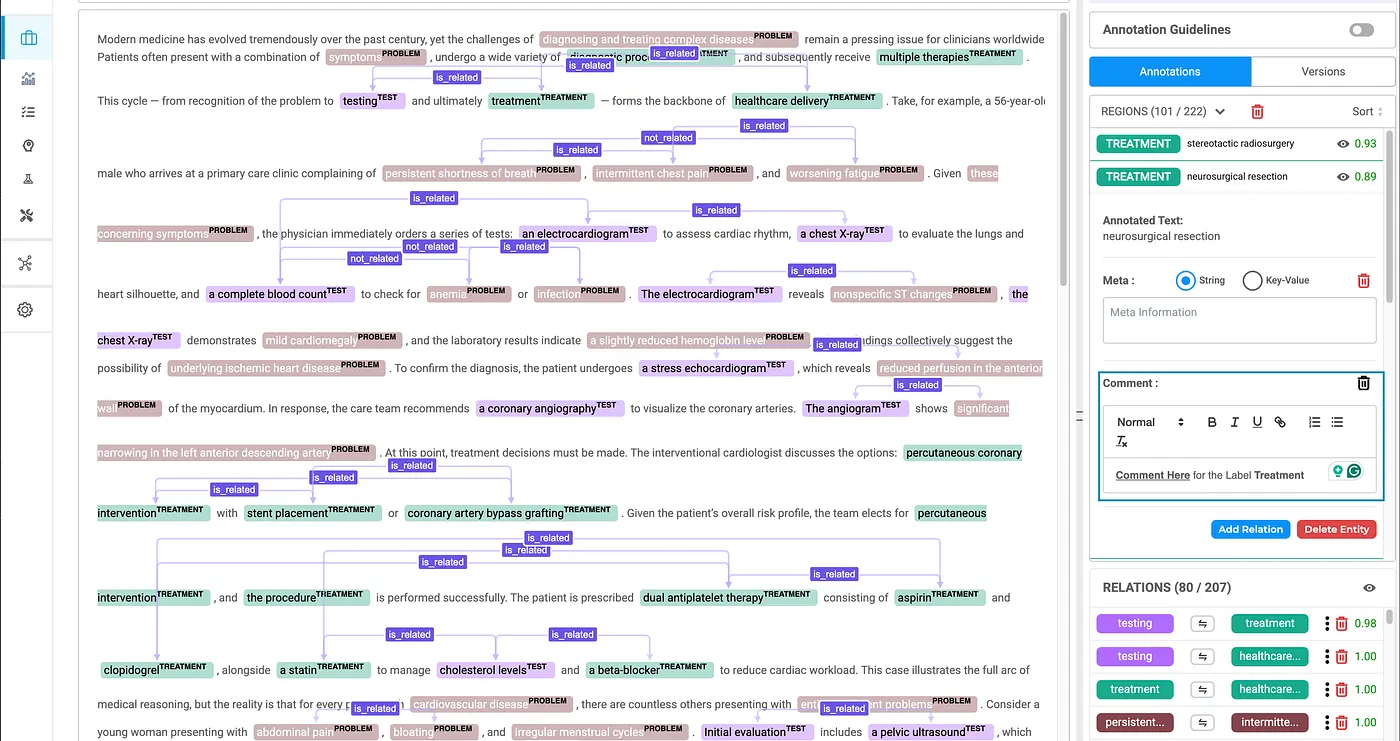

Add Detailed Comments to Annotations

Think of your annotation widget during an evaluation. You are reviewing a batch of 500 prompts that test an LLM’s understanding of social determinants in clinical notes. The model outputs a chunk: “Patient X’s housing instability correlates with 20% hypertension risk.” This seems correct at first. But when you check electronic health record (EHR) data, you flag it as a hallucination. The reason? The correlation is not supported by the data. Benchmarks like the i2b2 2010 de-identification sets show no such link. This could affect later risk models.

Before version 7.4, you would fit the explanation into a short meta field, perhaps 50 characters. Reviewers would need to guess or search chat logs. Now, Generative AI Lab 7.4 adds long-form comments at the chunk level. This is a separate field for each annotation span. It does not change the existing meta field.

This feature adds a layer for detailed notes. It fits the needs of evaluations that require explanations.
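To make the distinction between the short meta field and the new long-form comment concrete, here is a minimal Python sketch. The `ChunkAnnotation` class, its field names, and the `review_notes` helper are hypothetical and chosen only for illustration; they are not the export schema or API of Generative AI Lab.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ChunkAnnotation:
    """Hypothetical shape of one annotated span (not the product's schema)."""
    start: int
    end: int
    text: str
    label: str
    # Short key/value meta field, left unchanged by the new feature.
    meta: Dict[str, str] = field(default_factory=dict)
    # Long-form, chunk-level comment: a separate field per annotation span.
    comment: str = ""


def review_notes(annotations: List[ChunkAnnotation]) -> List[Tuple[str, str, str]]:
    """Collect (label, text, comment) for every span that carries a comment."""
    return [(a.label, a.text, a.comment) for a in annotations if a.comment.strip()]


output = "Patient X's housing instability correlates with 20% hypertension risk."
span = ChunkAnnotation(
    start=0,
    end=len(output),
    text=output,
    label="hallucination",
    meta={"reason": "unsupported"},
    comment="Checked against EHR data: the stated 20% correlation is not supported.",
)

for label, text, comment in review_notes([span]):
    print(f"[{label}] {text}\n  note: {comment}")
```

Keeping the comment in its own field means a reviewer or fine-tuning team can read the full reasoning without parsing or overloading the meta field.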

Benefits: From Simple Labels to Useful Data

This feature helps teams that review NER-style evaluations on LLM outputs. New users reduce their learning time by 30–50%, based on beta tests. They add context that shortens review time from days to hours. Experienced annotators complete tasks 2x faster. Comments change single labels into shared notes. One person’s note can help another improve the model.

Create XML Configurations with Help

Generative AI Lab 7.4 adds real-time tooltips for tags. These tooltips appear as you type. They provide clear descriptions and suggestions for each tag. This makes XML creation more accurate and efficient.

How It Works: Simple Guidance

Tooltips Appear as You Type: When you enter a tag, such as one for evaluations with options, the tooltip shows right away. It gives a description of the tag. It also lists expected attributes and suggests valid values. This covers all supported tags and attributes on the Customize Label page.

Easy to Use: The tooltips are built into the editor. No extra tools or settings are needed. They disappear when not needed but provide more details if you hover over them.
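The behavior described above (a description, the expected attributes, and suggested values for each tag) can be pictured as a lookup against a tag catalog. The Python sketch below is only an illustration of that idea: the catalog entries, attribute names, and the `suggest_tags` and `tooltip` functions are assumptions for the example, not the editor's actual implementation or its full tag list.

```python
from typing import Dict, List

# Illustrative catalog only. Tag names, attributes, and values are assumptions
# made for this sketch, not the complete set the editor supports.
TAG_CATALOG: Dict[str, dict] = {
    "Choices": {
        "description": "Group of options an evaluator can pick from.",
        "attributes": {"name": [], "toName": [], "choice": ["single", "multiple"]},
    },
    "Choice": {
        "description": "One selectable option inside a group of choices.",
        "attributes": {"value": []},
    },
    "Labels": {
        "description": "Set of labels that can be applied to text spans.",
        "attributes": {"name": [], "toName": []},
    },
}


def suggest_tags(typed: str) -> List[str]:
    """Return catalog tags whose names start with what the user has typed."""
    prefix = typed.lstrip("<").lower()
    return sorted(tag for tag in TAG_CATALOG if tag.lower().startswith(prefix))


def tooltip(tag: str) -> str:
    """Build tooltip text: description, then each attribute with valid values."""
    spec = TAG_CATALOG[tag]
    lines = [f"<{tag}>: {spec['description']}"]
    for attr, values in spec["attributes"].items():
        hint = " | ".join(values) if values else "free text"
        lines.append(f"  {attr}: {hint}")
    return "\n".join(lines)


for tag in suggest_tags("<Cho"):
    print(tooltip(tag))
```

Driving the tooltips from a single catalog like this is one way the descriptions and the attribute suggestions could stay consistent with each other.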

Benefits: From Start to Advanced Use

For new users, the tooltips make tag meanings easier to understand, which reduces learning time and setup errors. For experienced users, they speed up XML configuration with real-time guidance and attribute suggestions.

Share your comments below: How do these features fit your work? We want to hear from you, because better evaluations come from shared experiences.

Originally published at https://nlp.johnsnowlabs.com