In the early spring of 2023, a mid-sized health system on the West Coast faced a pivotal decision. The CIO, Maria, had just returned from a conference buzzing with excitement over generative AI in healthcare. Vendors showcased demos with chatbots triaging patients, language models drafting clinical notes, and systems auto-coding medical charts. Maria saw promise, but also risk.

Her executive team had questions: What if the model hallucinates? Can we audit decisions? How do we ensure it doesn’t amplify bias in sensitive populations? Buzzwords such as compliance, fairness, and explainability were everywhere, but few vendors offered clarity. Maria knew one thing: moving forward meant deploying AI that could be trusted.

The stakes of AI in healthcare

AI in medicine doesn’t operate in a vacuum. Predictions affect lives. A biased model misclassifying symptoms could delay care. An opaque model denying coverage could trigger legal fallout. A non-compliant system could expose the institution to HIPAA violations or fines under GDPR. These aren’t theoretical concerns; they carry operational, reputational, and ethical consequences.

Studies have shown that large language models (LLMs) can propagate racial and gender biases present in their training data, leading to inequitable healthcare outcomes. Earlier predictive tools showed the same pattern: a widely used risk-prediction algorithm was found to assign lower risk scores to Black patients than to white patients with similar health conditions, resulting in fewer referrals for additional care for Black patients [1].

Similarly, clinical NLP systems trained without diverse data sets have underperformed in underrepresented patient groups, increasing the risk of misdiagnosis.

Maria’s team needed a new framework, something rigorous enough to earn physician trust, compliant enough for legal, and transparent enough for audit committees.

A turning point: the pilot that redefined validation

LangTest, an open-source library, is not another metrics dashboard; it is a framework purpose-built to test language models for bias, fairness, robustness, and regulatory readiness. Developed with clinical NLP in mind, LangTest helped Maria’s data science team simulate edge cases: Would the model perform equally well across different ethnic names? Would it understand negations in clinical sentences? Could it handle de-identification without leaking protected health information?
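To make these edge-case questions concrete, here is a minimal, self-contained sketch of two such checks: name invariance and negation handling. It does not use LangTest's API. The models (`toy_model`, `keyword_only_model`), helper functions, names, and clinical sentences are all hypothetical and exist only to illustrate the idea of perturbing an input and checking whether the prediction changes when it should (or should not).

```python
from typing import Callable, List, Tuple

# Hypothetical negation cues for the toy model below (illustration only).
NEGATION_CUES = {"no", "denies", "without"}


def toy_model(note: str) -> bool:
    """Toy classifier: True if 'chest pain' is mentioned and not negated."""
    text = note.lower()
    idx = text.find("chest pain")
    if idx == -1:
        return False
    preceding = text[:idx].replace(",", " ").replace(".", " ").split()
    return not any(word in NEGATION_CUES for word in preceding)


def keyword_only_model(note: str) -> bool:
    """Deliberately flawed toy classifier that ignores negation."""
    return "chest pain" in note.lower()


def name_invariance_failures(
    model: Callable[[str], bool], template: str, names: List[str]
) -> List[str]:
    """Return names whose prediction differs from the first name's prediction."""
    baseline = model(template.format(name=names[0]))
    return [n for n in names[1:] if model(template.format(name=n)) != baseline]


def negation_failures(
    model: Callable[[str], bool], pairs: List[Tuple[str, str]]
) -> List[Tuple[str, str]]:
    """Return (affirmed, negated) pairs the model does not separate correctly."""
    return [(a, n) for a, n in pairs if not (model(a) and not model(n))]


# Hypothetical test data: only the name changes between the templated notes.
NAMES = ["Emily Walsh", "Lakisha Washington", "Jamal Robinson", "Mei Chen"]
TEMPLATE = "{name} reports chest pain since this morning."
PAIRS = [
    ("Patient reports chest pain.", "Patient denies chest pain."),
    ("Chest pain on exertion.", "No chest pain on exertion."),
]

if __name__ == "__main__":
    for model in (toy_model, keyword_only_model):
        print(model.__name__)
        print("  name failures:", name_invariance_failures(model, TEMPLATE, NAMES))
        print("  negation failures:", len(negation_failures(model, PAIRS)))
```

A real evaluation would run many more templates, names, and negation patterns against the actual model, but the structure is the same: generate controlled variants, run the model, and report where behavior diverges from what is expected.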

With LangTest, they discovered that their chosen open-source model underperformed on negation detection and produced false positives on names associated with Black patients. That single insight prevented a costly deployment misstep.

Instead of discarding the technology, the team iterated. They introduced domain-adaptive fine-tuning and augmented the training data with underrepresented clinical expressions. Bias scores improved. Compliance benchmarks were logged. Transparency became part of the model’s DNA.

This aligns with findings in recent clinical NLP evaluations showing that negation detection and demographic fairness remain significant bottlenecks for general-purpose LLMs in medical applications.

Compliance isn’t a checkbox, it’s a culture

While developers refined the model, the compliance team began mapping risks to regulatory frameworks. Could decisions be explained to auditors? Was every prediction traceable to an input? Were logs immutable?

Here, Maria turned to the Generative AI Lab by John Snow Labs. The platform offered a no-code interface for human-in-the-loop validation, ensuring that clinicians could not only annotate data but also review model outputs in context. Every annotation was versioned, every decision logged.

This approach reflects best practices proposed in healthcare AI governance frameworks, which stress the importance of human oversight and provenance logging for ethical deployment.
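One common way to make a decision log tamper-evident is to chain entries by hash, so that altering any past record invalidates everything after it. The sketch below illustrates that general idea only. It is not a description of how Generative AI Lab stores its logs, and the `AuditLog` class, its field names, and the sample entries are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry
PAYLOAD_KEYS = ("input", "output", "reviewer")


def _digest(prev_hash: str, payload: Dict[str, str]) -> str:
    """Hash the previous entry's hash together with this entry's content."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only log linking each prediction to its input."""

    entries: List[Dict[str, str]] = field(default_factory=list)

    def append(self, model_input: str, model_output: str, reviewer: str) -> Dict[str, str]:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        payload = {"input": model_input, "output": model_output, "reviewer": reviewer}
        entry = {**payload, "prev_hash": prev_hash, "hash": _digest(prev_hash, payload)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            payload = {key: entry[key] for key in PAYLOAD_KEYS}
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, payload):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("Patient denies chest pain.", "no emergency flag", "clinician_a")
    log.append("Severe chest pain, radiating.", "emergency flag", "clinician_b")
    print("intact:", log.verify())
    log.entries[0]["output"] = "emergency flag"  # simulate tampering
    print("after tampering:", log.verify())
```

Hash chaining only detects tampering; it does not prevent it. A production setup would typically pair a scheme like this with write-once storage and access controls.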

From model to mission

Six months later, the system went live. Their chatbot, now powered by a fine-tuned, bias-mitigated, audit-ready model, was fielding thousands of patient questions weekly. It triaged, flagged emergencies, and summarized symptoms, all under human supervision. Importantly, when a skeptical CMS auditor arrived, Maria’s team could walk through every compliance step, every test run in LangTest, and every human validation.

The rollout was not only successful; it also became a model for AI governance in her organization.

Lessons from the journey

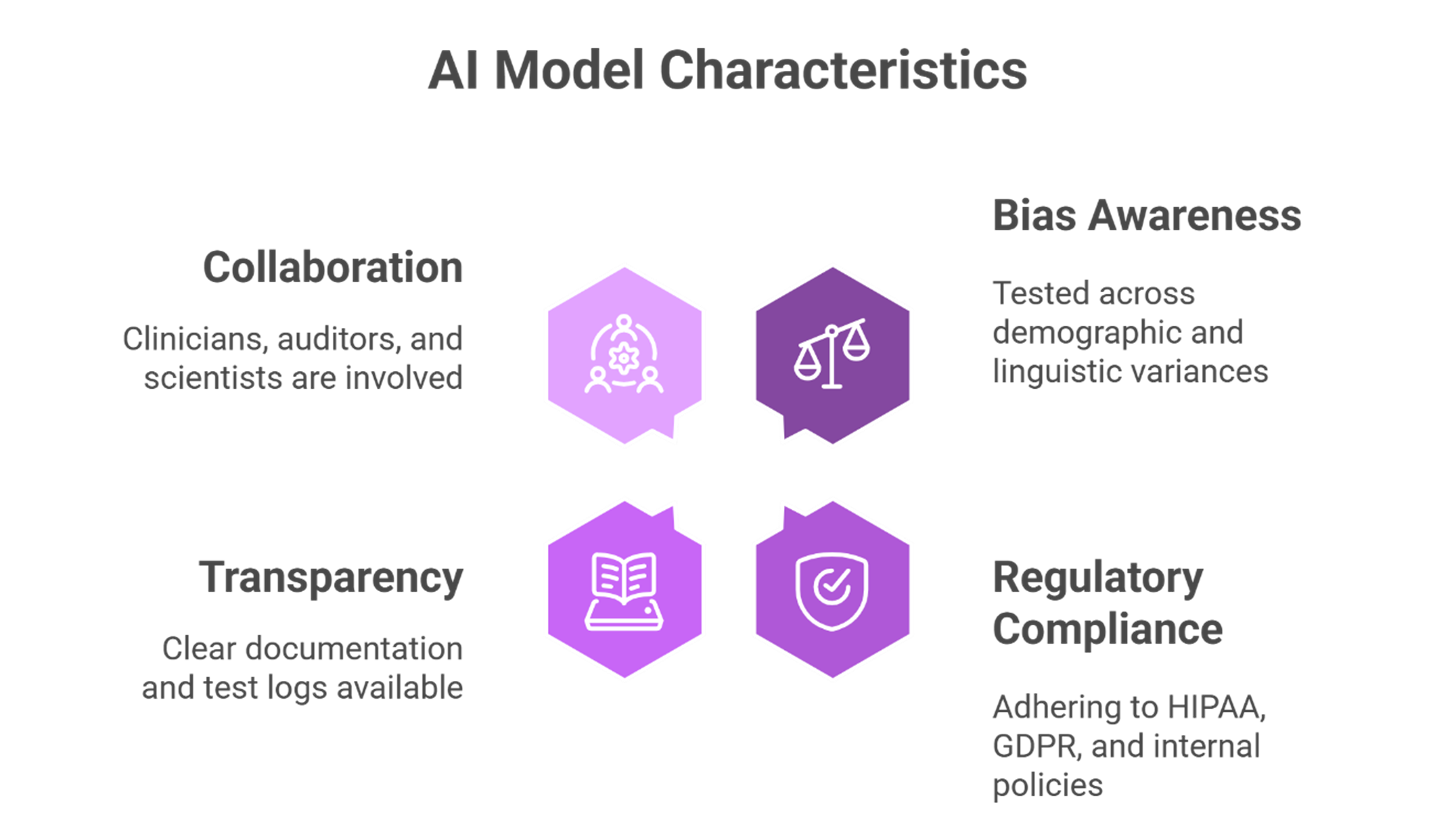

Maria’s story reflects a growing consensus: the future of healthcare AI isn’t just about capability; it’s about responsibility. AI systems must be:

- Bias-aware, tested across demographic and linguistic variances.

- Compliant, adhering to HIPAA, GDPR, and internal governance policies.

- Transparent, with clear documentation and test logs.

- Collaborative, inviting clinicians, auditors, and data scientists into the loop.

And this future doesn’t have to be distant. With frameworks like LangTest and platforms like the Generative AI Lab, health systems today can begin building AI systems that aren’t just performant but principled.

Invitation to Explore

If your organization is navigating similar questions, we invite you to explore how John Snow Labs’ Healthcare NLP, LangTest, and Generative AI Lab can support a trustworthy deployment. Let’s ensure that innovation and integrity grow hand in hand.

Explore LangTest documentation →

See how Generative AI Lab enhances auditability →

References:

[1] Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447–453. https://www.science.org/doi/10.1126/science.aax2342

[2] Moramarco, F., et al. (2022). Negation detection for clinical NLP: Evaluating performance across datasets and model architectures. Journal of Biomedical Informatics, 129, 104062. https://www.sciencedirect.com/science/article/pii/S1532046422001309