What does an ethical framework for clinical AI mean?

An ethical framework for clinical AI is a structured set of principles, guidelines, and safeguards designed to ensure that generative AI is used responsibly in patient care. These frameworks help healthcare organizations balance innovation with safety, transparency, and fairness while protecting patient rights and building trust.

At John Snow Labs, ethics and compliance are not afterthoughts; they are built into every product, from Healthcare NLP to the Generative AI Lab and the Medical Reasoning LLM. Each solution supports HIPAA and GDPR compliance and enables human-in-the-loop oversight and auditability.

Why are ethical frameworks necessary for generative AI in healthcare?

Generative AI has immense potential to improve clinical documentation, diagnostics, and patient engagement. But without proper governance, it can introduce risks such as biased outputs, hallucinations, privacy violations, or over-reliance on machine-generated recommendations.

Ethical frameworks reduce these risks by ensuring transparency in how models are trained and deployed, fairness in how data is curated and used, accountability through audit trails and human oversight, and safety through rigorous validation and explainability measures. The Generative AI Lab supports these needs with built-in model governance, human-in-the-loop (HITL) validation, and compliance tracking.

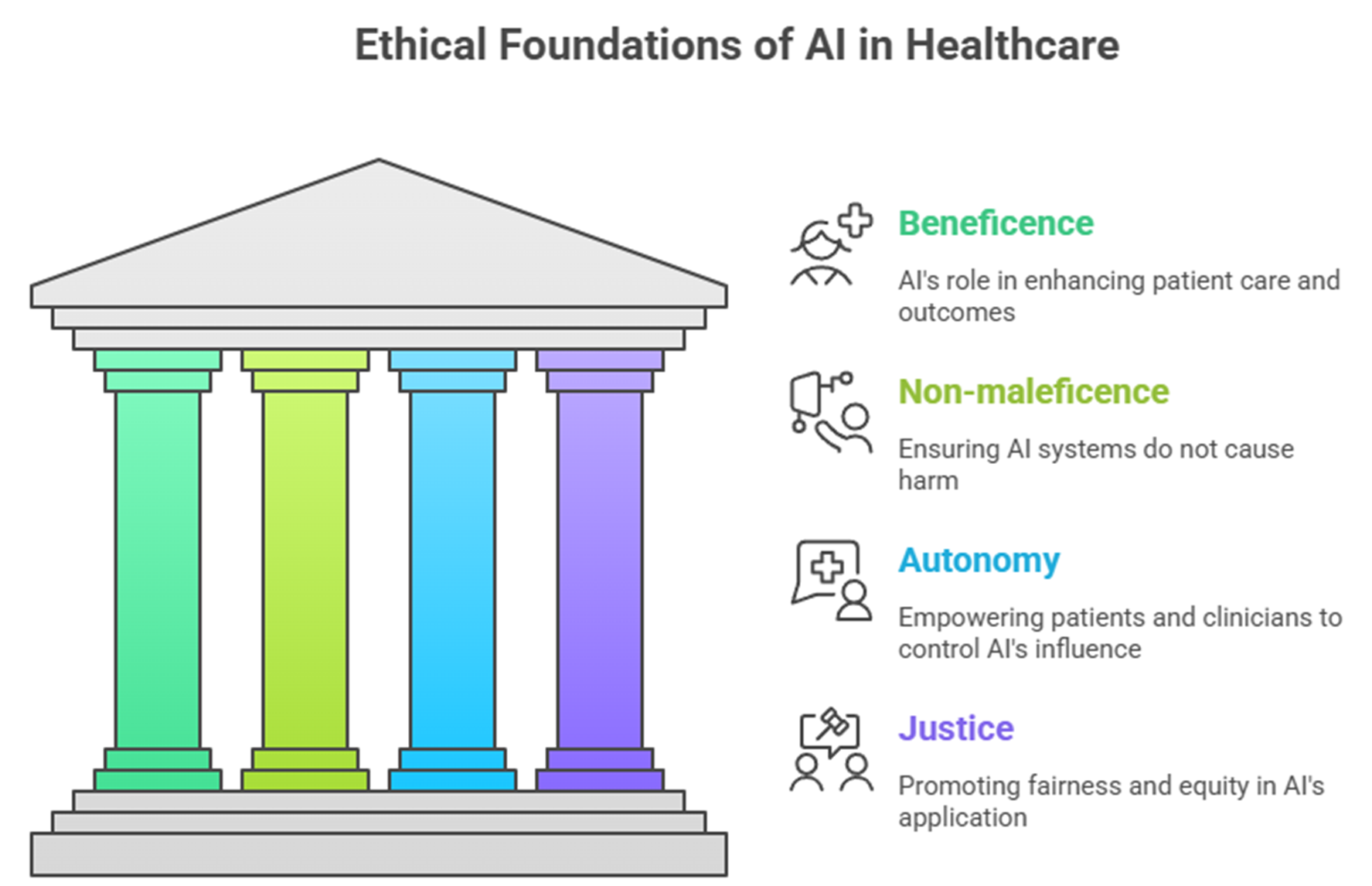

How can ethical principles guide clinical AI use?

The core principles of medical ethics (beneficence, non-maleficence, autonomy, and justice) apply directly to AI. Beneficence requires that AI genuinely improve patient outcomes, for example by reducing documentation time or supporting accurate diagnoses. Non-maleficence means AI systems must be carefully tested to avoid harmful recommendations. Autonomy means that patients and clinicians both understand the role of AI in care and retain the ability to question or override its outputs. Justice demands that AI systems perform equitably across demographic groups, medical conditions, and healthcare settings.

John Snow Labs operationalizes these principles through tools such as assertion detection models, which determine whether a condition mentioned in a medical note is present, absent, possible, or hypothetical, reducing ambiguity in clinical decision-making.

What safeguards ensure ethical deployment of clinical AI?

Strong safeguards make the difference between risky AI adoption and trustworthy deployment. Human-in-the-loop oversight ensures that clinicians and compliance officers validate outputs before they are integrated into care. De-identification pipelines protect privacy by removing protected health information (PHI) at scale, while traceability measures allow organizations to track every model version, dataset, and validation cycle. Equally important is explainability, which ensures that AI-generated insights can be understood and justified.

John Snow Labs addresses these requirements through its compliance and auditability features, giving healthcare organizations confidence that their generative AI deployments are innovative, safe, and responsible.
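As a rough illustration of how human-in-the-loop review and traceability fit together, the Python sketch below gates each generated output on a reviewer decision and logs the model version, dataset version, reviewer, and timestamp for every decision. The names `ReviewQueue` and `AuditRecord`, and the version strings, are hypothetical; this is not a John Snow Labs or Generative AI Lab API, only a minimal sketch assuming outputs arrive one at a time for sign-off.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class AuditRecord:
    """One traceable entry: which model and dataset produced an output, and who reviewed it."""
    output_id: str
    model_version: str
    dataset_version: str
    reviewer: str
    approved: bool
    timestamp: str


@dataclass
class ReviewQueue:
    """Hypothetical HITL gate: no output is released without an explicit reviewer decision."""
    audit_log: List[AuditRecord] = field(default_factory=list)
    released: List[str] = field(default_factory=list)

    def review(self, output_id: str, text: str, model_version: str,
               dataset_version: str, reviewer: str, approved: bool) -> Optional[str]:
        # Every decision, approval or rejection, is appended to the audit log.
        self.audit_log.append(AuditRecord(
            output_id=output_id,
            model_version=model_version,
            dataset_version=dataset_version,
            reviewer=reviewer,
            approved=approved,
            timestamp=datetime.now(timezone.utc).isoformat(),
        ))
        if approved:
            self.released.append(text)
            return text
        return None  # rejected outputs never enter the care workflow


# Illustrative usage with made-up identifiers
queue = ReviewQueue()
queue.review("note-001", "Draft summary A", "summarizer-v1.2", "train-2024-03", "dr_lee", approved=True)
queue.review("note-002", "Draft summary B", "summarizer-v1.2", "train-2024-03", "dr_lee", approved=False)
print(len(queue.released), len(queue.audit_log))
```

Logging rejections as well as approvals is the design choice that matters here: an audit trail that records only released outputs cannot show how often reviewers overrode the model.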

FAQs

How can bias in generative AI be addressed?

The impact of bias can be reduced by curating diverse training datasets, testing model performance across subpopulations, and continuously monitoring fairness metrics. John Snow Labs models are built exclusively on healthcare data, improving domain relevance and fairness.
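To make testing across subpopulations concrete, the sketch below computes accuracy separately for each subgroup and reports the largest gap between groups. An accuracy gap is only one simple fairness check chosen for illustration; the subgroup labels, toy records, and the 0.10 threshold are hypothetical, and a real evaluation would use clinically relevant metrics (such as sensitivity) on held-out data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records are (subgroup, true_label, predicted_label); returns accuracy per subgroup."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


def max_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best- and worst-performing subgroups."""
    return max(per_group.values()) - min(per_group.values())


# Toy data for illustration only, not real results
records = [
    ("age<65", 1, 1), ("age<65", 0, 0), ("age<65", 1, 1), ("age<65", 0, 1),
    ("age>=65", 1, 0), ("age>=65", 0, 0), ("age>=65", 1, 1), ("age>=65", 1, 0),
]
per_group = accuracy_by_group(records)
gap = max_gap(per_group)
THRESHOLD = 0.10  # hypothetical tolerance; would be set per use case
print(per_group, round(gap, 2), "flag for review" if gap > THRESHOLD else "ok")
```

Running the same check on every new model version, rather than once before launch, is what turns it into the ongoing fairness monitoring described above.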

Are clinicians still accountable when using AI?

Yes. Ethical frameworks emphasize that AI should augment, not replace, clinical judgment. Human-in-the-loop (HITL) validation ensures that control remains with healthcare professionals.

What makes generative AI unique in ethical challenges?

Generative models can create entirely new content, which increases the risk of hallucinations, misinformation, and unclear provenance. Safeguards such as versioning, traceability, and explainability are therefore critical.

Can ethical frameworks slow down AI innovation?

Not necessarily. By embedding ethics into development pipelines, organizations can adopt AI faster and with greater confidence, because they reduce the risk of regulatory or reputational setbacks.

Supplementary Q&A

How do regulatory guidelines interact with ethical frameworks?

Regulations like HIPAA or GDPR set minimum compliance standards, while ethical frameworks go further, promoting fairness, transparency, and human dignity in AI use. Together, they form a comprehensive governance structure.

Can ethical frameworks scale across multiple healthcare settings?

Yes. With a centralized platform such as the Generative AI Lab, governance policies can be codified into workflows, keeping practices consistent across use cases, teams, and regions.

What role do patients play in ethical AI governance?

Patients benefit when organizations adopt transparent consent practices, explain how AI is used in their care, and provide opt-out mechanisms. Ethical frameworks ensure patients remain central to AI adoption.