What is consistent obfuscation and why does it matter in healthcare?

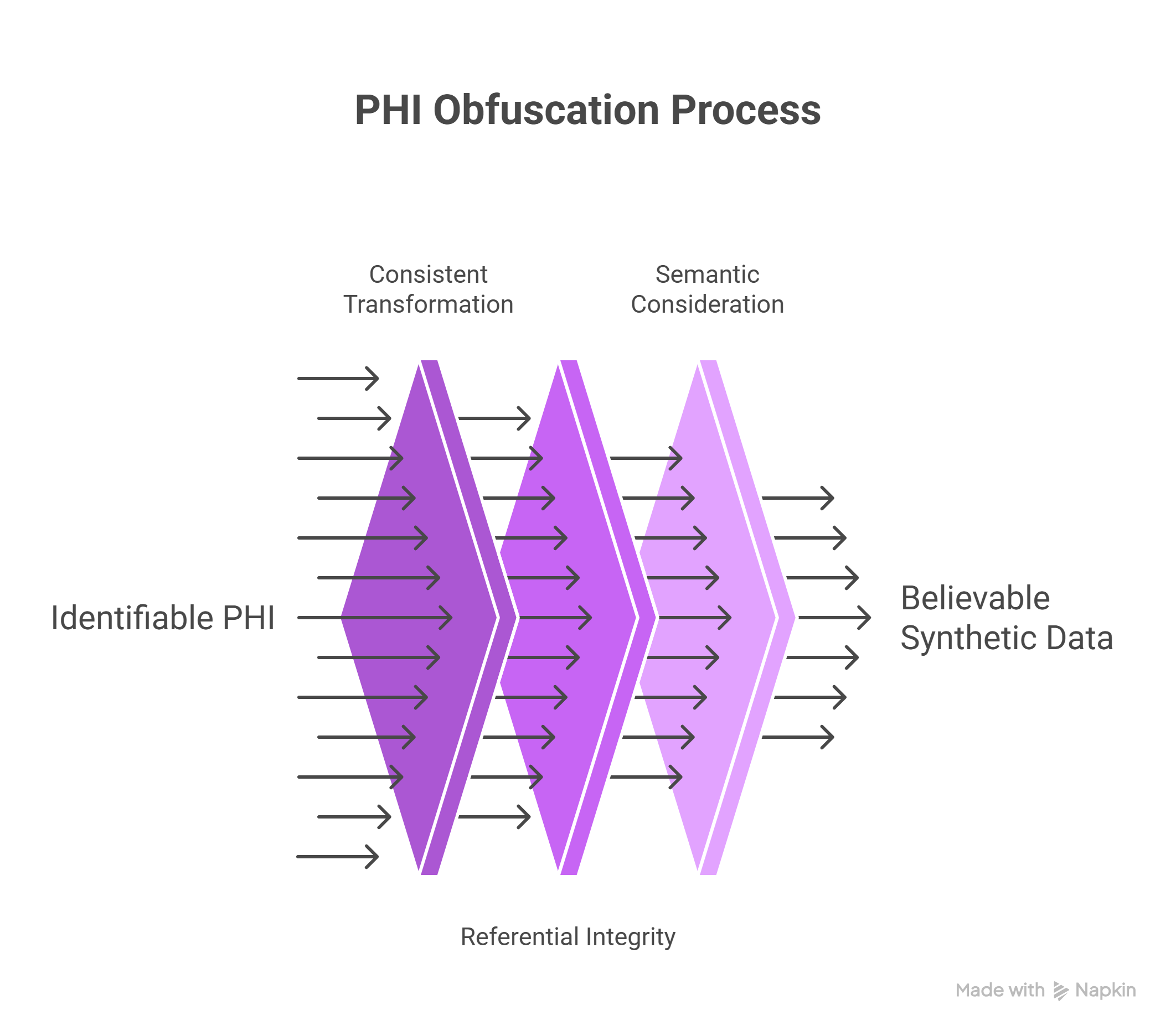

Consistent obfuscation ensures that each instance of PHI is transformed the same way across all documents and data modalities. This method replaces identifiable elements like names, addresses, or phone numbers with synthetic values that remain constant within a patient’s timeline.

For example, if “John Smith” is replaced with “Michael Doe” in one medical note, every future reference must also read “Michael Doe.” This level of repeatability ensures referential integrity, a critical factor for clinical analytics, machine learning, and regulatory audits.

John Snow Labs’ obfuscation engine doesn’t just swap out values randomly. It considers gender, age ranges, date timelines, and medical semantics. This creates synthetic data that is both believable and analytically robust.

What is deterministic tokenization and how does it support compliance?

Tokenization is the process of converting sensitive identifiers into irreversible cryptographic tokens. Deterministic tokenization means that the same input, such as a medical record number or patient ID, always produces the same output token.

These tokens are one-way and cannot be decrypted. Yet they maintain analytical utility by consistently linking data across different systems and formats. This balance between security and usability is essential in regulated healthcare environments.

For instance, a patient’s tokenized ID can be used to link lab results, physician notes, and imaging reports, without ever exposing the underlying identifier. This supports secure, traceable, and reproducible analytics.

How do obfuscation and tokenization improve AI workflows?

- Maintain patient-level continuity without identity risk

- Enable secure linking across data silos and timeframes

- Reduce the burden of manual redaction

- Preserve data realism and usability for model training

- Ensure compliance with HIPAA and GDPR

The John Snow Labs pipeline combines both obfuscation and tokenization, giving healthcare AI teams the tools they need to build scalable, privacy-compliant solutions.

How to learn more?

Dr. Youssef Mellah covered these methods in a recent webinar on regulatory-grade de-identification. He detailed the technology behind tokenization, showed real-world case studies, and explained how these methods help organizations move from reactive compliance to proactive innovation.

👉 Access the full webinar replay here

FAQs

What is consistent obfuscation in healthcare data?

It replaces PHI with realistic synthetic values that remain the same across documents, maintaining data utility for longitudinal analysis while protecting identity.

Is tokenization reversible?

No. The tokenization used is deterministic and one-way. It allows linkage without ever revealing the original identifier.

Can these tokens be used for audits or compliance checks?

Yes. Since each patient ID consistently maps to the same token, internal audits can track records safely without accessing real PHI.

What types of identifiers can be tokenized?

Common examples include patient IDs, social security numbers, phone numbers, and composite identifiers like lab accession numbers or encounter codes.

How is this different from basic redaction?

Redaction removes data entirely. Obfuscation and tokenization preserve structure and linkage, making the data usable for research and machine learning.

Supplementary Q&A

Does tokenization support federated learning or cross-site data aggregation?

Yes. When the same deterministic algorithm is used across sites, tokenization enables secure and private cross-site data aggregation, which is vital for federated AI and multi-institution studies.

Is consistent obfuscation compliant with international regulations?

Yes. John Snow Labs’ system meets HIPAA Safe Harbor and Expert Determination standards, as well as GDPR anonymization guidelines. This allows cross-border collaborations while remaining compliant.