When GE Healthcare’s EDISON platform needed to transform radiology report processing for pharmaceutical partners, the engineering challenge extended far beyond extracting clinical findings from unstructured text. The real problem emerged in the gap between a radiologist typing “recommend follow-up CT in 6 months” and a patient actually receiving that scan. Between the recommendation buried in a narrative report and the scheduled appointment lies a chasm in which anywhere from 1% to 35.7% of patients disappear entirely.*

This is not a workflow problem that manual oversight can solve. A multi-hospital study tracking over 1.5 million radiology exams found follow-up adherence rates ranging from approximately 11% to 52% depending on imaging modality and institution.* Even in health systems with sophisticated electronic health records, the fundamental architecture creates failure: critical information lives in free-text reports, scheduling requires manual interpretation, and no systematic mechanism tracks whether recommendations become actions.

This pattern is consistent. Missed follow-ups are not edge cases or exceptions. They are the predictable outcome of workflows that rely on human memory, manual chart review, and disconnected systems to close the loop between radiologist recommendation and patient care.

Why traditional workflows cannot scale follow-up tracking

The follow-up failure problem has multiple structural causes, all of which resist conventional solutions:

Unstructured radiology reports make systematic tracking impossible. Radiologists document follow-up recommendations in narrative text with enormous variation: “Repeat imaging in 6-12 months,” “recommend follow-up chest CT,” “correlate with clinical findings and consider MRI if symptoms persist.” Each phrasing requires human interpretation to extract the actionable recommendation, timing, modality, and urgency. Health systems that generate tens of thousands of radiology reports monthly cannot manually audit every report for follow-up recommendations.

Fragmented communication creates handoff failures. The radiologist issues a recommendation. The referring physician must see it, interpret it, and either order the follow-up or communicate it to the patient. The patient must schedule the appointment. The scheduling staff must understand the clinical context. Each handoff introduces failure risk. Studies confirm that missed follow-up imaging has been directly linked to delayed diagnoses, including missed cancers and disease progression.*

Patient mobility and external imaging centers break tracking entirely. Patients change insurance, move to different health systems, or obtain follow-up imaging at outside facilities. Without data sharing across organizations, tracking systems lose visibility. Even internally, patients who miss appointments often fall off tracking lists unless care coordinators manually follow up.

Resource constraints prevent systematic chart audits. Radiology departments already operate under significant workload pressure. Imaging volumes continue to rise, and radiologist burnout is well-documented.* Asking overloaded clinicians and administrators to manually track thousands of follow-up recommendations is not sustainable.

The workflow failures are predictable. What has changed is that specialized healthcare artificial intelligence now provides the technical architecture to systematically extract, track, and act on follow-up recommendations at enterprise scale.

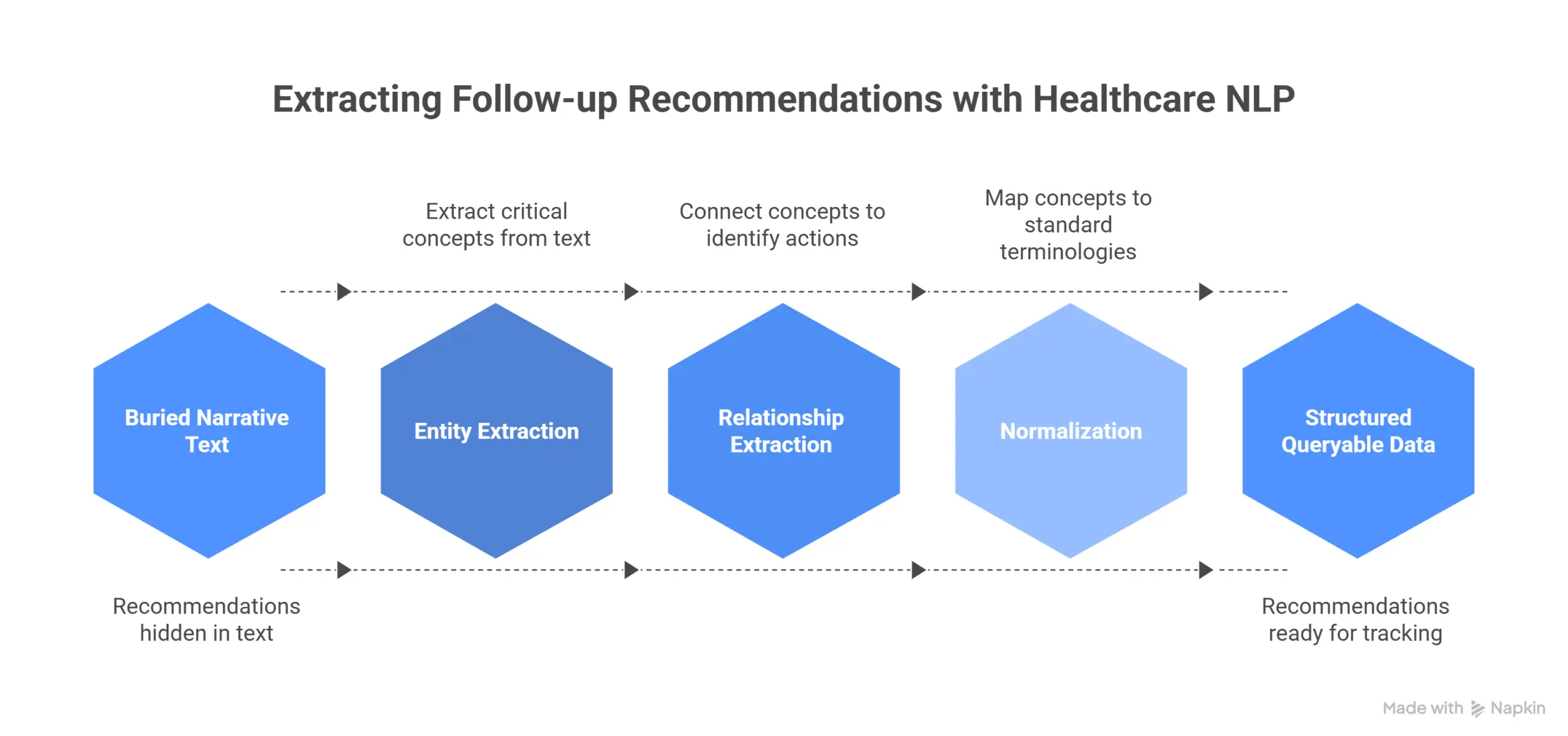

How production Healthcare NLP architectures extract follow-up recommendations

GE Healthcare’s collaboration with John Snow Labs demonstrates the extraction architecture in practice. Their EDISON platform, presented by Rajesh Jacob in a recent AI Summit Session, provides customizable, modular software for HIPAA-compliant healthcare solutions, aggregating and analyzing data from connected medical devices, sensors, and health information systems. For radiology report processing, the challenge was building an end-to-end solution that could extract and link all critical concepts across diverse report formats.

The implemented pipeline extracts and connects: dates, imaging tests, test techniques, risk factors, body parts, measurements, and general findings. More importantly, it links these concepts to identify the type and technique of the procedure, which body part was examined, when the procedure was performed, and what conclusions require follow-up action. This moves follow-up recommendations from buried narrative text into structured, queryable data that tracking systems can process.
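To make the idea of "linking" concrete, here is a minimal sketch of what linked extraction output could look like once it leaves the pipeline. It is not the EDISON or John Snow Labs implementation: the `Entity` and `Relation` classes, the label and relation names, and the `annotate` helper (which simply locates hand-picked phrases in place of a trained model) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    label: str  # hypothetical label names, e.g. "ImagingTest", "BodyPart"
    text: str
    start: int  # character offsets into the report
    end: int


@dataclass(frozen=True)
class Relation:
    kind: str  # hypothetical relation names, e.g. "performed_on"
    head: Entity
    tail: Entity


def annotate(report: str, label: str, phrase: str) -> Entity:
    """Stand-in for a model: locate a hand-picked phrase in the report."""
    start = report.index(phrase)
    return Entity(label, phrase, start, start + len(phrase))


def flatten(head: Entity, relations: list[Relation]) -> dict[str, str]:
    """Turn the relations attached to one entity into a single queryable row."""
    row = {head.label: head.text}
    for rel in relations:
        if rel.head == head:
            row[rel.kind] = rel.tail.text
    return row


report = (
    "CT chest with contrast performed 2024-03-02. "
    "6 mm nodule in the right upper lobe. "
    "Recommend follow-up CT in 6 months."
)

exam = annotate(report, "ImagingTest", "CT chest")
relations = [
    Relation("technique", exam, annotate(report, "Technique", "with contrast")),
    Relation("performed_on", exam, annotate(report, "Date", "2024-03-02")),
    Relation("body_part", exam, annotate(report, "BodyPart", "right upper lobe")),
    Relation("finding", exam, annotate(report, "Finding", "6 mm nodule")),
]

print(flatten(exam, relations))
```

The point of the flattened row is that a tracking system can filter on `body_part` or `performed_on` directly instead of re-reading the narrative.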

MiBA’s oncology implementation, as explained by Scott Newman, validates this approach at significantly larger scale. Processing 1.4 million physician notes and approximately 1 million PDF reports and scans, including radiology and pathology documents, their comprehensive natural language processing pipeline identified 113.6 million entities, averaging 80 entities per note. Entity extraction achieved a combined F1-score of approximately 93%. For relationship extraction across 25 distinct relationship types, the system achieved an 88% F1-score, averaging 22 relationships per note.

These are not research prototypes. These are production systems processing millions of clinical documents, extracting temporal relationships, anatomical locations, and recommended actions with regulatory-grade accuracy.

The technical architecture that enables this performance differs fundamentally from general-purpose artificial intelligence approaches. Specialized Healthcare NLP uses over 2,800 pre-trained clinical models for entity extraction, relation extraction, and assertion detection. These models normalize to standard medical terminologies including SNOMED CT, ICD-10, LOINC, and RxNorm. When MiBA’s pipeline extracts “follow-up MRI of left temporal lobe in 3 months,” it captures not just the recommendation but the anatomical site mapped to standardized codes, the modality, and the temporal relationship, all structured data that downstream tracking systems require.

Systematic benchmarking demonstrates why specialized healthcare NLP outperforms general-purpose large language models on clinical text. A study assessing 48 medical expert-annotated clinical documents showed Healthcare NLP achieving a 96% F1-score for protected health information detection, significantly outperforming Azure Health Data Services at 91%, AWS Comprehend Medical at 83%, and GPT-4o at 79%.* The 17-percentage-point gap between specialized healthcare NLP and GPT-4o is not academic. Although the benchmark measures protected health information detection rather than follow-up extraction, it indicates which systems are likely to extract clinical recommendations reliably, without dangerous misses.

The CLEVER study’s blind physician evaluation reinforced this performance gap. Medical doctors preferred specialized healthcare natural language processing 45% to 92% more often than GPT-4o on the dimensions of factuality, clinical relevance, and conciseness across 500 novel clinical test cases.* For radiology follow-up extraction, where missing a recommendation can delay cancer diagnosis, this precision advantage is not negotiable.

The four-layer architecture for systematic follow-up tracking

Preventing lost patients requires more than accurate extraction. It requires end-to-end architecture that connects extraction, tracking, clinical decision support, and human oversight. Across the implementations we have seen, four architectural layers emerge as essential:

Layer 1: Real-time extraction and structured registration

Every radiology report is processed at or near the time of completion. Natural language processing extracts follow-up recommendations including imaging modality, anatomical site, timing interval, and clinical justification. This data populates a structured follow-up registry, not as free text, but as queryable, timestamped records with standardized anatomical and procedural codes. Ohio State University demonstrates that this kind of pipeline is portable across environments: their research infrastructure processes over 200 million clinical notes using a configuration that runs unchanged on both Azure Databricks and on-premise environments, with consistent logging for monitoring and compliance.*
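As a rough illustration of what one registry entry might hold, the sketch below models a follow-up record and derives its due date from the recommended interval. The `FollowUpRecord` fields, the `add_months` helper, and the sample identifier are assumptions for this example; a real registry would also carry the standardized codes mentioned above.

```python
import calendar
from dataclasses import dataclass
from datetime import date


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    index = start.year * 12 + (start.month - 1) + months
    year, month = divmod(index, 12)
    month += 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


@dataclass
class FollowUpRecord:
    report_id: str
    modality: str
    anatomical_site: str
    interval_months: int
    report_date: date
    justification: str

    @property
    def due_date(self) -> date:
        """Date by which the recommended exam should be completed."""
        return add_months(self.report_date, self.interval_months)


record = FollowUpRecord(
    report_id="RPT-0001",  # hypothetical identifier
    modality="CT",
    anatomical_site="chest",
    interval_months=6,
    report_date=date(2024, 8, 31),
    justification="6 mm pulmonary nodule",
)
print(record.due_date)  # 2025-02-28
```

Storing the interval and report date separately, rather than only the computed due date, keeps the original recommendation auditable if the due-date logic changes later.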

Layer 2: Automated tracking and alert generation

Once recommendations enter the registry, tracking systems monitor whether follow-up orders are placed, appointments are scheduled, and exams are completed. When follow-ups fall overdue based on recommended timing, the system generates alerts to care coordinators, referring physicians, or radiology departments. In 2025, a radiology department using an artificial intelligence-driven follow-up detection system caught a previously missed abdominal aortic aneurysm surveillance scan. The system triggered urgent referral, preventing a potentially fatal outcome.* This is the operational value: systematic detection prevents critical findings from slipping through workflow gaps.
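The monitoring logic can be sketched in a few lines. This is an illustrative outline only: the `TrackedFollowUp` fields, the status names, and the sample records are hypothetical, and a production system would read order and scheduling state from the radiology information system and electronic health record rather than from in-memory objects.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class TrackedFollowUp:
    record_id: str
    due_date: date
    ordered: bool = False
    scheduled_for: Optional[date] = None
    completed: bool = False


def status(item: TrackedFollowUp, today: date) -> str:
    """Classify one registry entry; completion wins, then overdue, then progress."""
    if item.completed:
        return "completed"
    if today > item.due_date:
        return "overdue"
    if item.scheduled_for is not None:
        return "scheduled"
    if item.ordered:
        return "ordered"
    return "open"


def overdue_alerts(items: list[TrackedFollowUp], today: date) -> list[TrackedFollowUp]:
    """Return overdue entries, longest overdue first."""
    overdue = [item for item in items if status(item, today) == "overdue"]
    return sorted(overdue, key=lambda item: item.due_date)


registry = [
    TrackedFollowUp("A", date(2024, 1, 10)),
    TrackedFollowUp("B", date(2024, 3, 1), completed=True),
    TrackedFollowUp("C", date(2023, 12, 1), ordered=True),
    TrackedFollowUp("D", date(2024, 6, 1), scheduled_for=date(2024, 5, 20)),
]

for item in overdue_alerts(registry, today=date(2024, 4, 1)):
    print(item.record_id, item.due_date)
```

Note that an order alone does not clear an alert here: entry C was ordered but never completed, so it still surfaces once the due date passes.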

Layer 3: Clinical decision support and prioritization

Not all follow-up recommendations carry equal urgency. Small lung nodules, aneurysm measurements, suspicious lesions, and incidental findings each have different risk profiles and guideline-recommended intervals. Roche’s implementation demonstrates how specialized healthcare large language models can match patient profiles with clinical guidelines. Their system analyzes comprehensive patient data including genetic, epigenetic, and phenotypic information, then accurately aligns individual patients with relevant National Comprehensive Cancer Network clinical guidelines. For oncology care, this ensures each patient receives tailored treatment recommendations based on the latest guidelines.* The same architecture applies to radiology: embedding guideline-based intervals and risk thresholds allows systems to prioritize high-risk follow-ups and surface actionable findings before they become emergencies.
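A simple way to picture prioritization is a sort over risk tier and time overdue. The tiers below are placeholders invented for this sketch and are not clinical guidance; in practice the tiers and intervals would come from the relevant guidelines and local governance, and the `PendingFollowUp` structure is likewise an assumption.

```python
from dataclasses import dataclass

# Placeholder tiers for illustration only; NOT clinical guidance.
RISK_TIER = {
    "aneurysm": 3,
    "suspicious_lesion": 3,
    "lung_nodule": 2,
    "incidental": 1,
}


@dataclass
class PendingFollowUp:
    record_id: str
    finding_type: str
    days_overdue: int


def prioritize(items: list[PendingFollowUp]) -> list[PendingFollowUp]:
    """Highest risk tier first; within a tier, longest overdue first."""
    return sorted(
        items,
        key=lambda item: (-RISK_TIER.get(item.finding_type, 1), -item.days_overdue),
    )


worklist = [
    PendingFollowUp("A", "incidental", 200),
    PendingFollowUp("B", "aneurysm", 10),
    PendingFollowUp("C", "lung_nodule", 90),
    PendingFollowUp("D", "suspicious_lesion", 30),
]

for item in prioritize(worklist):
    print(item.record_id, item.finding_type, item.days_overdue)
```

Sorting on tier before days overdue means a recently missed high-risk follow-up outranks a long-overdue low-risk one, which is the behavior the paragraph above argues for.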

Layer 4: Human-in-the-loop oversight and continuous quality improvement

Automated tracking does not replace clinical judgment. Critical follow-ups, ambiguous findings, and complex clinical contexts require clinician review. Care-Connect and Spryfox’s framework, detailed by Christian Debes in a webinar, demonstrates this balance. Reaching regulatory-grade accuracy in clinical data abstraction requires augmenting automated natural language processing with human-in-the-loop workflows. Their quality assurance framework uses clinician-developed logic to filter and rank entities for manual review, reducing workload while maintaining high levels of safety. For radiology follow-up tracking, this means automated systems flag recommendations and track completion, but care coordinators review high-priority cases, resolve scheduling conflicts, and handle patient communication.
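The "filter and rank for manual review" step might look something like the sketch below. It is not the Care-Connect or Spryfox logic, which the source does not describe in detail; the `Extraction` fields, the 0.9 confidence floor, and the capacity cap are assumptions chosen to show the shape of the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Extraction:
    record_id: str
    confidence: float  # model confidence in [0, 1], assumed to be available
    high_priority: bool


def review_queue(
    extractions: list[Extraction],
    confidence_floor: float = 0.9,  # hypothetical threshold
    capacity: Optional[int] = None,
) -> list[Extraction]:
    """Filter to items needing human review, then rank them.

    High-priority items always go to review; others only when the model
    is unsure. Ranking puts high-priority first, then lowest confidence.
    """
    needs_review = [
        e for e in extractions if e.high_priority or e.confidence < confidence_floor
    ]
    ranked = sorted(needs_review, key=lambda e: (not e.high_priority, e.confidence))
    return ranked if capacity is None else ranked[:capacity]


batch = [
    Extraction("A", 0.99, False),  # confident, routine: skips manual review
    Extraction("B", 0.60, False),
    Extraction("C", 0.95, True),
    Extraction("D", 0.70, True),
]

print([e.record_id for e in review_queue(batch)])
```

Everything that does not enter the queue is still tracked automatically; the filter only decides where scarce reviewer time goes.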

This four-layer architecture is what separates effective implementations from technology demos. Extraction alone is insufficient. Tracking without clinical prioritization generates alert fatigue. Automation without human oversight introduces liability risk. The systems that work combine all four layers into integrated workflows.

What radiology departments learn from large-scale deployments

The implementations processing millions of documents reveal patterns that smaller pilots miss:

Integration complexity dominates deployment timelines. The technology itself (natural language processing models, tracking logic, alert systems) is mature. The challenge is connecting radiology information systems, electronic health records, scheduling platforms, and communication tools into cohesive workflows. Legacy systems, proprietary data formats, and poor interoperability standards extend implementation timelines. Organizations using modern data platforms like Databricks can deploy Healthcare NLP pipelines in weeks. Organizations with fragmented legacy infrastructure may require months of integration work before automated tracking becomes operational.

False positive management determines clinical acceptance. Overly sensitive follow-up detection creates alert fatigue. If every ambiguous phrase in a radiology report triggers follow-up flags, clinicians learn to ignore alerts. TriNetX’s work on smoking status extraction from provider notes demonstrates the importance of handling site-level variation. Their pipeline scales from one site to many by fine-tuning models to overcome institutional differences in documentation patterns, creating structured, harmonized labels that bring consistency across networks.* Radiology follow-up extraction requires similar tuning: understanding institutional reporting conventions, identifying true recommendations versus conditional suggestions, and calibrating sensitivity to maintain signal without overwhelming clinicians with noise.
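Calibration of this kind usually comes down to sweeping a decision threshold over a reviewed sample and watching sensitivity trade against precision. The sketch below assumes the extraction model emits a score per candidate sentence and that reviewers have labeled a validation sample; the scores and labels are made up for illustration.

```python
def sensitivity_and_precision(
    scored: list[tuple[float, bool]], threshold: float
) -> tuple[float, float]:
    """Compute sensitivity (recall) and precision when flagging scores >= threshold."""
    tp = sum(1 for score, is_rec in scored if score >= threshold and is_rec)
    fp = sum(1 for score, is_rec in scored if score >= threshold and not is_rec)
    fn = sum(1 for score, is_rec in scored if score < threshold and is_rec)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return sensitivity, precision


# Hypothetical validation sample: (model score, reviewer says "true recommendation").
validation = [
    (0.95, True), (0.90, True), (0.80, True), (0.70, False),
    (0.60, True), (0.40, False), (0.30, False), (0.20, False),
]

for threshold in (0.25, 0.50, 0.75):
    sens, prec = sensitivity_and_precision(validation, threshold)
    print(f"threshold={threshold:.2f} sensitivity={sens:.2f} precision={prec:.2f}")
```

On this toy sample, raising the threshold from 0.50 to 0.75 removes the last false alert but drops one true recommendation, which is exactly the trade-off each site has to settle against its own documentation patterns.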

Patient engagement cannot be ignored. Automated tracking identifies missed follow-ups, but patients still need reminders, appointment assistance, and follow-through support. Systems that generate alerts to clinicians but do not close the loop with patients shift responsibility without solving the problem. Lunar Analytics’ agentic artificial intelligence platform for pharmacy benefits management, as detailed by Spencer Schaefer, demonstrates multi-agent coordination: their system includes agents for automated prior authorization, longitudinal care companion, personal health analytics, and other functions, using John Snow Labs’ medical language models to enable secure, real-time processing that improves efficiency and patient outcomes. Radiology tracking systems need similar patient-facing capabilities: notification systems, scheduling assistance, and care navigation that help patients complete recommended follow-ups.

Auditability and liability protection require comprehensive logging. Healthcare organizations implementing follow-up tracking are creating documentation of clinical recommendations, alerts generated, actions taken, and follow-ups completed or abandoned. This audit trail has liability implications. If an automated system flags a follow-up recommendation but no action results, responsibility must be clearly defined. Dandelion Health’s de-identification process, explained in detail by Ross Bierbryer, demonstrates the importance of maintaining full provenance. Their approach requires HIPAA compliance through expert determination, tracking every processing step to maintain patient privacy while preserving data utility. Follow-up tracking systems need equivalent rigor: logging when recommendations were extracted, when alerts were sent, who reviewed them, and what actions resulted.
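The logging requirement in that last point can be illustrated with an append-only event log keyed by recommendation. This is a minimal in-memory sketch, not Dandelion Health's process or any vendor's schema: the `AuditEvent` fields, the event names, and the actor identifiers are assumptions, and a real deployment would write to durable, access-controlled storage.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEvent:
    recommendation_id: str
    event: str  # hypothetical names: "extracted", "alert_sent", "reviewed", "action_taken"
    actor: str
    timestamp: str  # ISO 8601, UTC


class AuditLog:
    """Append-only log: entries are serialized once and never modified."""

    def __init__(self) -> None:
        self._lines: list[str] = []

    def record(
        self, recommendation_id: str, event: str, actor: str,
        when: Optional[datetime] = None,
    ) -> AuditEvent:
        when = when or datetime.now(timezone.utc)
        entry = AuditEvent(recommendation_id, event, actor, when.isoformat())
        self._lines.append(json.dumps(asdict(entry), sort_keys=True))
        return entry

    def trail(self, recommendation_id: str) -> list[dict]:
        """Reconstruct, in order, everything that happened to one recommendation."""
        events = (json.loads(line) for line in self._lines)
        return [e for e in events if e["recommendation_id"] == recommendation_id]


log = AuditLog()
log.record("REC-1", "extracted", "nlp-pipeline")
log.record("REC-1", "alert_sent", "tracking-service")
log.record("REC-2", "extracted", "nlp-pipeline")
log.record("REC-1", "reviewed", "coordinator-17")

for entry in log.trail("REC-1"):
    print(entry["timestamp"], entry["event"], entry["actor"])
```

Being able to replay the trail for a single recommendation is what lets an organization answer, after the fact, who saw an alert and what they did with it.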

The precision gap that defines clinical trust

When radiology departments evaluate follow-up tracking solutions, the fundamental question is whether automated extraction meets the accuracy threshold required for clinical workflows. General-purpose large language models cannot.

The systematic assessment on 48 medical expert-annotated clinical documents is instructive. Healthcare NLP achieved a 96% F1-score for protected health information detection compared to GPT-4o’s 79%.* More revealing: GPT-4o completely missed 14.6% of protected health information entities, while Healthcare NLP missed only 0.9%. If radiology follow-up recommendations were missed at a comparable rate, roughly one in seven critical follow-ups would never enter the tracking system. That is not acceptable for clinical deployment.
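The arithmetic behind the "one in seven" figure is easy to check. The sketch below takes the two miss rates quoted above and applies them to a hypothetical volume of 10,000 follow-up recommendations; the volume is an assumption, and the rates were measured on protected health information entities, so carrying them over to follow-up extraction is an analogy rather than a measured result.

```python
def expected_misses(total: int, miss_rate: float) -> int:
    """Expected number of items that never reach the tracking system."""
    return round(total * miss_rate)


def one_in(miss_rate: float) -> float:
    """Express a miss rate as 'one in N'."""
    return 1 / miss_rate


recommendations = 10_000  # hypothetical volume

for system, rate in (("GPT-4o", 0.146), ("Healthcare NLP", 0.009)):
    print(
        f"{system}: about {expected_misses(recommendations, rate)} missed "
        f"(one in {one_in(rate):.1f})"
    )
```

At these rates the general-purpose model would drop about 1,460 of the 10,000 recommendations and the specialized model about 90, a difference of more than an order of magnitude.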

The CLEVER study’s physician evaluation validated this performance gap across multiple clinical tasks. For clinical summarization, medical doctors preferred specialized models over GPT-4o 47% to 25% on factuality, 48% to 25% on clinical relevance, and 42% to 25% on conciseness. For clinical information extraction, specialized natural language processing held a 10-13% advantage across evaluation dimensions.*

This superior performance was achieved using an 8-billion-parameter model that can be deployed on-premise, demonstrating that domain-specific training enables smaller models to outperform much larger general-purpose systems in precision-critical tasks.* For radiology departments, this has practical implications: specialized healthcare NLP operates with fixed-cost local deployment rather than per-request cloud API pricing. The same benchmark study found Healthcare NLP reduces processing costs by over 80% compared to Azure and GPT-4o.*

Precision and cost efficiency both stem from the same architectural decision: building models specifically for healthcare rather than adapting general-purpose systems. MiBA’s 93% F1-score for entity extraction across 1.4 million physician notes, Dandelion Health’s HIPAA-compliant de-identification process, and GE Healthcare’s end-to-end radiology extraction pipeline all share this foundation. They use Healthcare NLP trained on clinical data, validated on medical expert annotations, and optimized for healthcare workflows.

When follow-up tracking becomes a quality and value metric

Healthcare delivery is shifting toward value-based models and population health management. In this context, imaging follow-up adherence transforms from a workflow issue into a measured quality metric. Recent advances in imaging artificial intelligence emphasize how these systems can transform not only image interpretation but care coordination, reducing follow-up delays, identifying incidental findings, and improving overall diagnostic safety.*

Radiology departments already face increasing imaging volumes with limited radiologist capacity. Systematic follow-up tracking addresses this pressure by ensuring that diagnostic work leads to completed patient care, not lost recommendations. Early adopters demonstrate that artificial intelligence-enabled follow-up tracking turns insight into action, improving safety, outcomes, and liability risk management.

The technology deployment pattern we see emerging combines extraction accuracy, integration with existing clinical systems, automated tracking with human oversight, and auditability for quality improvement. Ohio State University’s architecture illustrates enterprise-scale implementation: portable configuration, parallelization for high throughput, consistent logging for monitoring and compliance, with outputs surfaced to researchers through governed data environments.* This is not radiology-specific. It is the architectural template for systematic extraction and tracking across clinical workflows.

Critical implementation considerations for radiology leadership

Radiology departments considering follow-up tracking systems should evaluate several technical and operational factors:

Data integration requirements: Effective tracking requires connections to radiology information systems, electronic health records, scheduling platforms, and potentially patient communication tools. Organizations should assess existing infrastructure, data standards, and integration capabilities before selecting solutions. Healthcare NLP integrates with Databricks, AWS, Azure, and on-premise environments, providing flexibility for diverse technical architectures.

Extraction model validation: Generic large language models are insufficient for clinical deployment. Organizations should require evidence of model performance on radiology-specific text, including diverse report formats, institutional documentation patterns, and edge cases. The benchmark data demonstrates that specialized healthcare models outperform GPT-4o by 17 percentage points in F1-score on protected health information detection in clinical text.*

Alert logic and false positive management: Systems must balance sensitivity (catching all recommendations) with specificity (avoiding alert fatigue). Implementation should include tuning phases where alert thresholds, prioritization rules, and workflow integration are adjusted based on actual clinical volumes and staff capacity.

Governance and accountability frameworks: Automated tracking creates documentation of recommendations and actions. Organizations need clear policies defining clinician responsibility when alerts are generated, escalation procedures for high-priority follow-ups, and processes for handling patients who cannot be reached or decline follow-up care.

Capacity planning for increased follow-up volumes: Effective tracking systems surface previously missed follow-ups. Radiology departments and scheduling staff must have capacity to absorb increased volumes, or the bottleneck simply shifts from detection to execution. Implementation planning should include resource allocation for care coordination and patient engagement.

Organizations seeking to evaluate Healthcare NLP capabilities can join live demos or review published customer case studies across multiple clinical specialties.

Looking forward: from detection to prevention

The implementations we have reviewed (GE Healthcare’s radiology extraction, MiBA’s million-document processing, Ohio State’s 200-million-note infrastructure, and Roche’s guideline-matching systems) represent more than follow-up tracking. They demonstrate a fundamental shift in how healthcare organizations approach unstructured clinical data.

The traditional model treats clinical notes as documentation for human readers. The emerging model treats clinical text as structured data that has not yet been extracted. Once extracted, recommendations become trackable obligations, clinical findings become longitudinal patient records, and quality metrics become measurable outcomes rather than aspirational goals.

For radiology specifically, this transformation means moving from “image, interpret, report” to “image, interpret, extract, track, and verify completion.” The technology to enable this exists. The accuracy has been validated. The production deployments are operational.

The barrier is not technical capability. The barrier is organizational commitment to treating follow-up not as an afterthought but as a measured, managed, and optimized process. The health systems that implement systematic tracking first will reduce liability exposure, improve patient outcomes, and establish competitive differentiation in value-based care arrangements. Those that wait will continue losing patients in the gap between recommendation and action.

The radiology departments already deploying these systems are not conducting pilots. They are processing millions of documents, preventing missed diagnoses, and building the operational infrastructure that value-based healthcare requires. The question for other organizations is not whether this transformation will happen, but whether they will lead it or follow it.

FAQs

How does radiology follow-up tracking integrate with existing EHR and PACS systems?

John Snow Labs’ Healthcare NLP integrates with major platforms including Databricks, AWS, Azure, and on-premise environments. GE Healthcare’s EDISON implementation shows how end-to-end radiology processing extracts structured data from narrative reports and feeds it into existing clinical workflows, enabling automated tracking without requiring radiologists to change documentation patterns. Ohio State University’s architecture demonstrates portability: their pipeline runs unchanged on both Azure Databricks and on-premise environments with consistent logging and monitoring. The challenge is typically not the NLP technology itself but connecting diverse legacy systems including radiology information systems, electronic health records, scheduling platforms, and patient communication tools. Organizations using modern data platforms can deploy extraction pipelines in weeks, while those with fragmented legacy infrastructure may require months of integration work.

What is the difference between generic AI and specialized healthcare NLP for radiology?

General-purpose large language models lack the medical terminology precision and regulatory-grade accuracy required for clinical workflows. Healthcare NLP uses over 2,800 pre-trained clinical models for entity extraction, relation extraction, and assertion detection, with normalization to standard terminologies including SNOMED CT, ICD-10, LOINC, and RxNorm. Systematic benchmarking on 48 medical expert-annotated documents showed Healthcare NLP achieving a 96% F1-score for protected health information detection compared to GPT-4o’s 79%. More critically, GPT-4o completely missed 14.6% of entities while Healthcare NLP missed only 0.9%. If radiology follow-up recommendations were missed at a comparable rate, roughly one in seven critical follow-ups would never enter the tracking system. This precision gap is why medical doctors prefer specialized healthcare models 45-92% more often than GPT-4o on clinical tasks, judged on factuality, clinical relevance, and conciseness.

Can AI-based follow-up tracking prevent medical malpractice related to missed imaging?

Systematic follow-up tracking creates an auditable trail of recommendations, alerts, and actions, which reduces liability exposure. In 2025, a radiology department using an artificial intelligence-driven follow-up detection system caught a previously missed abdominal aortic aneurysm surveillance scan, triggered urgent referral, and prevented a potentially fatal outcome. However, artificial intelligence is a tool for workflow automation and quality improvement, not a replacement for clinical judgment. Organizations should implement tracking within governance frameworks that clearly define clinician accountability when automated alerts are generated, escalation procedures for high-priority follow-ups, and human-in-the-loop oversight. Care-Connect and Spryfox’s framework demonstrates this balance: their quality assurance system uses clinician-developed logic to filter and rank entities for manual review, reducing workload while maintaining high levels of safety. The liability protection comes from systematic detection combined with clear accountability, not from automation alone.

How long does it take to implement AI-powered radiology follow-up tracking?

Implementation timelines depend primarily on existing infrastructure and data integration complexity, not on the natural language processing technology itself. Organizations using modern data platforms like Databricks can deploy Healthcare NLP pipelines in weeks. Ohio State University’s research infrastructure processes over 200 million clinical notes using portable configuration that runs unchanged on both Azure Databricks and on-premise environments, demonstrating enterprise-scale deployment patterns. Organizations with legacy radiology information systems, proprietary data formats, and fragmented electronic health records may require months of integration work before automated tracking becomes operational. The critical path is typically connecting diverse systems including radiology reporting, scheduling platforms, and clinician alert mechanisms, then tuning alert logic to avoid false positives while maintaining sensitivity for critical follow-ups. Organizations should plan for a tuning phase after initial deployment where prioritization rules and workflow integration are adjusted based on actual clinical volumes and staff capacity.

What happens when patients obtain follow-up imaging at external facilities?

Patient mobility and external imaging centers present a fundamental tracking challenge. When patients change insurance, move to different health systems, or obtain follow-up scans at outside facilities, internal tracking systems lose visibility unless data sharing mechanisms exist. Some health information exchanges enable cross-organizational data sharing, but coverage is inconsistent. Effective implementations incorporate patient engagement: notification systems, scheduling assistance, and follow-up communication that help patients complete recommended imaging within the originating health system. Lunar Analytics’ platform demonstrates multi-agent coordination for pharmacy benefits, using medical language models to enable longitudinal care companion functionality and personal health analytics. Radiology tracking systems need similar patient-facing capabilities to maintain contact when patients are lost to follow-up or obtain care elsewhere. Organizations should also plan for manual processes where care coordinators reach out to patients who miss scheduled follow-ups or request outside imaging records for documentation.

How do follow-up tracking systems handle ambiguous or conditional recommendations?

Radiology reports often contain conditional language: “consider MRI if symptoms persist,” “correlate with clinical findings,” “further imaging may be warranted depending on risk factors.” These ambiguous recommendations require clinical judgment, not just automated extraction. Specialized healthcare NLP models use assertion detection to distinguish definite recommendations from conditional suggestions, negations, and historical references. However, the most effective implementations combine automated extraction with human-in-the-loop review for ambiguous cases. Roche’s system for matching patients with National Comprehensive Cancer Network clinical guidelines demonstrates how specialized healthcare large language models can incorporate complex clinical context to make appropriate recommendations. For radiology follow-up, this means extraction systems flag conditional recommendations for clinician review rather than automatically generating tracking alerts. The referring physician or care coordinator evaluates clinical context and determines whether follow-up is actually indicated. Organizations implementing tracking systems should tune sensitivity thresholds during deployment to balance capturing all recommendations against overwhelming clinicians with alerts for every conditional suggestion in radiology reports.
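The routing decision described here can be sketched with a deliberately naive keyword classifier. To be clear, this is a stand-in: trained assertion-detection models do not work by keyword matching, and the cue lists, route names, and `route` function below are assumptions made only to show how definite, conditional, and negated statements end up on different paths.

```python
import re

# Illustrative cue lists only; a real system would use a trained assertion model.
NEGATED = re.compile(r"\bno\b[^.]*\b(follow-up|further imaging)\b", re.IGNORECASE)
CONDITIONAL = re.compile(
    r"\b(if|consider|may be|depending on|correlate with)\b", re.IGNORECASE
)
RECOMMEND = re.compile(r"\b(recommend|repeat|follow-up)\b", re.IGNORECASE)


def route(sentence: str) -> str:
    """Decide where a report sentence goes: tracking, human review, or nowhere."""
    if NEGATED.search(sentence):
        return "no_action"
    if CONDITIONAL.search(sentence):
        return "clinician_review"  # conditional language is never auto-tracked
    if RECOMMEND.search(sentence):
        return "auto_track"
    return "no_action"


examples = [
    "Recommend follow-up chest CT in 6 months.",
    "Consider MRI if symptoms persist.",
    "Further imaging may be warranted depending on risk factors.",
    "No follow-up imaging is needed.",
]

for sentence in examples:
    print(f"{route(sentence):17s} {sentence}")
```

The ordering of the checks matters: conditional cues are tested before recommendation cues so that a sentence containing both is sent to a clinician rather than tracked automatically.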

What solutions does John Snow Labs offer for radiology workflow automation?

John Snow Labs provides Healthcare NLP for clinical entity extraction and relationship mapping, Visual NLP for processing scanned documents and DICOM images, Medical LLM for generative artificial intelligence tasks including clinical text summarization, and Generative AI Lab for human-in-the-loop validation workflows. These integrate with existing radiology systems to extract follow-up recommendations, track completion, and enable quality assurance at scale. The architecture is built on Apache Spark for distributed processing, integrates with Databricks, AWS, Azure, and on-premise environments, and normalizes extracted entities to standard terminologies including SNOMED CT and ICD-10. GE Healthcare’s EDISON platform demonstrates end-to-end radiology processing: extracting and linking dates, imaging tests, techniques, body parts, measurements, and findings from narrative reports. Organizations can explore capabilities through live demonstrations, review customer case studies across multiple specialties, or access technical documentation for implementation details.