In the middle of the crowded ER, Dr. Patel scrolls through a chest X-ray. Something feels off. The image is clear, but the patient’s recent shortness of breath doesn’t quite align with the findings. She types her observations into the report, pauses, and rechecks the scan.

Now imagine she had a second set of eyes: not just digital, but multimodal. One that not only sees the image but also understands the language of her notes. That’s the promise of Vision-Language Models (VLMs) in radiology, and it’s already becoming a reality.

What Makes Medical VLM-24B Different from Other AI Tools?

The turning point came with the release of Medical VLM-24B, a specialized vision-language model built by John Snow Labs for real-world healthcare needs. Dr. Patel didn’t need another general AI assistant. She needed something trained on 5 million medical images and 1.4 million clinical documents, a model fluent in the language of both pixels and pathology.

Medical VLM-24B can read an MRI and the accompanying chart, correlate the findings, and highlight inconsistencies. For Dr. Patel, it’s like having a resident who never gets tired. Two heads are better than one.

How Well Does It Perform?

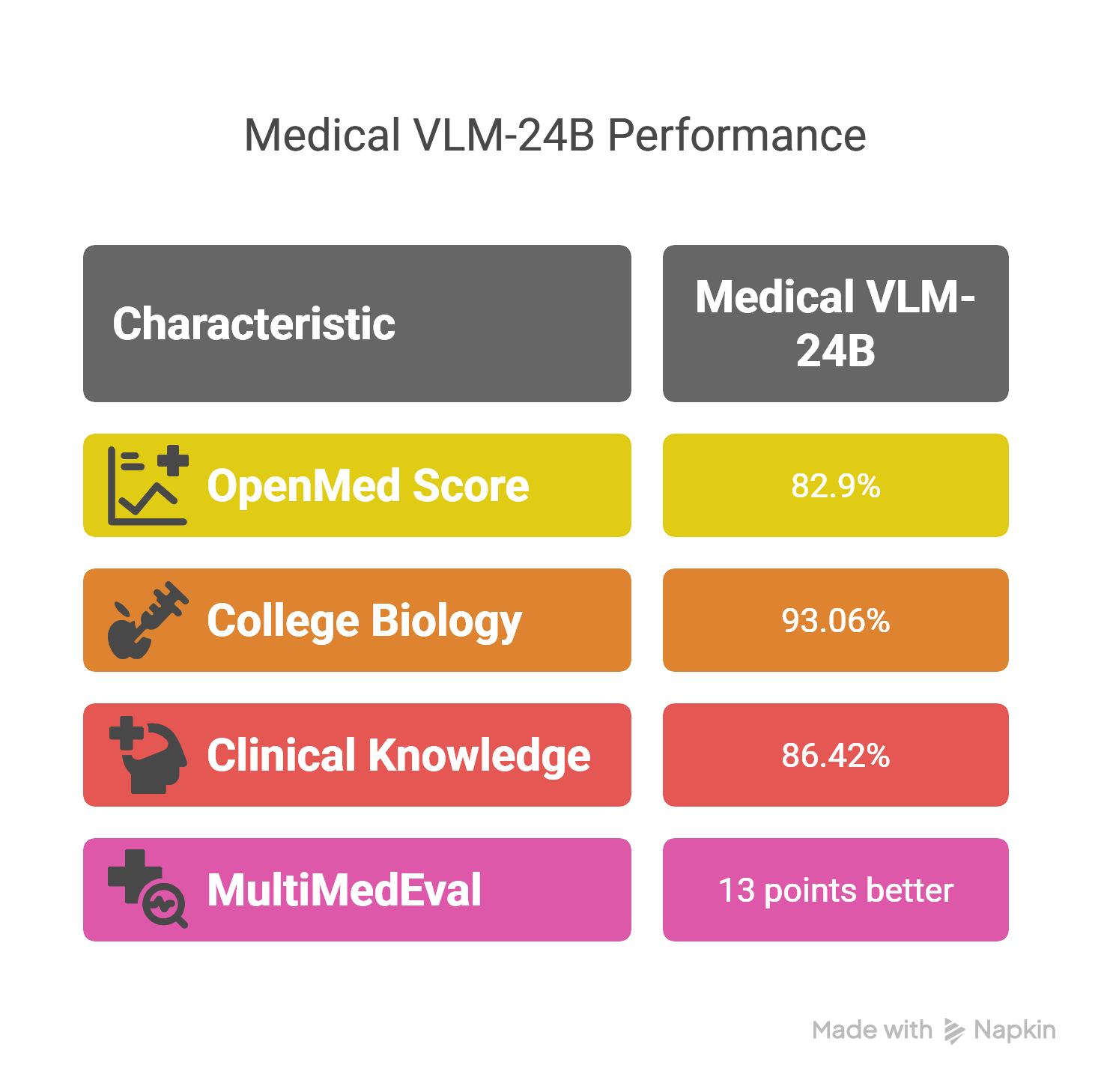

She’d heard of AI tools before: some helpful, many not. But this one was different. In benchmark evaluations, Medical VLM-24B didn’t just perform; it excelled. It scored 82.9% on OpenMed, including 93.06% in College Biology and 86.42% in Clinical Knowledge. On the MultiMedEval benchmark, which tests AI models across 23 datasets in 11 medical domains, it outperformed general-purpose models by 13 points.

That was enough to convince her hospital’s innovation team to deploy it directly from the AWS Marketplace into their production system.

Can It Understand Clinical Nuance in Radiology Reports?

Back in her report, Dr. Patel types: “There is no evidence of metastatic spread.” Simple enough. But does her AI assistant know the difference between “no evidence” and “possible indication”?

With John Snow Labs’ assertion detection models, it does. These models identify not just the entities (like “mass” or “lesion”) but also their assertion status: Present, Absent, Hypothetical, or even Conditional. The fine-tuned models now outperform AWS, Azure, and GPT-4o on clinical assertion detection, reaching up to 0.962 accuracy, with gains of:

- +4.2% for Present

- +8.4% for Absent

- +23.4% for Hypothetical
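To make the difference between “no evidence” and “possible indication” concrete, here is a deliberately simple, rule-based sketch in the spirit of NegEx-style cue matching. It is not John Snow Labs’ assertion detection model, which is a fine-tuned learned model. The cue lists, the `classify_assertion` function, and the `Assertion` type are hypothetical names chosen for illustration, and the sketch covers only the Present, Absent, and Hypothetical labels.

```python
import re
from dataclasses import dataclass

# Hypothetical cue patterns, chosen for illustration only (not from any real product).
ABSENT_CUES = [r"\bno evidence of\b", r"\bnegative for\b", r"\bwithout\b", r"\bno\b"]
HYPOTHETICAL_CUES = [r"\bpossible\b", r"\bmay represent\b", r"\bcannot rule out\b", r"\bsuspicious for\b"]
# Words and punctuation that end a cue's scope, so "no X, but possible Y" does not negate Y.
SCOPE_TERMINATORS = r"\b(?:but|however)\b|[.;]"


@dataclass
class Assertion:
    entity: str
    status: str  # "Present", "Absent", or "Hypothetical" in this toy sketch


def classify_assertion(sentence: str, entity: str) -> Assertion:
    """Label one entity mention using cue phrases that precede it in the sentence."""
    lowered = sentence.lower()
    start = lowered.find(entity.lower())
    if start == -1:
        raise ValueError(f"entity {entity!r} not found in sentence")
    # Keep only the text after the last scope terminator that precedes the entity.
    context = re.split(SCOPE_TERMINATORS, lowered[:start])[-1]
    if any(re.search(p, context) for p in ABSENT_CUES):
        return Assertion(entity, "Absent")
    if any(re.search(p, context) for p in HYPOTHETICAL_CUES):
        return Assertion(entity, "Hypothetical")
    # No negation or uncertainty cue in scope: treat the finding as present.
    return Assertion(entity, "Present")


if __name__ == "__main__":
    examples = [
        ("There is no evidence of metastatic spread.", "metastatic spread"),
        ("Possible indication of a mass in the left lower lobe.", "mass"),
        ("A small lesion is seen in the right upper lobe.", "lesion"),
        ("No pleural effusion, but possible consolidation.", "consolidation"),
    ]
    for text, ent in examples:
        print(classify_assertion(text, ent))
```

Hand-written cue lists like these break easily on real reports (double negatives, cues that follow the entity, conditional phrasing), which is part of why a learned model is a better fit for this task.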

Dr. Patel doesn’t have to worry that subtle language will confuse the system. It sees the shades of meaning in her words as clearly as it sees the shadows in the scan.

What Does This Mean for the Future of Radiology?

For Dr. Patel, AI isn’t replacing her judgment; it’s sharpening it. Medical VLM-24B doesn’t just automate. It augments, clarifies, and sometimes even surprises. It helps her catch what the eye might miss and what the chart might understate.

With tools like these, clinicians can spend less time on guesswork and more time on what matters: patients, precision, and progress.