Radiology is central to modern healthcare, yet rapid growth in imaging volume has strained clinical workflows and introduced variability in diagnostic interpretation. Recent advances in artificial intelligence, particularly Vision-Language Models (VLMs), offer a path forward. These models integrate visual and textual understanding, delivering more accurate, explainable, and scalable tools for radiological analysis.

Medical VLM-24B: A New Standard in Clinical AI

John Snow Labs’ Medical VLM-24B, released in May 2025, marks a significant evolution in healthcare-specific AI. Unlike general-purpose models, this vision-language model was trained on over 5 million curated medical images and 1.4 million clinical documents, aligning it closely with real-world clinical data. It processes a broad spectrum of medical visuals, from X-rays and MRIs to pathology slides and anatomical illustrations, alongside the associated clinical text.

The model goes beyond visual-text fusion to enable fully integrated analysis of radiological findings, medical charts, and diagrams. This comprehensive scope makes it uniquely capable of supporting diagnostic workflows across radiology, pathology, and multimodal documentation review.

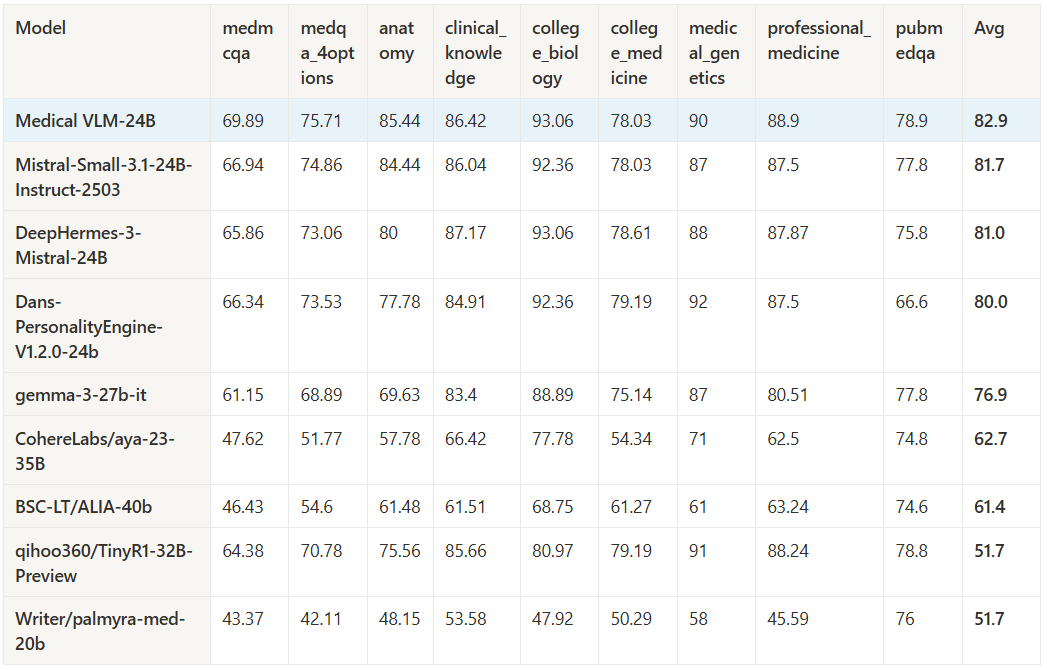

Benchmark Performance: Clinical Superiority Quantified

Medical VLM-24B delivers top-tier results across medical evaluation frameworks. It achieves an 82.9% average score on OpenMed benchmarks, with standout scores in College Biology (93.06%) and Clinical Knowledge (86.42%). More importantly, it outperforms general-purpose VLMs by 13 percentage points on the MultiMedEval benchmark, a domain-specific evaluation covering six tasks, 23 datasets, and 11 medical specialties.

These performance metrics underscore the model’s readiness for real-world clinical environments, where general models often fall short due to insufficient medical training data. Medical VLM-24B is now available on the AWS Marketplace, enabling scalable, secure deployment via SageMaker for production-ready healthcare applications.

Assertion Detection: Beyond Negation, Toward Precision

Beyond image interpretation, text carries information that is critical for radiologists. Integrating the information in clinical notes and in previous radiology or pathology reports is fundamental to making better decisions. Assertion status detection is therefore crucial in radiology, where phrases like “no mass identified” and “possible lesion” carry vastly different clinical implications. John Snow Labs has developed fine-tuned large language models for assertion detection that handle complex assertion types: Present, Absent, Possible, Hypothetical, Conditional, and Associated with Someone Else².

These models reach 0.962 accuracy, significantly outperforming leading commercial solutions such as Amazon Comprehend Medical, Azure Text Analytics, and GPT-4o². For instance:

- +4.2% accuracy in Present assertions

- +8.4% in Absent

- +23.4% in Hypothetical

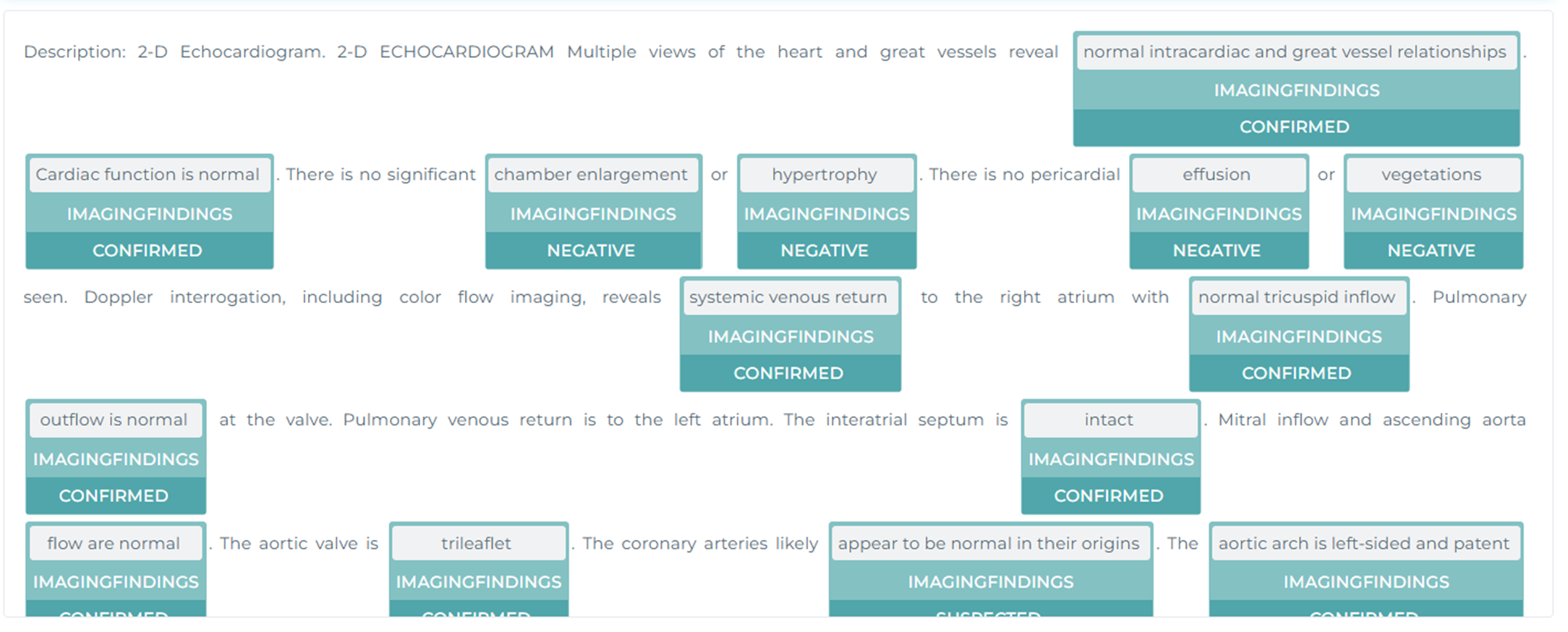

The assertion_bert_classification_radiology model further specializes in radiology-specific contexts, distinguishing between “Confirmed,” “Suspected,” and “Negative” assertions with high precision². This depth of contextual understanding is critical for parsing radiology reports, which often embed diagnostic nuance in complex sentence structures.
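To make the assertion categories listed above concrete, here is a minimal rule-based sketch in Python. It is not the John Snow Labs model and does not use its API: the cue phrases, the `CUES` table, and the `assertion_status` function are hypothetical, chosen only to show why “no mass identified” and “possible lesion” must end up with different labels. A trained model learns these distinctions from context rather than from a fixed keyword list.

```python
import re

# Hypothetical cue phrases, for illustration only.
# Order matters: the first matching pattern decides the label.
CUES = [
    ("Associated with Someone Else",
     r"\b(family history|mother|father|sister|brother)\b"),
    ("Hypothetical", r"\b(if|should|in case)\b"),
    ("Conditional", r"\b(on exertion|when (?:lying|standing|walking))\b"),
    ("Absent", r"\b(no|without|negative for|free of)\b"),
    ("Possible",
     r"\b(possible|possibly|suspicious for|cannot exclude|may represent)\b"),
]


def assertion_status(sentence: str) -> str:
    """Return a coarse assertion label for one sentence.

    Falls back to "Present" when no cue phrase is found.
    """
    text = sentence.lower()
    for label, pattern in CUES:
        if re.search(pattern, text):
            return label
    return "Present"


if __name__ == "__main__":
    examples = [
        "No mass identified.",
        "Possible lesion in the left upper lobe.",
        "There is a 2 cm nodule in the right lower lobe.",
        "Mother had breast cancer.",
    ]
    for sentence in examples:
        print(f"{assertion_status(sentence):30s} {sentence}")
```

A keyword approach like this breaks down quickly on long sentences with several findings, which is the gap that context-aware assertion models are meant to close.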

Transparent, Modular Pipelines for Radiology Reports

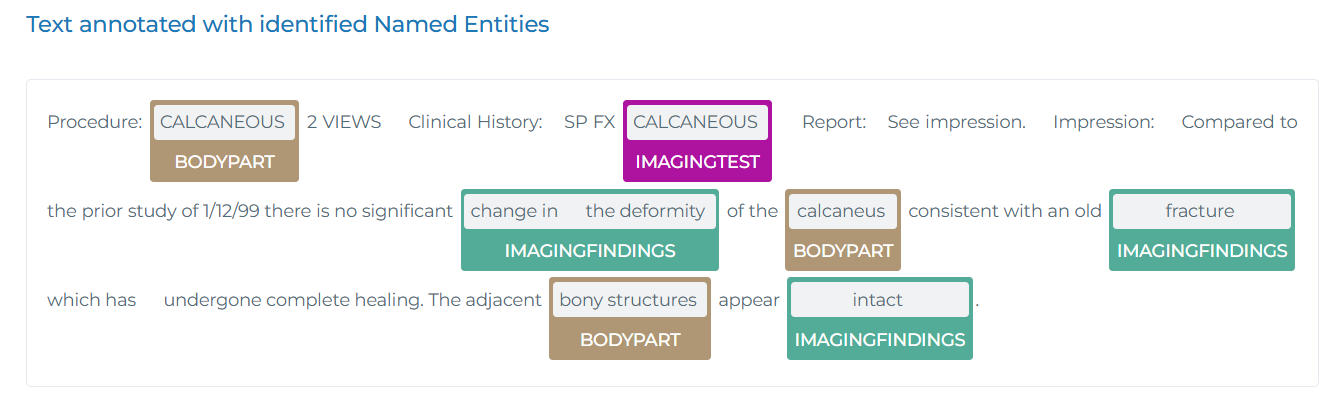

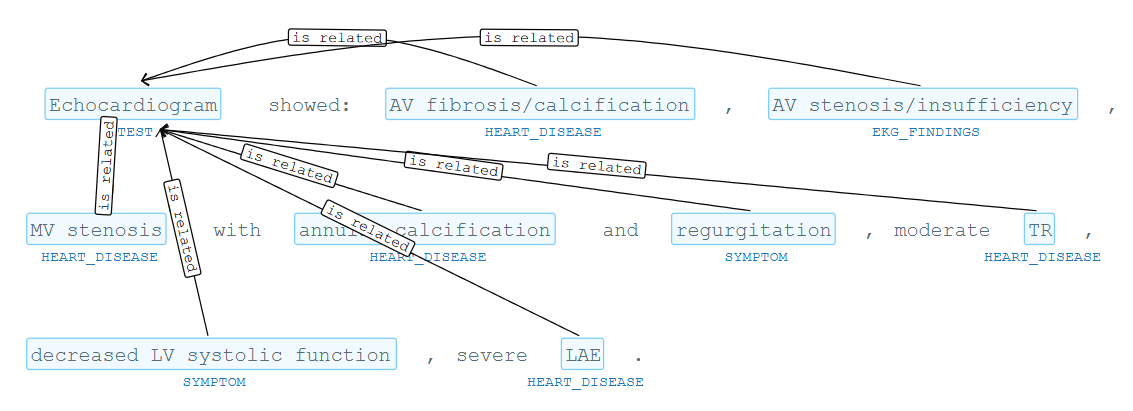

Reflecting the importance of the information contained in radiology reports, John Snow Labs’ Explain Clinical Document – Radiology pipeline integrates multiple language models into a cohesive workflow:

- NER with ner_radiology

- Assertion Detection with assertion_dl_radiology

- Relation Extraction using re_test_problem_finding

Each stage contributes explainable, auditable outputs, supporting both regulatory compliance and clinician trust. Designed within the Healthcare NLP ecosystem, the pipeline offers:

- Scalable processing for large clinical datasets

- Modular components for tailored implementations

- Seamless integration with existing EHRs and analytics platforms

This level of flexibility avoids the lock-in of monolithic solutions, offering health systems a practical route to adopt AI at their own pace.
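The modular, auditable design described above can be sketched in plain Python. This is an illustration of the staging idea only, not the Healthcare NLP API: the `Document` class, the three stage functions, the toy `VOCAB` lookup, and `run_pipeline` are all hypothetical stand-ins for the real NER, assertion, and relation extraction models. Each stage reads and returns the same document object and appends a line to an audit log, so stages can be swapped or omitted independently.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Document:
    text: str
    entities: List[Dict[str, str]] = field(default_factory=list)
    relations: List[Dict[str, str]] = field(default_factory=list)
    audit_log: List[str] = field(default_factory=list)


Stage = Callable[[Document], Document]

# Hypothetical toy vocabulary standing in for a trained NER model.
VOCAB = {"nodule": "Finding", "mass": "Finding",
         "lung": "BodyPart", "liver": "BodyPart"}


def ner_stage(doc: Document) -> Document:
    """Tag known words as entities (toy keyword lookup)."""
    tokens = doc.text.lower().replace(".", " ").replace(",", " ").split()
    for token in tokens:
        if token in VOCAB:
            doc.entities.append({"text": token, "label": VOCAB[token]})
    doc.audit_log.append(f"ner: {len(doc.entities)} entities")
    return doc


def assertion_stage(doc: Document) -> Document:
    """Attach a status to each finding (toy rule: sentence starts with 'no')."""
    negated = doc.text.lower().startswith("no ")
    for entity in doc.entities:
        if entity["label"] == "Finding":
            entity["assertion"] = "Negative" if negated else "Confirmed"
    doc.audit_log.append("assertion: status attached to findings")
    return doc


def relation_stage(doc: Document) -> Document:
    """Pair every finding with every body part in the same text."""
    findings = [e for e in doc.entities if e["label"] == "Finding"]
    parts = [e for e in doc.entities if e["label"] == "BodyPart"]
    doc.relations = [{"finding": f["text"], "body_part": p["text"]}
                     for f in findings for p in parts]
    doc.audit_log.append(f"relation: {len(doc.relations)} pairs")
    return doc


def run_pipeline(text: str, stages: List[Stage]) -> Document:
    """Run the given stages in order over a fresh document."""
    doc = Document(text=text)
    for stage in stages:
        doc = stage(doc)
    return doc


if __name__ == "__main__":
    result = run_pipeline("No nodule in the right lung.",
                          [ner_stage, assertion_stage, relation_stage])
    print(result.entities)
    print(result.relations)
    print(result.audit_log)
```

Because `run_pipeline` takes the stage list as an argument, a team could run NER alone, or replace one stage with a different model, without touching the others.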

Deployment at Scale and Democratized Access

Accessibility is a key pillar of John Snow Labs’ strategy. Medical VLM-24B’s availability on AWS and its integration into Generative AI Lab enable no-code and low-code interaction. Clinicians and operational teams can configure pipelines, validate model outputs, and monitor performance without needing extensive technical expertise.

This approach supports human-in-the-loop (HITL) workflows that balance AI-driven automation with clinical oversight, helping institutions stay compliant while accelerating decision-making.
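One common way to implement a human-in-the-loop split is confidence-based routing: predictions above a threshold are accepted automatically, and the rest are queued for clinician review. The sketch below assumes this pattern; the source does not describe how Generative AI Lab routes cases, and the `Prediction` class, the `route` function, and the 0.9 default threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    report_id: str
    label: str
    confidence: float  # model confidence in [0, 1]


def route(predictions: List[Prediction],
          threshold: float = 0.9) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into (auto_accepted, needs_review).

    Predictions with confidence at or above the threshold are accepted;
    everything else is sent to a human reviewer.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    auto_accepted: List[Prediction] = []
    needs_review: List[Prediction] = []
    for prediction in predictions:
        if prediction.confidence >= threshold:
            auto_accepted.append(prediction)
        else:
            needs_review.append(prediction)
    return auto_accepted, needs_review


if __name__ == "__main__":
    batch = [
        Prediction("r1", "Negative", 0.98),
        Prediction("r2", "Suspected", 0.62),
        Prediction("r3", "Confirmed", 0.90),
    ]
    accepted, review = route(batch)
    print("auto-accepted:", [p.report_id for p in accepted])
    print("needs review: ", [p.report_id for p in review])
```

The threshold is a policy decision rather than a technical one: lowering it reduces reviewer workload, while raising it sends more cases to clinicians.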

Conclusion: A Smarter, Safer Future for Radiology

Medical Vision-Language Models, along with natural language processing capabilities, are redefining how radiologists interact with imaging and documentation. With best-in-class benchmarks, a robust research foundation, and practical deployment pathways, John Snow Labs’ solutions offer not just innovation but clinical viability.

Organizations looking to improve radiology efficiency, accuracy, and auditability now have a proven, scalable path forward. For a closer look at how these models can integrate with your existing workflows, we invite you to get in touch.
