Manual data labelling and slow validation trap AI projects in endless pilots and turn audit readiness into a moving target. Generative AI Lab breaks the cycle with a no-code workspace where clinical, compliance, and data teams label faster and validate as they work.

This post explores how annotation in Generative AI Lab shortens project timelines and helps teams move from pilot to production faster.

Case Study: How a Clinician Built a Romanian Colonoscopy NER Model in Two Days

A clinician turned to Generative AI Lab to prepare training data for a named entity recognition (NER) model in Romanian rather than English. Instead of spending weeks on manual setup, he used the platform’s pre-built workflow to generate synthetic colonoscopy reports from prompts, with no coding, and completed the setup in less than two days.

Problem and Challenge

The clinician faced two hurdles: a small pool of de-identified reports and a slow, manual process for creating new data. The unstructured format of the reports (shorthand, inconsistent phrasing, clinical abbreviations) further complicated the task. He therefore needed to generate synthetic data in a secure environment and train a reliable model without any coding skills.

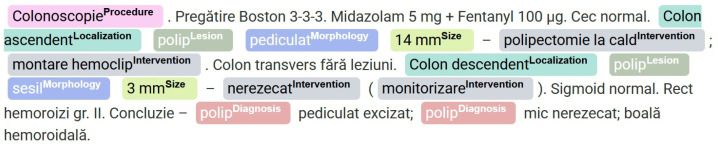

Figure 1: Color-coded annotation of clinical concepts in a colorectal procedure report in Generative AI Lab.

Approach

Generative AI Lab handled data creation, labelling, and training in one secure workspace. The clinician loaded 100 de-identified Romanian colonoscopy reports, then generated another 100 synthetic reports with prompt templates tuned to clinical style and frequency.

Two gastroenterologists annotated both sets in the web interface using BIO tagging across several entities.
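For readers new to BIO tagging, each token is marked as the Beginning of an entity, Inside one, or Outside any entity. The snippet below is a minimal sketch of that scheme, not the platform's internal format. The English sentence and the `FINDING` and `LOCATION` labels are hypothetical stand-ins, since the study's actual entity list is not given here.

```python
from typing import List, Tuple

# A span is (start_token, end_token_exclusive, label).
Span = Tuple[int, int, str]


def spans_to_bio(tokens: List[str], spans: List[Span]) -> List[str]:
    """Convert token-index entity spans into one BIO tag per token."""
    tags = ["O"] * len(tokens)  # everything is Outside until a span covers it
    for start, end, label in spans:
        tags[start] = f"B-{label}"  # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"  # remaining tokens of the same entity
    return tags


# Hypothetical example sentence and labels, for illustration only.
tokens = ["Sessile", "polyp", "in", "the", "sigmoid", "colon", "."]
spans = [(0, 2, "FINDING"), (4, 6, "LOCATION")]

bio_tags = spans_to_bio(tokens, spans)
for token, tag in zip(tokens, bio_tags):
    print(f"{token}\t{tag}")
```

The `B-` prefix is what lets a model separate two adjacent entities of the same type, which plain per-token labels cannot do.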

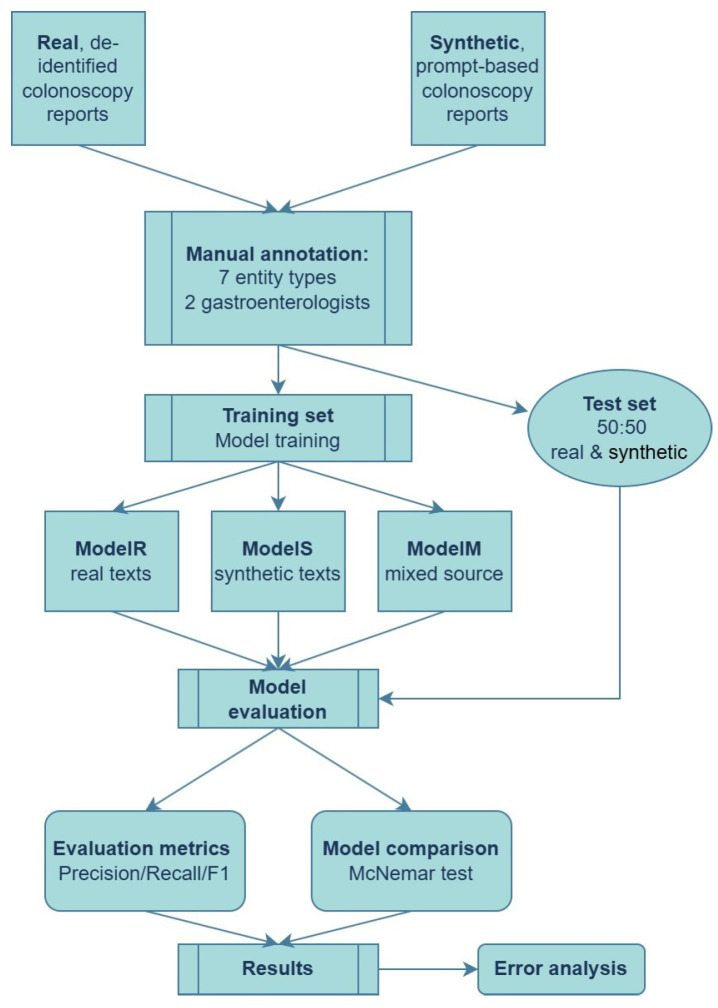

Using the Generative AI Lab platform, they then tested three versions – one on real notes, another on synthetic data, and a third on a 50/50 mix – and compared the results with built-in metrics, allowing the team to pick the most reliable model quickly.

Because everything stayed inside one workspace, setup and model comparisons finished within two days, with every step logged for review.

Figure 2: End-to-end pipeline for training and evaluating NER models on real and synthetic colonoscopy reports.
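The three-way comparison can be pictured as three training sets built from the same two pools. The sketch below is only an illustration under stated assumptions: the document IDs are placeholders, `build_training_sets` is a hypothetical helper rather than a Generative AI Lab API, and the mixed set is kept the same size as each single-source set so that dataset size does not confound the comparison. The study may have sized its mix differently.

```python
import random
from typing import Dict, List


def build_training_sets(
    real: List[str], synthetic: List[str], seed: int = 0
) -> Dict[str, List[str]]:
    """Return real-only, synthetic-only, and 50/50 mixed training sets."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    half = min(len(real), len(synthetic)) // 2
    # Draw the same number of documents from each source, then shuffle.
    mixed = rng.sample(real, half) + rng.sample(synthetic, half)
    rng.shuffle(mixed)
    return {
        "real_only": list(real),
        "synthetic_only": list(synthetic),
        "mixed_50_50": mixed,
    }


# Placeholder IDs standing in for 100 real and 100 synthetic reports.
real_docs = [f"real_{i:03d}" for i in range(100)]
synthetic_docs = [f"synthetic_{i:03d}" for i in range(100)]

training_sets = build_training_sets(real_docs, synthetic_docs)
for name, docs in training_sets.items():
    print(name, len(docs))
```

Whatever the sizing, all three models should be scored on the same held-out reports so that the metrics are directly comparable.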

Results: Stronger Models and Faster Timelines

Combining real and synthetic data produced the strongest model. The mixed model reached an F1 score of 0.94, outperforming the real-only (0.70) and synthetic-only (0.64) models. The mixed model’s higher precision in extracting clinical details, especially location terms, reduced manual correction time.
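As a reminder of what the F1 score measures, it is the harmonic mean of precision and recall. The sketch below computes it at the entity level with exact span-and-label matching. That matching rule is an assumption: the platform's built-in metrics may match or average differently, and the toy entities are hypothetical, not data from the study.

```python
from typing import Set, Tuple

# An entity is (start_token, end_token_exclusive, label).
Entity = Tuple[int, int, str]


def precision_recall_f1(
    gold: Set[Entity], predicted: Set[Entity]
) -> Tuple[float, float, float]:
    """Entity-level scores; a prediction counts only on an exact match."""
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical toy annotations: two of three predictions match the gold set.
gold = {(0, 2, "FINDING"), (4, 6, "LOCATION"), (9, 10, "SIZE")}
predicted = {(0, 2, "FINDING"), (4, 6, "LOCATION"), (7, 8, "SIZE")}

precision, recall, f1 = precision_recall_f1(gold, predicted)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Because F1 drops when either precision or recall is low, a model cannot score well by extracting everything or by extracting only the easiest entities.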

By pairing synthetic notes with a real dataset, Generative AI Lab eliminated the slow “cold start” phase. The unified no-code workspace removed the need to switch between applications and rely on manual handoffs. With everything in one place, the team built a reliable model in two days inside a secure environment that met privacy and audit requirements.

The full study is available in the article “Enhancing and Not Replacing Clinical Expertise: Improving Named-Entity Recognition in Colonoscopy Reports Through Mixed Real–Synthetic Training Sources.”

The Results Speak for Themselves

With Generative AI Lab, you get a single workspace that lets you focus on the project itself, reduces reliance on engineering support, and meets strict regulatory and compliance needs.

Get to a Production-Ready Model Faster

The first pass is automated, so reviewers can spend their time where critical judgment is needed. AI-powered annotation, active learning, and in-browser training overcome the initial hurdle and shorten review cycles. Zero-shot prompts and built-in evaluation keep everything in one place.

Lower Dependence on Engineering and Data Science

A guided setup and reusable templates allow clinical and compliance teams to label, prompt, train, and test directly within the user interface. A shared Model and Prompt Hub facilitates one-click reuse, enabling engineers and data scientists to concentrate on advanced model tuning and deployment.

Built-In Privacy and Compliance

Patient data remains within your own environment. Private, on-premise execution of large language models (LLMs) is the default: external connectors stay disabled unless explicitly activated, and their use is logged once enabled. Versioned models and prompts, role-based access controls, and an append-only audit log provide complete traceability for every decision. These features support HIPAA and GDPR compliance and simplify audit processes.

One Unified Workspace

No need for separate tools across various data types. A single interface supports text, PDFs, images, audio, video, and HTML in over 250 languages. Enterprise features like Single Sign-On (SSO), Multi-Factor Authentication (MFA), and APIs enable scalability while maintaining consistency and reusability of assets.

Ready to Accelerate Your Next AI Project?

AI-powered annotation streamlines the journey from raw data to a reliable model. With Generative AI Lab, clinicians can work more efficiently in a secure, no-code environment, reducing wait times, minimizing handoffs, and creating models that withstand clinical and regulatory scrutiny.

Request a Generative AI Lab demo to see how pre-annotation, prompt-ops, and active learning speed up the delivery of real-world models.

Frequently Asked Questions

How does Generative AI Lab shorten project setup and onboarding?

Projects start immediately with templates, versioned schemas, and one-click model reuse in a no-code workspace. Zero-shot prompts and pre-annotation create a first draft on day one, so annotators edit a draft instead of labelling from scratch.

Which features cut review cycles and rework?

The platform provides AI pre-annotation with Spark NLP models to reduce manual effort, plus active learning that sends complex cases to experts. Built-in evaluation keeps iterations short and measurable while models improve in the background.
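To make the idea of sending complex cases to experts concrete, here is a minimal sketch of one common active-learning heuristic, uncertainty sampling. It is not a description of how Generative AI Lab selects tasks. The `route_for_review` helper, the 0.8 threshold, and the confidence values are all hypothetical.

```python
from typing import Dict, List, Tuple


def route_for_review(
    token_confidences: Dict[str, List[float]], threshold: float = 0.8
) -> Tuple[List[str], List[str]]:
    """Split pre-annotated documents by their least confident token.

    Documents whose lowest token confidence falls below the threshold go to
    experts, most uncertain first; the rest are accepted as drafts.
    """
    lowest = {
        doc_id: min(scores) if scores else 0.0  # no scores: treat as uncertain
        for doc_id, scores in token_confidences.items()
    }
    needs_expert = sorted(
        (doc_id for doc_id in lowest if lowest[doc_id] < threshold),
        key=lowest.get,
    )
    auto_accept = [doc_id for doc_id in lowest if lowest[doc_id] >= threshold]
    return needs_expert, auto_accept


# Hypothetical model confidences per token for three pre-annotated reports.
confidences = {
    "report_a": [0.99, 0.95],
    "report_b": [0.97, 0.41],
    "report_c": [0.88, 0.62],
}

needs_expert, auto_accept = route_for_review(confidences)
print("expert review:", needs_expert)
print("auto-accepted drafts:", auto_accept)
```

Ranking by the weakest token means a single doubtful entity is enough to put a report in front of an expert, which suits clinical text where one missed term can matter.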

Which deployment option is fastest?

AWS or Azure marketplace deployment installs quickly inside the organization’s VPC, using existing SSO and MFA. External connectors remain off by default, keeping PHI secure.