Our expert data science team worked around the clock to deliver the major release of Spark OCR 3.0 at the same time of our Spark NLP for Healthcare 3.0.

Spark OCR 3.0 is a commercial extension of Spark NLP for visual document understanding, including support for optical character recognition from PDF and scanned PDF documents (OCR PDF) and machine-readable DOCX, DICOM medical imaging files, and images. This release expands the library’s applicability in to significant areas: support for deep-learning based multi-modal learning, and full support for the Spark 3.x ecosystem and the implied performance boost.

Visual Document Understanding: Combining NLP and Computer Vision

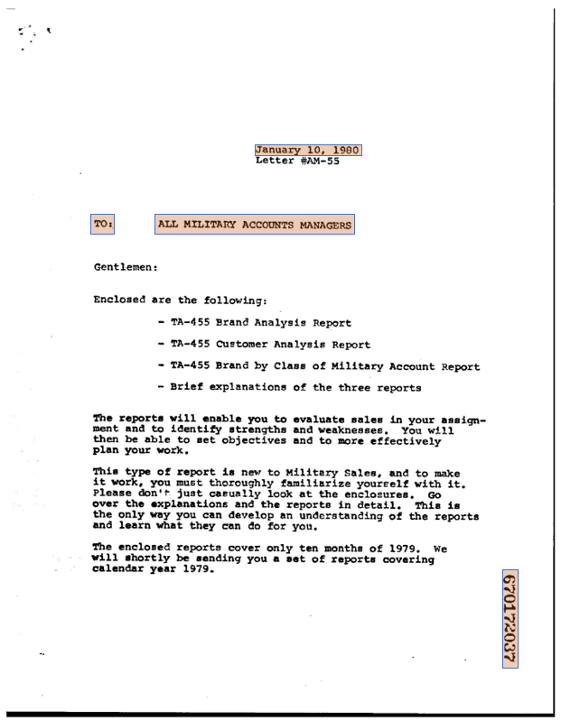

Consider the scanned image at the top of this blog post. The two most common tasks required for such documents, according to the most recent NLP Industry Survey, are:

- Document Classification: This means identifying that this image is a letter, in contrast to being an invoice or a resume, for example. The signals that this is a letter come from both the text in the image – like the date at the top and the word “Letter” right below it – as well as visual cues. The visual cues are the fact that the date and the word ‘Letter’ are at the top (and not somewhere within the main paragraphs), and the fact that there’s a header line starting with “To:” towards the top of the text.

- Named Entity Recognition (or more broadly Information Extraction): This means extracting structured facts from the image such as the letter’s date (at the top right), numeric identifier (the vertically aligned number on the bottom right), and the intended recipients (center top right after the ‘To:’ label). Note that in this case too, both the text in and around the fact to extract as well as its visual location in the text are important in order to accurately identify it.

Traditional OCR solutions first translate such an image to digital text – and then use NLP models to classify or extract information from that text. This often works but loses the visual information in the original image – for example, the difference between “look for text formatted as a date” and “look for text formatted as a date, which appears around the top right of the page”.

Machine learning techniques which can take advantage of different modalities of data – in this case, combining natural language processing and computer vision – are called multi-modal learning techniques. Spark OCR 3.0 provides the new VisualDocumentClassifier which is a trainable, scalable, and highly accurate multi-modal document classifier. It is based on the approach and deep-learning architecture proposed in LayoutLM.

Support for VisualDocumentNER (for multi-modal named entity recognition from visual documents) is underway and will be added in a future release.

Scalability & Platform Integrations

Spark OCR 3.0 welcomes 9 new Databricks runtimes that are now fully tested & supported:

- Databricks 7.3

- Databricks 7.3 ML GPU

- Databricks 7.4

- Databricks 7.4 ML GPU

- Databricks 7.5

- Databricks 7.5 ML GPU

- Databricks 7.6

- Databricks 7.6 ML GPU

- Databricks 8.0

- Databricks 8.0 ML (there is no GPU in 8.0)

- Databricks 8.1 Beta

In addition, this latest release welcomes 2 new EMR 6.x series to the Spark NLP family:

- EMR 6.1.0 (Apache Spark 3.0.0 / Hadoop 3.2.1)

- EMR 6.2.0 (Apache Spark 3.0.1 / Hadoop 3.2.1)

Spark OCR 3.0 supports all the last four major Apache Spark versions: 2.3.x, 2.4.x, 3.0.x, and 3.1.x.

Support for Apache Spark 3.0.x and 3.1.x is new in this release, along with support for Scala 2.12 with both Hadoop 2.7. and 3.2. Both CPU and GPU optimized builds are available.

The entire functionality of the Spark OCR library has been upgraded and tested on all platforms:

- Image pre-processing algorithms to improve text recognition results:

-

- Adaptive thresholding & denoising

- Skew detection & correction

- Adaptive scaling

- Layout Analysis & region detection

- Image cropping

- Removing background objects

- Text recognition, by combining NLP and OCR pipelines:

-

- Extracting text from images (optical character recognition)

- Support English, German, French, Spanish, Russian and Vietnamese languages

- Extracting data from tables

- Recognizing and highlighting named entities in PDF documents

- Masking sensitive text in order to de-identify images

- Output generation in different formats:

- PDF, images, or DICOM files with annotated or masked entities

- Digital text for downstream processing in Spark NLP or other libraries

- Structured data formats (JSON and CSV), as files or Spark data frames

- Scale out: distribute the OCR jobs across multiple nodes in a Spark cluster.

- Frictionless unification of OCR, NLP, ML & DL pipelines.

Getting Started

– Try Free here

– Live Demos here