The article explores text tokenization techniques in Spark NLP, focusing on the Tokenizer and RegexTokenizer annotators. It outlines the process of transforming raw text into meaningful tokens, demonstrating Python code for implementation. The Tokenizer annotator is discussed for basic tokenization, while RegexTokenizer is introduced for more complex scenarios using regular expressions. The article concludes by showcasing the TokenAssembler annotator, which combines tokens back into text. The examples illustrate practical applications in text preprocessing for downstream NLP tasks in Spark NLP pipelines.

Introduction

When working with text data for analysis or building machine learning models, one of the initial steps in the process is to transform the text into meaningful pieces to be used as features by the models. This step is called tokenization, which splits the text into pieces called tokens that can be words, characters, sentences, or other chunks of the text.

In this article, we will discuss some tokenization techniques and show how to apply them in the open-source library Spark NLP. More specifically, we will show how to use the following annotators of the library: Tokenizer and RegexTokenizer. In addition, we will show how to use the TokenAssembler annotator to join the tokens back into text (document annotations).

Tokenization in Spark NLP

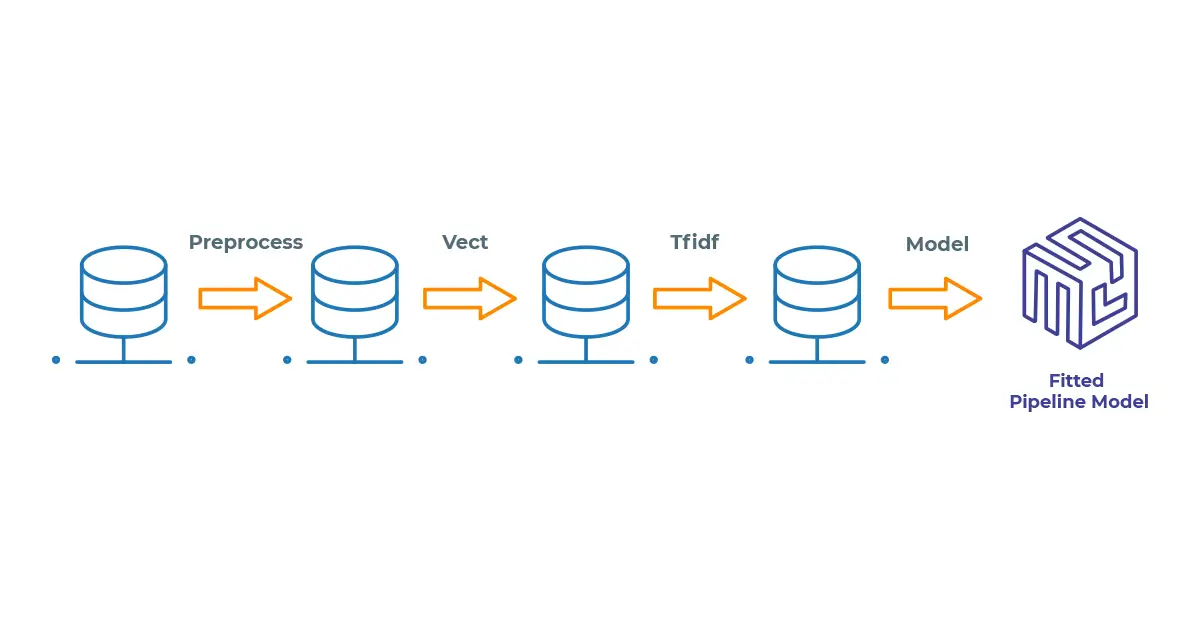

Spark NLP uses pipelines to process the data in Spark data frames, where each stage in the pipeline performs a specific task (tokenization, classification, identifying entities, etc.) and saves the resulting information in a new column of the data frame. This way, the data is distributed in the Spark cluster and processed stage by stage in a sequential manner.

Illustration of the Spark NLP pipeline

If you are not familiar with these concepts, you can check the official documentation or the Spark NLP Workshop Repository to see more examples in action.

Now, let’s see how to use the different annotators in Python.

Starting with the basic: the Tokenizer annotator

This tokenization annotator is the most used on the pipelines. It can be used simply to split text into words (by white space), but it has the capability to do much more than that. In this article, we show the basic usage of the annotator, but you can follow the documentation and workshop examples for more examples and advanced usage.

First, we must import the required packages and start the Spark session.

import sparknlp from sparknlp.base import DocumentAssembler, Pipeline from sparknlp.annotator import Tokenizer spark = sparknlp.start()

We will use the DocumentAssembler as the initial transformation of the raw text into a document annotation that is expected by the Tokenizer annotator. Also, we use the Pipeline to create a Spark pipeline to process the two stages in sequence. Finally, the Tokenizer will split the texts into tokens.

documenter = (

DocumentAssembler().setInputCol("text").setOutputCol("document")

)

tokenizer = (

Tokenizer()

.setInputCols(["document"])

.setOutputCol("token")

)

empty_df = spark.createDataFrame([[""]]).toDF("text")

nlpPipeline = Pipeline(stages=[documenter, tokenizer]).fit(empty_df)

Now, we can use it on Spark data frames to make predictions. Let’s give a simple example. Note that we fitted the pipeline in an empty data frame; this is to follow the Spark ML concepts of transformers and estimators.

text = "Peter Parker (Spiderman) is a nice guy and lives in New York."

spark_df = spark.createDataFrame([[text]]).toDF("text")

Now, to obtain the tokens, we simply use the pipeline to transform this data.

result = nlpPipeline.transform(spark_df)

result.selectExpr("token.result as tokens").show(truncate=False)

The output will be a table with:

+--------------------------------------------------------------------------------+ |tokens | +--------------------------------------------------------------------------------+ |[Peter, Parker, (, Spiderman, ), is, a, nice, guy, and, lives, in, New, York, .]| +--------------------------------------------------------------------------------+

We can see that by default the Tokenizer will split by white space and separate the punctuation into tokens, which is the default tokenization in most NLP applications. We can extend the annotator by setting additional parameters to control the splitting criteria and the length of each token. for example, let’s remove tokens with less than 3 characters and split them by white space only.

tokenizer.setSplitChars([" ", "-"]).setMinLength(3)

# Update the pipeline

nlpPipeline = Pipeline(stages=[documenter, tokenizer]).fit(empty_df)

new_example = spark.createDataFrame(

[["Hi, please read my e-mail (urgent!)"]]

).toDF("text")

nlpPipeline.transform(new_example).selectExpr(

"token.result as tokens"

).show(truncate=False)

Obtaining:

+----------------------------+ |tokens | +----------------------------+ |[please, read, mail, urgent]| +----------------------------+

This time, the word e-mail was tokenized as [e, mail], but the first token was removed due to the restriction of at least 3 characters in the token.

Expanding with RegexTokenizer

The RegexTokenizer extends the capabilities of the Tokenizer annotator by using regular expressions to split the tokens. When working with complex texts, this can be useful to keep valuable information in your pipeline.

from sparknlp.annotator import RegexTokenizer

example = "1. The investments made reached a value of $4.5 Million, gaining 85.6% on DATE**[24/12/2022]."

df = spark.createDataFrame([[example]]).toDF("text")

pattern = r'(\s+)|(?=[\[\]\*])|(?<=[\[\]\*])'

regexTokenizer = (

RegexTokenizer()

.setInputCols(["document"])

.setOutputCol("regexToken")

.setPattern(pattern)

.setToLowercase(True)

)

The pattern looks complex, but let’s break it down:

- The

(\s+)part will match one or more whitespace characters. - The

(?=[\[\]\*])part will match a position in the input string where the next character is one of[, ], or*, without actually consuming the characters. - The

(?<=[\[\]\*])part will match a position in the input string where the preceding character is one of[, ], or*, without actually consuming the characters.

The first group is the usual tokenization by words (sequential characters until a white space is reached), whereas the second and third groups help to modify the text by splitting the DATE**[24/12/2022] part. So, let’s apply it to the example:

nlpPipeline = Pipeline().setStages(

[documenter, regexTokenizer]

).fit(empty_df)

result = nlpPipeline.transform(df)

result.selectExpr(

"regexToken.result as tokens"

).show(truncate=False)

Obtaining:

+------------------------------------------------------------------------------------------------------------------------+ |tokens | +------------------------------------------------------------------------------------------------------------------------+ |[1., the, investments, made, reached, a, value, of, $4.5, million,, gaining, 85.6%, on, date, *, *, [, 24/12/2022, ], .]| +------------------------------------------------------------------------------------------------------------------------+

We were able to split the text into the relevant tokens and transform them into lowercase.

Merging tokens back with TokenAssembler

We saw how to split texts into tokens; now, we will see how to join them back to full texts using the TokenAssembler annotator. This process can be helpful when you need to make simple text preprocessing on your data and then use the obtained text for a downstream NLP task such as text classification.

Let’s see how to do that. We will work on the previous example but will also remove smaller tokens to obtain a filtered array of tokens that will be merged back into a text in a document annotation. In addition, we will add an extra text cleaning stage to apply Stemming on the tokens.

from sparknlp.base import TokenAssembler

from sparknlp.annotator import Stemmer

regexTokenizer.setMinLength(4)

stemmer = Stemmer().setInputCols(["regexToken"]).setOutputCol("stem")

tokenAssembler = (

TokenAssembler()

.setInputCols(["document", "stem"])

.setOutputCol("cleanText")

)

We will remove tokens with less than 5 characters and transform them to their stem format. The final step will be to merge the stems back into a single text.

nlpPipeline = Pipeline().setStages(

[documenter, regexTokenizer, stemmer, tokenAssembler]

).fit(empty_df)

result = nlpPipeline.transform(df)

result.selectExpr(

"text as originalText", "cleanText.result as clenText"

).show(truncate=False)

Obtaining:

This text can now be used on downstream tasks.

Conclusion

We saw how to use two different annotators from Spark NLP to perform tokenization in text data. These annotators can be used on simple and complex text data to split it into meaningful pieces that can be used on downstream NLP tasks.

The Tokenizer annotator is very powerful but can also be used to simply split texts into words, while the RegexTokenizer can extend its use with regular expressions.

Finally, we saw how to merge the tokens back into a text by using the TokenAssembler annotator. In some NLP tasks, we use tokens as input (e.g., named entity recognition), but full texts can also be used (e.g., text classification).

By combining different stages in a Spark NLP pipeline, we can use different annotators to transform the text as needed.