In Part 1 – Test Suites and Part 2 – Generating Tests of this article, we showed how Generative AI Lab enables teams of domain experts to train, test, and refine custom language models for production use across a range of common tasks, all without writing any code.

Generative AI Lab supports advanced capabilities for test case generation, test execution, and model testing across various categories by integrating John Snow Labs’ LangTest framework.

In this article, we focus on test execution and on exploring test results in Generative AI Lab.

Test Execution

When “Start Testing” is clicked, model testing commences based on the generated test cases and the configured test settings. To view the test logs, click on “Show Logs”. The testing process can be halted by clicking on “Stop Testing”. If no test cases have been generated, the “Start Testing” option will be disabled, preventing the user from initiating testing.

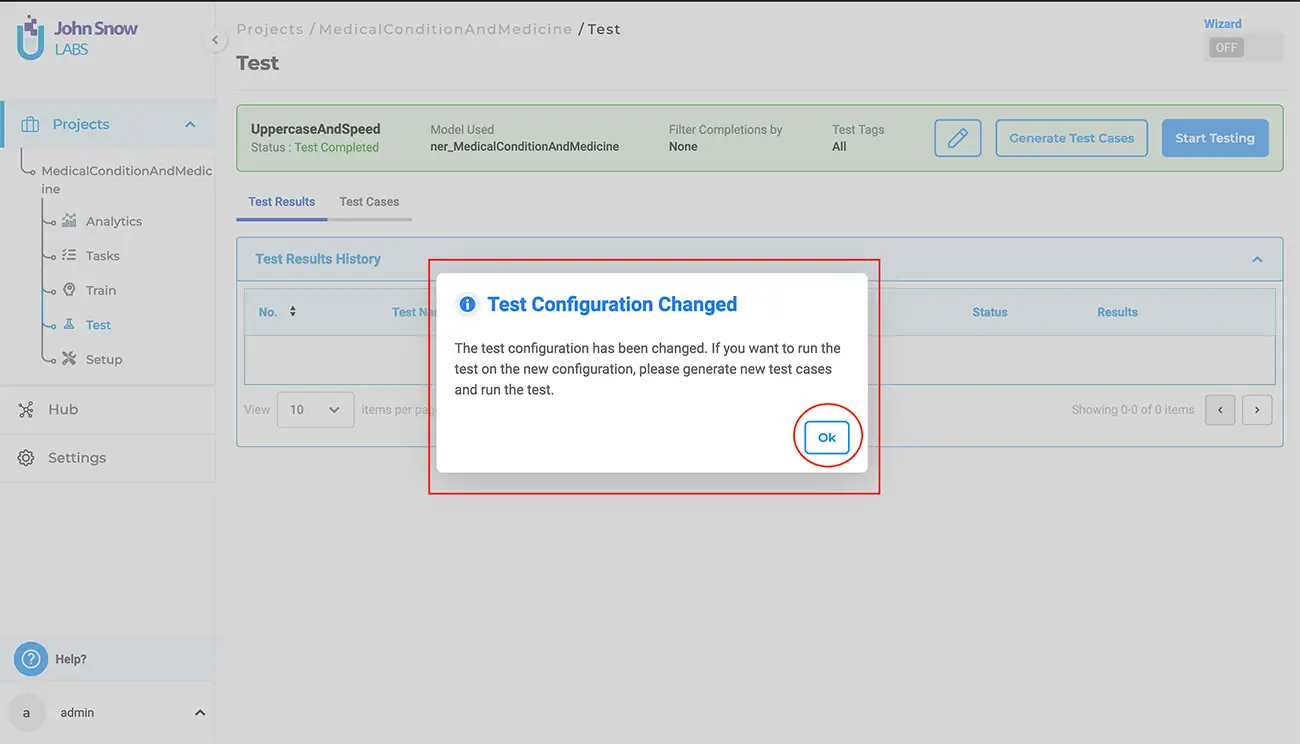

If the Test Settings have been changed since the test cases were generated, clicking on “Start Testing” triggers a pop-up notification informing the user of the configuration change. To proceed with model testing, the user must either restore the Test Settings and Parameters used for test case generation or generate new test cases based on the updated configuration.

View and Delete Test Results

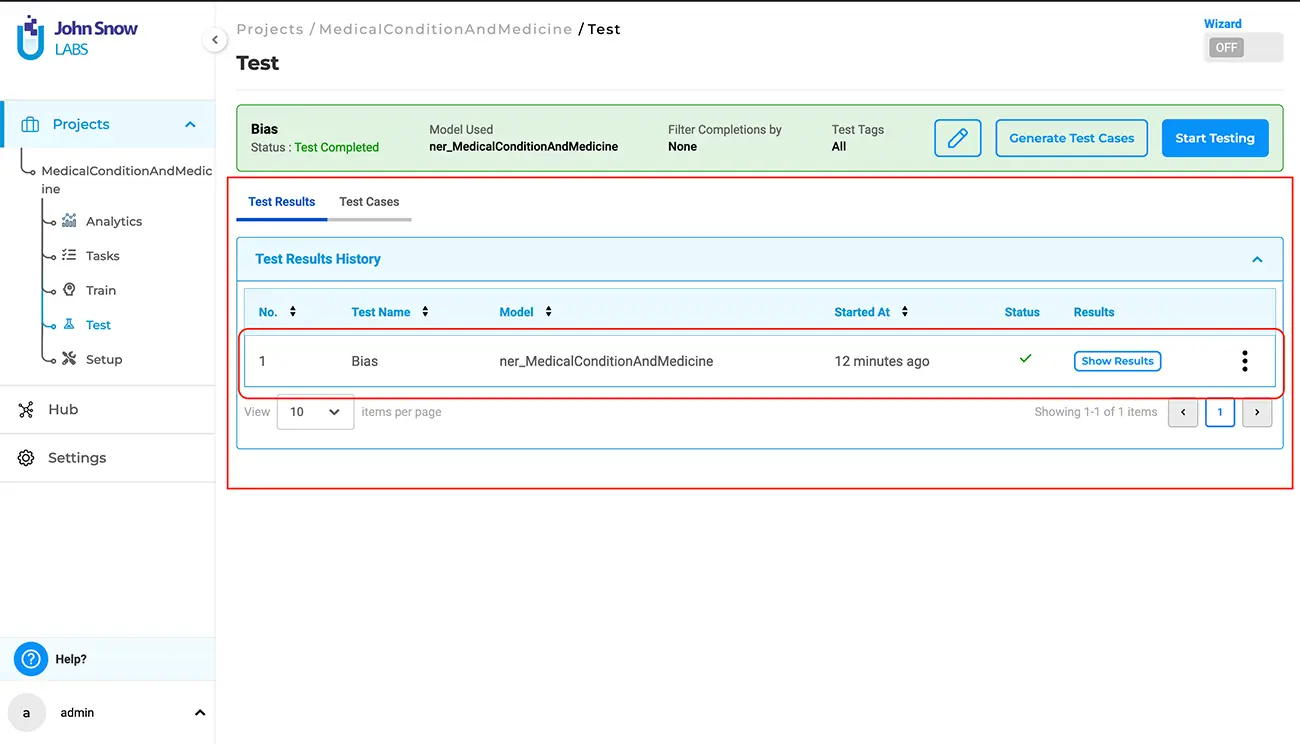

Once the execution of language model testing is complete, users can access the test results via the “Test Results History” section in the “Test Results” tab.

Under this tab, the application lists every test run previously conducted for the project, along with its results.

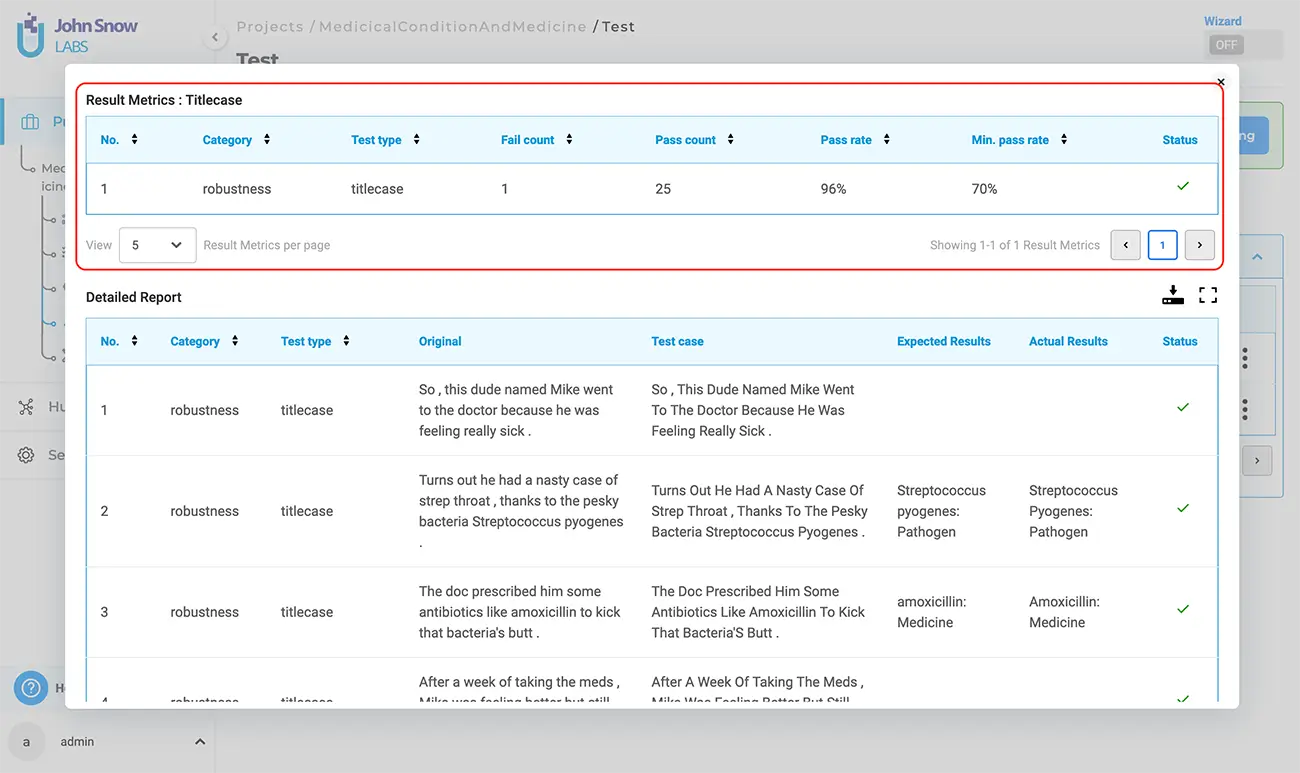

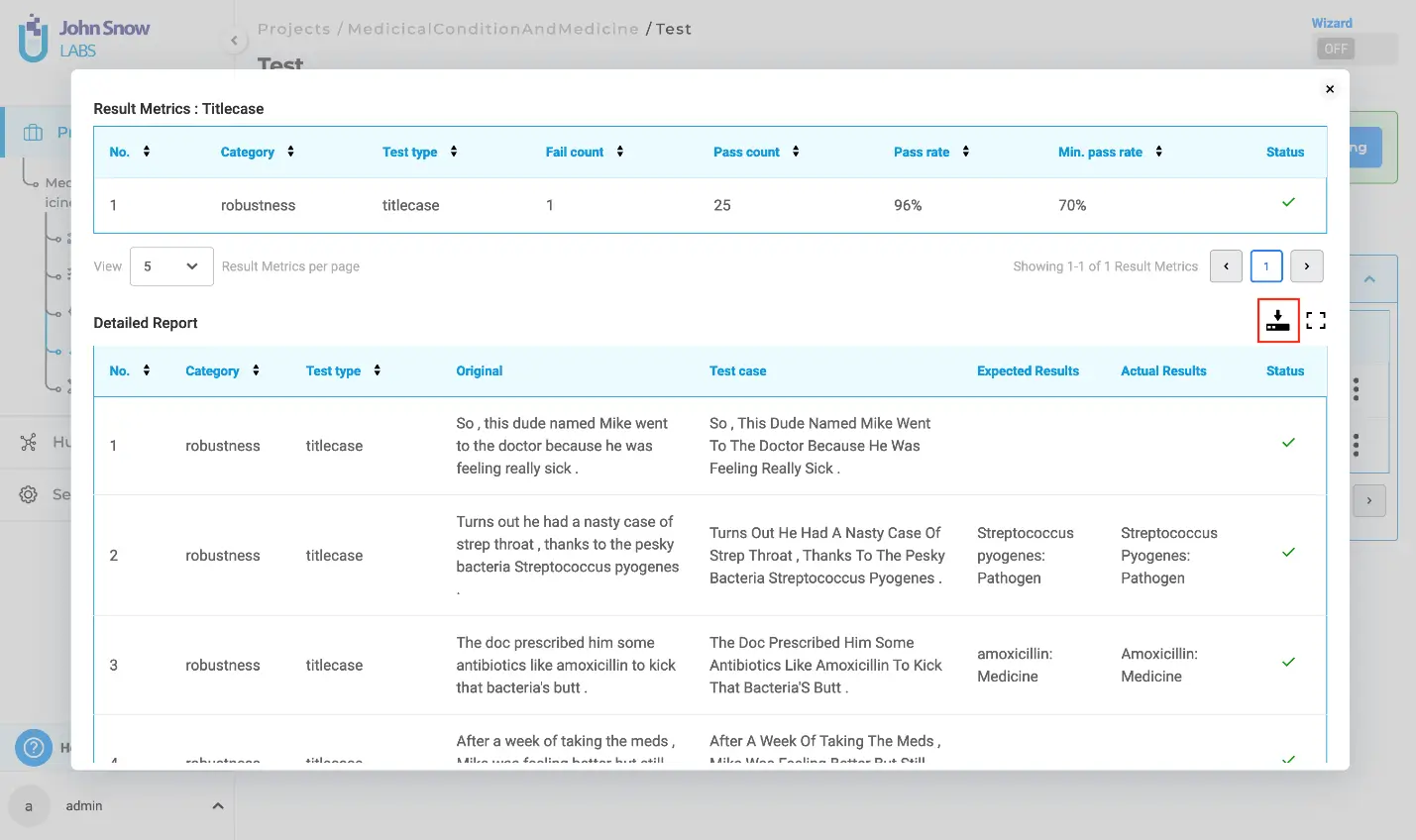

Clicking on “Show Results” will display the results for the selected test execution run. The test results consist of two reports:

1. Result Metrics

This section of the results provides a summary of all tests performed, including their status. It includes details such as “Number”, “Category”, “Test Type”, “Fail Count”, “Pass Count”, “Pass Rate”, “Minimum Pass Rate” and “Status”.

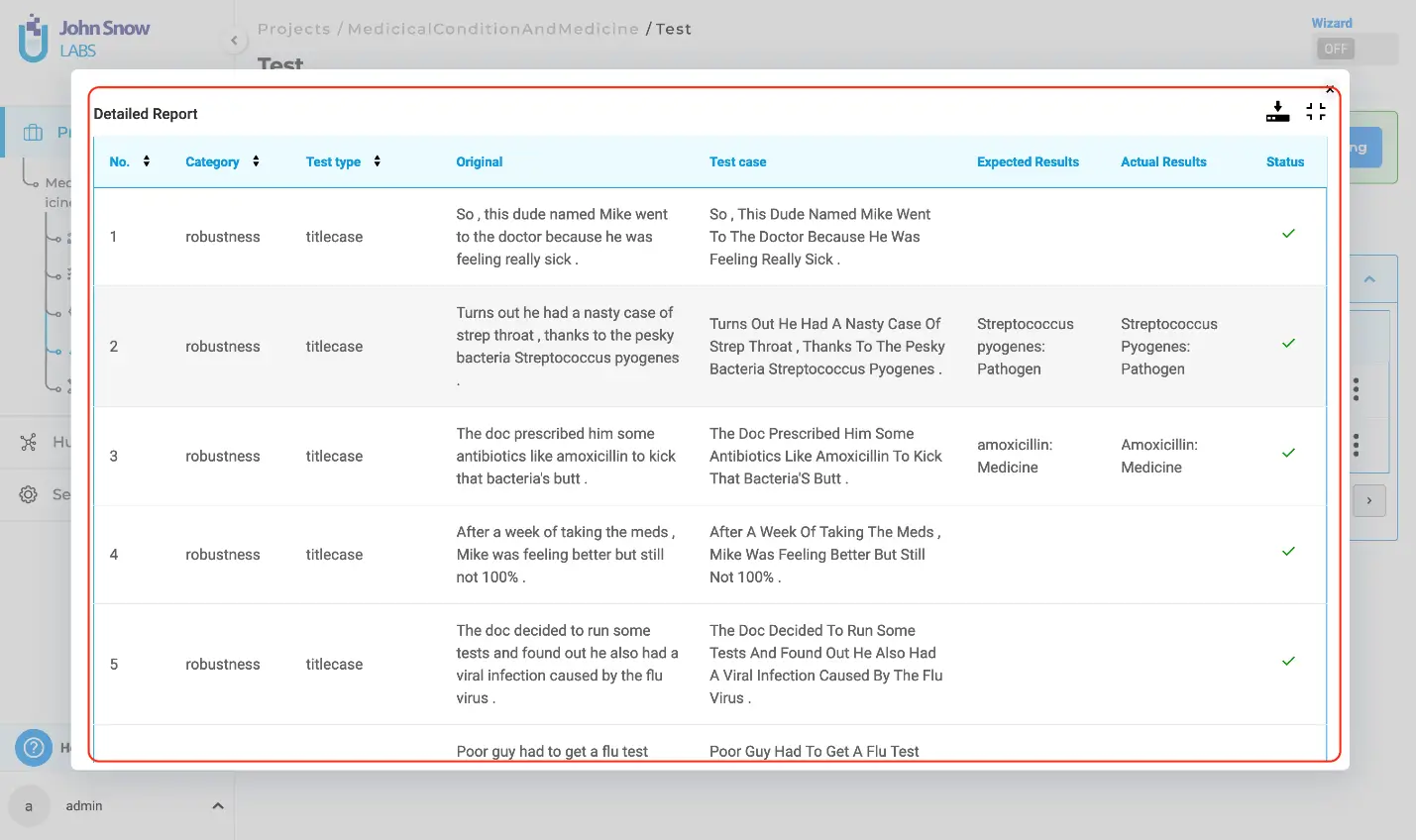
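To make the relationship between the Result Metrics columns concrete, here is a minimal Python sketch of how one summary row could be derived. The `MetricRow` class is hypothetical and not part of Generative AI Lab. The rule that a test type passes when its pass rate is at least the minimum pass rate is an assumption based on how such thresholds are commonly applied, not something the application states explicitly.

```python
from dataclasses import dataclass


@dataclass
class MetricRow:
    """Hypothetical stand-in for one row of the Result Metrics report."""
    category: str
    test_type: str
    fail_count: int
    pass_count: int
    minimum_pass_rate: float  # expressed as a fraction between 0 and 1

    @property
    def pass_rate(self) -> float:
        # Share of test cases that passed; 0.0 when no test cases were run.
        total = self.pass_count + self.fail_count
        return self.pass_count / total if total else 0.0

    @property
    def status(self) -> str:
        # Assumption: the row passes when the pass rate reaches the threshold.
        return "Passed" if self.pass_rate >= self.minimum_pass_rate else "Failed"


# Illustrative values only, not taken from a real test run.
row = MetricRow("robustness", "uppercase", fail_count=2, pass_count=8,
                minimum_pass_rate=0.7)
print(row.test_type, f"{row.pass_rate:.0%}", row.status)
```

With these illustrative numbers, 8 of 10 test cases pass, so the row clears a 70% threshold. Raising the minimum pass rate above 80% would flip the same row to a failed status.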

2. Detailed report

The detailed report contains information about each test case within the selected tests. It includes “Number”, “Category”, “Test Type”, “Original”, “Test Case”, “Expected Results”, “Actual Results” and “Status”.

In this context, “Expected Results” refers to the model’s prediction on the “Original” data, while “Actual Results” refers to its prediction on the generated “Test Case” data. A test passes if the “Expected Results” match the “Actual Results”; otherwise, it fails.
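The pass/fail rule described above can be sketched in a few lines of Python. This is only an illustration of the comparison logic: `run_test_case` and `toy_predict` are hypothetical names, the toy model is a deliberately brittle keyword rule, and the exact-equality check is a simplifying assumption about what "match" means.

```python
from typing import Callable, Dict


def run_test_case(predict: Callable[[str], str], original: str,
                  test_case: str) -> Dict[str, str]:
    """Run the same model on the original and the generated text, then compare."""
    expected = predict(original)   # prediction on the "Original" data
    actual = predict(test_case)    # prediction on the generated "Test Case" data
    return {
        "Original": original,
        "Test Case": test_case,
        "Expected Results": expected,
        "Actual Results": actual,
        # Assumption: "match" is treated as exact equality of the two predictions.
        "Status": "Passed" if expected == actual else "Failed",
    }


def toy_predict(text: str) -> str:
    # Hypothetical, case-sensitive keyword classifier used only for illustration.
    return "positive" if "good" in text else "negative"


result = run_test_case(toy_predict, "The service was good.",
                       "THE SERVICE WAS GOOD.")
print(result["Status"])
```

Because the toy classifier is case-sensitive, its prediction changes when the input is uppercased, and the test case is marked as failed. Note that neither result is compared against a human-provided label; the test only checks whether the model is consistent between the original and the generated text.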

Users can download both reports together in CSV format by clicking the download button.

Furthermore, users can delete test results from the “Test Results History” by selecting the three dots followed by the “Delete” button.
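Once downloaded, the CSV reports can be analyzed outside the application. The sketch below counts failed test cases per category and test type using only the Python standard library. It assumes that the detailed report's CSV headers match the column names shown in the interface and that failed rows carry the status "Failed"; both should be checked against an actual export. The sample rows are invented for illustration.

```python
import csv
import io
from collections import Counter

# Invented sample mimicking the detailed report; real exports may differ.
SAMPLE_CSV = """Number,Category,Test Type,Original,Test Case,Expected Results,Actual Results,Status
1,robustness,uppercase,The service was good.,THE SERVICE WAS GOOD.,positive,negative,Failed
2,robustness,uppercase,I liked it.,I LIKED IT.,positive,positive,Passed
3,robustness,add_typo,The service was good.,The servcie was good.,positive,negative,Failed
"""


def count_failures(csv_file) -> Counter:
    """Count failed rows per (Category, Test Type) in a detailed report."""
    reader = csv.DictReader(csv_file)
    return Counter(
        (row["Category"], row["Test Type"])
        for row in reader
        # Assumption: failed test cases are labeled "Failed" in the Status column.
        if row["Status"].strip().lower() == "failed"
    )


failures = count_failures(io.StringIO(SAMPLE_CSV))
for (category, test_type), count in failures.items():
    print(category, test_type, count)
```

To run this against a real export, replace the `io.StringIO(SAMPLE_CSV)` argument with a file opened via `open(path, newline="", encoding="utf-8")`, where `path` points to the downloaded detailed report.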

Getting Started is Easy

Generative AI Lab is a text annotation tool that can be deployed in a couple of clicks on either the Amazon or Azure cloud, or installed on-premises with a one-line Kubernetes script.

Get started here: https://nlp.johnsnowlabs.com/docs/en/alab/install