Medical AI projects routinely deal with scanned documents and images that contain sensitive patient information. Extracting insights from these visuals is crucial, but so is protecting patient privacy. Traditionally, teams had to manually redact or blur Protected Health Information (PHI) in images and PDF files, a time-consuming process prone to human error. Generative AI Lab now provides an integrated visual de-identification workflow for anonymizing medical documents. Users can import files, automatically detect and mask patient identifiers using AI, review the results with live previews, and export only the anonymized documents, all within a single platform. The result is faster, more reliable de-identification of medical documents that supports compliance without disrupting the annotation workflow.

End-to-End De-Identification from Import to Export

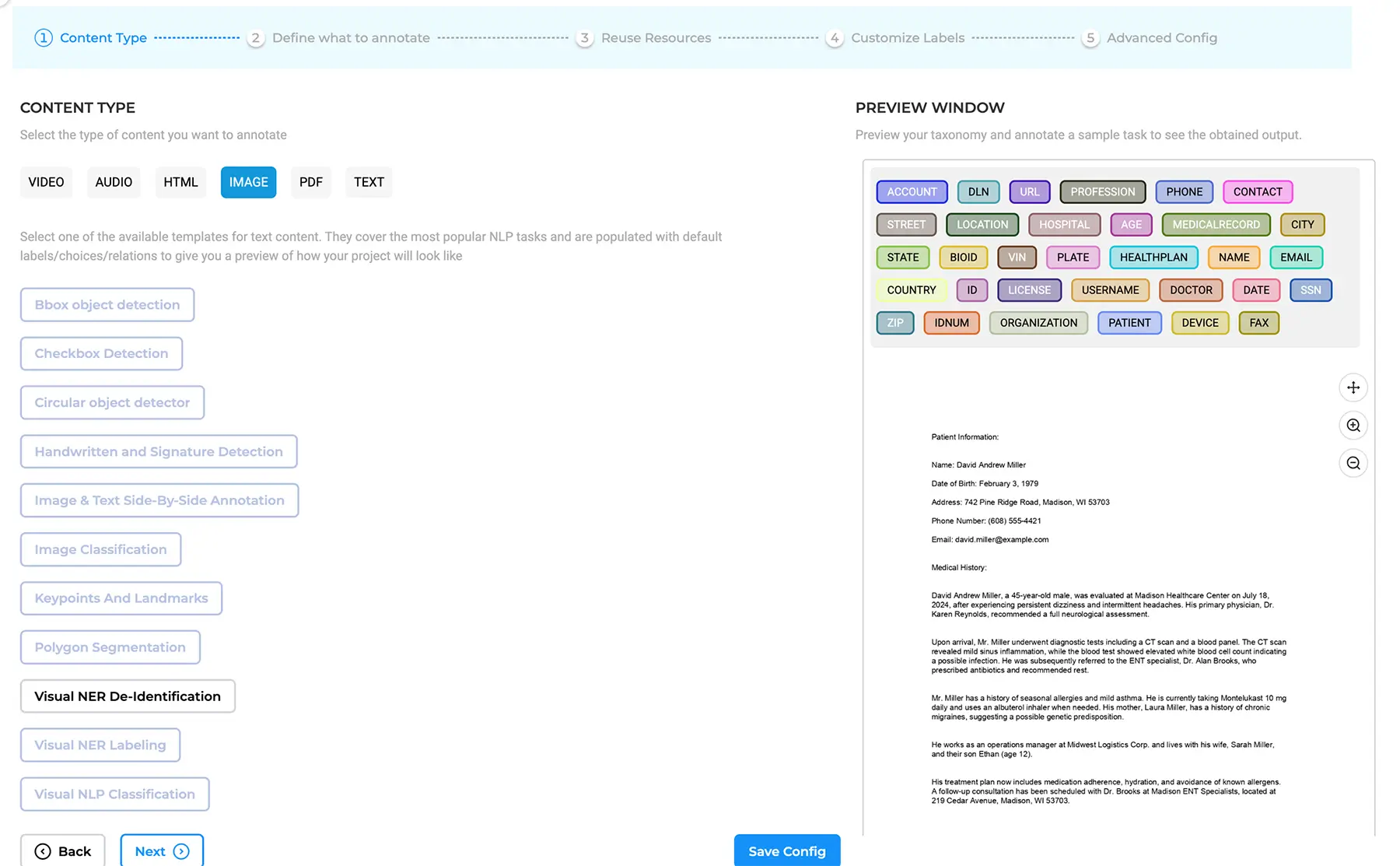

At the core of this feature is a dedicated Visual NER De-Identification project type. Choosing it when creating a project applies de-identification consistently at every stage of the workflow: dataset import, OCR pre-processing, annotation review, and final export. In practice, as soon as you import images or PDFs, the system is ready to flag and anonymize any detected PHI. During AI-assisted annotation (pre-annotation), personal data such as names or IDs is identified and marked for masking. In the review interface, those masks carry through so you can validate them, and on export you can choose to include only the de-identified versions of documents. Because sensitive entities are replaced or obscured throughout the workflow, the original PHI is not exposed in the outputs the project produces. Teams can therefore process visual documents containing patient data in line with strict healthcare privacy requirements such as HIPAA.
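To make the masking step concrete, here is a minimal sketch of how detected PHI can be obscured in an OCR-processed page image. It assumes that OCR yields tokens with pixel bounding boxes and that pre-annotation has attached a PHI label to some of them. `OcrToken` and `mask_phi` are hypothetical names used only for illustration, not part of the Generative AI Lab API, and the page is modeled as a plain grayscale pixel grid so the example needs no imaging library.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class OcrToken:
    """Hypothetical OCR output: one word and its pixel bounding box."""
    text: str
    box: Tuple[int, int, int, int]   # (left, top, right, bottom); right/bottom exclusive
    label: Optional[str] = None      # PHI label from pre-annotation, e.g. "NAME"; None if not PHI


def mask_phi(image: List[List[int]], tokens: List[OcrToken], fill: int = 0) -> List[List[int]]:
    """Return a copy of a grayscale image with every PHI-labelled token box filled."""
    masked = [row[:] for row in image]  # copy so the original pixels stay untouched
    height = len(masked)
    width = len(masked[0]) if height else 0
    for tok in tokens:
        if tok.label is None:
            continue  # not PHI, leave visible
        left, top, right, bottom = tok.box
        # Clamp to the image so an oversized OCR box cannot raise IndexError.
        for y in range(max(0, top), min(height, bottom)):
            for x in range(max(0, left), min(width, right)):
                masked[y][x] = fill
    return masked


page = [[255] * 6 for _ in range(3)]  # tiny 6x3 all-white "page"
tokens = [
    OcrToken("John", (0, 0, 3, 2), label="NAME"),
    OcrToken("Admitted", (3, 0, 6, 1)),  # no label, so it stays visible
]
masked = mask_phi(page, tokens)
print(masked[0])  # [0, 0, 0, 255, 255, 255]
```

Copying the grid before filling keeps the source pixels intact, mirroring the idea that masking produces a separate de-identified output rather than overwriting the imported file.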

Flexible Anonymization with Models and Rules

Identifying sensitive information rarely follows a single universal pattern. In Generative AI Lab, de-identification can be configured in two distinct ways, depending on how much control and customization you need.

The first option is a clinical de-identification pipeline: a curated, optimized combination of models and resources designed specifically for PHI detection. It provides strong out-of-the-box performance for common healthcare identifiers such as patient names, dates, IDs, and addresses, along with other PHI entity types, and is recommended when you want reliable results with minimal configuration.

For teams that need more granular control, Generative AI Lab also supports configuring de-identification without the pipeline, by selecting individual NER models and rule-based detectors. In this mode, users combine pre-trained NLP models for entity recognition with built-in or custom rules for pattern-based identifiers such as phone numbers, emails, dates, or organization-specific ID formats. This approach is useful when working with highly specialized data or non-standard document formats.

Both options are supported, but the pipeline is the one optimized to balance coverage and accuracy across typical healthcare documents. Choosing between it and a custom model-and-rule configuration lets teams align de-identification with their data requirements while keeping the detection and masking of sensitive entities clear and predictable.
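The custom model-and-rule mode can be sketched as follows. This is a minimal illustration under stated assumptions, not the platform's implementation: the regular expressions (including the `MRN-` format) are hypothetical examples, the NER model's output is stubbed as a hand-written span, and overlapping detections are resolved with a simple leftmost-longest strategy before each entity is replaced by its label.

```python
import re
from typing import List, NamedTuple


class Span(NamedTuple):
    start: int   # character offset, inclusive
    end: int     # character offset, exclusive
    label: str   # entity type, e.g. "NAME" or "PHONE"


# Illustrative rules only; the MRN format is a hypothetical hospital-specific ID.
RULES = {
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "MRN": re.compile(r"\bMRN-\d{6}\b"),
}


def rule_spans(text: str) -> List[Span]:
    """Run every regex rule over the text and collect its matches as spans."""
    return [
        Span(m.start(), m.end(), label)
        for label, pattern in RULES.items()
        for m in pattern.finditer(text)
    ]


def merge_spans(spans: List[Span]) -> List[Span]:
    """Drop overlaps: scan left to right, preferring the longer span when two start together."""
    ordered = sorted(spans, key=lambda s: (s.start, -(s.end - s.start)))
    kept: List[Span] = []
    for span in ordered:
        if kept and span.start < kept[-1].end:
            continue  # overlaps a span that was already kept
        kept.append(span)
    return kept


def anonymize(text: str, spans: List[Span]) -> str:
    """Replace each kept span with a [LABEL] placeholder."""
    parts: List[str] = []
    cursor = 0
    for span in merge_spans(spans):
        parts.append(text[cursor:span.start])
        parts.append(f"[{span.label}]")
        cursor = span.end
    parts.append(text[cursor:])
    return "".join(parts)


text = "Jane Doe, MRN-004512, call 555-123-4567 or jane.doe@example.org"
model_spans = [Span(0, 8, "NAME")]  # stand-in for what an NER model might return
print(anonymize(text, model_spans + rule_spans(text)))
# [NAME], [MRN], call [PHONE] or [EMAIL]
```

Keeping model and rule detections as the same `Span` type is what makes them easy to combine: the merge step does not need to know which detector produced a given entity.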

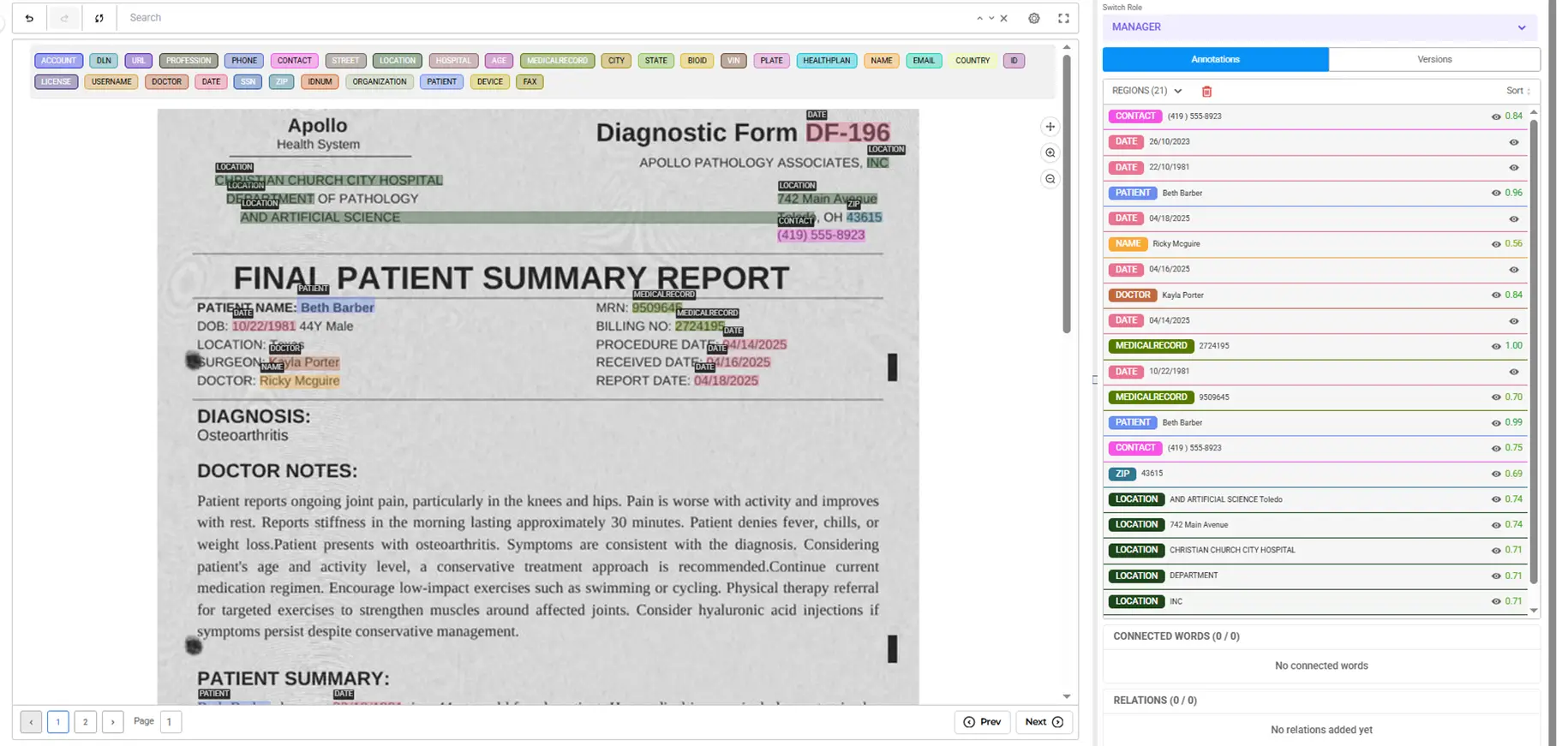

Seamless Review with Live Anonymization Preview

After the automated masking runs, Generative AI Lab provides a live preview of the de-identified document in the annotation UI. Reviewers can toggle a view to see exactly what each image or PDF will look like with all sensitive data hidden or replaced. Clear visual indicators highlight the anonymized content and what will be included in the safe output.

This immediate feedback loop lets you verify that the model did not miss any PHI. If it missed a field or masked something incorrectly, you can adjust the annotation or add a label, then re-run the de-identification step; the preview reflects the updates immediately.

The ability to correct and re-run de-identification on the fly means you maintain high accuracy and don’t have to do any manual redaction outside the system. For example, a reviewer can confirm that a patient’s name is fully removed from a scanned admission form before approving that document for export. The live preview gives human experts confidence that the output is correct and privacy-compliant before the data leaves the platform.
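A reviewer's final check can also be expressed in code. The sketch below assumes the reviewer has a list of identifiers known to belong to the record (for example, the patient's name and MRN from the source system) and reports any that still appear in the OCR text of the de-identified output. `residual_phi` is a hypothetical helper for illustration, not a Generative AI Lab feature. Matching is case- and whitespace-insensitive and requires whole-token boundaries so that short identifiers do not match inside longer words.

```python
import re
from typing import List


def _normalize(s: str) -> str:
    # Lowercase and collapse runs of whitespace so "JOHN  SMITH" matches "John Smith".
    return re.sub(r"\s+", " ", s).strip().lower()


def residual_phi(deidentified_text: str, known_identifiers: List[str]) -> List[str]:
    """Return the known identifiers that still appear in the de-identified text (hypothetical helper)."""
    haystack = _normalize(deidentified_text)
    leaks = []
    for ident in known_identifiers:
        # (?<!\w) and (?!\w) require whole-token matches, so "Al" does not match inside "Allergies".
        pattern = r"(?<!\w)" + re.escape(_normalize(ident)) + r"(?!\w)"
        if re.search(pattern, haystack):
            leaks.append(ident)
    return leaks


ocr_text = "Patient [NAME] admitted; form signed by JOHN  SMITH"
print(residual_phi(ocr_text, ["John Smith", "MRN-004512"]))  # ['John Smith']
```

Here the name in the header was masked but a signature line was not, which is exactly the kind of miss the live preview is meant to surface before approval.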

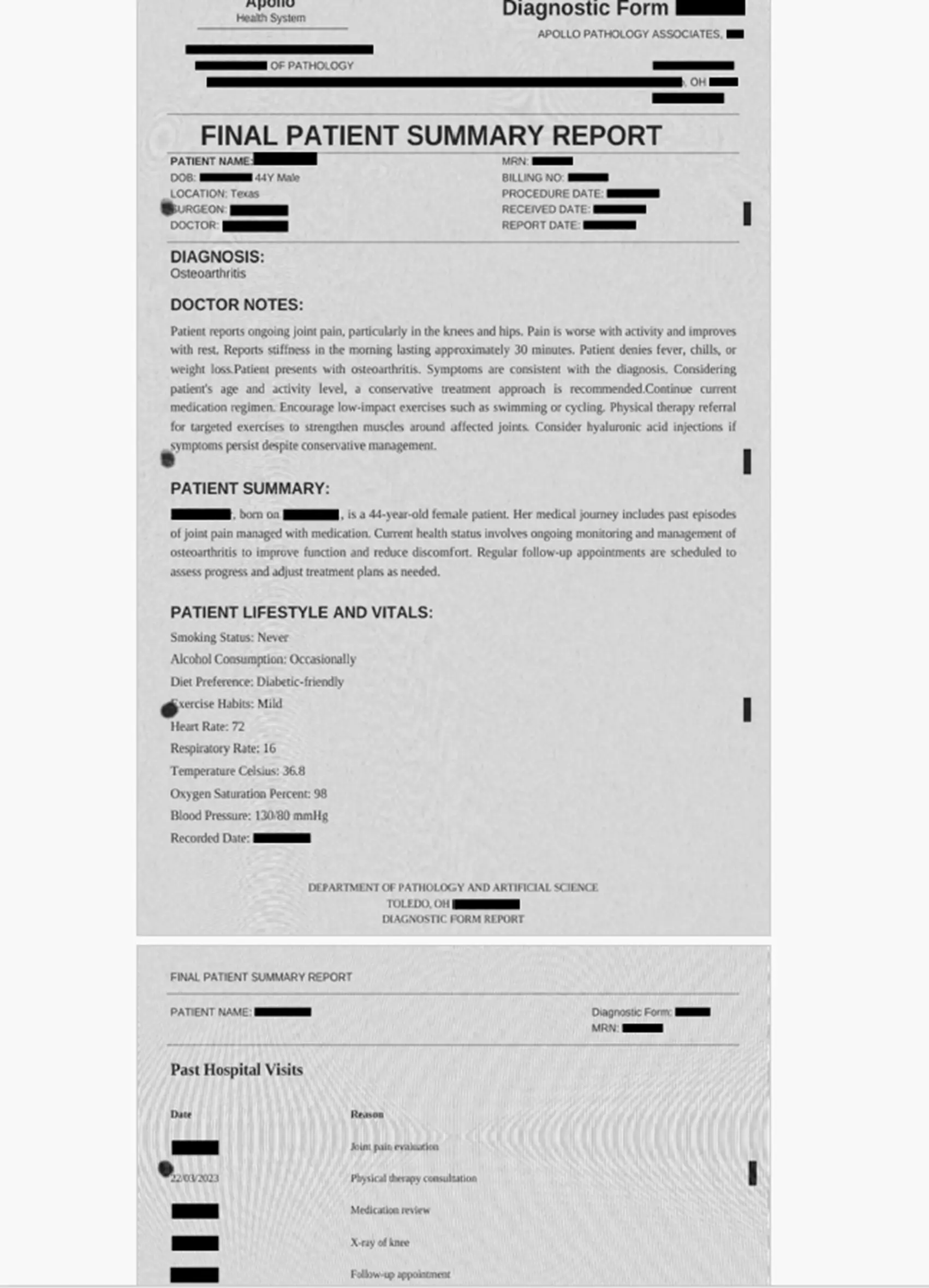

Safe, Compliant Outputs and Real-World Use Case

Once documents have been reviewed, exporting the anonymized dataset is straightforward. Generative AI Lab provides an “Export Only De-Identified” option so that only the sanitized images or PDFs are included in the output, and original files containing sensitive data are not inadvertently released. The exported files keep the format of the project type: an image-based project outputs image files with the PHI masked, and a PDF project outputs de-identified PDF files. Downstream teams can use these outputs directly for AI model training, data analysis, or sharing with partners, since the PHI has already been removed. This integrated approach adds a crucial layer of safety for healthcare AI initiatives: because de-identification is built into the workflow from the start, you no longer need separate redaction tools or manual checks before sharing data.
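The export rule described above can be sketched as a filter over project tasks. This assumes a hypothetical `TaskFile` record holding the original path, the de-identified output path (if any), and a reviewed flag. Gating on review status and checking that the output keeps the original file extension are illustrative assumptions, not documented behavior of the “Export Only De-Identified” option.

```python
from dataclasses import dataclass
from pathlib import PurePath
from typing import List, Optional


@dataclass
class TaskFile:
    """Hypothetical record for one document in a project."""
    original: str                 # path of the imported file (contains PHI)
    deidentified: Optional[str]   # path of the masked output, None if not produced yet
    reviewed: bool                # assumption: export requires reviewer approval


def export_manifest(tasks: List[TaskFile], only_deidentified: bool = True) -> List[str]:
    """Return the file paths that an export would include."""
    paths: List[str] = []
    for task in tasks:
        if not only_deidentified:
            paths.append(task.original)
            continue
        # Skip tasks without an approved de-identified output; never fall back to the original.
        if task.deidentified is None or not task.reviewed:
            continue
        # The output should keep the project's file format (image stays image, PDF stays PDF).
        if PurePath(task.deidentified).suffix.lower() != PurePath(task.original).suffix.lower():
            raise ValueError(f"format mismatch for {task.original}: {task.deidentified}")
        paths.append(task.deidentified)
    return paths


tasks = [
    TaskFile("scan1.pdf", "scan1_deid.pdf", reviewed=True),
    TaskFile("scan2.pdf", None, reviewed=False),              # not yet de-identified
    TaskFile("scan3.pdf", "scan3_deid.pdf", reviewed=False),  # awaiting review
]
print(export_manifest(tasks))  # ['scan1_deid.pdf']
```

The important design choice is that a task without an approved de-identified output is skipped rather than silently replaced by its original file.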

To illustrate the impact, consider a hospital compliance team that needs to prepare a batch of scanned patient records for a research project. By creating a Visual NER De-Identification project, the team can automatically mask patient names, ID numbers, and contact details across all of its scanned reports and intake forms. The platform’s clinical NLP models identify the sensitive text in each document, while custom rules catch hospital-specific identifiers. Reviewers then validate each masked document using the live preview, catching edge cases (such as a handwritten note or an unusual ID format) and adjusting as needed. Finally, the team exports the de-identified PDFs and shares them with the research partners, confident that no personal data remains. The end-to-end workflow saves many hours of manual scrubbing and keeps compliance checks in place at every step, helping the hospital meet its HIPAA obligations while still unlocking valuable data for analysis.

Conclusion

The new visual de-identification workflow in Generative AI Lab extends the platform’s human-in-the-loop AI capabilities into data privacy. By automating the tedious parts of anonymization and building privacy protection into the annotation process, it lets teams move faster without compromising patient confidentiality. From the moment a file is imported to the moment a project is exported, PHI is handled in a secure, standardized way. For healthcare and life science organizations, this means sensitive documents can be safely used for AI development, clinical research, or operational analytics, all on one platform that combines annotation and anonymization. Users can focus on extracting insights from medical documents while Generative AI Lab takes care of protecting the individuals behind that data.