Human-in-the-Loop (HITL) validation is critical to ensuring AI model outputs meet the highest standards of accuracy, compliance, and usability. Generative AI Lab is purpose-built to empower annotation teams to validate AI results efficiently and confidently. Our latest updates bring tangible improvements to how teams assign tasks, manage resources, and validate sensitive information, accelerating workflows, minimizing manual errors, and improving overall annotation quality.

Generative AI Lab offers smart task distribution, improved resource filtering, streamlined project resets, and more, all focused on maximizing your productivity and delivering smoother, more intuitive workflows.

Smarter Task Distribution: Bulk Assignment at Scale

Managing large volumes of annotation tasks is now easier and more efficient. Generative AI Lab introduces bulk task assignment, allowing users to assign up to 200 tasks at once to selected annotators.

Key Features

- Sequential or Random Assignment: Tasks can be distributed in order or randomly from the unassigned pool.

- Efficient Scaling: Reduces manual effort in large-scale projects, optimizing resource utilization.

User Benefits

Bulk assignment accelerates task distribution, improving operational efficiency and boosting annotation throughput, particularly for large-scale labeling operations.

Example

When launching a new medical NER project involving thousands of documents, project managers can now allocate tasks swiftly across teams without tedious manual work.

Bulk Assignment Process

- Click on the “Assign Task” button.

- Select the annotators to whom you wish to assign tasks.

- Specify the number of tasks to assign to each annotator.

- Choose how tasks are selected from the unassigned pool: sequentially or at random.

- Click on “Assign” to complete the process.

Notes

- The maximum number of tasks that can be assigned at once is 200.

- If the total number of unassigned tasks is less than the selected task count, all unassigned tasks will be assigned to the first selected annotator.
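The assignment rules above can be modeled in a few lines of Python. This is a hypothetical sketch, not Generative AI Lab's implementation or API: the function name `bulk_assign`, the integer task IDs, and the choice to apply the 200-task limit to the total requested (tasks per annotator times number of annotators) are assumptions. Filling annotators in the order they were selected reproduces the documented edge case, where a pool smaller than the per-annotator count goes entirely to the first annotator.

```python
import random
from typing import Dict, List, Optional

# Documented limit for one bulk assignment; applying it to the total
# requested across all annotators is an assumption of this sketch.
MAX_TASKS_PER_OPERATION = 200


def bulk_assign(
    unassigned: List[int],
    annotators: List[str],
    tasks_per_annotator: int,
    mode: str = "sequential",
    seed: Optional[int] = None,
) -> Dict[str, List[int]]:
    """Hypothetical model of bulk task assignment (not the product's code)."""
    requested = tasks_per_annotator * len(annotators)
    if requested > MAX_TASKS_PER_OPERATION:
        raise ValueError(f"at most {MAX_TASKS_PER_OPERATION} tasks per operation")

    if mode == "sequential":
        pool = list(unassigned)  # keep the original task order
    elif mode == "random":
        # Shuffle a copy of the unassigned pool; seed makes it reproducible.
        pool = random.Random(seed).sample(unassigned, len(unassigned))
    else:
        raise ValueError("mode must be 'sequential' or 'random'")

    assignment: Dict[str, List[int]] = {}
    # Fill annotators in selection order. If the pool is smaller than
    # tasks_per_annotator, the first annotator receives every task.
    for name in annotators:
        assignment[name] = pool[:tasks_per_annotator]
        pool = pool[tasks_per_annotator:]
    return assignment
```

For example, `bulk_assign([7, 8], ["ana", "ben"], 5)` returns `{"ana": [7, 8], "ben": []}`, matching the note about small unassigned pools.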

Only What You Need: Resource Filtering by Project Type

Generative AI Lab enhances the Reuse Resource interface by displaying only compatible models, prompts, and rules based on the selected project type.

Context-Aware Resource Display: The Reuse Resource list updates as soon as a project type is selected, so incompatible models, prompts, and rules are hidden.

Example: For Visual NER projects, only supported text NER models are shown. Unsupported resource types, such as assertion models, classification models, and OpenAI prompts, are hidden.

User Benefit: Simplifies setup, prevents errors caused by selecting unsupported resources, and speeds up project configuration.
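The filtering rule amounts to a lookup from project type to the resource kinds it supports. In this hypothetical Python sketch, the `Resource` type, the kind strings, and the `COMPATIBLE_KINDS` table are assumptions rather than Generative AI Lab's data model; only the Visual NER entry reflects the example above, and an unknown project type conservatively shows nothing.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Resource:
    name: str
    kind: str  # hypothetical kinds, e.g. "ner_model", "assertion_model", "openai_prompt"


# Hypothetical compatibility table: project type -> resource kinds it can reuse.
# Only the Visual NER row is based on the release notes.
COMPATIBLE_KINDS: Dict[str, Set[str]] = {
    "visual_ner": {"ner_model"},
}


def reusable_resources(project_type: str, resources: List[Resource]) -> List[Resource]:
    """Return only the resources compatible with the selected project type."""
    allowed = COMPATIBLE_KINDS.get(project_type, set())  # unknown type: show nothing
    return [r for r in resources if r.kind in allowed]
```

Keeping compatibility in a single table means supporting a new project type is a data change rather than new filtering logic.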

Effortless Project Resets: Bulk Task Deletion

Generative AI Lab offers a one-click bulk deletion option for project tasks. This enhancement eliminates the need to remove tasks individually, facilitating more efficient project management, especially during large-scale cleanups.

Feature Details

- User Interface Integration: Accessible directly from the project task page.

- Cautionary Control: Deletion is irreversible, so users should proceed only with clear intent.

User Benefit: Enables faster project resets, helping teams pivot or relaunch projects without tedious manual cleanups.

Note: Deleting tasks in bulk is irreversible. Before proceeding, make sure all necessary data is backed up or no longer needed.

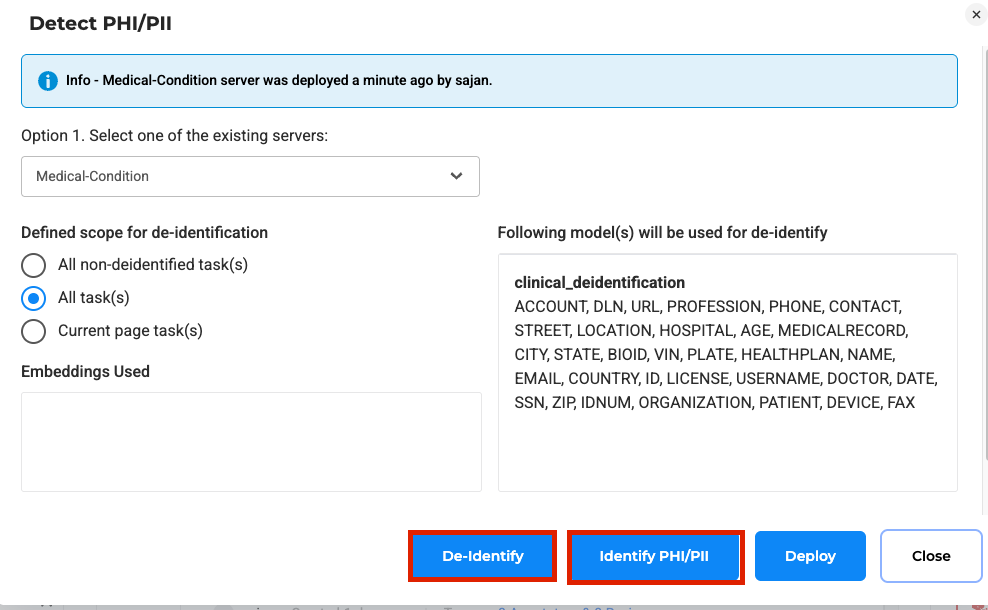

More Control, More Confidence: Split Actions for PHI De-Identification

Transparency and control are critical in handling sensitive data. De-identification workflows are now split into two distinct steps: identification and removal.

Key Features

- Two-Step Process: First identify PHI/PII, then review and finalize removal.

- Review and Adjust: Opportunity to verify and refine results before finalizing.

User Benefits

Offers full visibility and manual control, reducing errors and boosting confidence in sensitive data handling.

By splitting identification and de-identification into separate steps, the new process provides greater transparency and allows annotators to review and confirm all changes before finalizing the output.
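To make the split concrete, here is a minimal Python sketch of the two steps, assuming non-overlapping spans. The `identify` and `deidentify` functions, the `Span` type, and the two regular expressions are hypothetical stand-ins, not Generative AI Lab's API; a real project would rely on a trained PHI model rather than a toy pattern list. The design point mirrors the text: step 1 only reports candidate spans, and step 2 masks just the spans a reviewer approved.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Span:
    start: int
    end: int
    label: str


# Toy detectors for illustration only; not a real PHI model.
PATTERNS = {
    "DATE": re.compile(r"\b\d{2}/\d{2}/\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def identify(text: str) -> List[Span]:
    """Step 1: find candidate PHI spans without modifying the text."""
    spans = [
        Span(m.start(), m.end(), label)
        for label, pattern in PATTERNS.items()
        for m in pattern.finditer(text)
    ]
    return sorted(spans, key=lambda s: s.start)


def deidentify(text: str, approved: List[Span]) -> str:
    """Step 2: mask only reviewer-approved spans (assumed non-overlapping)."""
    out, cursor = [], 0
    for s in sorted(approved, key=lambda s: s.start):
        out.append(text[cursor:s.start])  # keep text before the span
        out.append(f"<{s.label}>")        # replace the span with its label
        cursor = s.end
    out.append(text[cursor:])
    return "".join(out)
```

With `text = "Seen 03/14/2023, call 555-123-4567."`, `identify(text)` returns a DATE span and a PHONE span. Approving both yields `"Seen <DATE>, call <PHONE>."`, while approving only the DATE span leaves the phone number untouched.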

Use Case Example

In healthcare compliance workflows, teams can ensure that PHI detection meets regulatory standards before any irreversible actions are taken.

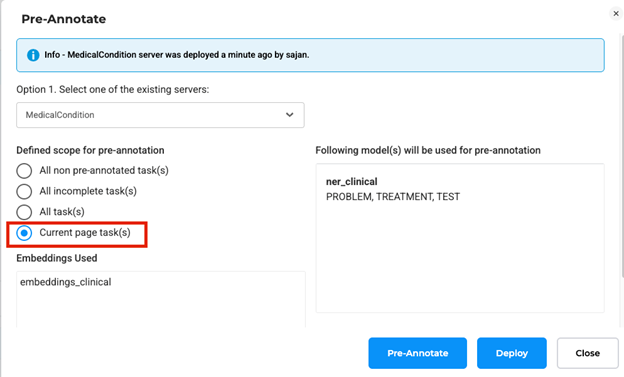

Stay Focused: Pre-Annotation Defaults to Current Page

Pre-annotation now defaults to tasks on the current page instead of the entire project. Previously, users had to manually select this option; now, it is the default behavior.

Key Features

- Error Prevention: Reduces the risk of accidentally pre-annotating full projects.

- Faster Processing: Limiting each run to the current page means it finishes sooner.

User Benefits

Speeds up task preparation while minimizing accidental errors during project setup.
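The new default can be pictured as a scope parameter whose default value is the current page. This Python sketch is illustrative only: `tasks_to_preannotate`, its `scope` values, and 1-indexed paging are hypothetical, not part of Generative AI Lab's interface. Running on the whole project requires an explicit opt-in, which is what guards against accidental full-project runs.

```python
from typing import List, Sequence


def tasks_to_preannotate(
    all_task_ids: Sequence[int],
    page: int,
    page_size: int,
    scope: str = "current_page",
) -> List[int]:
    """Hypothetical helper: select the tasks a pre-annotation run would cover.

    The default scope covers only the page in view; scope="project"
    must be passed explicitly to cover every task.
    """
    if scope == "project":
        return list(all_task_ids)
    if scope != "current_page":
        raise ValueError("scope must be 'current_page' or 'project'")
    start = (page - 1) * page_size  # pages are 1-indexed in this sketch
    return list(all_task_ids[start:start + page_size])
```

For a 100-task project viewed 25 tasks per page, calling it for page 2 with the default scope selects tasks 26 through 50 only.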

Seamless Zoom: Persistent PDF Zoom Levels

For projects involving PDF annotation, your preferred zoom level now persists across navigation actions within the same session.

Key Features

- Consistent Experience: Zoom levels stay intact through saves and page switches.

- Reset on Refresh: Zoom settings reset only when the page is manually refreshed.

User Benefits

Enhances comfort, reduces repetitive actions, and ensures uninterrupted workflow during detailed reviews of multi-page PDFs.

Color-Coded Clarity: Random Colors for Visual Builder Labels

When adding multiple labels in the Visual Taxonomy Builder, each label is now assigned a random color by default. Previously, labels added in bulk all received the same default color, which made them hard to tell apart during annotation and forced users to update each label's color manually.

Key Features

- Automatic Differentiation: Easier visual distinction between labels.

- Reduced Setup Time: No need to manually adjust colors.

User Benefits

Saves setup time and improves usability during annotation tasks.
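A minimal Python sketch of random label coloring follows. The function name and the hex-color format are assumptions, and the sketch goes one step beyond the text by also keeping colors distinct within a batch; the release notes only state that each label gets a random color. Passing a `seed` makes the output reproducible for testing.

```python
import random
from typing import Dict, List, Optional, Set


def assign_label_colors(labels: List[str], seed: Optional[int] = None) -> Dict[str, str]:
    """Give each new label a random hex color, distinct within the batch (hypothetical)."""
    rng = random.Random(seed)
    used: Set[str] = set()
    colors: Dict[str, str] = {}
    for label in labels:
        # Draw until the color is unused; collisions are rare among 16.7M colors.
        while True:
            color = f"#{rng.randrange(0x1000000):06x}"
            if color not in used:
                break
        used.add(color)
        colors[label] = color
    return colors
```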

Find It Fast: Auto-Expanded Labels in Page-Wise Annotation View

For HCC Coding projects, annotations are now organized page-by-page, with clicked labels auto-expanded in the sidebar.

Key Features

- Enhanced Navigation with Label Spotlighting: Clicking labeled text in the document highlights the corresponding annotation entry in the sidebar and expands it.

- Improved Efficiency: Streamlines navigation, improves editing accuracy, and simplifies the review of complex multi-page documents.

User Benefits

Enhances accuracy during reviews and makes handling complex documents significantly more intuitive.
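The page-wise view boils down to grouping annotations by page and tracking which sidebar entries are expanded. In this hypothetical Python sketch, the `Annotation` fields and both function names are assumptions, as is the choice that clicking one label leaves other expanded entries open; the text only says the clicked entry expands.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Annotation:
    id: str
    page: int
    label: str
    text: str


def group_by_page(annotations: List[Annotation]) -> Dict[int, List[Annotation]]:
    """Organize annotations page by page, in ascending page order."""
    pages: Dict[int, List[Annotation]] = defaultdict(list)
    for a in annotations:
        pages[a.page].append(a)  # preserves original order within a page
    return dict(sorted(pages.items()))


def on_label_click(expanded: Set[str], clicked: Annotation) -> Set[str]:
    """Expand the clicked annotation's entry; other entries keep their state (assumed)."""
    return expanded | {clicked.id}
```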

Conclusion

Generative AI Lab is built to help annotation teams validate AI outputs quickly, accurately, and at scale. With its advanced task distribution, intuitive resource filtering, efficient project resets, and enhanced de-identification workflows, annotation teams can spend less time managing logistics and more time focusing on quality and compliance. These capabilities streamline human-in-the-loop validation, making it easier to manage complex datasets, ensure data privacy, and deliver consistent, reliable outcomes across all projects.

By leveraging these features, teams can accelerate their workflows, minimize manual errors, and confidently deliver higher-quality AI validations, ultimately raising the standard for AI project performance.

Getting Started is Easy

Generative AI Lab is a no-code human-in-the-loop annotation tool that can be deployed in a couple of clicks on AWS or Azure, or installed on-premises with a one-line Kubernetes script.