How are medical LLMs reshaping healthcare today?

Large Language Models (LLMs) tailored to healthcare are rapidly moving from research labs to production environments. These models now support hospitals, payers, and digital health startups by automating documentation, enhancing diagnostics, improving administrative workflows, and boosting patient engagement.

Unlike general-purpose LLMs, medical LLMs such as John Snow Labs’ Medical Reasoning LLM and John Snow Labs MedLLAMA are trained on de-identified clinical notes, guidelines, and real-world biomedical content. This domain focus yields more accurate, explainable, and compliant outputs, which are essential in high-stakes medical settings.

Which clinical documentation tasks benefit most from medical LLMs?

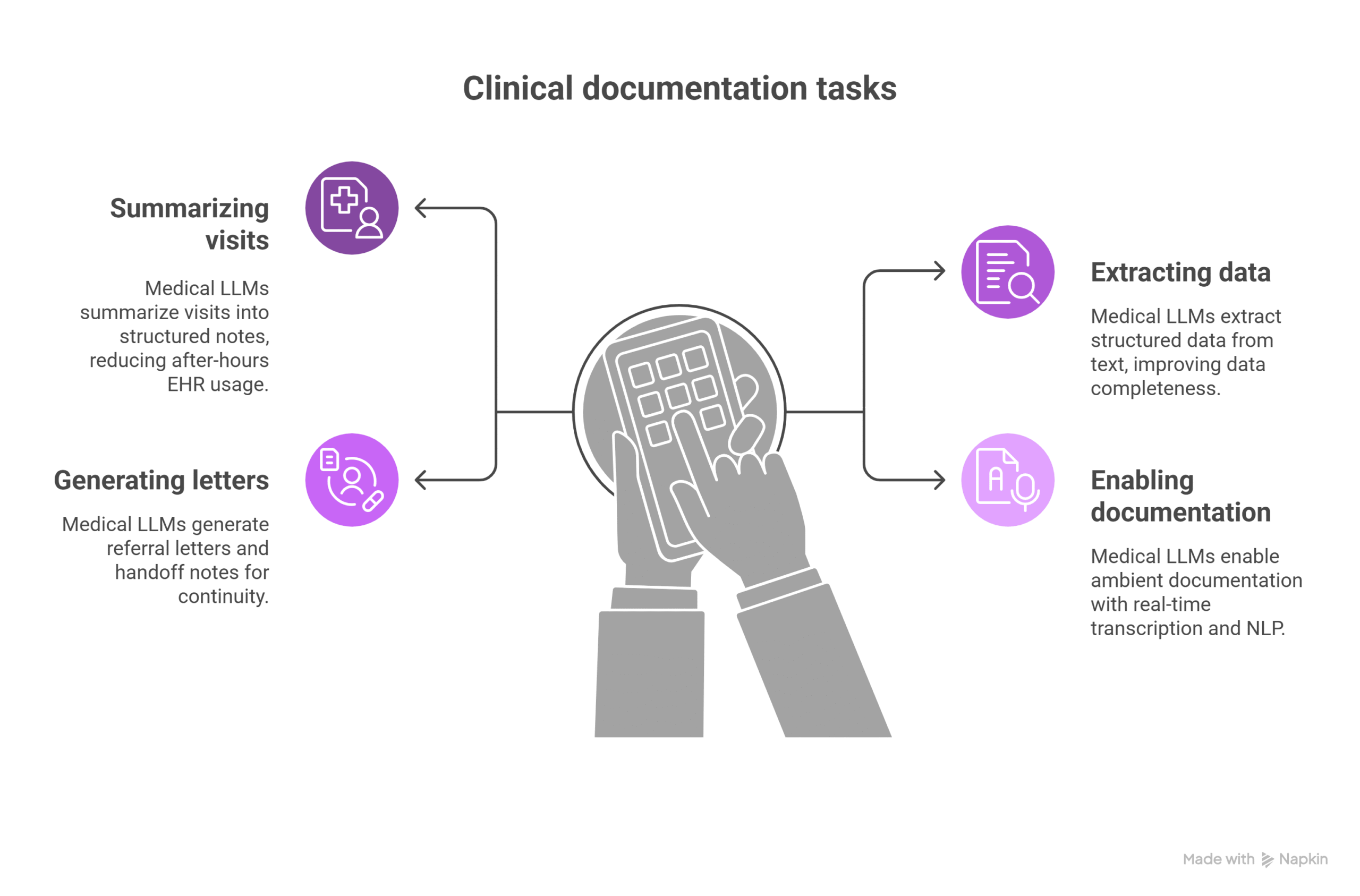

Medical LLMs are significantly alleviating the challenges of clinical documentation across several critical tasks.

One of their most impactful uses is summarizing patient visits into structured SOAP or clinical notes. This automation reduces after-hours documentation, with real-world deployments reporting up to a 50% decrease in charting time. Additionally, LLMs can extract structured data from free-text clinical narratives. This improves data completeness, which is crucial for analytics, quality reporting, and accurate reimbursement.
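One common way to get structured output is to ask the model for JSON with fixed SOAP keys and validate it before anything reaches the EHR. The sketch below assumes a hypothetical `call_llm` function, stubbed with a canned reply so the example runs offline. It illustrates the validation pattern only and is not John Snow Labs' API.

```python
import json
from dataclasses import dataclass

SOAP_KEYS = ("subjective", "objective", "assessment", "plan")

@dataclass
class SoapNote:
    subjective: str
    objective: str
    assessment: str
    plan: str

def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a medical LLM endpoint; returns a canned JSON reply."""
    return json.dumps({
        "subjective": "Patient reports 3 days of productive cough.",
        "objective": "Temp 38.2 C, crackles at right base.",
        "assessment": "Suspected community-acquired pneumonia.",
        "plan": "Chest X-ray; start empiric antibiotics; follow up in 48 h.",
    })

def parse_soap(raw: str) -> SoapNote:
    """Parse the model's JSON reply and reject it if any SOAP section is missing or empty."""
    data = json.loads(raw)
    missing = [k for k in SOAP_KEYS if not str(data.get(k, "")).strip()]
    if missing:
        raise ValueError(f"missing SOAP sections: {missing}")
    # Keep only the four expected sections, ignoring any extra keys the model added.
    return SoapNote(**{k: data[k] for k in SOAP_KEYS})

transcript = "Doctor: What brings you in? Patient: I've had a cough for three days."
prompt = "Summarize this visit as JSON with keys " + ", ".join(SOAP_KEYS) + ":\n" + transcript
note = parse_soap(call_llm(prompt))
print(note.assessment)
```

Rejecting incomplete output, rather than silently filling gaps, keeps a missing section visible to the clinician who reviews the draft.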

Medical LLMs also streamline the generation of referral letters and handoff notes, ensuring seamless care transitions among providers. Perhaps most notably, they support ambient documentation workflows by combining real-time transcription with natural language processing. This enables clinicians to produce context-rich, compliant notes during the patient encounter without manual input, improving workflow efficiency and, more importantly, documentation quality.

John Snow Labs’ Healthcare NLP library includes over 6,600 pre-trained models to support these use cases.

How are LLMs enhancing decision support and triage?

Medical LLMs are strengthening decision support by improving how patient data is interpreted and used.

These models can identify clinical risks and escalate care based on insights drawn from longitudinal health records. They also align patient profiles with established clinical guidelines, such as those from the NCCN, AHA, and CDC, ensuring that care pathways are evidence-based.

In diagnostic workflows, medical LLMs assist by suggesting missing labs, imaging, or follow-ups, reducing diagnostic gaps. They also play a critical role in patient safety by flagging potential issues like drug-drug interactions or overlooked contraindications.
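Interaction flagging is often a lookup layered on top of LLM extraction: the model pulls medication mentions out of the note, and a curated interaction table decides what to flag. The sketch below assumes a hypothetical two-entry table for illustration; a real system would query a maintained drug-interaction knowledge base rather than hard-coded pairs.

```python
from itertools import combinations

# Hypothetical, illustrative interaction table keyed by unordered drug pairs.
INTERACTIONS = {
    frozenset({"warfarin", "aspirin"}): "increased bleeding risk",
    frozenset({"simvastatin", "clarithromycin"}): "increased risk of myopathy",
}

def flag_interactions(medications):
    """Return (drug_a, drug_b, reason) for every known interacting pair in the list."""
    meds = sorted({m.strip().lower() for m in medications})  # normalize and deduplicate
    alerts = []
    for a, b in combinations(meds, 2):
        reason = INTERACTIONS.get(frozenset({a, b}))
        if reason:
            alerts.append((a, b, reason))
    return alerts

# Medication names as they might come out of an LLM extraction step.
extracted = ["Warfarin", "Metformin", "Aspirin"]
for a, b, reason in flag_interactions(extracted):
    print(f"ALERT: {a} + {b}: {reason}")
```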

As a result, these models are becoming core components of Clinical Decision Support Systems and digital platforms in healthcare. For instance, an oncology workflow supported by reasoning LLMs could infer cancer progression timelines and treatment efficacy by analyzing unstructured EHR data, offering powerful support for precision oncology.
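Once dated events have been extracted from notes, assembling a timeline is largely a matter of normalizing dates and ordering events. The sketch below assumes hypothetical note snippets that each start with an ISO-format date; real notes need far more robust date normalization, which the model or a dedicated NLP pipeline would typically handle.

```python
import re
from datetime import date

# Hypothetical note snippets; each mentions one dated event in ISO format.
notes = [
    "2023-06-02: CT shows partial response after cycle 4.",
    "2023-01-15: Biopsy confirms stage III adenocarcinoma.",
    "2023-02-01: Started first-line chemotherapy.",
]

ISO_DATE = re.compile(r"(\d{4})-(\d{2})-(\d{2})")

def build_timeline(snippets):
    """Extract the first ISO date in each snippet and return (date, event) pairs in order."""
    events = []
    for text in snippets:
        m = ISO_DATE.search(text)
        if m:
            y, mo, d = map(int, m.groups())
            # Drop the separator that follows the date to keep just the event description.
            events.append((date(y, mo, d), text[m.end():].lstrip(": ").strip()))
    return sorted(events)

for when, event in build_timeline(notes):
    print(when.isoformat(), "|", event)
```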

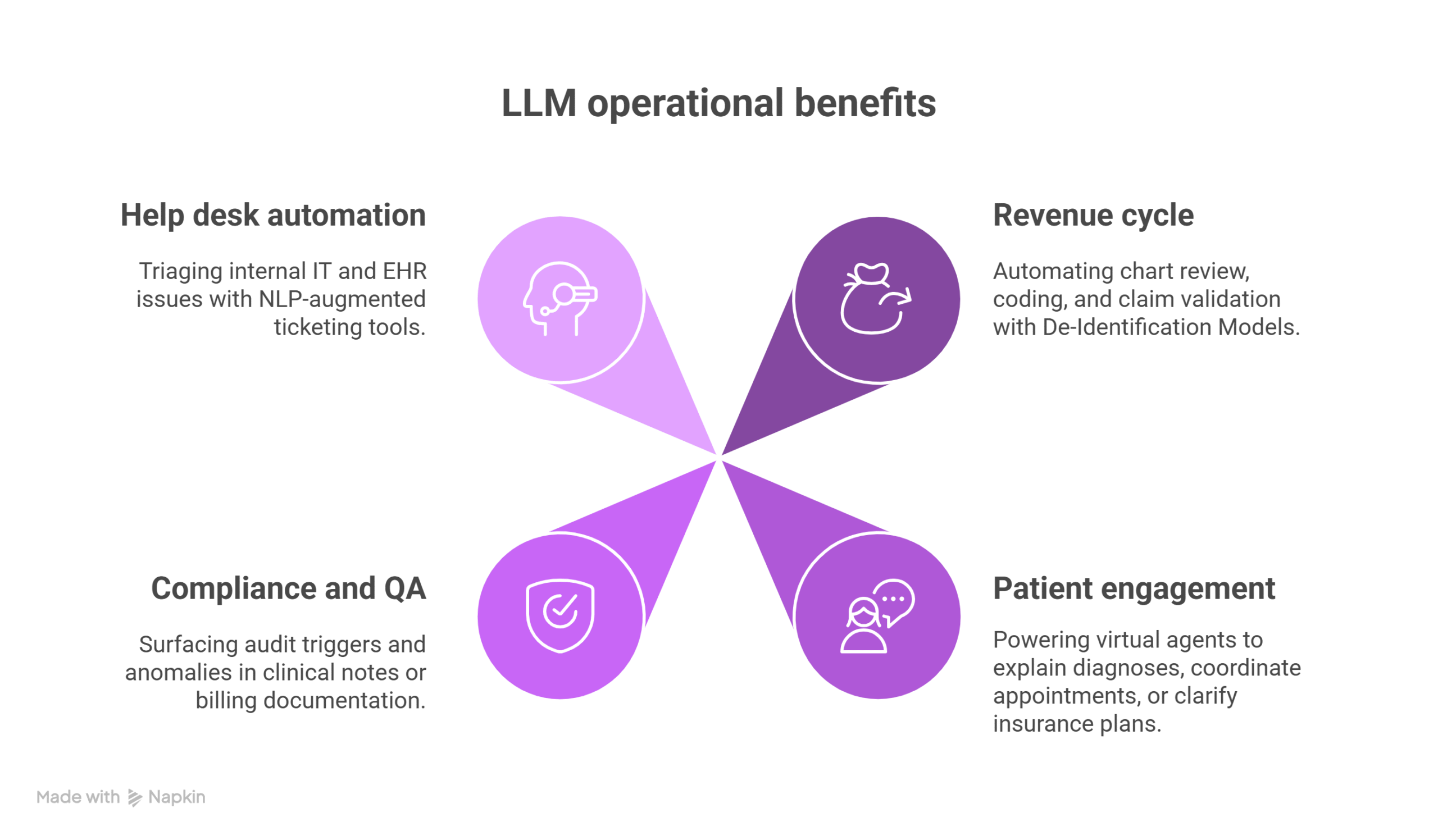

What operational areas benefit from LLM deployment?

Medical LLMs aren’t limited to direct care. They’re transforming operations across:

- Revenue cycle: Automating chart review, coding, and claim validation.

- Patient engagement: Powering virtual agents to explain diagnoses, coordinate appointments, or clarify insurance plans.

- Compliance and QA: Surfacing audit triggers and anomalies in clinical notes or billing documentation.

- Help desk automation: Triaging internal IT and EHR issues with NLP-augmented ticketing tools.

Platforms such as John Snow Labs’ Generative AI Lab support these workflows with tools for fine-tuning, annotation, and human-in-the-loop (HITL) validation, enabling safe and scalable enterprise adoption.

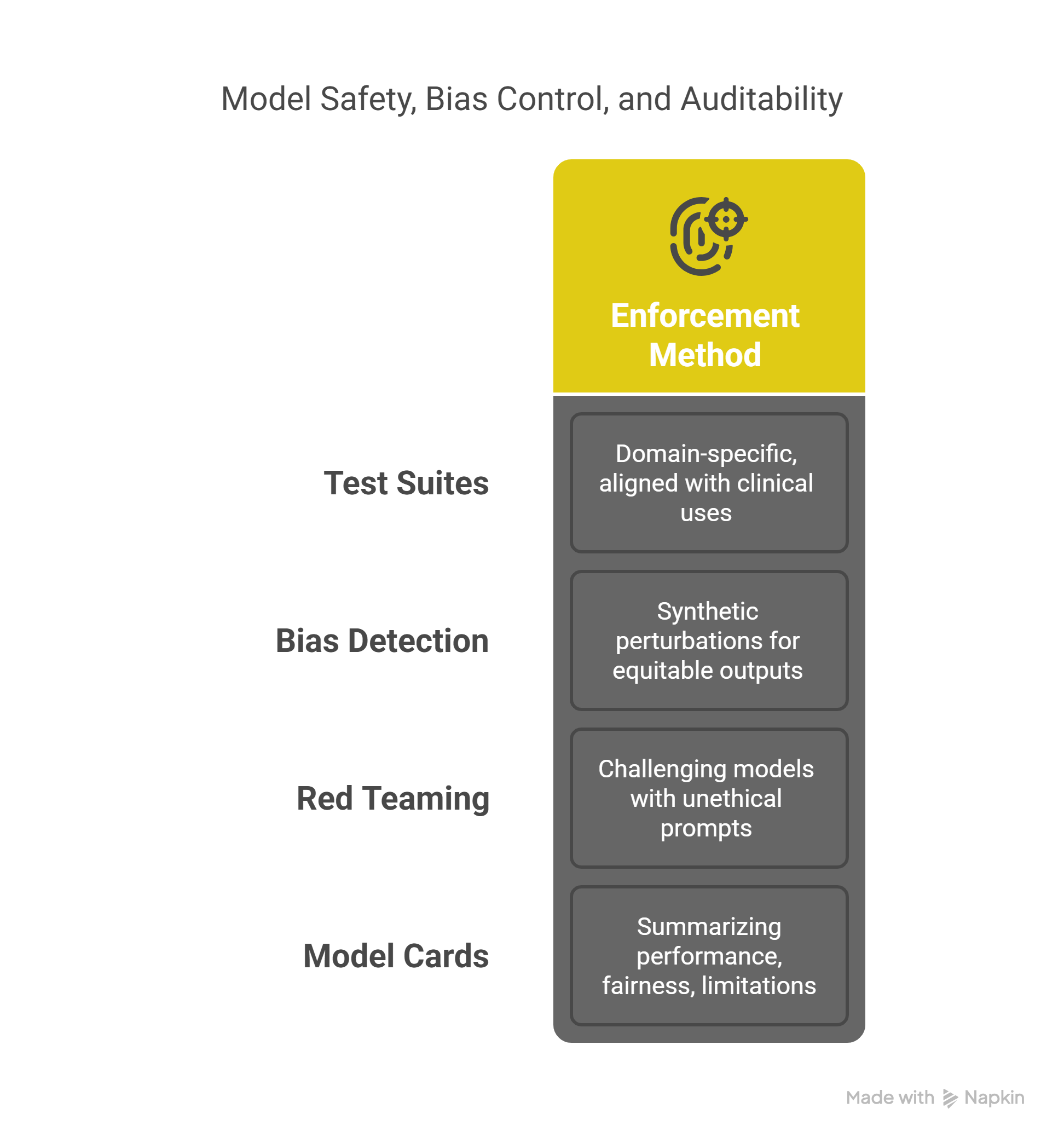

How are model safety, bias control, and auditability enforced?

Trust is foundational in healthcare AI, and the deployment of medical LLMs is carefully governed to uphold this principle.

To ensure models are fit for clinical use, they are evaluated with domain-specific test suites aligned with real-world healthcare applications, using tools like LangTest for bias testing and robustness audits. Bias detection also relies on synthetic perturbations, such as swapping gender or ethnicity terms, to verify that model outputs remain fair and equitable across diverse patient populations.
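The perturbation idea can be sketched in a few lines: swap demographic terms in an otherwise identical prompt and check whether the model's answer changes. This is a hand-rolled illustration, not LangTest's API. `model` is a hypothetical toy stand-in for the system under test, and the swap list is a small assumed sample.

```python
import re

# Small, assumed sample of gender term swaps; real test suites use much larger lists.
SWAPS = {"he": "she", "she": "he", "his": "her", "her": "his",
         "man": "woman", "woman": "man", "male": "female", "female": "male"}
PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", flags=re.IGNORECASE)

def swap_gender(text: str) -> str:
    """Swap each gendered word for its counterpart, preserving a leading capital."""
    def repl(m):
        word = m.group(0)
        swapped = SWAPS[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped
    return PATTERN.sub(repl, text)

def model(prompt: str) -> str:
    """Hypothetical model under test: a toy triage rule that ignores gender."""
    return "urgent" if "chest pain" in prompt.lower() else "routine"

def perturbation_test(prompts, model_fn):
    """Return (prompt, original, perturbed) for every prompt whose output changes after the swap."""
    failures = []
    for p in prompts:
        original, perturbed = model_fn(p), model_fn(swap_gender(p))
        if original != perturbed:
            failures.append((p, original, perturbed))
    return failures

prompts = [
    "A 54-year-old man reports chest pain radiating to his left arm.",
    "She has had a mild rash for two days.",
]
print(swap_gender(prompts[0]))
print(perturbation_test(prompts, model))
```

An empty failure list means the outputs were invariant to the swap for these prompts; any entry is a candidate bias finding for human review.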

Medical red teaming further strengthens trust by exposing models to unethical or adversarial prompts, assessing their safety using rigorous standards like Med-Safety benchmarks. Complementing these efforts are transparent model cards. These documents provide clear summaries of a model’s performance, fairness profile, and known limitations, offering stakeholders a grounded understanding of model behavior before deployment.

Benchmarks such as Med-HALT, which tests LLMs for medical hallucinations, and John Snow Labs’ internal evaluation tools are setting new standards for what meaningful AI validation looks like.

What’s next for medical LLMs?

Looking ahead, the next wave of clinical LLM innovation will include:

- More specialized models, fine-tuned for cardiology, oncology, psychiatry, and radiology.

- Greater agentic capabilities, where LLMs autonomously query databases or trigger alerts based on clinical rules.

- Multimodal understanding, combining text, imaging, and genomics for integrated decision support.

- Interoperability via standards like FHIR and OMOP, enabling model portability across systems and organizations.
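Interoperability in practice often means emitting model output as standard resources. The sketch below wraps an LLM-extracted diagnosis in a minimal FHIR R4 `Condition` resource as plain JSON. The patient ID is hypothetical, and the SNOMED CT code is used only as an example; a production pipeline would validate resources against the FHIR specification, and likely use a FHIR client library, rather than build dictionaries by hand.

```python
import json

def to_fhir_condition(patient_id: str, snomed_code: str, display: str) -> dict:
    """Build a minimal FHIR R4 Condition resource for an extracted diagnosis."""
    return {
        "resourceType": "Condition",
        "clinicalStatus": {"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/condition-clinical",
            "code": "active"}]},
        # Marked provisional because it was machine-extracted and not yet clinician-reviewed.
        "verificationStatus": {"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/condition-ver-status",
            "code": "provisional"}]},
        "code": {"coding": [{
            "system": "http://snomed.info/sct",
            "code": snomed_code,
            "display": display}]},
        "subject": {"reference": f"Patient/{patient_id}"},
    }

# Illustrative values: a hypothetical patient ID and an extracted type 2 diabetes mention.
resource = to_fhir_condition("example-123", "44054006", "Diabetes mellitus type 2")
print(json.dumps(resource, indent=2))
```

Because the output is standard FHIR, any system that accepts `Condition` resources can consume it without knowing which model produced it.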

Institutions like Mayo Clinic, Stanford Medicine, and Roche Diagnostics are already using LLM-powered solutions like those from John Snow Labs to scale patient-centered, guideline-concordant care across populations.

FAQ: Fast Facts on Medical LLM Adoption

Which clinical use cases are most validated?

Ambient documentation, oncology timeline generation, and medical coding are currently the most mature applications.

Can medical LLMs work without access to sensitive patient data?

Yes, in the sense that sensitive data never has to leave the organization. John Snow Labs supports on-premise deployments that keep all PHI within hospital firewalls, in line with HIPAA requirements.

What role does HITL play in clinical AI?

Human-in-the-loop validation ensures that every model output used in care is reviewed by clinicians, boosting both safety and trust.
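One minimal way to enforce this is to make clinician sign-off a required state transition: model drafts enter a review queue, and only approved drafts can be released for use in care. The sketch below is a hypothetical in-memory queue, not a description of Generative AI Lab's workflow.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"

@dataclass
class Draft:
    draft_id: int
    text: str
    status: Status = Status.PENDING
    reviewer: Optional[str] = None

class ReviewQueue:
    """In-memory queue in which model drafts wait for clinician sign-off."""

    def __init__(self):
        self.drafts = {}

    def submit(self, draft_id: int, text: str) -> None:
        self.drafts[draft_id] = Draft(draft_id, text)

    def review(self, draft_id: int, reviewer: str, approve: bool) -> None:
        d = self.drafts[draft_id]
        if d.status is not Status.PENDING:
            raise ValueError(f"draft {draft_id} was already reviewed")
        d.status = Status.APPROVED if approve else Status.REJECTED
        d.reviewer = reviewer  # record who signed off, for auditability

    def release(self, draft_id: int) -> str:
        """Only clinician-approved drafts may leave the queue."""
        d = self.drafts[draft_id]
        if d.status is not Status.APPROVED:
            raise PermissionError(f"draft {draft_id} is {d.status.value}, not approved")
        return d.text

queue = ReviewQueue()
queue.submit(1, "Draft discharge summary for patient example-123.")
queue.review(1, reviewer="dr_lee", approve=True)
print(queue.release(1))
```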

How are models evaluated for fairness?

Bias is measured by altering demographic identifiers in inputs and analyzing output shifts, followed by retraining with synthetic data if needed.

Are these tools suitable for non-English-speaking populations?

Yes. Many medical LLMs support multilingual inputs and outputs, enabling inclusive care delivery and cross-border collaboration.

Supplementary Q&A

What are the patient-facing benefits of LLMs?

They reduce complexity in patient communications, translating medical jargon into clear, empathetic language across multiple languages.

Why use synthetic benchmarks and real-patient data together?

Synthetic testing reveals potential failure modes, while real-patient data ensures models work under realistic, noisy conditions.

How do LLMs assist with value-based care?

By reducing administrative burden, improving documentation quality, and supporting proactive risk identification, LLMs directly enable better care at lower cost.