How are foundation models transforming radiology?

Foundation models are reshaping healthcare AI, and radiology is among the most affected specialties. These large pretrained models, trained on vast and diverse datasets of images and text, can be adapted to many clinical tasks instead of requiring a separate model for each one. Unlike traditional single-task algorithms, they generalize across modalities and can support complex decision-making.

Radiology is data-rich and time-sensitive, making it a prime candidate for AI-driven improvements. As image volumes increase and reporting demands grow, the need for scalable, explainable, and compliant automation has never been greater. John Snow Labs is supporting this shift by integrating foundation models into radiology workflows, from triage through reporting.

What are foundation models and why are they important?

Foundation models are massive neural networks trained on broad and heterogeneous datasets. They learn representations that can be adapted to multiple downstream tasks, making them versatile for a range of applications. In healthcare, they are designed to interpret clinical language, medical images, or both.

Unlike general-purpose models such as GPT-5 or Gemini, healthcare-specific foundation models are tuned on domain-relevant data. This focus is intended to improve reliability, reduce hallucinations, and align outputs with clinical expectations.

Examples of foundation models applicable to radiology:

- Medical VLM-24B: John Snow Labs’ multimodal model for vision and language.

- BioGPT & ClinicalBERT: Text-only language models for biomedical and clinical NLP tasks.

- MedGemma: A collection of Gemma 3 variants trained for medical text and image comprehension.

What are the benefits of foundation models in radiology?

Foundation models bring several distinct advantages to radiology workflows:

- Reduced Annotation Burden: Because these models generalize from pretraining, they need fewer labeled examples to reach useful performance across different imaging modalities and clinical contexts.

- Improved Fairness: Pretraining on diverse datasets can reduce bias compared to narrowly trained models, promoting equitable performance across patient populations.

- Multimodal Reasoning: By processing both image and text inputs, foundation models can generate richer insights, such as associating visual findings with differential diagnoses or clinical recommendations.

- Speed and Scalability: These models can automate or augment repetitive tasks, allowing radiologists to focus on high-value activities.

How do foundation models integrate into radiology workflows?

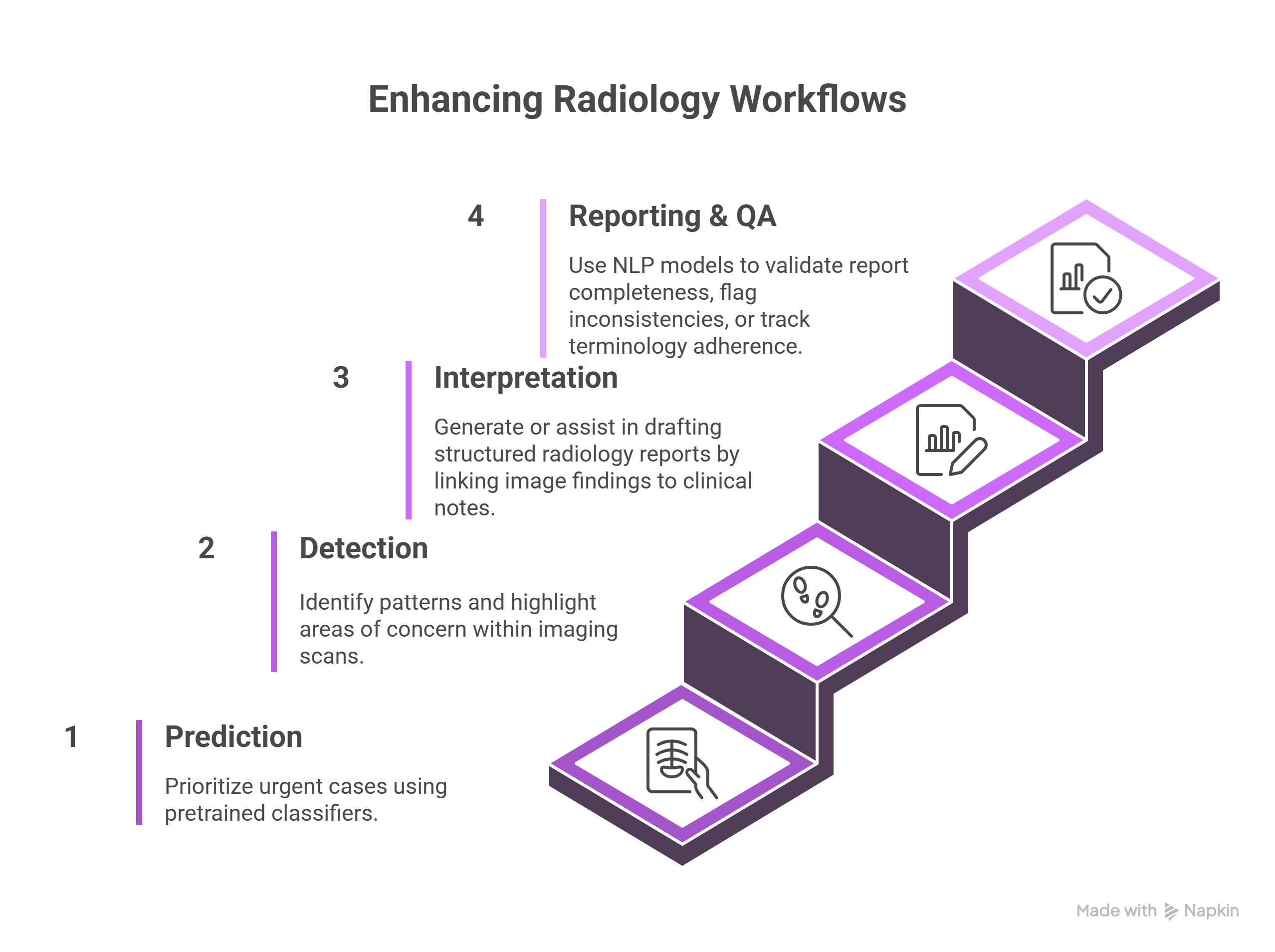

Foundation models can support each stage of the radiology pipeline:

- Prediction (Exam Triage): Prioritize urgent cases using pretrained classifiers.

- Detection (Anomaly Identification): Identify patterns and highlight areas of concern within imaging scans.

- Interpretation (Contextual Analysis): Generate or assist in drafting structured radiology reports by linking image findings to clinical notes.

- Reporting & QA (Consistency Checks): Validate report completeness, flag inconsistencies, or track terminology adherence.
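The triage stage above can be illustrated with a minimal Python sketch. It assumes each exam already carries an urgency score between 0 and 1 from some upstream classifier; the `QueuedExam` class, the `build_worklist` function, and the exam IDs are hypothetical names chosen for illustration, not part of any John Snow Labs product.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class QueuedExam:
    # heapq pops the smallest item first, so urgency is stored negated.
    sort_key: float
    arrival_order: int                     # tie-breaker: earlier exams first
    exam_id: str = field(compare=False)


def build_worklist(scored_exams):
    """Return exam IDs ordered from most to least urgent.

    scored_exams: iterable of (exam_id, urgency) pairs, where urgency is a
    hypothetical classifier score in [0, 1].
    """
    heap = []
    for order, (exam_id, urgency) in enumerate(scored_exams):
        heapq.heappush(heap, QueuedExam(-urgency, order, exam_id))
    return [heapq.heappop(heap).exam_id for _ in range(len(heap))]


if __name__ == "__main__":
    scores = [("CXR-001", 0.12), ("CT-002", 0.91), ("MR-003", 0.47), ("CT-004", 0.91)]
    print(build_worklist(scores))  # ['CT-002', 'CT-004', 'MR-003', 'CXR-001']
```

Exams with equal scores keep their arrival order, so a high score moves a study up the worklist without reshuffling studies of the same urgency.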

John Snow Labs supports these tasks with modular pipelines that include:

- Named Entity Recognition (NER) for extracting anatomy, pathology, and device mentions.

- Assertion Detection to distinguish between confirmed findings, ruled-out conditions, or uncertain mentions.

- Relation Extraction to understand clinical context and build structured outputs.
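To make the three pipeline steps concrete, here is a toy Python sketch of how entity mentions, assertion status, and relations might fit together in one structured output. It is not the John Snow Labs API: the entity mentions are assumed to come from an upstream NER step, the cue-phrase rules are a deliberately simple stand-in for a trained assertion model, and every name (`Finding`, `assertion_status`, `structure_sentence`) is hypothetical.

```python
import re
from dataclasses import dataclass

# Illustrative cue phrases only; a real assertion model is learned, not rule-based.
NEGATION = re.compile(r"\b(no|without|negative for)\b")
UNCERTAINTY = re.compile(r"\b(possible|probable|cannot exclude)\b")


@dataclass
class Finding:
    mention: str     # text span, e.g. "pneumothorax"
    label: str       # "ANATOMY", "PATHOLOGY", or "DEVICE"
    assertion: str   # "present", "absent", or "uncertain"


def assertion_status(sentence, mention):
    """Classify a mention from cue phrases that precede it in the sentence."""
    lowered = sentence.lower()
    start = lowered.find(mention.lower())
    preceding = lowered[:start] if start >= 0 else lowered
    if NEGATION.search(preceding):
        return "absent"
    if UNCERTAINTY.search(preceding):
        return "uncertain"
    return "present"


def structure_sentence(sentence, entities):
    """entities: list of (mention, label) pairs assumed to come from NER."""
    findings = [Finding(m, l, assertion_status(sentence, m)) for m, l in entities]
    anatomy = [f.mention for f in findings if f.label == "ANATOMY"]
    # Naive relation rule: link each pathology to every anatomy in the sentence.
    relations = [
        (f.mention, "located_in", site)
        for f in findings if f.label == "PATHOLOGY"
        for site in anatomy
    ]
    return findings, relations


if __name__ == "__main__":
    sentence = "Possible consolidation in the right lower lobe."
    entities = [("consolidation", "PATHOLOGY"), ("right lower lobe", "ANATOMY")]
    findings, relations = structure_sentence(sentence, entities)
    print(findings[0].assertion, relations)
```

The point of the sketch is the shape of the output: each finding carries its type and certainty, and relations tie findings to locations so that downstream reporting can work with structured data instead of free text.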

These pipelines are explainable, scalable, and designed to comply with evolving regulatory standards, including HIPAA and GDPR.

How is clinical deployment made accessible and scalable?

John Snow Labs prioritizes accessible AI for clinical users. Foundation models such as Medical VLM-24B are available through cloud platforms like AWS, enabling scalable deployment with minimal infrastructure overhead.

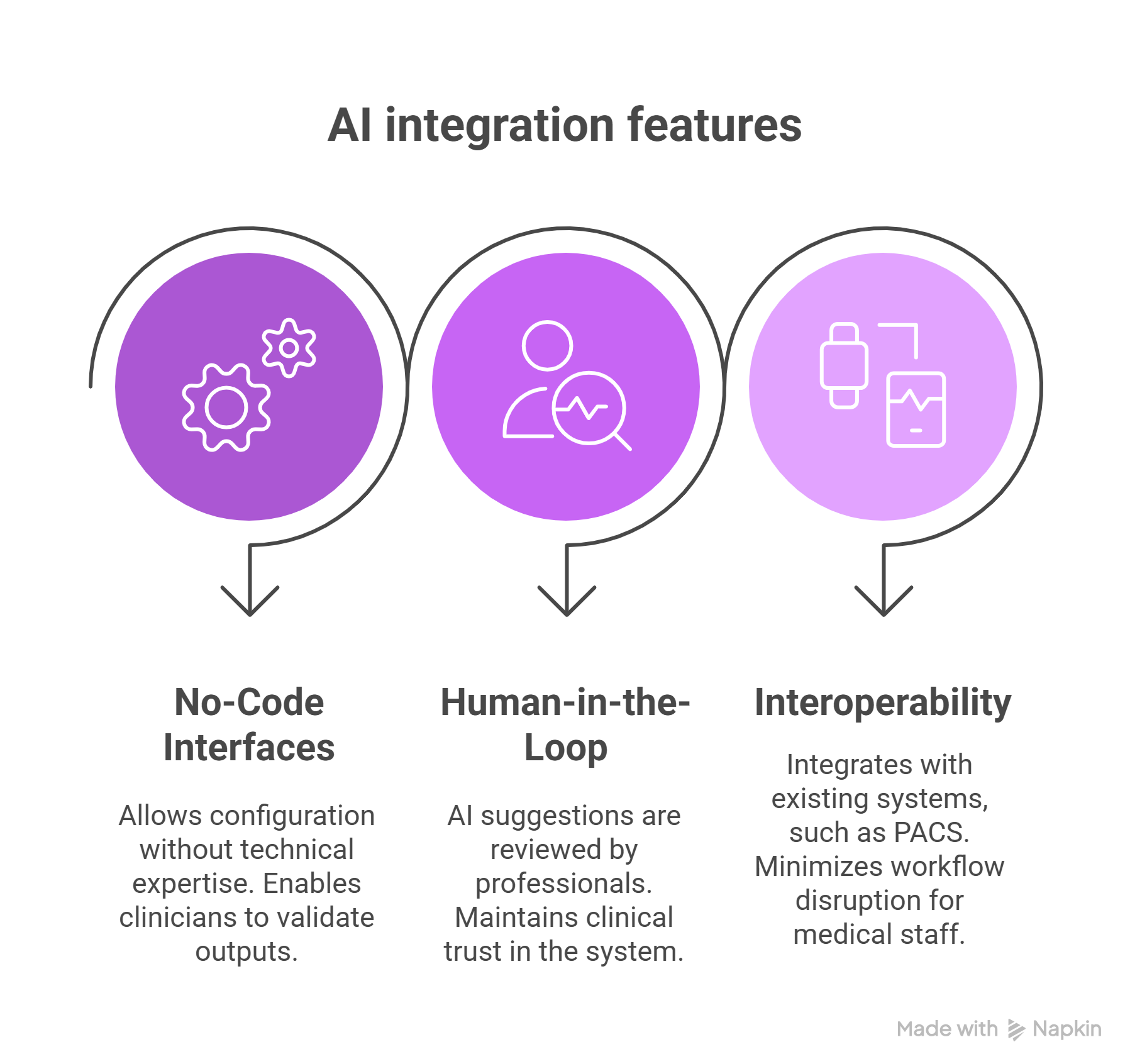

Key accessibility features:

- No-Code/Low-Code Interfaces: Clinicians and medical operations teams can configure workflows and validate outputs without needing deep technical skills.

- Human-in-the-Loop (HITL): Critical to maintaining clinical trust, HITL ensures that AI suggestions are reviewed and approved by qualified professionals.

- Interoperability: Integration with existing PACS, EHR, and RIS systems ensures minimal workflow disruption.
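The human-in-the-loop requirement can be sketched as a simple review gate in Python: AI-drafted findings start in a pending state, and only those a reviewer approves are released. This is an assumption-laden illustration, not a description of any specific product; `DraftFinding`, `review`, `releasable`, and the reviewer ID are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class DraftFinding:
    exam_id: str
    text: str                           # AI-suggested wording
    status: Status = Status.PENDING
    reviewer: Optional[str] = None      # set once a clinician has reviewed it


def review(draft, reviewer, approve, corrected_text=None):
    """Record a reviewer's decision; an optional correction replaces the AI text."""
    if corrected_text is not None:
        draft.text = corrected_text
    draft.status = Status.APPROVED if approve else Status.REJECTED
    draft.reviewer = reviewer
    return draft


def releasable(drafts):
    """Only reviewer-approved findings may reach the final report."""
    return [d for d in drafts if d.status is Status.APPROVED]


if __name__ == "__main__":
    drafts = [
        DraftFinding("CT-002", "Small right pleural effusion."),
        DraftFinding("CT-002", "Possible 4 mm nodule, left upper lobe."),
    ]
    review(drafts[0], "dr_lee", approve=True)
    print([d.text for d in releasable(drafts)])  # ['Small right pleural effusion.']
```

Keeping the reviewer and any corrected text on the record also gives a natural source of feedback for later model refinement.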

What are the ongoing challenges and future directions?

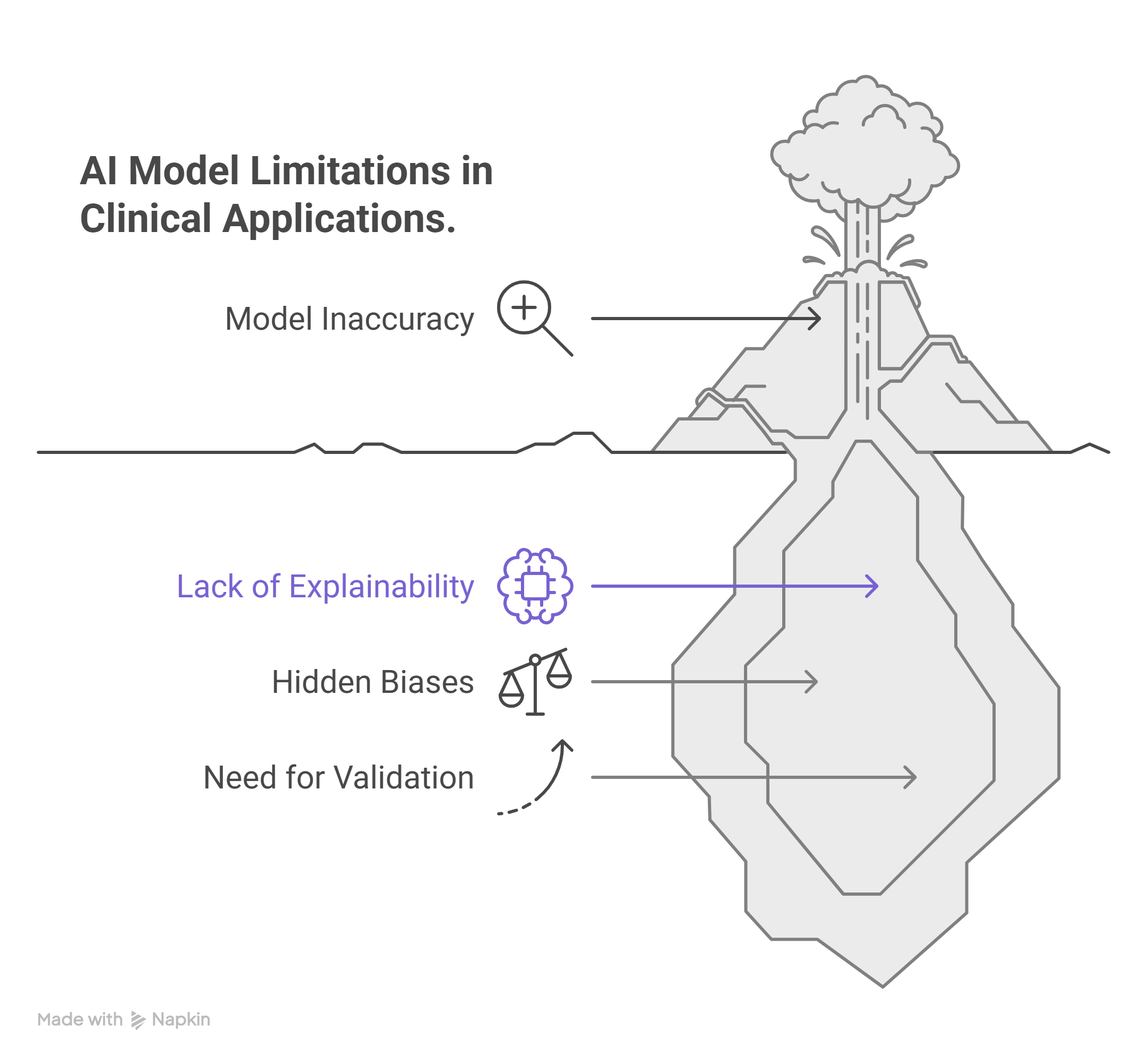

Despite their promise, foundation models in radiology face several hurdles:

- Explainability and Transparency: Even with attention maps and saliency methods, translating model behavior into human-understandable reasoning remains a challenge.

- Residual Biases: Even large, diverse datasets can encode biases that affect clinical fairness.

- Continuous Validation: Models must be retrained and validated across time, populations, and institutions to remain effective.
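Continuous validation across institutions can be approximated with a small monitoring check. The Python sketch below computes sensitivity per site from binary ground-truth and prediction labels and flags sites that fall below a threshold. The 0.9 threshold, the record format, and the function names are illustrative assumptions, not clinical guidance.

```python
from collections import defaultdict


def sensitivity_by_site(records):
    """records: iterable of (site, y_true, y_pred) with binary labels (1 = finding present)."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for site, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[site] += 1
            else:
                false_neg[site] += 1
    # Sites with no positive cases are omitted: sensitivity is undefined there.
    sites = set(true_pos) | set(false_neg)
    return {s: true_pos[s] / (true_pos[s] + false_neg[s]) for s in sites}


def sites_needing_review(records, min_sensitivity=0.9):
    """Return sites whose sensitivity falls below the (assumed) threshold."""
    per_site = sensitivity_by_site(records)
    return sorted(s for s, value in per_site.items() if value < min_sensitivity)


if __name__ == "__main__":
    records = (
        [("site_a", 1, 1)] * 4
        + [("site_b", 1, 1)] * 2
        + [("site_b", 1, 0)] * 2
        + [("site_b", 0, 0)] * 3
    )
    print(sites_needing_review(records))  # ['site_b']
```

The same pattern extends to other slices, such as time windows or patient subgroups, which is where residual bias tends to surface.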

Why do foundation models matter for radiology’s future?

Foundation models mark a shift toward smarter, safer, and more efficient radiology. By reducing repetitive tasks, improving reporting accuracy, and supporting multimodal reasoning, these models help radiologists deliver higher-quality care.

John Snow Labs is committed to making these advancements practical. With explainable AI pipelines, scalable infrastructure, and HITL interfaces, we help healthcare organizations bring AI from research to the bedside.

Interested in deploying foundation models in radiology? Schedule a call with us.

FAQs

What is a foundation model in radiology?

A foundation model in radiology is a large AI system pretrained on medical images and/or clinical text, enabling it to support various tasks like anomaly detection, report generation, and clinical triage.

How does a foundation model reduce annotation work?

Because foundation models learn general representations from diverse datasets during pretraining, they can be adapted to a new task with fewer labeled examples, which reduces the amount of manual annotation required.

Can clinicians use foundation models without coding?

Yes. John Snow Labs provides no-code and low-code interfaces that let clinicians configure workflows and validate AI outputs without deep technical skills.

What makes John Snow Labs’ models unique?

Our models are trained on domain-specific data, comply with healthcare regulations, and include modular pipelines tailored for real-world clinical use.

Supplementary Q&A

How does HITL improve trust in AI radiology workflows?

Human-in-the-loop (HITL) mechanisms ensure that AI-generated outputs are reviewed by clinicians before use. This fosters clinical trust, catches edge cases, and allows continuous feedback to refine models over time. HITL is particularly important in radiology, where misinterpretations can impact diagnosis.

What is the role of multimodal AI in radiology?

Multimodal AI processes both images and clinical text, allowing it to bridge the gap between visual data and patient history. This holistic view improves diagnostic accuracy and supports tasks like report generation and clinical summarization.

What infrastructure is needed to deploy these models safely?

Secure, compliant infrastructure is key. This includes HIPAA-compliant cloud environments, role-based access controls, auditable logs, and the ability to fine-tune models on local datasets to maintain performance in specific clinical contexts.