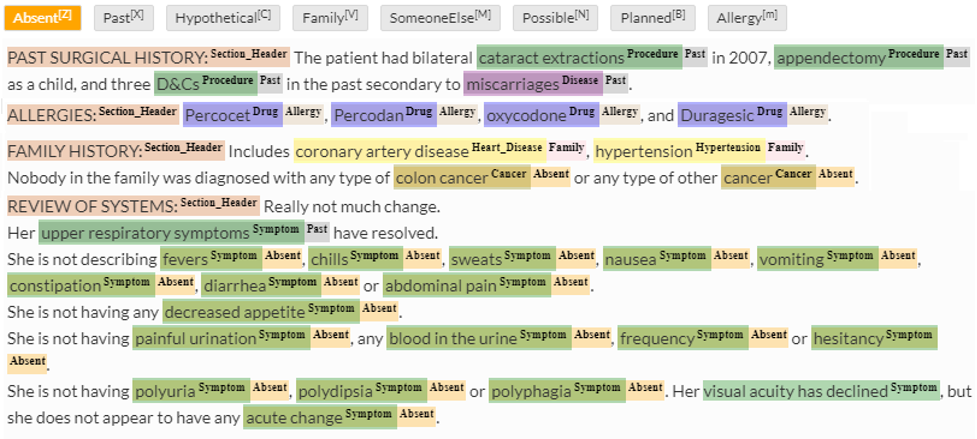

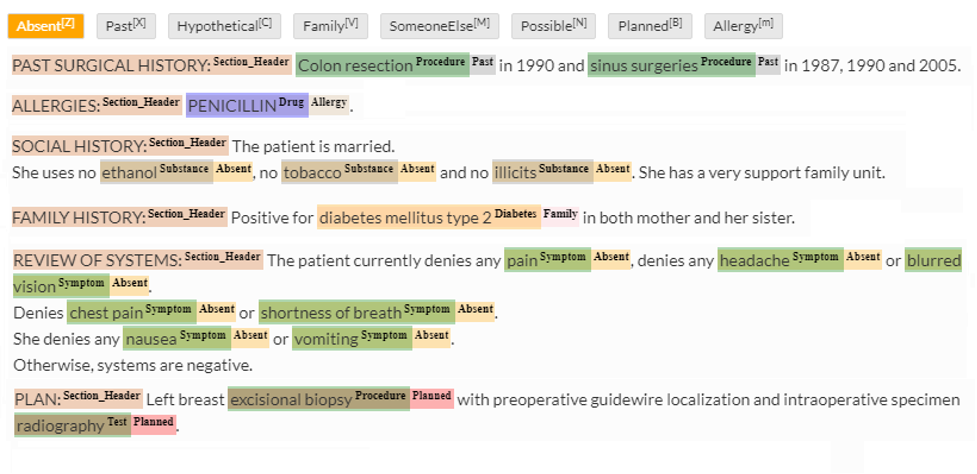

In clinical annotation projects, NER (named entity recognition) alone is often not enough. Suppose that you want to identify all the diseases diagnosed in a group of patients using data from their EHRs (electronic health records). If you analyzed only the extractions made by an NER model, you would soon notice that something is not right: each patient would seem to have too many different diseases, sometimes even dozens of them. The reason is that an EHR can include many mentions of diseases that do not refer to the patient’s actual health status, such as conditions that were ruled out, diseases mentioned as risks, or diagnoses included in the family history section. So how can you identify the extractions that refer only to the patient’s actual situation? The answer is simple: use assertion labels.

Assertion labels are tags with contextual information that are assigned to NER extractions. The typical example of assertion status detection is negation identification: in the sentence “the patient has no history of diabetes”, the word “diabetes” is annotated with both an NER label (Disease) and an assertion label (Absent) to indicate that the mention of the disease doesn’t refer to the patient’s actual health status. In this guide, you will find definitions and examples of the different kinds of assertion labels, instructions for annotating them with the Annotation Lab, and 10 practical tips (5 basic ones and 5 for expert annotators) that will help make your clinical annotation project successful.

Kinds of Assertion Labels

Labels related to the Level of Certainty:

- Present (meaning certain): This label is used for entities that are mentioned affirmatively, like the words “abdominal pain” in the example “the patient reports abdominal pain”.

- Possible: When an entity is uncertain, it should be labeled as Possible. This is the case of the word “cancer” in the sentence “it’s necessary to rule out cancer”.

- Absent: As mentioned before, this label is used for negation detection in phrases like “the patient has no history of diabetes”.

Labels related to Temporality:

- Present (meaning current): This label is used for entities that are mentioned as ongoing, like the word “antibiotics” in “the patient is on antibiotics”.

- Past: Entities referred to as happening in the past should be assigned this label, like “smoking” in “the patient quit smoking three years ago”.

- Planned: When an entity is mentioned as something that will happen in the future, this label should be used. For example, in the sentence “the patient will undergo eye surgery”, “eye surgery” should be labeled as Planned.

- Hypothetical: This label is used for hypothetical statements (the ones that include words like “if” or “would”), and also when entities are referred to as something that may happen in the future (for example, diseases that are mentioned as possible risks for the patient). The Hypothetical assertion label should be used, for example, for the words “breast cancer” in “the patient has an increased risk of breast cancer”.

Labels related to the Person:

- Patient: All the entities included in sentences that don’t refer explicitly to other people should be assigned this label, like “hypertension” in “he was diagnosed with hypertension”.

- Family: This label should be used for entities related to family members, like “lung cancer” in the sentence “her father died of lung cancer”.

- SomeoneElse: Sometimes EHRs can include mentions of entities related to other people, such as friends of the patient or patients included in a clinical trial. This label should be used, for example, for the words “chemotherapy” and “cancer” in the sentence “chemotherapy showed good results in patients with cancer”.
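
To see how these labels address the problem of “too many diseases per patient”, here is a minimal Python sketch. It is not the Annotation Lab’s export format: the `Extraction` class, its field names and the `actual_diseases` helper are hypothetical, and it assumes a scheme in which each entity carries exactly one assertion label and Present marks affirmed, current mentions about the patient.

```python
from dataclasses import dataclass


@dataclass
class Extraction:
    text: str        # the annotated span, e.g. "diabetes"
    ner_label: str   # what the entity is, e.g. "Disease"
    assertion: str   # its contextual status, e.g. "Absent"


# Mentions taken from the example sentences quoted in this guide.
extractions = [
    Extraction("hypertension", "Disease", "Present"),        # "he was diagnosed with hypertension"
    Extraction("diabetes", "Disease", "Absent"),             # "the patient has no history of diabetes"
    Extraction("cancer", "Disease", "Possible"),             # "it's necessary to rule out cancer"
    Extraction("breast cancer", "Disease", "Hypothetical"),  # "increased risk of breast cancer"
    Extraction("lung cancer", "Disease", "Family"),          # "her father died of lung cancer"
]


def actual_diseases(items):
    """Keep only the Disease mentions that describe the patient's actual status."""
    return [e.text for e in items
            if e.ner_label == "Disease" and e.assertion == "Present"]


print(actual_diseases(extractions))  # ['hypertension']
```

An NER model alone would report five diseases here; filtering on the assertion label leaves only the one that reflects the patient’s actual situation.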

How to Annotate Assertion

Using the Annotation Lab, you can annotate assertion in a very simple way. First, choose an NER label and select the part of the text that you want to extract. Then choose an assertion label and select that same part of the document. Remember that you can assign shortcuts to the labels to make your annotations faster.

At the beginning of any project, it is very important to discuss the overall approach to assertion annotation with the data scientist on your team. In general, you should assign at most one assertion label to each entity, basing your decision only on the information mentioned in the sentence that you are analyzing. And, if your project includes relation extraction, create those relations as if the entities had no assertion labels (even if one of them is negated).
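
The two rules above (an assertion label covers the same span as an NER label, and each entity gets at most one assertion label) can be checked automatically once annotations are exported. The sketch below is only illustrative: it assumes annotations are available as `(start, end, label)` tuples, which is a hypothetical layout, and `check_assertions` is not an Annotation Lab function.

```python
from collections import defaultdict


def check_assertions(ner_spans, assertion_spans):
    """Return a list of (span, problem) pairs; an empty list means no issues.

    Both arguments are lists of (start, end, label) tuples (assumed layout).
    """
    ner_offsets = {(start, end) for start, end, _ in ner_spans}

    # Group assertion labels by the exact span they were placed on.
    labels_by_span = defaultdict(list)
    for start, end, label in assertion_spans:
        labels_by_span[(start, end)].append(label)

    problems = []
    for span, labels in sorted(labels_by_span.items()):
        if span not in ner_offsets:
            problems.append((span, "assertion label without a matching NER span"))
        if len(labels) > 1:
            problems.append((span, "more than one assertion label"))
    return problems


text = "the patient has no history of diabetes"
start = text.index("diabetes")
end = start + len("diabetes")

ner = [(start, end, "Disease")]
good = [(start, end, "Absent")]
bad = [(start, end, "Absent"), (start, end, "Past"), (0, 3, "Absent")]

print(check_assertions(ner, good))  # []
print(check_assertions(ner, bad))
```

A check like this can run before annotations are handed to the data scientist, so that span mismatches are caught while the annotator still remembers the document.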

5 Basic Tips

- Assign a default assertion label: If most of the entities in your project refer to the patient’s present status, it can save a lot of annotation time to consider extractions without assertion labels as having that status by default.

- Define the assertion labels that can be assigned to each entity: Some assertion labels may make sense for certain entities and not for others, so it’s a good idea to include in your Annotation Guidelines the assertion labels that can be used in each case.

- Make your assertion taxonomy simple: You can merge into one single label (OtherStatus) assertion labels like Hypothetical, Planned and SomeoneElse if they are not relevant for your annotation project.

- Decide how to choose between multiple possible assertion labels: Sometimes you may need to assign one assertion label to an entity that is, for example, both uncertain and related to a family member. It can be helpful to include a priority list in your Annotation Guidelines for these kinds of cases. One recommended possibility is to prioritize the labels from the most important to the least important this way: SomeoneElse > Hypothetical > Absent > Family > Possible > Planned > Past (with one exception: Possible > Absent).

- Define how to deal with special cases: A clinical document can include mentions of relevant entities in its title, in section headers or in the keywords, so it’s important to define beforehand how to annotate those cases. Besides that, it may be useful to decide how to deal with ambiguous assertion status, which can be found in phrases like “metastatic lung cancer was ruled out”, in which it is impossible to know whether the Absent status refers only to the word “metastatic” or also applies to “lung cancer”.
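
The default-label and priority-list tips can be sketched as a small resolver. This is one possible reading, not a prescribed implementation: `resolve_assertion` is a hypothetical helper, Present is assumed to be the default label, and the exception is interpreted as “when both Possible and Absent apply, Possible wins”.

```python
# Priority order quoted in the tip above, most important first.
PRIORITY = ["SomeoneElse", "Hypothetical", "Absent", "Family",
            "Possible", "Planned", "Past"]

# Assumption: entities without any assertion label count as Present.
DEFAULT_LABEL = "Present"


def resolve_assertion(candidates):
    """Pick a single assertion label from all the labels that could apply."""
    remaining = set(candidates)

    # Assumed reading of the exception: Possible outranks Absent.
    if {"Possible", "Absent"} <= remaining:
        remaining.discard("Absent")

    for label in PRIORITY:
        if label in remaining:
            return label
    return DEFAULT_LABEL


print(resolve_assertion([]))                      # Present
print(resolve_assertion(["Possible", "Family"]))  # Family
print(resolve_assertion(["Possible", "Absent"]))  # Possible
```

Writing the rule down this explicitly, even if only in the Annotation Guidelines, helps annotators reach the same decision on the same edge case.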

5 Tips for Expert Annotators

- Create your own assertion labels: Adapt your assertion taxonomy to the needs of your project. If you want to identify drugs that cause allergy, create the assertion label Allergen for them. Or add to your taxonomy assertion labels like AdverseEvent (for symptoms or diseases that were caused by a therapy) or Conditional (for symptoms that appear only under certain circumstances).

- Consider creating a label for unknown status: Sometimes you may not have enough information to assign any assertion label, as in the sentence “3. Cholecystectomy.” included in a list. If you don’t want to assign any label by default in these cases, it can be a good idea to have an UnknownStatus label.

- Add a special label to make reviews easier: Assertion labels are more difficult to review than NER extractions, so it can be helpful to include a Review label that annotators can use when they have doubts in any edge case. This way, they can make sure that their work gets reviewed without altering their workflow.

- Include two different labels for past entities: If the annotation of temporal data is particularly important to your project, you may want to differentiate between past events that are clearly over (“the patient was on chemotherapy for two years”) and the ones that may still be ongoing (“chemotherapy was started two years ago”). A History label could be created for the second kind of case, taking into account that the word “history” in clinical texts can refer to events that are not necessarily finished (for example, in “patient with history of smoking”).

- Complement your assertion labels with section name extraction: Finding the sentence “He has cancer” in the Personal History section is not the same as finding it in the Family History section. Given that sections can provide information about the assertion status of an entity, it may be helpful to extract the most relevant ones using NER labels.
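
As an illustration of the last tip, the sketch below looks up the section that contains each entity mention, assuming that section headers have already been extracted with their character offsets. The `section_for` helper, the sample document and the `SECTION_HINT` mapping are all hypothetical.

```python
import bisect
import re


def section_for(offset, sections):
    """Return the name of the last section that starts at or before `offset`.

    `sections` is a list of (start_offset, name) pairs sorted by start_offset.
    """
    starts = [start for start, _ in sections]
    i = bisect.bisect_right(starts, offset) - 1
    return sections[i][1] if i >= 0 else None


doc = "Personal History\nHe has cancer.\nFamily History\nHe has cancer.\n"

# Section headers, as they might come from NER labels for section names.
sections = [
    (doc.index("Personal History"), "Personal History"),
    (doc.index("Family History"), "Family History"),
]

# Assumption: some sections suggest an assertion label for their entities.
SECTION_HINT = {"Family History": "Family"}

for match in re.finditer("cancer", doc):
    section = section_for(match.start(), sections)
    print(match.start(), section, SECTION_HINT.get(section))
```

The two identical sentences end up in different sections, so the second mention receives a Family hint while the first receives none.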

Following these suggestions, you will be able to use assertion labels in your clinical annotation project to make it successful.
