A single short GPT-4o query consumes about 0.43 Wh of electricity. Scale that to 700 million queries per day, and the annual electricity consumption is comparable to that of 35,000 U.S. residential households, or 50 inpatient hospitals.

Most hospitals deploying clinical LLMs have no idea how much energy their AI systems consume. As LLMs increasingly power clinical workflows (chart abstraction, coding, decision support, documentation), their energy footprint scales silently. Recent studies report that inference, not just training, can account for up to 90% of a model’s total lifecycle energy use. For continuous clinical deployments processing millions of notes annually, inefficient models translate to substantial electricity consumption, infrastructure costs, and carbon emissions.

Yet most healthcare organizations cannot answer basic questions: How much energy does our clinical AI actually consume? What’s our cost per processed note? Are we optimizing for efficiency or just throwing hardware at the problem?

It’s time to ask: How many tokens per joule does our LLM system deliver? And how can we optimize that ratio without degrading clinical performance?

In this post we outline:

- Why energy per token matters for clinical LLM deployments

- How to measure, benchmark, and monitor energy usage

- Engineering strategies (infrastructure, model & serving optimizations) to reduce the energy footprint

- How John Snow Labs’ architecture and approach support “Green AI” for healthcare

Why energy efficiency matters for Clinical LLM use

Environmental and regulatory context

Recent studies confirm that LLM inference, not just training, constitutes a major part of AI’s environmental footprint.

For large-scale, continuous clinical deployments (e.g. processing millions of clinical notes, coding workflows, registries, real‑time pipelines), inefficiency can lead to substantial electricity consumption.

As sustainability and carbon‑accounting become priorities (especially for large health systems, payers, or institutions with environmental, social, and governance (ESG) goals), being able to quantify, track, and reduce energy per inference becomes a material requirement, not an aspirational extra.

Operational cost and scalability

Hospitals and health systems often operate under constrained budgets, and compute costs (electricity, cooling, infrastructure) can escalate as data volume and usage increase.

Unoptimized inference pipelines might throttle scalability; bulky, inefficient LLM usage can lead to infrastructure bottlenecks, higher utility bills, and environmental compliance headaches.

Clinical, compliance, and ethical responsibility

Medical deployments are often already burdened with compliance, data governance, privacy, and auditability. Adding sustainability oversight aligns with emerging norms in “responsible AI.”

Energy‑aware design improves long-term maintainability, making it possible to scale inference workloads without excessive infrastructure bloat or surprise costs.

How to measure & benchmark LLM energy use

To optimize energy use, you must first measure it. Recent work offers guidance and growing consensus around metrics and methodology:

- A large-scale empirical study across 155 LLM architectures and 21 GPU configurations used prompt-level measurements to quantify energy consumption during inference.

- Others empirically measured inference cost per output token. For instance, on older LLMs (e.g. a 65B‑parameter LLaMA), the energy per output token was around 3–4 Joules.

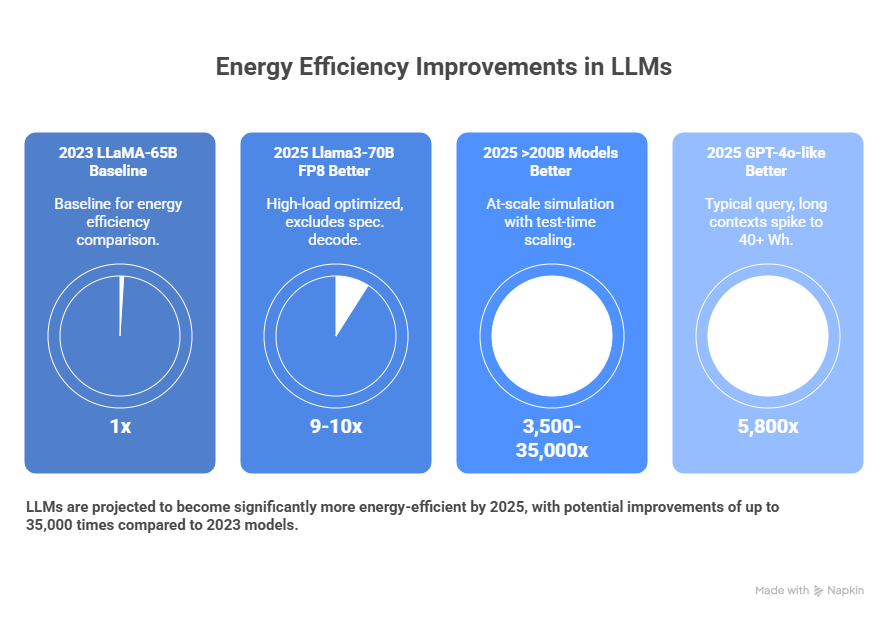

| Era/Source | Model/Hardware | Joules per Token (Output or Total) | Relative to 2023 LLaMA-65B (3.5 J) | Notes |
| --- | --- | --- | --- | --- |
| 2023 (Samsi) | LLaMA-65B / V100-A100 | ~3–4 J/output | 1× (baseline) | Empirical, multi-GPU, varying batch size and sequence length. |
| 2025 (Lin test) | Llama3-70B FP8 / 8xH100 vLLM | ~0.39 J/total | ~9–10× better | High-load optimized; excludes speculative decoding. |
| 2025 (arXiv:2509.20241) | >200B models / 8xH100 FP8 | ~0.1–1 mJ (implied median) | ~3,500–35,000× better | At-scale simulation; PUE 1.05–1.4; test-time scaling to 4+ Wh/query. |
| 2025 (Epoch AI) | GPT-4o-like / H100 | ~0.6 mJ/output (est.) | ~5,800× better | Typical query; long contexts spike to 40+ Wh. |

- Newer proposals suggest reporting Energy per Token as a standard efficiency metric, analogous to FLOPs or latency metrics in conventional ML benchmarking.

- Infrastructure-aware analyses show that energy efficiency depends not only on model architecture, but also on hardware, batch sizes, input/output sequence length, attention mechanism inefficiencies, GPU utilization, and deployment framework.
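Because the table reports joules per token while the targets later in this post use tokens per Wh, a small conversion helper can keep the two units straight. The sketch below is illustrative only: the function names are hypothetical, and the 3.5 J and 0.39 J inputs are simply the baseline and Llama3-70B figures from the table above.

```python
JOULES_PER_WH = 3600.0  # 1 Wh = 3600 J by definition


def tokens_per_wh(joules_per_token: float) -> float:
    """Convert energy per token (J) into tokens per watt-hour."""
    return JOULES_PER_WH / joules_per_token


def improvement_factor(baseline_j: float, new_j: float) -> float:
    """How many times less energy per token the new setup uses."""
    return baseline_j / new_j


BASELINE_J = 3.5  # midpoint of the ~3-4 J/token LLaMA-65B row
LLAMA3_J = 0.39   # Llama3-70B FP8 row

print(round(tokens_per_wh(BASELINE_J)))                     # 1029
print(round(tokens_per_wh(LLAMA3_J)))                       # 9231
print(round(improvement_factor(BASELINE_J, LLAMA3_J), 1))   # 9.0
```

The 9.0× result is consistent with the "~9–10× better" entry in the table.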

Based on this emerging literature, a practical “tokens‑per‑joule” benchmarking process could look like this:

- Instrument power consumption at GPU or server level – record watts drawn during inference workloads (ideally via data‑center power meters or GPU telemetry).

- Log token counts per inference request – track number of output tokens (or input + output) for each inference.

- Compute energy per token – total joules consumed ÷ number of tokens generated.

- Aggregate across workloads and batch runs – to get median / mean / percentile values (tokens/J, tokens/Wh) for each model + hardware + workload combination.

- Compare across alternatives (model size, quantized vs full precision, batching, hybrid architectures) to identify sweet spots: acceptable performance with lower energy cost.

- Track over time: as usage scales, infrastructure changes, or model versions evolve to detect drift or regressions in energy efficiency.
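The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production monitor: it assumes you already have power samples as `(timestamp_seconds, watts)` pairs from whatever source you trust (GPU telemetry or a rack power meter) and a token count per run. All function names and the sample data are hypothetical.

```python
import math
from statistics import mean, median


def energy_joules(samples):
    """Trapezoidal integration of (timestamp_seconds, watts) samples."""
    total = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        total += 0.5 * (p0 + p1) * (t1 - t0)
    return total


def joules_per_token(samples, tokens):
    """Energy per token for one run: total joules / tokens generated."""
    if tokens <= 0:
        raise ValueError("tokens must be positive")
    return energy_joules(samples) / tokens


def percentile(values, q):
    """Nearest-rank percentile, q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(runs):
    """Aggregate a list of (samples, tokens) runs into summary metrics."""
    per_token = [joules_per_token(s, n) for s, n in runs]
    return {
        "median_j_per_token": median(per_token),
        "mean_j_per_token": mean(per_token),
        "p95_j_per_token": percentile(per_token, 95),
        "median_tokens_per_wh": 3600.0 / median(per_token),
    }


# Hypothetical measurements: (power samples, tokens generated) per run.
runs = [
    ([(0.0, 300.0), (1.0, 320.0), (2.0, 310.0)], 200),  # 625 J
    ([(0.0, 400.0), (2.0, 400.0)], 400),                # 800 J
    ([(0.0, 250.0), (1.0, 250.0)], 50),                 # 250 J
]
print(summarize(runs))
```

Running one `summarize` call per model, hardware, and workload combination gives the comparable figures the last two steps call for.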

Once benchmarked, an organization can set targets (e.g. “>= 250 tokens per Wh at 95th percentile”) and monitor regressions – operationalizing “Green AI” as part of clinical AI governance.
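One way to turn such a target into an automated check is sketched below. It rests on an interpretation that the post does not spell out: that "at 95th percentile" means at least 95% of requests should reach the target efficiency. The function names, the 10% regression tolerance, and the sample values are all hypothetical.

```python
def fraction_meeting_target(tokens_per_wh, target):
    """Share of requests whose efficiency is at or above the target."""
    if not tokens_per_wh:
        raise ValueError("need at least one measurement")
    return sum(v >= target for v in tokens_per_wh) / len(tokens_per_wh)


def meets_target(tokens_per_wh, target=250.0, required_fraction=0.95):
    """True if enough requests reach the target (assumed interpretation)."""
    return fraction_meeting_target(tokens_per_wh, target) >= required_fraction


def regressed(previous_median, current_median, tolerance=0.10):
    """Flag a drop in median tokens/Wh larger than `tolerance`."""
    return current_median < previous_median * (1.0 - tolerance)


# Hypothetical per-request efficiency values (tokens per Wh).
last_week = [900.0] * 20
this_week = [900.0] * 17 + [240.0] * 3

print(meets_target(last_week))   # True: every request is above 250
print(meets_target(this_week))   # False: only 85% reach the target
print(regressed(1000.0, 850.0))  # True: median fell by more than 10%
```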

Engineering strategies to reduce energy footprint of Clinical LLM inference

Based on research and emerging best practices, the following levers offer the biggest real-world gains:

| Lever | Benefit / Trade-off |
| --- | --- |
| Model pruning / quantization / smaller models for less critical tasks | Reducing precision (e.g. from FP32 to FP16 / INT8) or using smaller or distilled models can drastically cut energy per token, often with minimal impact on utility for many NLP tasks. Studies have shown inference energy can drop by up to 70–80%. |
| Batching and request aggregation | Serving multiple inference requests in a batch improves GPU utilization and amortizes startup overhead, which reduces per‑token energy consumption compared to naive one‑by‑one serving. |
| Dynamic model selection / hybrid pipelines | Use smaller, efficient models for “simple” tasks (e.g. entity extraction, normalization), reserve large models for complex reasoning. This reduces average energy per token across workflows. |
| Efficient hardware and utilization optimization | Use energy‑efficient GPUs/accelerators; monitor utilization and avoid idle spin; optimize memory and attention operations. Recent studies found that certain components (attention blocks) are disproportionately energy‑hungry, suggesting architecture‑level optimization may pay off. |
| Caching, retrieval-augmented workflows, and output reuse | For repetitive tasks or common prompts (e.g., standardized documentation, coding, summaries), caching outputs or using smaller retrieval-based modules avoids redundant generation. This reduces energy usage without sacrificing throughput. |
| Infrastructure-level optimizations: renewable energy sourcing, efficient cooling, data‑center PUE optimization | Deploying inference in energy‑efficient data centers, using renewable energy, optimizing cooling, and using efficient scheduling can reduce carbon footprint even if absolute energy consumption remains sizable. |

Combining several of these levers (model optimization, batching, infrastructure tuning) can lead to 8–20× reductions in energy per query compared to naive deployment, especially on heavy, long‑context workloads.
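To make the hybrid-pipeline lever concrete, the sketch below routes "simple" task types to a small model and everything else to a large one, then computes the blended energy per token for a workload. The model names, the per-token energy figures, and the task mix are hypothetical placeholders; in practice they would come from your own benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    joules_per_token: float  # hypothetical; measure this in your own setup


SMALL = ModelProfile("small-extractor", 0.05)
LARGE = ModelProfile("large-reasoner", 0.40)

# Task types treated as "simple", following the examples in the table.
SIMPLE_TASKS = {"entity_extraction", "normalization"}


def route(task_type: str) -> ModelProfile:
    """Send simple tasks to the small model, everything else to the large one."""
    return SMALL if task_type in SIMPLE_TASKS else LARGE


def average_joules_per_token(workload):
    """Blended J/token for an iterable of (task_type, tokens) pairs."""
    total_energy = 0.0
    total_tokens = 0
    for task_type, tokens in workload:
        total_energy += route(task_type).joules_per_token * tokens
        total_tokens += tokens
    return total_energy / total_tokens


# Hypothetical mix: 90% of tokens come from simple tasks.
workload = [
    ("entity_extraction", 800),
    ("normalization", 100),
    ("complex_reasoning", 100),
]
blended = average_joules_per_token(workload)
print(f"blended: {blended:.3f} J/token vs all-large: {LARGE.joules_per_token:.3f}")
```

With these placeholder numbers the blended figure is 0.085 J/token, roughly 4.7× lower than sending every request to the large model. The real gain depends entirely on the task mix and on measured per-model energy.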

Why John Snow Labs is well positioned to lead “Green AI” for healthcare

John Snow Labs’ stack and architecture deliver many of the levers above, making it possible to build energy‑efficient, compliance‑ready clinical NLP/LLM systems at scale:

- Flexible model architecture and pipeline modularity – John Snow Labs supports custom pipelines, enabling hybrid deployments: lighter models for extraction & coding tasks, heavier models for reasoning only where needed. This matches the “use‑the‑right‑tool” philosophy crucial for energy efficiency.

- Support for quantization, efficient inference, and batch serving – Because John Snow Labs builds on scalable infrastructure (Spark, distributed compute), it is well-suited to deploy efficient inference at scale, with batching and resource pooling.

- On‑premises / private‑cloud deployment options – Critical for healthcare environments where data governance, privacy, and regulatory compliance matter. With controlled infrastructure, it is possible to optimize GPU utilization, scheduling, and power/cooling efficiency, reducing waste.

- Integration with broader data and compute pipelines – Combining structured data processing, NLP, LLM inference, and data warehousing enables reuse (caching results, avoiding redundant generation), improving overall resource efficiency.

- Governance, auditability, and transparency – Because John Snow Labs systems allow measurement, logging, and traceability, organizations can track energy use, deploy “tokens‑per‑joule” metrics, and integrate sustainability into AI governance as a first‑class dimension.

In short, John Snow Labs enables not just “AI for healthcare,” but sustainable, scalable, compliant AI, validating ROI not just in clinical outcomes but in resource use, cost, and environmental impact.

Practical recommendations for healthcare organizations & AI teams

If you are building a clinical NLP/LLM deployment and care about sustainability, start with this checklist:

- Baseline your energy footprint now: monitor inference servers, log token counts, compute energy per token.

- Set internal energy‑efficiency targets: e.g., “tokens per Wh,” “Wh per 1,000 notes processed,” or “kWh per 1,000 patients per year.”

- Employ hybrid model and pipeline architecture: use smaller/optimized models for routine tasks; reserve large models for complex reasoning; reuse outputs when possible.

- Optimize serving infrastructure: use batching, efficient GPU scheduling, off-peak workloads, autoscaling, and energy‑efficient hardware.

- Monitor and audit over time: watch for drift, load increase, regression in efficiency; track utilization; re-evaluate model and serving strategy periodically.

- Include energy metrics in compliance and governance frameworks: e.g. EHR/AI governance, sustainability reporting, carbon footprint policies.

- Report transparently: publish “tokens‑per‑joule” (or similar) metrics internally and externally to build trust, compare across deployments, and support sustainability goals.
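As a starting point for the baseline and target items in this checklist, the following sketch converts a measured energy per token into annual kWh, Wh per 1,000 notes, and rough cost and carbon figures. Every default is a placeholder assumption rather than a recommendation: the PUE of 1.2 merely sits inside the 1.05–1.4 range cited earlier, and the electricity price and grid carbon intensity must be replaced with your own values.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def annual_footprint(notes_per_year, tokens_per_note, joules_per_token,
                     pue=1.2, usd_per_kwh=0.12, kg_co2_per_kwh=0.4):
    """Estimate yearly facility energy, cost, and emissions for note processing.

    pue, usd_per_kwh, and kg_co2_per_kwh are placeholder defaults.
    """
    it_joules = notes_per_year * tokens_per_note * joules_per_token
    facility_kwh = it_joules * pue / JOULES_PER_KWH  # PUE adds cooling/overhead
    wh_per_note = facility_kwh * 1000.0 / notes_per_year
    return {
        "kwh_per_year": facility_kwh,
        "wh_per_1000_notes": wh_per_note * 1000.0,
        "usd_per_year": facility_kwh * usd_per_kwh,
        "kg_co2_per_year": facility_kwh * kg_co2_per_kwh,
    }


# Hypothetical deployment: 5 million notes/year, 2,000 tokens per note,
# at the 3.5 J/token baseline from the benchmarking table.
report = annual_footprint(5_000_000, 2_000, 3.5)
for metric, value in report.items():
    print(f"{metric}: {value:,.1f}")
```

Note that this only covers energy attributable to token generation; idle servers, storage, and networking would need to be metered separately.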

Why “Green AI” is more than a buzzword: it is a strategic advantage

- Cost‑effective scaling: Lower energy consumption translates directly to lower utility costs, reduced need for overprovisioned infrastructure, and better resource utilization, all critical as clinical data volumes grow.

- Risk mitigation: Energy‑efficient, auditable systems are easier to justify to hospital leadership, compliance officers, or sustainability committees. They reduce the risk of “AI sprawl” leading to runaway power use or infrastructure bloat.

- Sustainability and corporate responsibility: As ESG becomes more relevant in healthcare, being able to show quantified energy savings and carbon-impact reduction becomes a competitive and ethical differentiator.

- Regulation readiness: In some regions, environmental reporting and sustainability requirements for large compute deployments are emerging. Having a robust energy‑accounting and optimization framework offers a head‑start.

- Better performance and robustness: Efficiency often correlates with better utilization, stable infrastructure, and fewer bottlenecks. This supports faster, more reliable inference, lower latency, and a better user experience.

Conclusion

As clinical AI scales from pilots to production, from hundreds of notes to millions, energy consumption scales with it. And unlike training costs, which are largely one-time, inference costs accumulate with every query.

The hospitals that will succeed with AI at scale aren’t the ones with the biggest GPU clusters. They’re the ones who measure energy per token, optimize it, and treat efficiency as a first-class requirement alongside accuracy and compliance.

By measuring tokens per joule, benchmarking inference energy, and optimizing across model, infrastructure, and serving architecture, institutions can turn AI deployment into a sustainable, efficient, and long‑term asset.

John Snow Labs is uniquely positioned to support this transformation: its tools make it possible to build energy‑aware, domain‑tuned, compliant, scalable NLP systems, delivering not just clinical value, but cost‑effective, environmentally responsible AI for healthcare.

Because sustainable AI isn’t just about corporate responsibility or ESG reporting, though those matter. It’s about building systems you can actually afford to run at the scale healthcare demands. Start measuring today. Your CFO and your sustainability officer will both thank you.