TL;DR Summary

As AI becomes embedded in clinical workflows, hospitals must transition from “trust by default” to a Zero Trust AI (ZTAI) architecture. This approach treats AI models and their outputs as sensitive infrastructure, equivalent to EHR or PACS systems, requiring continuous verification, least-privilege access, and immutable auditing. The risks are not theoretical: healthcare AI systems without proper governance face PHI leakage, model manipulation, and systemic failures that can compromise patient safety. Organizations such as Providence St. Joseph Health (processing 700 million patient notes) and Novartis (deploying AI in air-gapped networks) demonstrate that Zero Trust principles and operational AI can coexist. Ultimately, ZTAI is the only path to moving AI from “pilot-grade” experiments to safe, “infrastructure-grade” clinical reality.

When a major academic medical center deployed an AI documentation assistant in 2024, its security team discovered that the system had unrestricted access to the entire EHR database, far beyond what was clinically necessary. The AI could theoretically read any patient record, not just those relevant to the clinician using it. This violated the principle of least-privilege access that governed every other clinical system. The deployment was immediately paused.

This scenario illustrates a broader problem: as hospitals increasingly incorporate large language models (LLMs) and AI-powered tools into care, administration, and decision support, many are treating these systems as optional add-ons rather than as core infrastructure requiring robust security, governance, and trust. Treating them as infrastructure means applying a “zero-trust” mindset to AI: verifying every access, limiting permissions, monitoring behavior, and building oversight into every layer. Without these safeguards, AI systems in healthcare risk exposing patient data, undermining safety, and eroding institutional trust.

What is “zero‑trust AI” and why it matters for hospitals

The concept of Zero Trust Architecture (ZTA) originates from cybersecurity. The U.S. National Institute of Standards and Technology (NIST) defines Zero Trust Architecture in Special Publication 800-207 as a security paradigm where no implicit trust is granted based on network location. Instead of assuming that users or systems inside a network “can be trusted,” Zero Trust operates under the maxim “never trust, always verify.” Every access request, whether from a user, a device, or software, must be authenticated and authorized. Permissions follow the principle of least privilege, and access is continuously revalidated.

When extended to AI, especially powerful models like LLMs or agentic systems, this becomes Zero Trust AI (ZTAI): treating AI models and their outputs as sensitive infrastructure, placing them under the same or stricter controls as EHR databases, PACS systems, or other regulated healthcare IT systems.

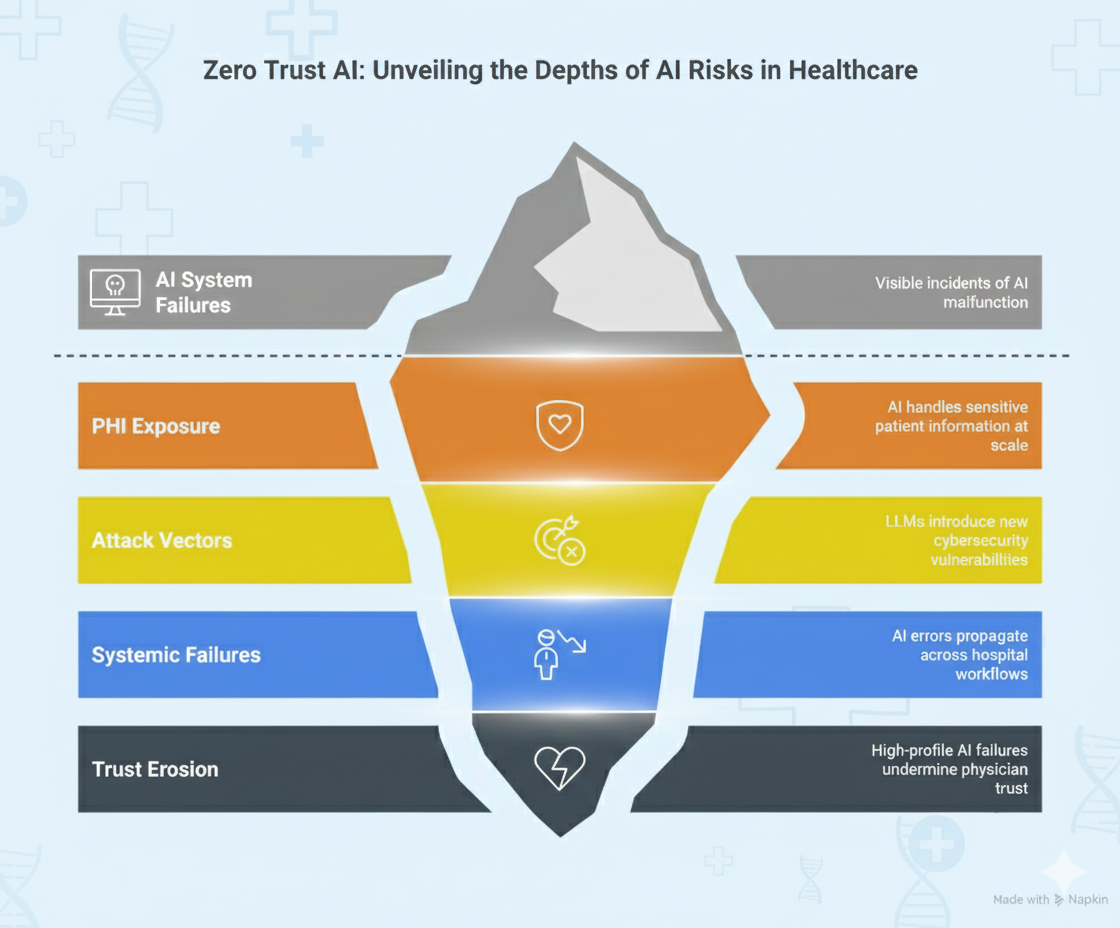

This matters because AI systems in healthcare operate fundamentally differently from traditional software:

AI systems handle and generate PHI at scale

Unlike traditional applications with well-defined data access patterns, LLMs can process vast amounts of clinical data, synthesize information across patient records, and generate new text that may inadvertently contain sensitive information. A single AI query might access dozens of patient records to formulate a response, creating exposure risks that traditional role-based access control was not designed to handle.

LLMs introduce unique attack vectors

Traditional cybersecurity focuses on preventing unauthorized access to systems and data. But LLMs bring entirely new vulnerabilities: adversarial inputs that manipulate model behavior, training data poisoning that corrupts model knowledge, model inversion attacks that extract training data, and prompt injection that bypasses safety controls.

AI failures become systemic

As AI becomes embedded across workflows such as documentation, triage, coding, decision support, and quality measurement, its failures are no longer isolated. A compromised or malfunctioning AI system can propagate errors across an entire hospital. Consider an AI coding assistant that begins systematically mis-coding diagnoses: the error affects billing, quality metrics, population health analytics, and clinical decision support simultaneously. This is fundamentally different from a single application failure.

Trust erosion is catastrophic

Clinical AI adoption depends on physician trust. A single high-profile failure (leaked PHI, a serious diagnostic error, or evidence of algorithmic bias) can undermine years of implementation work. Unlike consumer applications, where users tolerate occasional errors, healthcare AI must maintain near-perfect reliability to remain clinically credible.

In short: AI must be treated as a trust-no-one, verify-everyone system, because the stakes (patient safety, privacy, compliance, institutional trust) are too high for “trust and hope.”

What risks arise when AI is not treated with zero‑trust discipline

The risks of inadequate AI governance are concrete. Without Zero Trust discipline, hospitals face:

Data breaches and PHI leakage

AI tools often access and generate patient data across workflows. Without strict access controls, logging, and authorization, several failure modes emerge:

- Overprivileged access: An AI assistant with read access to the entire EHR database rather than just relevant patient records

- Output leakage: Model responses that inadvertently include PHI from unrelated patients due to training data memorization

- Logging vulnerabilities: AI queries and responses stored in unsecured logs that become targets for data exfiltration

- Third-party exposure: Cloud-based AI services that transmit PHI to external systems without proper safeguards

Providence St. Joseph Health demonstrates what rigorous data protection looks like: when processing 700 million patient notes, it validated its de-identification system by having human experts review 34,701 sentences across 1,000 randomly selected notes, measuring a PHI leak rate of 0.81%. This level of validation rigor, treating every data transformation as security-critical, is what Zero Trust AI demands.
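The validation approach described above can be sketched in Python. It consists of human review of a random sample, with the result reported as a leak rate. This is a minimal illustration, not Providence's pipeline. The function names are hypothetical, and the counts in the example are invented. The Wilson score interval is one standard way to put a confidence bound on a proportion estimated from a sample; the source does not say which method, if any, Providence used.

```python
import math
import random
from typing import List, Sequence, Tuple


def sample_for_review(note_ids: Sequence[str], k: int, seed: int) -> List[str]:
    """Draw a reproducible simple random sample of notes for human PHI review."""
    rng = random.Random(seed)  # fixed seed so auditors can re-draw the same sample
    return rng.sample(list(note_ids), k)


def wilson_interval(leaks: int, reviewed: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a leak proportion (z=1.96 gives ~95% confidence)."""
    if reviewed <= 0 or not 0 <= leaks <= reviewed:
        raise ValueError("need 0 <= leaks <= reviewed and reviewed > 0")
    p = leaks / reviewed
    denom = 1 + z**2 / reviewed
    centre = (p + z**2 / (2 * reviewed)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / reviewed + z**2 / (4 * reviewed**2))
    return max(0.0, centre - half), min(1.0, centre + half)


# Hypothetical example: 20 sentences with residual PHI out of 2,500 reviewed.
low, high = wilson_interval(20, 2500)
print(f"point estimate {20 / 2500:.2%}, 95% CI {low:.2%} to {high:.2%}")
```

If a leak rate gates the release of de-identified data, compare the interval's upper bound against the acceptance threshold. That is more conservative than comparing the point estimate.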

Model‑level vulnerabilities: poisoning, inversion, adversarial attacks

AI models can be manipulated in ways that traditional software cannot:

- Training data poisoning: Malicious actors corrupting training data to embed backdoors or biased behaviors

- Model inversion: Extracting sensitive training data by analyzing model outputs

- Adversarial inputs: Crafted queries that cause models to behave unpredictably or expose information

- Prompt injection: Manipulating model behavior by embedding malicious instructions in user inputs

These attacks exploit the statistical nature of large language models. Traditional software behaves largely deterministically and can be tested. LLMs generate probabilistic outputs, so small changes in input can produce unexpected behavior that conventional testing may not catch.

Uncontrolled access to corporate resources

LLM-based agents often require access to multiple systems: EHRs, imaging archives, lab data, scheduling systems, and billing platforms. Without strict segmentation:

- An AI agent intended for documentation could theoretically modify billing records

- A compromised inference endpoint could become a pivot point for lateral movement across hospital networks

- API credentials stored insecurely could enable unauthorized system access

- Lack of rate limiting could allow data exfiltration through repeated queries
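One common control for the last point is a per-credential token bucket enforced at the API gateway. The Python sketch below is illustrative only. The class names, credential IDs, and limits are hypothetical. A production gateway would also keep this state in shared storage rather than in process memory.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = float(capacity)
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class GatewayRateLimiter:
    """One bucket per credential: heavy use by one key does not consume another's budget."""

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, credential_id: str) -> bool:
        bucket = self._buckets.get(credential_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, self.clock)
            self._buckets[credential_id] = bucket
        return bucket.allow()
```

For example, `GatewayRateLimiter(capacity=30, rate=0.5)` would allow a burst of 30 queries and then one query every two seconds per credential (hypothetical numbers). A script looping through patient records would be throttled quickly, and the throttling events themselves become a signal for anomaly detection.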

Novartis addressed this by deploying AI systems in air-gapped networks where external API access is prohibited. Its Trial Master File automation processes clinical trial documentation under strict network isolation and access controls: Zero Trust principles applied at the infrastructure level. This demonstrates that high-security requirements and AI functionality can coexist when the architecture prioritizes security from the outset.

Lack of auditability and traceability

Without rigorous logging and governance, critical questions become unanswerable:

- Who prompted the AI system and what was asked?

- What patient data did the model access to generate its response?

- Which model version produced this output?

- Who acted on the AI’s recommendation and with what outcome?

- Can we reproduce this result for regulatory review?

This audit gap undermines accountability, makes compliance impossible to prove, and prevents root cause analysis when errors occur. In healthcare, where liability and regulatory requirements demand documentation, AI systems without comprehensive audit trails are fundamentally unsuitable for clinical use.

Bias, fairness, and equity risk amplified

AI systems can encode or amplify bias present in training data, leading to:

- Diagnostic algorithms that perform worse for underrepresented populations

- Risk stratification models that systematically under-prioritize certain demographic groups

- Clinical decision support that reflects historical disparities in care delivery

- Natural language processing that misinterprets cultural or linguistic variations

Without continuous monitoring and oversight, these biases remain invisible until they cause measurable harm. Healthcare organizations have a legal and ethical obligation to ensure equitable care, making bias monitoring a non-negotiable component of AI governance.
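A basic form of this monitoring compares a performance metric across demographic groups on recent, labelled cases. The sketch below uses sensitivity (true positive rate) with a fixed tolerance. Both the metric and the 0.05 gap are illustrative assumptions. Small groups would need confidence intervals before any gap is treated as real.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def sensitivity_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records: (group, y_true, y_pred) with binary labels; returns TPR per group."""
    positives: Dict[str, int] = defaultdict(int)
    true_pos: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            true_pos[group] += int(y_pred == 1)
    return {g: true_pos[g] / positives[g] for g in positives}


def flag_disparities(rates: Dict[str, float], max_gap: float = 0.05) -> List[str]:
    """Groups whose sensitivity trails the best-performing group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)
```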

What Zero‑Trust AI in a hospital should look like: key principles and controls

Organizations like Intermountain Health, which processes hundreds of millions of clinical documents on unified data platforms, demonstrate that rigorous governance and operational scale can coexist. Below is a blueprint for implementing Zero Trust AI in hospital contexts:

Strong identity, authentication, and least-privilege access

Every interaction with AI systems must follow these principles:

- Unique identity verification: Each user, system, or AI agent must be uniquely identified and authenticated before any access, even when inside the corporate network. Multi-factor authentication (MFA) should be required for all AI system access.

- Least-privilege permissions: AI agents receive only the minimal permissions required for their specific function. An AI documentation assistant needs read access to the current patient’s chart, not the entire EHR database. A coding assistant needs to write to draft coding fields, not modify finalized billing records.

- Dynamic, context-aware authorization: Permissions adjust based on context. An AI system accessible during business hours might require additional authentication for after-hours access. Access to high-risk functions (PHI export, bulk queries) triggers elevated approval workflows.

- Continuous revalidation: Sessions expire, credentials are refreshed, and access is periodically revalidated rather than granted indefinitely.
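The first three principles above can be combined into a single authorization decision evaluated on every request. This Python sketch is a simplified illustration. The scope names, the 07:00 to 19:00 business-hours window, and the allow / deny / step-up outcomes are hypothetical choices. They do not refer to any particular EHR or identity product.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import FrozenSet

HIGH_RISK_ACTIONS = frozenset({"phi:export"})  # hypothetical scope names
BUSINESS_HOURS = (time(7, 0), time(19, 0))     # hypothetical window


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    scopes: FrozenSet[str]  # least privilege: only what this agent's function needs


@dataclass(frozen=True)
class AccessRequest:
    agent: AgentIdentity
    action: str
    patient_id: str
    active_patient_id: str  # patient open in the requesting clinician's session
    at: datetime
    step_up_verified: bool = False  # e.g. a fresh MFA challenge was passed


def authorize(req: AccessRequest) -> str:
    """Return 'allow', 'deny', or 'step_up' (require additional authentication)."""
    if req.action not in req.agent.scopes:
        return "deny"  # action outside the agent's granted scopes
    if req.patient_id != req.active_patient_id:
        return "deny"  # only the patient currently in context
    if req.action in HIGH_RISK_ACTIONS and not req.step_up_verified:
        return "step_up"
    start, end = BUSINESS_HOURS
    if not (start <= req.at.time() < end) and not req.step_up_verified:
        return "step_up"
    return "allow"
```

Session expiry (the fourth principle) would sit alongside this check, for example by rejecting tokens older than a fixed lifetime before `authorize` is called.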

Network segmentation and micro-segmentation of AI pipelines

Healthcare organizations should reference NIST Special Publication 800-207 when designing AI infrastructure segmentation. Key implementation patterns include:

- Microsegmentation of inference endpoints: AI model servers operate in isolated network zones with strict firewall rules permitting only necessary traffic. An AI clinical decision support system does not need network access to billing systems or HR databases.

- Secure enclaves for PHI workflows: Using hardware-based trusted execution environments (TEEs) or confidential computing to process sensitive data without exposing it to the broader system. These technologies combine hardware-enforced memory isolation with remote attestation, and are designed so that even system administrators cannot read data while it is in use.

- API gateways with authentication: All AI access mediated through centrally controlled gateways that enforce identity verification, rate limiting, and request validation. This creates a single control point for monitoring and enforcement.

- Containerization and isolation: AI workloads run in containers or virtual machines with minimal privileges, preventing lateral movement if a container is compromised.
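Firewalls, network policies, or a service mesh ultimately enforce microsegmentation. The underlying rule can still be expressed as data: an explicit allow list of flows, with everything else denied. The zone names and ports below are hypothetical. A check like this could audit observed traffic (for example, from flow logs) against the intended design.

```python
from typing import FrozenSet, Iterable, List, Tuple

Flow = Tuple[str, str, int]  # (source zone, destination zone, destination port)

# Hypothetical allow list for an inference pipeline; anything not listed is denied.
ALLOWED_FLOWS: FrozenSet[Flow] = frozenset({
    ("api-gateway", "inference", 8443),
    ("inference", "feature-store", 5432),
    ("inference", "audit-log", 6514),
})


def is_flow_allowed(src: str, dst: str, port: int) -> bool:
    """Default deny: a flow is permitted only if it appears in the allow list."""
    return (src, dst, port) in ALLOWED_FLOWS


def find_violations(observed: Iterable[Flow]) -> List[Flow]:
    """Return observed flows that the segmentation policy does not permit."""
    return [flow for flow in observed if not is_flow_allowed(*flow)]
```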

Strict control over data flow and external access

Many AI systems require external connectivity: accessing medical knowledge bases, querying drug interaction databases, or using cloud-based inference. Zero Trust principles demand:

- Whitelist-based external access: Only explicitly approved external endpoints are reachable. Default-deny network policies prevent unauthorized outbound connections.

- Input and output validation: All data entering or leaving AI systems is validated against schemas, scanned for malicious content, and logged. This reduces the risk of prompt injection and data exfiltration, although no filter can rule out prompt injection entirely.

- PHI boundary enforcement: Systems that cross the PHI boundary (accessing identifiable patient data) are subject to additional controls, including regulatory-grade de-identification before data leaves secure zones.

- Rate limiting and anomaly detection: Unusual query patterns (such as bulk data access, repeated failed authentication, or abnormal data export volumes) should trigger automatic alerts and potential lockouts.
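One concrete form of output validation at the PHI boundary checks each response before release. The check looks for patient identifiers that do not belong to the patient in the current context. The sketch assumes a hypothetical MRN format: "MRN" followed by 7 to 10 digits. Pattern matching like this only catches structured identifiers. It is a last-line check, not a substitute for validated de-identification.

```python
import re
from typing import Iterable, List, Tuple

# Hypothetical identifier format: "MRN" followed by 7-10 digits.
MRN_PATTERN = re.compile(r"\bMRN[:#\s]*(\d{7,10})\b", re.IGNORECASE)

BLOCKED_MESSAGE = "Response withheld: it references a record outside the current patient context."


def foreign_mrns(response: str, allowed_mrns: Iterable[str]) -> List[str]:
    """MRNs mentioned in the response that are not in the allowed set."""
    allowed = set(allowed_mrns)
    return sorted({m for m in MRN_PATTERN.findall(response) if m not in allowed})


def release_or_block(response: str, allowed_mrns: Iterable[str]) -> Tuple[bool, str]:
    """Release the response only if every MRN it mentions is in the allowed set."""
    if foreign_mrns(response, allowed_mrns):
        return False, BLOCKED_MESSAGE
    return True, response
```

A blocked response should itself be logged and surfaced to the security team. A cross-patient reference is exactly the kind of event the audit trail exists to capture.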

Immutable audit logs and provenance tracking

Organizations processing sensitive clinical data at scale demonstrate what comprehensive audit trails require:

Every AI interaction must be logged immutably:

- Input: The complete query or prompt submitted to the system

- Context: User identity, timestamp, session information, authentication method

- Data access: Which patient records or datasets the model accessed

- Model version: Exact model version, weights, and configuration used

- Output: The complete response generated

- Action taken: Whether and how the output was used (approved, rejected, modified)

Providence’s production pipeline processing 100K-500K notes daily maintains full audit trails documenting every transformation, enabling them to prove compliance in regulatory reviews. This level of documentation is not optional. It is foundational to demonstrating that AI systems operate within approved parameters.

Audit logs must be:

- Immutable: Stored in tamper-evident or append-only systems (write-once storage, blockchain-based logging, or cryptographically signed logs)

- Comprehensive: Capturing sufficient detail for complete reconstruction of any interaction

- Searchable: Enabling rapid investigation and compliance reporting

- Retained: Kept for the period regulations require (HIPAA sets a six-year retention period for required compliance documentation; state laws may require longer)
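A hash chain illustrates tamper evidence. Each entry's hash covers the previous entry's hash, so editing, reordering, or deleting an earlier record breaks every later link. This Python sketch is a simplification under stated assumptions. The record fields mirror the audit fields listed earlier, and the class is hypothetical. On its own it only detects tampering. The latest hash still needs to be anchored somewhere an attacker cannot rewrite, such as write-once storage or a signed checkpoint.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64


def _link(prev_hash: str, payload: str) -> str:
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class HashChainedAuditLog:
    """Append-only, in-memory audit log whose entries are chained by SHA-256."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, str]] = []

    def append(self, record: Dict[str, Any]) -> str:
        # Canonical JSON so the same record always hashes the same way.
        payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
        prev = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        digest = _link(prev, payload)
        self._entries.append({"payload": payload, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every link; False if an entry was altered, reordered, or removed mid-chain."""
        prev = GENESIS_HASH
        for entry in self._entries:
            if entry["prev"] != prev or entry["hash"] != _link(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return True

    def head(self) -> str:
        """Latest hash; anchoring it externally is what makes truncation detectable."""
        return self._entries[-1]["hash"] if self._entries else GENESIS_HASH


# Usage with hypothetical field values mirroring the audit fields listed above.
audit_log = HashChainedAuditLog()
audit_log.append({
    "user": "dr_a",
    "auth_method": "mfa",
    "prompt": "Summarize today's vitals",
    "records_accessed": ["p1"],
    "model_version": "doc-llm-2024-05",
    "output": "Vitals stable; afebrile.",
    "action": "approved",
})
```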

Continuous monitoring, anomaly detection, and drift detection

Zero Trust demands active, not passive, security. AI systems require monitoring for:

Behavioral anomalies:

- Out-of-distribution inputs that may indicate adversarial attacks

- Unusual access patterns suggesting compromised credentials

- Performance degradation that could signal model corruption

- Output patterns inconsistent with expected behavior

Model drift and performance degradation:

- Accuracy decline over time as real-world data diverges from training data

- Bias amplification as edge cases accumulate

- Concept drift where the relationships the model learned become outdated
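Drift of this kind is often tracked by comparing two windows: the distribution of a model input or output score in a recent window against a baseline window. The sketch below uses the Population Stability Index (PSI), a common choice but not the only one. The bin edges are hypothetical. The widely quoted thresholds (below 0.1 stable, above 0.25 significant shift) are rules of thumb, not standards.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values per bin; values outside the edges fall into the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    baseline: Sequence[float], recent: Sequence[float], edges: Sequence[float], eps: float = 1e-6
) -> float:
    """PSI = sum over bins of (recent - baseline) * ln(recent / baseline)."""
    b = bin_proportions(baseline, edges)
    r = bin_proportions(recent, edges)
    # eps avoids log(0) when a bin is empty in one window.
    return sum((ri - bi) * math.log((ri + eps) / (bi + eps)) for bi, ri in zip(b, r))
```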

Security events:

- Failed authentication attempts

- Privilege escalation attempts

- Unusual data export volumes

- Queries attempting to extract training data

Automated alerting, sandboxing, or rollback procedures activate when suspicious behavior is detected. Critical systems should fail-safe: when monitoring detects anomalies, the system should default to human review rather than continuing automated operation.
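A simple check makes the fail-safe rule concrete. It compares the current volume of requests (or data exported) against a recent baseline, and it switches to human review when the volume is far outside the usual range. The z-score test, the threshold of 4, and the mode names are hypothetical simplifications. Real deployments typically combine several signals and account for daily and weekly seasonality.

```python
import math
import statistics
from typing import Sequence


def volume_zscore(history: Sequence[float], current: float) -> float:
    """How many standard deviations the current count sits from the baseline mean."""
    mean = statistics.fmean(history)
    sd = statistics.pstdev(history)
    if sd == 0:
        return 0.0 if current == mean else math.inf
    return (current - mean) / sd


def operating_mode(history: Sequence[float], current: float, threshold: float = 4.0) -> str:
    """Fail safe: anomalous volume switches the workflow to human review."""
    z = volume_zscore(history, current)
    return "human_review" if abs(z) >= threshold else "automated"
```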

Human-in-the-loop governance and oversight

Zero Trust AI recognizes that AI outputs are recommendations, not decisions, especially for high-stakes clinical tasks:

Validation workflows: Clinicians or coders must review and approve AI-generated outputs before they enter production systems. An AI-suggested diagnosis requires physician verification. AI-generated billing codes require coder approval. AI-recommended treatment plans require clinical oversight.

Defined accountability: Clear assignment of responsibility for AI oversight. Who reviews outputs? Who approves deployment of new models? Who responds when errors are detected? How are mistakes handled? Who bears liability if AI output leads to patient harm?

Escalation procedures: Complex or uncertain cases automatically escalate to human experts. AI systems should recognize their limitations and request human intervention rather than generating low-confidence outputs.
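The validation and escalation rules above amount to a small state machine in which nothing reaches the record without a named human approver. In this Python sketch the statuses, the 0.8 confidence cut-off, and the function names are hypothetical. Model confidence scores would also need to be checked for calibration before being used to route cases.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Status(Enum):
    DRAFT = "draft"
    NEEDS_EXPERT = "needs_expert"  # low confidence: escalate to a senior reviewer
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class AISuggestion:
    suggestion_id: str
    content: str
    confidence: float
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None


def triage(s: AISuggestion, min_confidence: float = 0.8) -> AISuggestion:
    """Escalate low-confidence outputs instead of presenting them as routine drafts."""
    if s.confidence < min_confidence:
        s.status = Status.NEEDS_EXPERT
    return s


def review(s: AISuggestion, reviewer: str, approve: bool) -> AISuggestion:
    """Record a human decision; each suggestion can be reviewed only once."""
    if s.status not in (Status.DRAFT, Status.NEEDS_EXPERT):
        raise ValueError(f"{s.suggestion_id} has already been reviewed")
    s.status = Status.APPROVED if approve else Status.REJECTED
    s.reviewer = reviewer
    return s


def commit_to_record(s: AISuggestion, record: List[Tuple[str, str, str]]) -> None:
    """Only human-approved suggestions may enter the production record."""
    if s.status is not Status.APPROVED or s.reviewer is None:
        raise PermissionError(f"{s.suggestion_id} has not been approved by a reviewer")
    record.append((s.suggestion_id, s.content, s.reviewer))
```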

Organizations deploying AI at scale report that human-in-the-loop workflows actually increase clinician trust and adoption. Rather than fearing replacement, clinicians appreciate AI as a tool that augments their capabilities while preserving their clinical judgment.

Data governance, privacy, and compliance enforcement

Healthcare AI must comply with multiple regulatory frameworks:

Privacy protection:

- Encryption in transit (TLS 1.3+) and at rest (AES-256)

- De-identification or anonymization for non-clinical uses

- Consent management and patient opt-out mechanisms

- Data minimization: collecting only necessary information

Access controls:

- Role-based access control (RBAC) defining who can access which data

- Audit trails of all data access

- Automatic access revocation when employment ends

- Regular access reviews to identify over-privileged accounts
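The last two access-control items lend themselves to automation. The minimal sketch below assumes that permission grants and usage logs are available as simple sets per account; the account and permission names are hypothetical. It does two things. First, it flags permissions that were granted but never exercised during the review window. Second, it lists accounts that are no longer active.

```python
from typing import Dict, List, Set


def unused_permissions(granted: Dict[str, Set[str]], used: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Per account, permissions granted but not exercised in the review window."""
    report: Dict[str, Set[str]] = {}
    for account, perms in granted.items():
        unused = perms - used.get(account, set())
        if unused:
            report[account] = unused
    return report


def accounts_to_revoke(granted: Dict[str, Set[str]], active_accounts: Set[str]) -> List[str]:
    """Accounts that still hold grants but no longer belong to an active person or service."""
    return sorted(account for account in granted if account not in active_accounts)
```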

Compliance documentation:

- HIPAA compliance for PHI handling

- GDPR compliance for EU patients

- State-specific laws (e.g., the California Consumer Privacy Act, CCPA)

- FDA regulations for clinical decision support systems

- CMS requirements for algorithms affecting payment

Regular validation and updates:

- Periodic model performance reviews

- Regular security assessments and penetration testing

- Bias audits examining model performance across demographic groups

- Documentation updates as regulations evolve

Why is this approach not optional? Because AI failures can become patient‑harm risks

Healthcare is not like retail, marketing, or entertainment, where AI errors are inconvenient or embarrassing. The consequences of AI failures in hospitals are fundamentally different:

Direct patient harm is possible

A misdiagnosis suggested by AI and accepted without adequate review, a medication dosing error from an AI clinical decision support system, or a missed critical finding in an AI-analyzed imaging study can directly injure or kill patients. These are not theoretical risks; they are scenarios that keep hospital risk management teams awake at night.

Systemic impact magnifies individual errors

AI-driven workflows often integrate deeply across hospital operations. A single compromised AI pipeline can affect:

- EHR documentation quality

- Billing accuracy and compliance

- Quality measure reporting

- Clinical decision support reliability

- Operational logistics and resource allocation

- Population health risk stratification

An error in one component propagates downstream, potentially affecting thousands of patients before detection.

Regulatory penalties are severe

Healthcare organizations face substantial penalties for HIPAA violations, billing fraud, and quality-of-care issues. AI systems that leak PHI, generate fraudulent billing codes, or produce biased clinical recommendations expose hospitals to:

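- Civil monetary penalties (statutory tiers of $100-$50,000 per violation, with annual caps of up to $1.5M per violation category, adjusted annually for inflation)

- Criminal penalties for knowingly obtaining or disclosing PHI in violation of HIPAA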

- Exclusion from Medicare/Medicaid programs

- Reputation damage and patient trust erosion

- Class-action lawsuits from affected patients

Trust erosion can be catastrophic

Clinical AI adoption depends on physician trust. Intermountain Health’s 70% reduction in document review time came from trustworthy automation: specialized medical text summarization that clinicians could rely on. When trust exists, AI accelerates workflows. When trust is absent, AI systems are ignored or actively resisted, and implementation investments fail to deliver ROI.

A single high-profile AI failure can undermine years of careful implementation work. Healthcare organizations cannot afford to learn AI security lessons through patient harm events.

In other words: AI in healthcare must be infrastructure-grade, not pilot-grade.

Steps hospitals should take today toward zero‑trust AI

To move from experimentation to safe, institutional-grade AI, hospitals should:

- Inventory AI assets and data flows – what models are used, where data flows, who has access.

- Define governance and security policy – who owns AI safety, who approves deployment, how approvals and audits happen.

- Segment AI infrastructure – isolate model servers, data stores, inference endpoints; use secure enclaves or confidential computing for PHI workflows. Organizations implementing de-identification infrastructure demonstrate how PHI boundary enforcement works in practice.

- Implement access controls and identity verification – RBAC, multi-factor authentication, least-privilege policies.

- Build audit logging and monitoring – record every AI interaction, monitor for anomalies or misuse, and alert security and clinical governance teams.

- Establish human‑in‑the‑loop review workflows for high-risk outputs.

- Conduct regular security and compliance assessments – penetration testing, adversarial robustness checks, drift monitoring, bias audits.

- Train staff and stakeholders – both clinical and IT teams must understand AI risks, limitations, governance processes, and shared accountability.
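The first step, an inventory, is most useful when each entry records the facts the later steps depend on. The minimal sketch below assumes a hypothetical record structure. Each AI asset lists its owner, deployment approval, data sources, and whether audit logging is on. A check then reports the gaps to close first.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AIAsset:
    name: str
    model_version: str
    owner: Optional[str]        # accountable person or team
    data_sources: List[str]     # documented data flows into the model
    handles_phi: bool
    audit_logging: bool
    approved_by: Optional[str]  # governance body that approved deployment


def inventory_gaps(assets: List[AIAsset]) -> Dict[str, List[str]]:
    """Map each asset name to the governance gaps found; assets with no gaps are omitted."""
    gaps: Dict[str, List[str]] = {}
    for a in assets:
        issues: List[str] = []
        if not a.owner:
            issues.append("no accountable owner")
        if not a.approved_by:
            issues.append("no deployment approval")
        if not a.audit_logging:
            issues.append("audit logging disabled")
        if a.handles_phi and not a.data_sources:
            issues.append("PHI data flows undocumented")
        if issues:
            gaps[a.name] = issues
    return gaps
```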

Conclusion: AI is powerful, but without zero‑trust, it is too risky for healthcare

The promise of AI in healthcare (faster documentation, automated coding, clinical decision support, predictive analytics, population health management) is real and compelling. Organizations already implementing these capabilities report substantial efficiency gains: up to 70% reduction in document review time, improved accuracy in risk adjustment coding, and enhanced ability to identify high-risk patients before adverse events occur.

But these tools bring new, amplified risks that traditional healthcare IT security was not designed to address. Treating AI as an optional add-on rather than as critical infrastructure invites catastrophic failures: data breaches affecting millions of patients, systematic diagnostic errors propagating across entire patient populations, compliance violations resulting in severe penalties, and loss of the clinical trust that makes AI adoption possible.

The evidence from organizations deploying AI at scale is clear: rigorous governance and operational AI can coexist. Providence St. Joseph Health processing 700 million patient notes with validated de-identification, Novartis deploying AI in air-gapped high-security environments, and Intermountain Health achieving efficiency gains through trustworthy automation all demonstrate that Zero Trust principles enable rather than constrain AI capabilities.

Zero Trust AI offers a path forward: a disciplined, security-first, governance-embedded approach that treats every AI interaction as potentially sensitive, demands continuous verification, logs everything immutably, and ensures human oversight for high-stakes decisions. This is not a theoretical framework; it is a practical implementation methodology grounded in established cybersecurity principles (NIST SP 800-207) and adapted for the unique requirements and risks of healthcare AI.

For hospitals embracing AI seriously, adopting Zero Trust AI is not a luxury or a future consideration. It is a present necessity. The alternative, deploying AI systems without appropriate governance, security, and oversight, is simply too risky given the patient safety, privacy, compliance, and institutional trust implications.

Hospitals that build their AI foundations on Zero Trust principles, rigorous governance, and continuous oversight will be the institutions that realize AI’s promise: safely, responsibly, and sustainably. Those that treat AI security as an afterthought will face the consequences through breaches, failures, and erosion of the clinical trust that took decades to build.

The technology is proven. The framework is clear. The question is whether healthcare organizations will commit to implementing AI with the rigor its risks demand.

FAQs

What is Zero Trust AI (ZTAI) in a hospital context?

Zero Trust AI is a security framework that treats AI models and their outputs as sensitive clinical infrastructure rather than optional software add-ons. Operating under the principle “never trust, always verify” and building on the Zero Trust Architecture defined in NIST Special Publication 800-207, ZTAI demands that every access request to an AI system be authenticated and authorized, regardless of whether it originates inside or outside the hospital network. This means AI systems operate under the same strict controls as EHR databases: least-privilege access, continuous authentication, comprehensive audit logging, and network segmentation. Unlike traditional security, which assumes internal systems can be trusted, Zero Trust AI recognizes that AI-specific vulnerabilities like prompt injection, model inversion, and training data poisoning require additional safeguards beyond perimeter-based security.

Why is traditional perimeter-based security insufficient for healthcare LLMs?

Traditional perimeter-based security fails to address the unique attack vectors that large language models introduce. While classic security focuses on preventing unauthorized access to systems, LLMs can be manipulated through adversarial inputs that cause unpredictable behavior, training data poisoning that corrupts model knowledge, and prompt injection that bypasses safety controls. Additionally, LLMs can inadvertently leak sensitive information through their outputs, not because of a security breach, but because they have learned patterns from training data that include PHI. Furthermore, because AI systems in hospitals often integrate deeply across workflows (documentation, coding, clinical decision support), a single compromised AI component can have systemic impact affecting thousands of patients. Zero Trust AI addresses these risks through continuous monitoring, strict access controls, and comprehensive audit trails that traditional security does not provide.

How can hospitals ensure AI governance and compliance with healthcare regulations?

Hospitals ensure AI governance through a combination of technical controls and organizational processes. Technical requirements include encryption of data in transit and at rest, regulatory-grade de-identification for any PHI leaving secure zones, and immutable audit logging that records every AI interaction with sufficient detail for regulatory review. Organizations processing clinical data at scale have demonstrated that systematic validation, such as having human experts review thousands of randomly sampled outputs, provides the documentation regulators require. Governance also demands human-in-the-loop workflows where clinicians or coders validate AI outputs before they enter production systems, particularly for high-stakes decisions affecting diagnosis, treatment, or billing. These workflows must define clear accountability: who approves AI deployments, who monitors performance, and who responds when errors occur. Regular assessments, including quarterly performance reviews, annual security audits, and bias monitoring across demographic groups, keep compliance current as both models and regulations evolve. Documentation of all these processes (model versions, decision logic, validation results, incident responses) forms the foundation for demonstrating compliance with HIPAA, FDA requirements, and other healthcare regulations.

What are the primary risks of inadequate AI governance in healthcare?

Inadequate AI governance exposes hospitals to several categories of severe risk. PHI leakage can occur through multiple mechanisms: overprivileged AI systems accessing data beyond clinical necessity, model outputs inadvertently including sensitive information from training data, or insecure logging systems storing patient data without proper protection. These breaches affect not just individual patients but potentially millions if systemic. Uncontrolled system access means compromised AI endpoints can become pivot points for broader network attacks, with AI agents that require access to multiple systems (EHRs, imaging, labs, billing) potentially enabling unauthorized modification of clinical or financial records. Lack of auditability makes it impossible to trace the provenance of AI-generated clinical decisions, undermining accountability when errors occur and making compliance impossible to prove during regulatory reviews. Algorithmic bias without monitoring can lead to systematic disparities in care quality across demographic groups, creating both patient harm and legal liability. The systemic nature of AI integration means these risks compound: a single governance failure can propagate across documentation quality, billing accuracy, clinical decision support, and population health analytics simultaneously, affecting entire patient populations before detection.

How do hospitals implement medical language models safely at scale?

Safe implementation requires treating AI as core infrastructure from the architectural design phase. Organizations should begin by implementing network microsegmentation that isolates AI systems (model servers, data stores, inference APIs) from general hospital networks, with strict firewall rules permitting only necessary traffic. For workflows involving PHI, secure enclaves or confidential computing provide hardware-enforced isolation designed to keep data inaccessible even to system administrators during processing. Identity and access management must enforce least-privilege principles: an AI documentation assistant needs access only to the current patient’s record, not the entire EHR database. Centralized API gateways mediate all AI access, providing a single control point for authentication, rate limiting, and monitoring. Organizations processing hundreds of millions of clinical documents demonstrate that comprehensive audit logging (capturing queries, responses, data accessed, and actions taken in immutable storage) enables both security monitoring and regulatory compliance. Human-in-the-loop workflows ensure clinicians validate AI outputs for high-stakes decisions before they affect patient care. Regular validation, including quarterly performance reviews, adversarial robustness testing, and bias audits, maintains safety as systems evolve. The key lesson from successful deployments: security and governance must be architectural requirements from day one, not features added after pilot deployment. Organizations that retrofit security onto existing AI systems consistently face challenges; those that build Zero Trust principles into initial architecture achieve both safety and operational scale.