
Annotation Lab v3.3.0 brings a highly requested new feature for displaying the confidence scores for NER preannotations as well as the ability to filter preannotations by confidence. Also, benchmarking data can now be checked for some of the models on the Models Hub page. This version also includes IAA charts for Visual NER Projects, upgrades of the Spark NLP libraries and fixes for some of the identified Common Vulnerabilities and Exposures (CVEs). Below are more details on the release content.

Confidence Scores for Preannotations

When preannotations are run on a Text project, each automatic annotation now carries one extra piece of information: the confidence score. The score shows how confident the model is in each labeled chunk. It is calculated from the benchmarking information of the model used for preannotation and from the score of each individual prediction. Confidence scores are available for Named Entity Recognition, Relation, Assertion, and Classification projects, and are also generated when using NER Rules.

On the Labeling screen, when the Prediction widget is selected, every preannotation in the Results section now has a score assigned to it. With the Confidence slider, users can filter out low-confidence labels before starting to edit or correct them. Both the “Accept Prediction” action and the “Copy Prediction to Completion” feature apply to the annotations left after filtering with the Confidence slider.

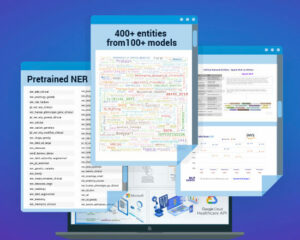
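The filtering behavior of the Confidence slider can be illustrated with a minimal sketch. This is not Annotation Lab code: the `Prediction` class, its field names, the sample values, and the assumption that scores lie between 0 and 1 are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """One preannotated chunk. Field names are illustrative, not the product's schema."""
    text: str
    label: str
    confidence: float  # assumed to lie in [0.0, 1.0]


def filter_by_confidence(predictions, threshold):
    """Keep only predictions whose confidence is at or above the threshold."""
    return [p for p in predictions if p.confidence >= threshold]


# Made-up sample predictions for demonstration only.
predictions = [
    Prediction("aspirin", "DRUG", 0.97),
    Prediction("headache", "SYMPTOM", 0.62),
    Prediction("Monday", "DATE", 0.31),
]

# Simulates moving the slider to 0.6: the low-confidence DATE chunk is dropped.
kept = filter_by_confidence(predictions, threshold=0.6)
for p in kept:
    print(f"{p.label}: {p.text} ({p.confidence:.2f})")
```

In this sketch, an action such as accepting the prediction would then operate on `kept` rather than on the full list, which mirrors how the filtered set is used on the Labeling screen.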

Benchmarking Data available on Models Hub

Benchmarking information related to some of the models published by John Snow Labs on the NLP Models Hub can now be seen by clicking the small graph icon next to the model name on the Models Hub page.

IAA charts are now available for Visual NER Projects

In previous versions, IAA (Inter-Annotator Agreement) charts were available only for text-based projects. With this release, Annotation Lab supports IAA charts for Visual NER projects as well.

Auto-save completions

Starting with version 3.3.0, the work of annotators is automatically saved behind the scenes. Users no longer risk losing their work in case of unforeseen events and do not have to hit the Save/Update button frequently.

Improvement of UX for Active Learning

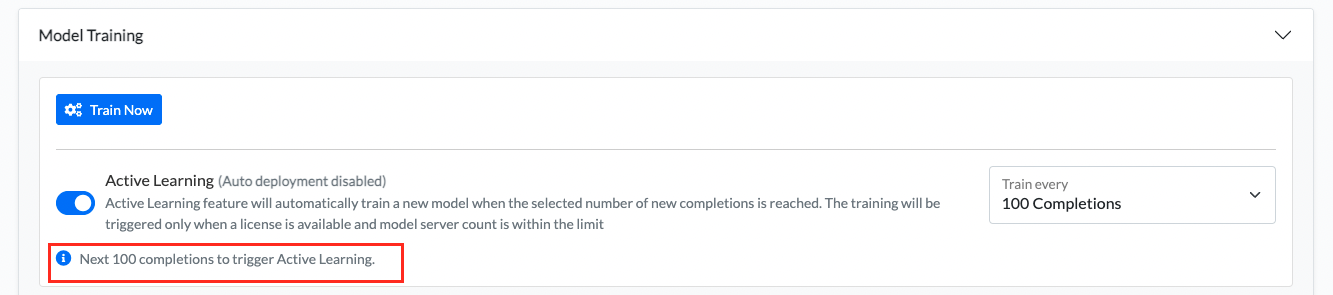

View completion countdown until model training is triggered

- When Active Learning is enabled for a project, users can now see how many completions are still missing until the next model training is automatically triggered.

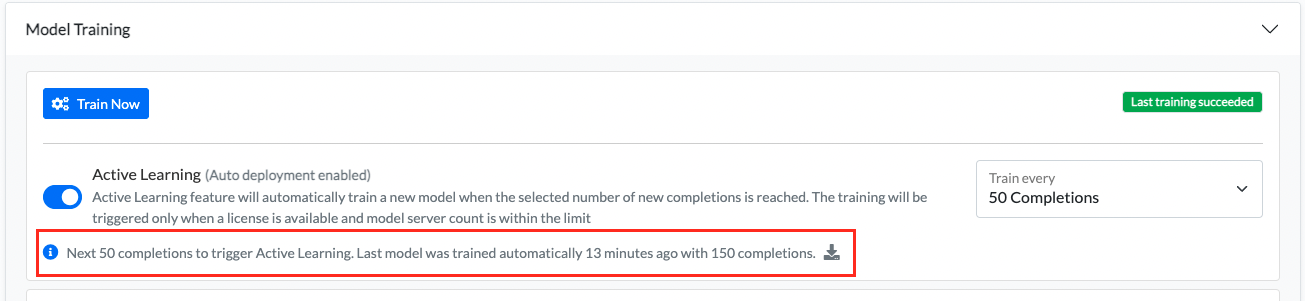

View info on previous training step

- Once an automatic training is complete, information about the previously triggered Active Learning run is displayed along with the number of completions required for the next training.
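The countdown shown to users amounts to simple arithmetic. The sketch below assumes, purely for illustration, that training is triggered each time the number of new completions reaches a multiple of a configured interval; the function name, its parameters, and the interval of 50 are hypothetical and not taken from the product.

```python
def completions_until_training(new_completions, trigger_every):
    """Return how many more completions are needed before the next training run.

    Assumes training fires whenever the completion count reaches a multiple
    of `trigger_every` (an illustrative rule, not the product's documented one).
    """
    if trigger_every <= 0:
        raise ValueError("trigger_every must be positive")
    if new_completions < 0:
        raise ValueError("new_completions must not be negative")
    # Completions collected since the last trigger point.
    since_last = new_completions % trigger_every
    return trigger_every - since_last


# With a hypothetical interval of 50 and 37 completions so far:
print(completions_until_training(37, 50))
```

Right after a training run has been triggered, the function returns the full interval again, which corresponds to the "completions required for the next training" figure displayed after a training step.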

Error/Warning message in case of insufficient resources

- When the conditions that trigger Active Learning for a project using a healthcare model are met and all available licenses are in use, an error message appears on the Training and Active Learning page asking the user to make room for the new training server. Once a valid license is uploaded or the user deletes one of the training/preannotation servers, Active Learning triggers the training again.

Upgrade of Spark NLP libraries

Annotation Lab now includes Spark NLP for Healthcare version 3.5.3 and Spark OCR version 3.13.0. The list of supported models on the Models Hub page has been updated accordingly.

Support for BertForSequenceClassification and MedicalBertForSequenceClassification models

Up until now, only NerDLModel, MedicalNerModel, MultiClassifierDLModel and ClassifierDLModel were supported for preannotations. This version adds support for:

- BertForTokenClassification

- MedicalBertForTokenClassifier

- BertForSequenceClassification

- MedicalBertForSequenceClassification

Miscellaneous

Disable user management options for SAML/AI Platform setup

Previously, the information of any user created through an external authentication mechanism could be edited and deleted on the Manage Users page. With this release, the information for such users can no longer be edited or deleted from the Annotation Lab UI. If user information needs to be added or a user needs to be deleted, this can be done from the Keycloak UI.

Security

The Annotation Lab team makes sure that the application is up to date with the available security fixes. In this release, the most recent supported versions of the DataFlows, Database, Active Learning and Redis images have been included. Redundant libraries and packages have also been removed from several images. K3S can be upgraded to the latest version supported by Annotation Lab using a script bundled with the installation artifacts.