The Annotation Lab’s final 2020 release is a major milestone for our team. It brings numerous frontend and backend improvements that increase speed and efficiency for all users, make it easier to manage teams and projects, and make the application more stable.

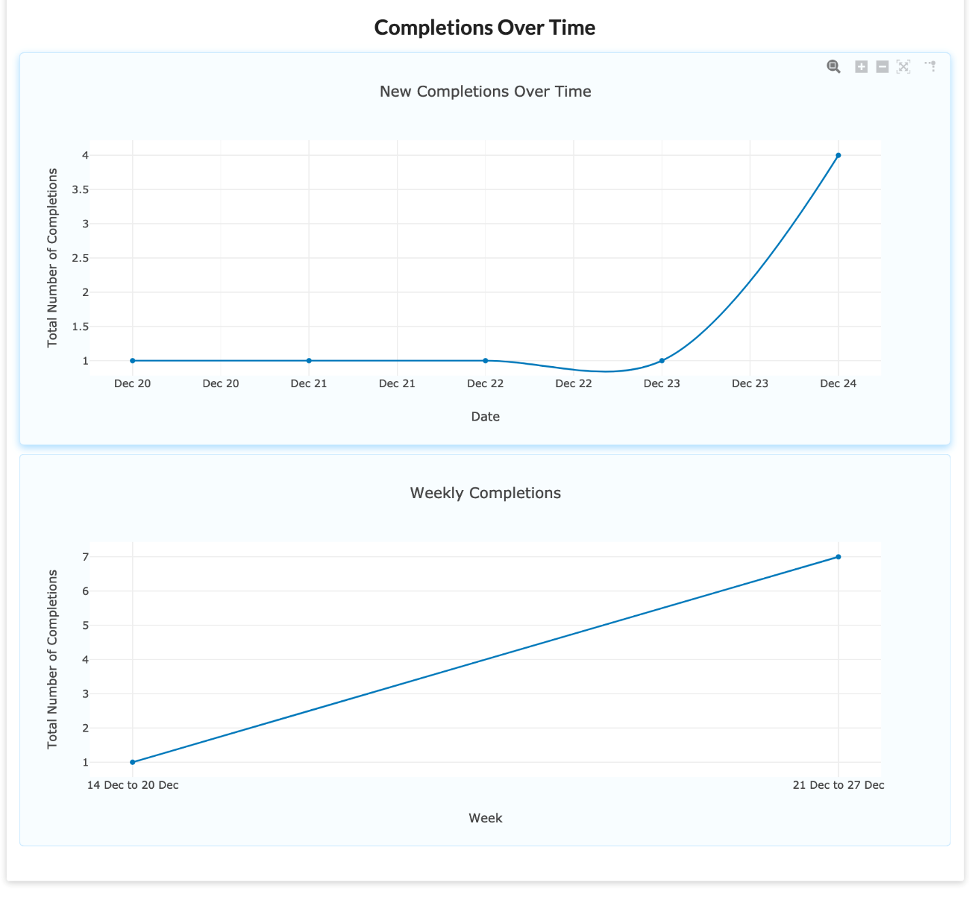

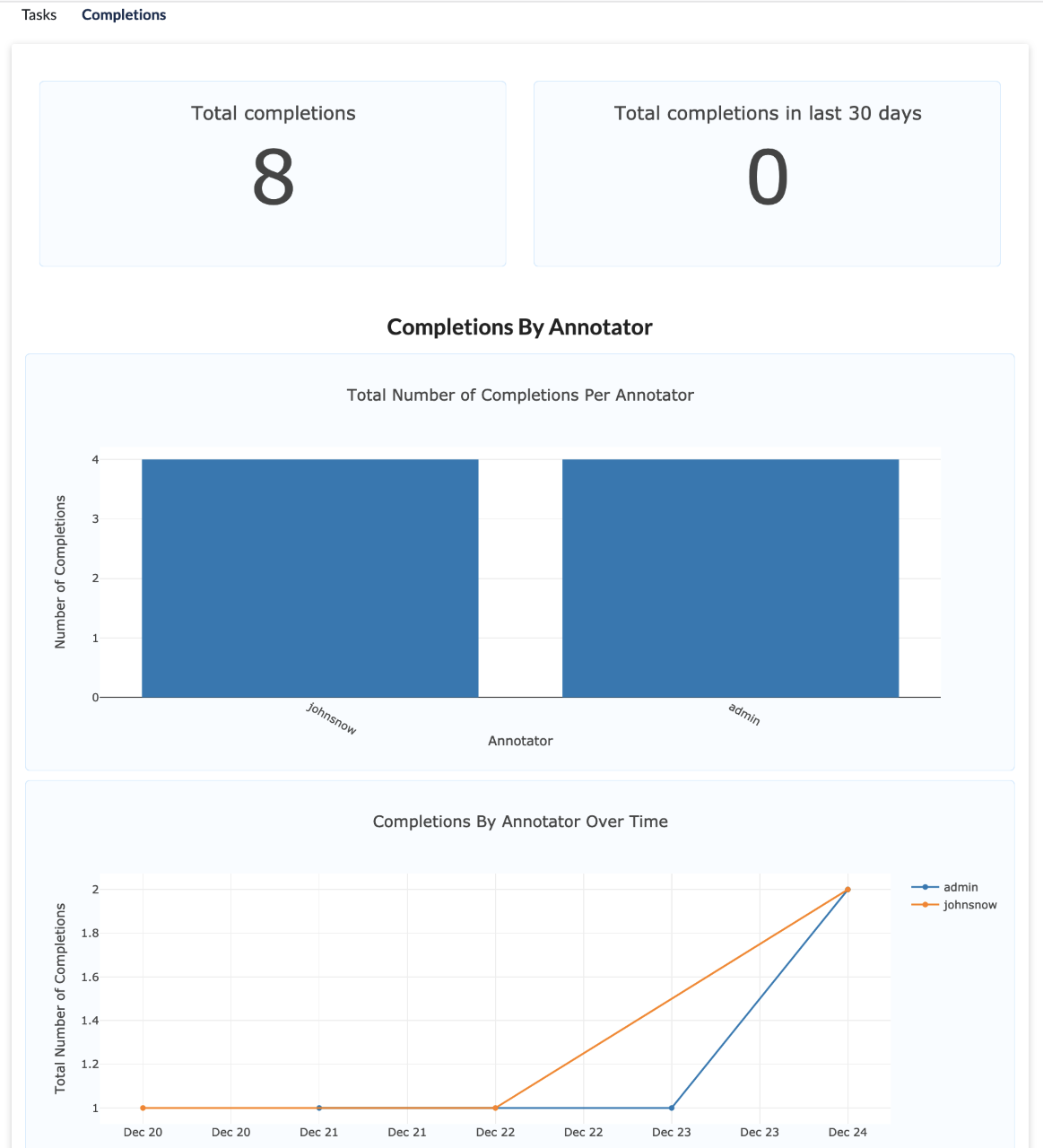

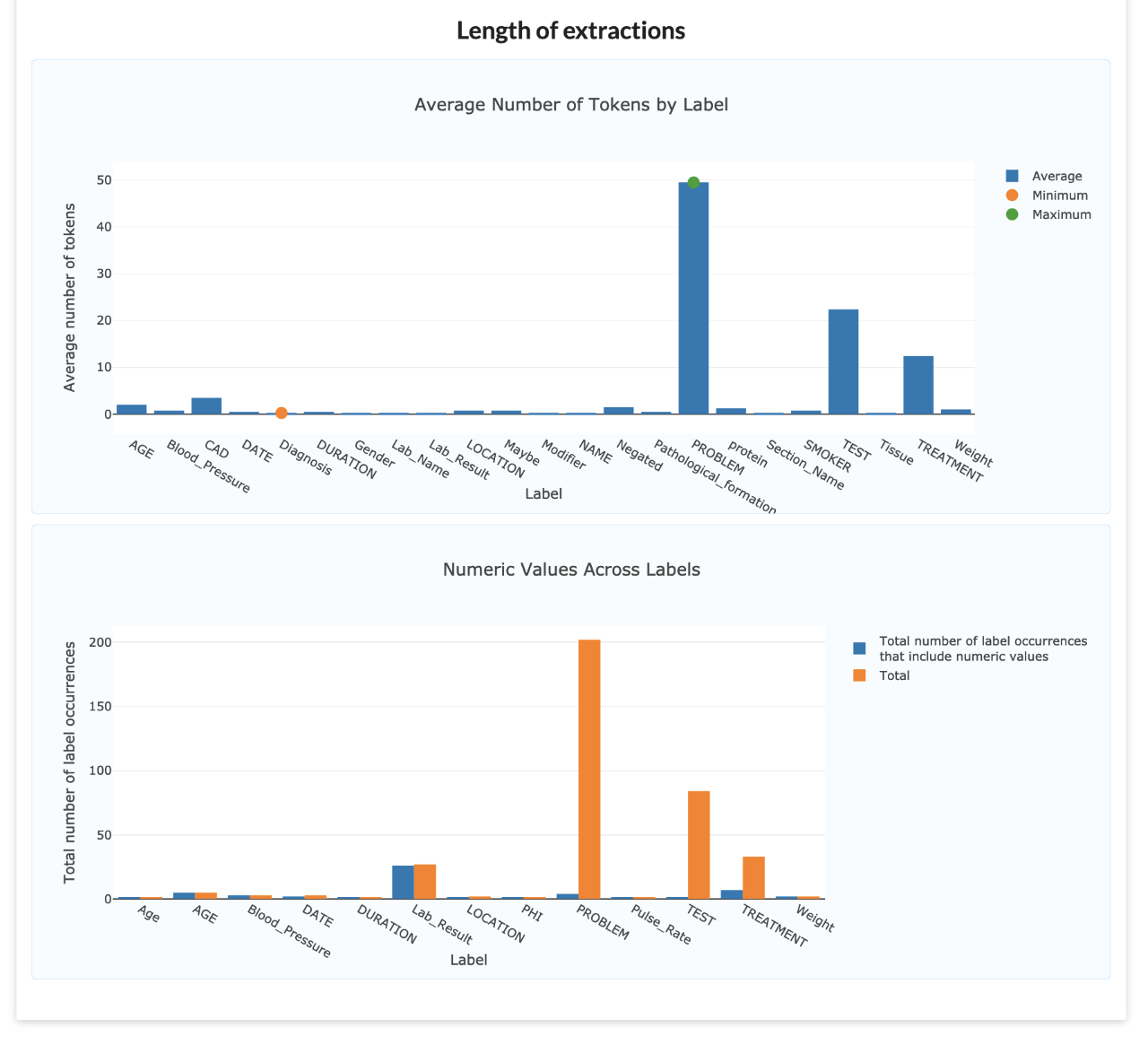

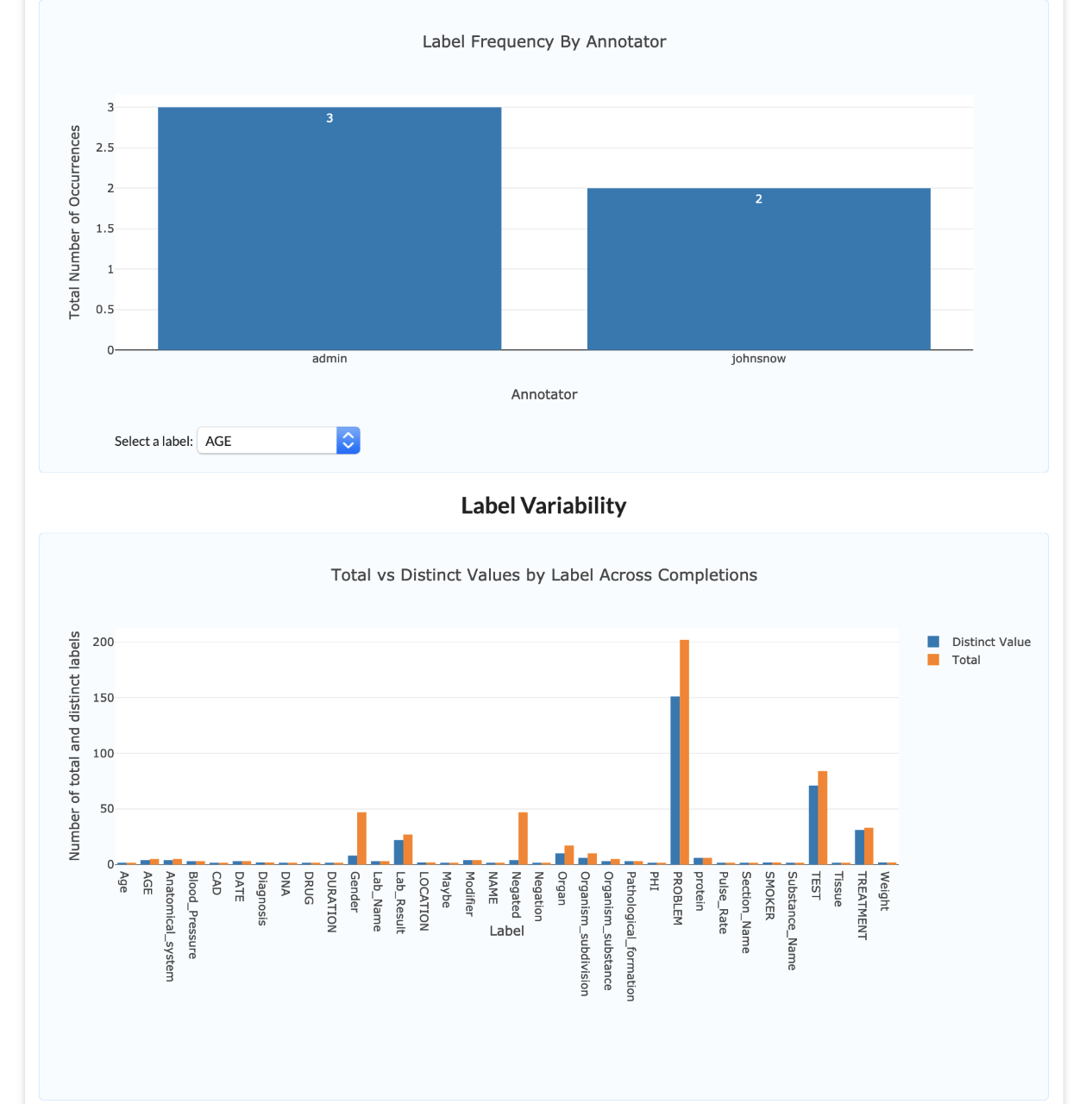

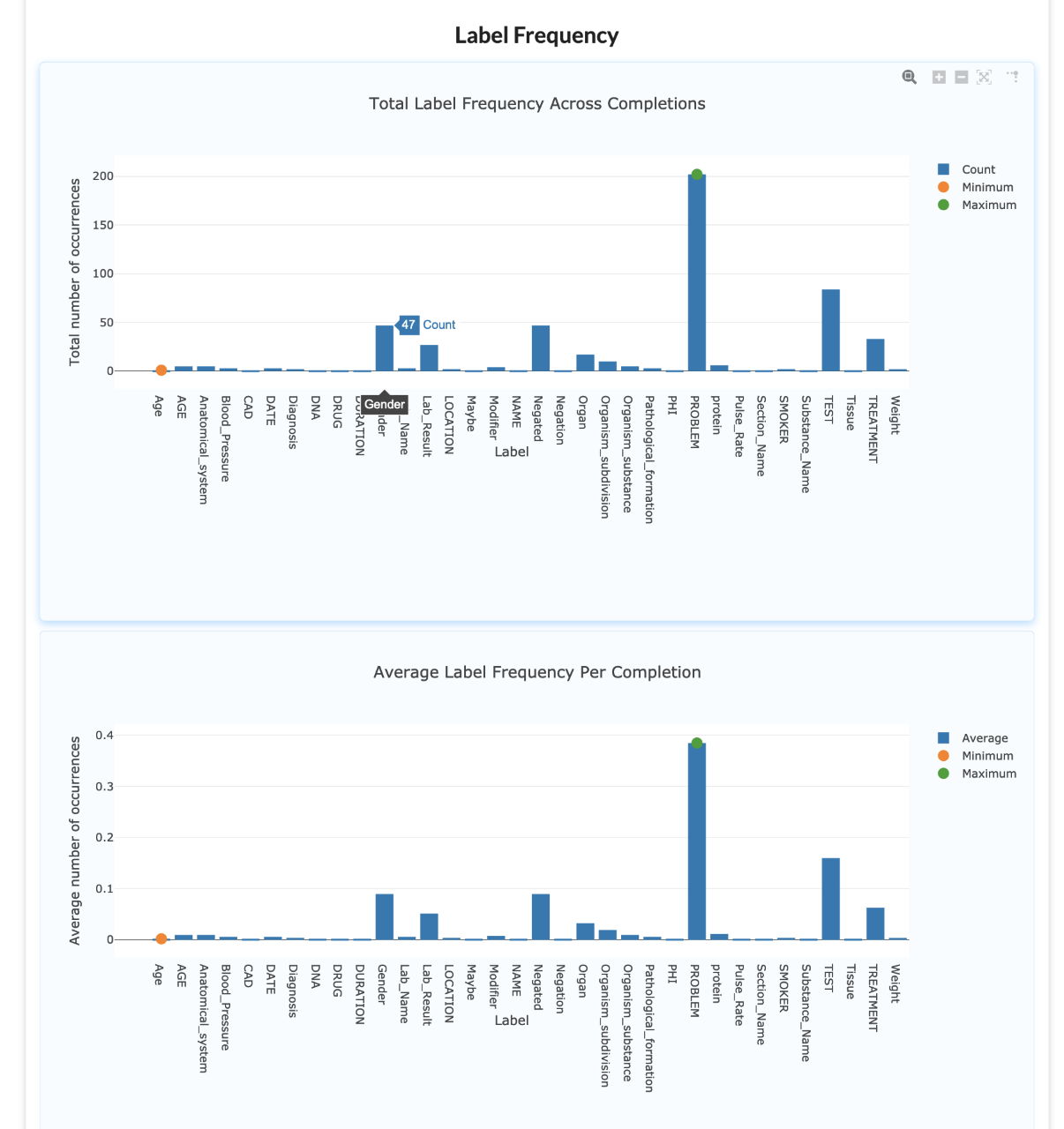

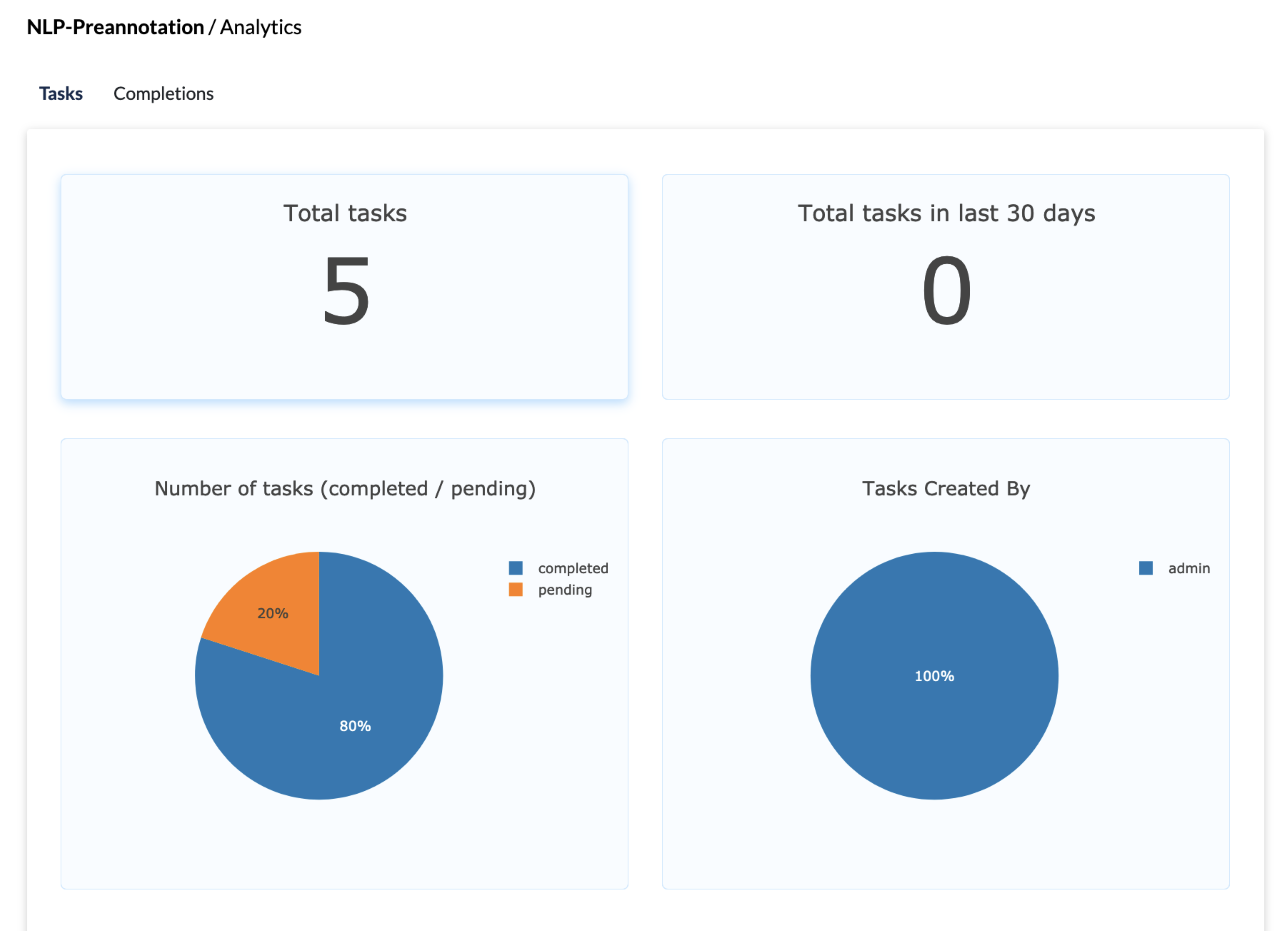

Project Analytics

Another major feature in this release is the analytics page, which lets users visualize 10 different metrics to track the state of a project. For NER-based projects, we have added 6 more charts in three categories: label frequency, label variability, and length of extractions.

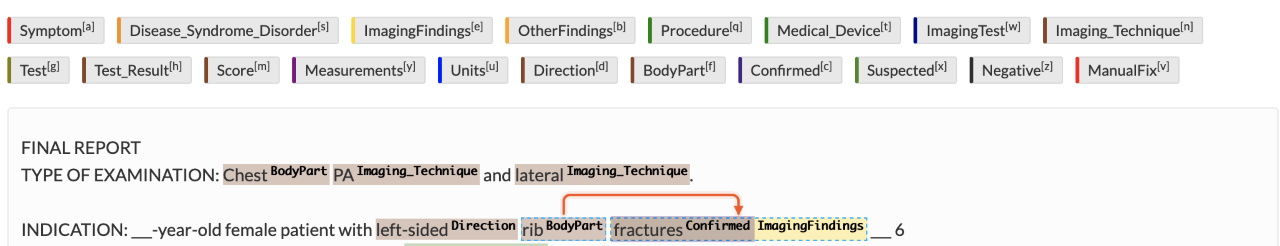
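As a rough illustration of the three NER chart categories, the sketch below computes label frequency, label variability, and extraction length from a list of labeled spans. Two things here are assumptions rather than Annotation Lab behavior: the `(label, text)` input format is hypothetical, and "variability" is taken to mean the number of distinct (case-insensitive) texts seen per label.

```python
from collections import Counter, defaultdict
from statistics import mean

def ner_chart_metrics(spans):
    """Compute per-label metrics from (label, text) pairs (hypothetical format)."""
    spans = list(spans)  # allow generators, since we iterate twice
    # Label frequency: how many extractions carry each label.
    frequency = Counter(label for label, _ in spans)
    distinct_texts = defaultdict(set)
    lengths = defaultdict(list)
    for label, text in spans:
        distinct_texts[label].add(text.lower())
        lengths[label].append(len(text))
    # Label variability (assumed definition): distinct texts per label.
    variability = {label: len(texts) for label, texts in distinct_texts.items()}
    # Length of extractions: mean character length per label.
    mean_length = {label: mean(values) for label, values in lengths.items()}
    return frequency, variability, mean_length

spans = [
    ("DRUG", "aspirin"),
    ("DRUG", "Aspirin"),
    ("DRUG", "ibuprofen"),
    ("DOSE", "10 mg"),
]
frequency, variability, mean_length = ner_chart_metrics(spans)
```

In this toy input, `DRUG` appears three times but with only two distinct texts, which is the kind of contrast the frequency and variability charts are meant to surface.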

Annotation UI Improvements

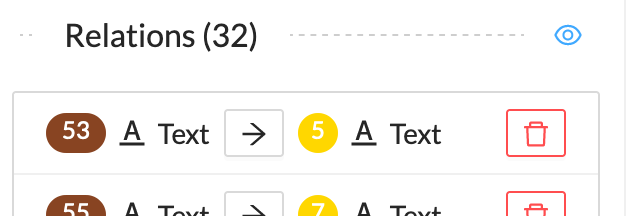

Users will see a revised UI with tweaks to how labels are presented. The labeling engine has been improved, so users can expect faster loading times on the labeling page. The labeling page also now displays relations between labels, and these relations can be toggled on and off using the eye button above the relations.

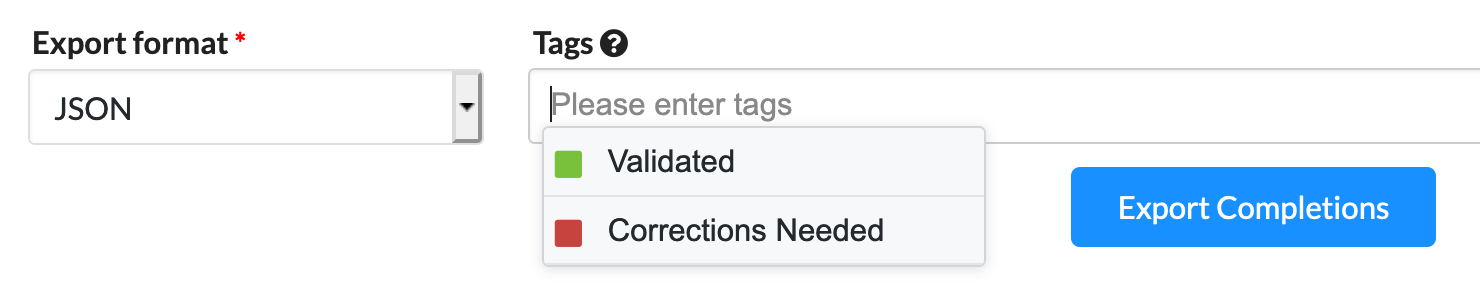

Export Validated Completions

We have also added the ability to export completions by tag, so data science teams can export only those task completions that have been validated.

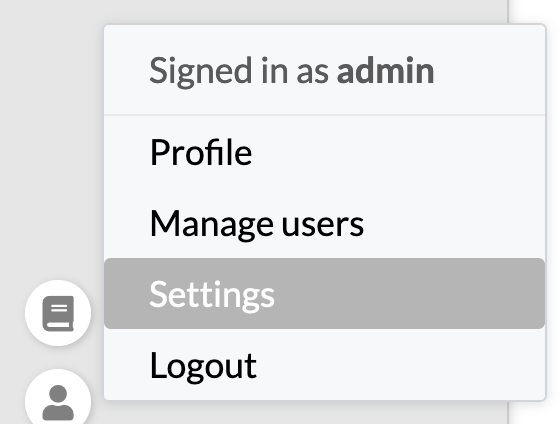

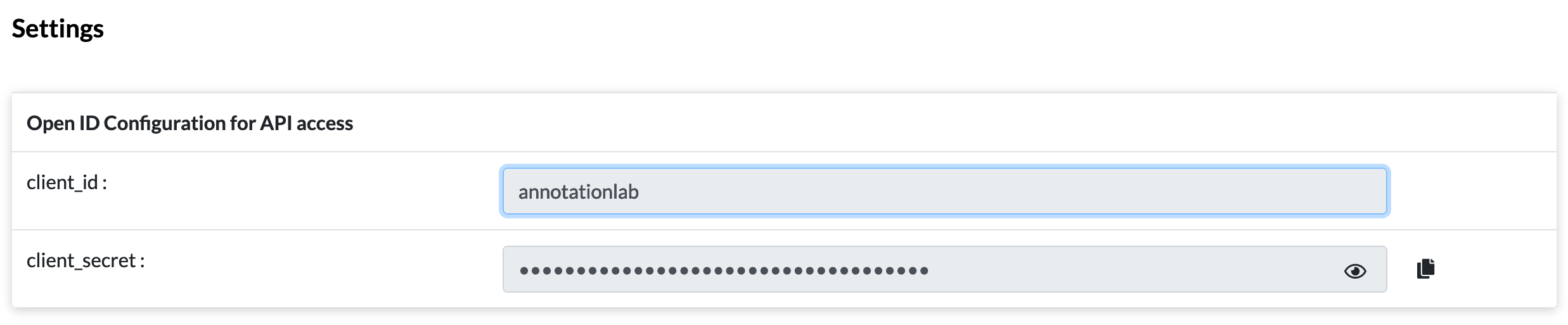
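The idea behind tag-based export can be sketched as a simple filter. The task structure below (a `tags` list on each task and a tag named `Validated`) is hypothetical and is not the actual export schema; the export itself happens inside Annotation Lab.

```python
def filter_tasks_by_tag(tasks, tag):
    """Keep only tasks whose (hypothetical) 'tags' list contains `tag`."""
    return [task for task in tasks if tag in task.get("tags", [])]

# Illustrative data only; field names are assumptions.
tasks = [
    {"id": 1, "tags": ["Validated"], "completions": [{"id": 101}]},
    {"id": 2, "tags": ["In progress"], "completions": [{"id": 201}]},
    {"id": 3, "completions": []},  # task with no tags at all
]
validated = filter_tasks_by_tag(tasks, "Validated")
```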

Easier API Access

We’ve added a new Settings page that lets admins see the client_id and client_secret and share them easily with users who want to use the Annotation Lab APIs.
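A minimal sketch of how these credentials might be used, assuming the API is protected by a standard OAuth2 token endpoint. The URL, the grant type, and the helper name `build_token_request` are all assumptions for illustration; check your deployment for the real endpoint and required fields. The function only builds the request and does not send it.

```python
from urllib.parse import urlencode
from urllib.request import Request

def build_token_request(token_url, client_id, client_secret, **grant_fields):
    """Build (but do not send) a form-encoded OAuth2 token request."""
    form = {"client_id": client_id, "client_secret": client_secret, **grant_fields}
    body = urlencode(form).encode("utf-8")
    return Request(
        token_url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )

# Placeholder values: the URL and grant type are assumptions,
# not documented Annotation Lab endpoints.
request = build_token_request(
    "https://annotationlab.example.com/token",
    client_id="my-client-id",
    client_secret="my-client-secret",
    grant_type="client_credentials",
)
```

Sending the request with `urllib.request.urlopen(request)` would, under the same assumptions, return a JSON body containing an access token to pass on subsequent API calls.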

Backend Improvements: Speed, Stability, and Simplified Installation

On the backend, we made major improvements to the task loading logic. This prevents inconsistencies between the data loaded in the UI and the data in the database, and users can expect faster page loads across the board.

The lead time to create a completion is now recorded, which further enhances our API and the metrics collected from Annotation Lab.

We undertook a major effort to simplify our helm chart installation, allowing us to deploy quickly and efficiently on AWS and making it compliant with our AI Platform offering.

The pre-annotation server also saw major improvements in its resource requirements: the CPU/memory footprint was reduced by 4x. We also implemented hot reloading of the Spark context in case of failure, making the system more fault-tolerant. Finally, improved logging now identifies which project and task IDs are being pre-annotated, which makes debugging easier.
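The restart-on-failure pattern can be sketched generically as follows. This is not the pre-annotation server's code: `make_context` and `job` are hypothetical callables, and a plain dictionary stands in for the Spark context so the example runs without Spark installed.

```python
def run_with_context_restart(make_context, job, max_restarts=1):
    """Run job(context); on failure, rebuild the context and retry."""
    context = make_context()
    for attempt in range(max_restarts + 1):
        try:
            return job(context)
        except Exception:
            if attempt == max_restarts:
                raise  # out of restarts: surface the original error
            context = make_context()  # replace the failed context

# Stand-ins for demonstration: the first context always fails.
created = []

def make_context():
    created.append(len(created))
    return {"id": created[-1]}

def flaky_job(context):
    if context["id"] == 0:
        raise RuntimeError("context lost")
    return f"pre-annotated with context {context['id']}"

result = run_with_context_restart(make_context, flaky_job)
```

In a real PySpark setup, the factory would presumably create a fresh session (for example via `SparkSession.builder.getOrCreate()`) after stopping the failed one; that wiring is left out here.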

Summary of Enhancements & Fixes

Frontend:

- Major frontend upgrade #754

Highlights:

- Labeling engine improved for faster initialization and deserialization

- UI improvements for showing labels with consistent colors

- Relations visualizations for NER Projects

- Nested labeling improvements

Bug Fixes:

- Fixed issue with dates in Firefox

- Fixed issue with the duration shown for a completion created less than 1 minute ago

- Fixed issue with stretched entities when labeling text with long lines

- Broken regions of polygons and brushes are no longer saved

- Fixed per-region audio labeling with predictions

- Relation arrows view #705

- Implement analytics screen #662 #753 #776

- Tag based exports #706

- New settings page for admins to see client id and client secret #642

Backend:

- Optimization in task loading logic #736

- Check if ‘lead_time’ can be removed from the completions/predictions #762

- Simplify helm chart installation #816 #838

- Optimize resource requirements for preannotation server #835

- restart spark context on failure #788

- preannotator server must show logs on which project/task id it's annotating #692

Bug Fixes:

- screen blanking in image classification project #791

- cannot change labeling config after removing completions #696

- Set created_ago field as current date-time, if not present in completion/predictions #727

- Reload project in memory whenever config is updated #713

- Need to create a config for NER with only single label #756

- Tests are failing on develop branch #759

- Intermittent frontend test failure #691

- completions created less than a minute ago show up as "Created a while ago" #745

- tabs inside the menu not showing proper active tab #730

- deleting a task of one config type and then creating another task of another config type results in an error in setup page #732

- Labeling page fails for the project with config of type Pairwise comparison #722

- Project setup fails for Filtering long labels list #721

- Export does not work in Safari #760

- screen blanking during incorrect relation additions #689

- project_view giving wrong results when importing predictions #769

- Resolve issues with running tests using Jenkins #716

- Relogin after executing logout test #746