As generative AI continues to make waves in healthcare, it is becoming clear that LLMs alone are not enough for safe, scalable automation. The most successful real-world systems combine the reasoning capabilities of LLMs with the precision, structure, and governance of domain-specific NLP. This hybrid architecture delivers the best of both worlds: fluent, contextual understanding from LLMs, anchored by the consistency and auditability of NLP pipelines.

John Snow Labs is at the forefront of this shift. Our solutions can integrate LLMs and classical NLP within Spark-based pipelines that support real-time healthcare applications, from summarization and decision support to coding, quality reporting, and de-identification.

The true power of modern healthcare AI emerges when the generative capabilities of Large Language Models (LLMs) are anchored by the structural precision of specialized NLP pipelines. LLMs excel at narrative synthesis: they summarize large volumes of unstructured text and generate clear explanations for complex clinical questions. However, these models require a “governance layer” to be safe for clinical use.

This is where NLP pipelines become indispensable. They excel at deterministic tasks that LLMs often struggle with, such as extracting structured entities (specific diagnoses or medications, for example) and mapping them with high fidelity to standardized terminologies like ICD-10 or SNOMED CT. NLP pipelines are also optimized for applying explainable validation rules and for handling the large-scale batch processing that enterprise workloads require.

By integrating these two technologies into a hybrid architecture, you can create a workflow where the LLM proposes insights while the NLP pipeline validates them against structured, coded clinical data. This division of labor lets NLP handle the heavy lifting of extraction while the LLM handles synthesis and reasoning over what was extracted. Similarly, the LLM can handle front-end engagement while the NLP engine works in the background to encode and structure the data. Ultimately, this partnership delivers an end-to-end automation framework that is both highly capable and compliant with medical standards.
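The propose-and-validate pattern described above can be sketched in a few lines of plain Python. This is a minimal illustration, not John Snow Labs code: the `TERM_TO_ICD10` lookup table is a toy stand-in for a real clinical NLP pipeline, and `Proposal`, `extract_codes`, and `validate` are hypothetical names chosen for the example. In practice the proposals would come from an LLM call, which is omitted here.

```python
from dataclasses import dataclass

# Toy terminology (assumption for illustration): surface term -> ICD-10-CM code.
# A production system would use a trained entity resolver instead.
TERM_TO_ICD10 = {
    "type 2 diabetes": "E11.9",
    "hypertension": "I10",
    "asthma": "J45.909",
}


@dataclass(frozen=True)
class Proposal:
    """A code suggested by the LLM, with its free-text justification."""
    code: str
    rationale: str


def extract_codes(note):
    """Deterministic step: dictionary lookup standing in for the NLP pipeline."""
    text = note.lower()
    return {code for term, code in TERM_TO_ICD10.items() if term in text}


def validate(proposals, note):
    """Split LLM proposals into those the extraction supports and those it does not."""
    supported = extract_codes(note)
    accepted = [p for p in proposals if p.code in supported]
    rejected = [p for p in proposals if p.code not in supported]
    return accepted, rejected


note = "Patient with type 2 diabetes and hypertension, well controlled."
proposals = [
    Proposal("E11.9", "diabetes mentioned in the assessment"),
    Proposal("J45.909", "possible asthma"),  # not supported by the note
]
accepted, rejected = validate(proposals, note)
```

Rejected proposals are returned rather than silently dropped, so a reviewer can see what the LLM suggested and why the deterministic check did not support it.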

What are real-world use cases for LLM + NLP pipelines?

John Snow Labs customers leverage hybrid LLM and NLP pipelines for cancer registry automation, where LLMs summarize clinical findings while NLP extracts precise TNM staging details and biomarkers for structured reporting.

In clinical summarization workflows, LLMs generate initial draft summaries from patient notes, with NLP post-processing to capture and map essential details like medications, allergies, and procedures to standardized formats.

For risk adjustment tasks, LLMs analyze full clinical documentation to identify relevant conditions, while NLP tags diagnoses, validates coding assertions, and ensures HCC compliance.

De-identification processes use NLP to mask protected health information (PHI) such as names and dates, followed by LLMs to detect and redact subtle narrative risks in free-text sections.

These hybrid pipelines deliver higher accuracy, greater explainability through traceable steps, and improved scalability compared to standalone LLMs or traditional NLP approaches.
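As one illustration, the two-stage de-identification flow described above might be wired together as follows. This is a sketch under stated assumptions: the regular expressions are a deliberately simple stand-in for a trained PHI detection model, and `stub_narrative_redactor` is a hypothetical placeholder for the LLM pass, hard-coded so the example runs without a model.

```python
import re

# Stand-ins for an NLP de-identification model (assumption for illustration).
DATE_RE = re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")
NAME_RE = re.compile(r"\b(?:Mr|Mrs|Ms|Dr)\.\s+[A-Z][a-z]+")


def mask_structured_phi(text):
    """Stage 1: mask well-formed identifiers such as dates and titled names."""
    text = DATE_RE.sub("<DATE>", text)
    return NAME_RE.sub("<NAME>", text)


def stub_narrative_redactor(text):
    """Stage 2 placeholder: a real system would call an LLM here to flag
    indirect identifiers; this stub redacts one hard-coded phrase."""
    return text.replace("the mayor of Springfield", "<REDACTED>")


def deidentify(text, narrative_redactor):
    """Run the deterministic mask first, then the narrative pass on its output."""
    return narrative_redactor(mask_structured_phi(text))


raw = "Dr. Smith saw the patient, the mayor of Springfield, on 03/14/2023."
clean = deidentify(raw, stub_narrative_redactor)
```

Running the deterministic stage first means the second stage never sees the direct identifiers, which matters when the LLM is hosted outside the environment that holds the raw notes.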

How does John Snow Labs enable hybrid workflows?

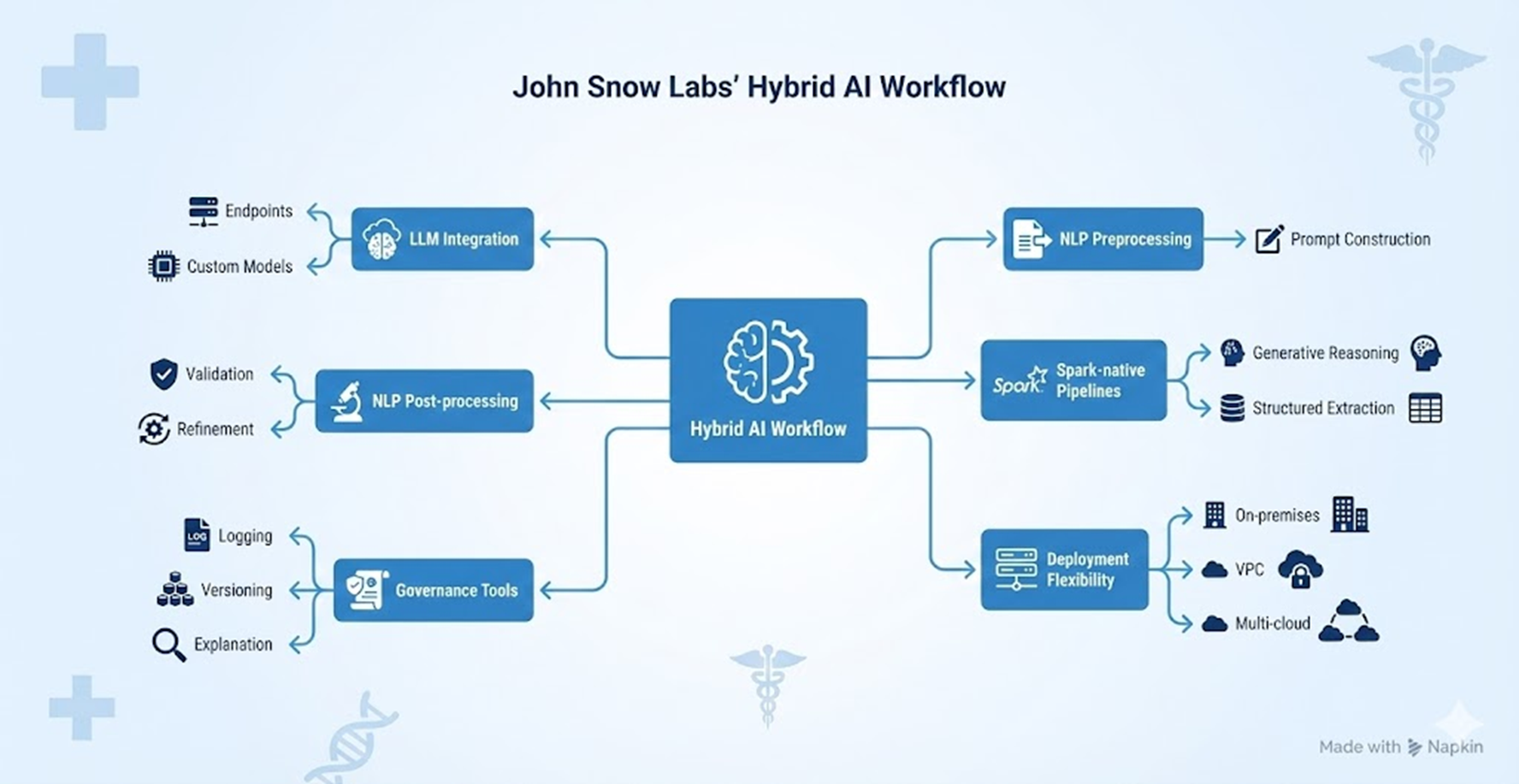

John Snow Labs solutions support hybrid workflows by integrating LLMs through endpoints or custom models, with NLP preprocessing used to construct effective prompts. NLP post-processing then validates and refines LLM outputs to ensure clinical accuracy and reliability. These capabilities extend to Spark-native pipelines that blend generative reasoning from LLMs with structured extraction tasks, enabling scalable processing of healthcare data.
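To make the pre- and post-processing roles concrete, here is a small sketch in plain Python. It does not use the John Snow Labs API: `KNOWN_MEDICATIONS` is a hypothetical lexicon standing in for a medication extraction model, and the prompt wording and function names are assumptions made for the example. The LLM call itself is left out; `missing_medications` would be applied to whatever summary the model returns.

```python
# Hypothetical lexicon standing in for a medication NER model.
KNOWN_MEDICATIONS = ("metformin", "lisinopril", "albuterol")


def extract_medications(note):
    """Pre-processing: find medications in the note (case-insensitive lookup)."""
    text = note.lower()
    return [m for m in KNOWN_MEDICATIONS if m in text]


def build_prompt(note, medications):
    """Ground the prompt in the entities extracted upstream."""
    med_list = ", ".join(medications) if medications else "none found"
    return (
        "Summarize the clinical note below in two sentences.\n"
        f"Medications identified upstream: {med_list}.\n"
        "Mention every listed medication and do not add others.\n\n"
        f"Note:\n{note}"
    )


def missing_medications(summary, medications):
    """Post-processing: list extracted medications the summary failed to mention."""
    text = summary.lower()
    return [m for m in medications if m not in text]


note = "Continue Metformin 500 mg and lisinopril 10 mg daily."
meds = extract_medications(note)
prompt = build_prompt(note, meds)
# Pretend this string came back from the LLM endpoint.
gaps = missing_medications("Patient continues metformin.", meds)
```

A non-empty `gaps` list is a signal to regenerate the summary or route it for human review rather than accept it as is.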

Governance tools within the platform log, version, and explain each workflow step, providing full traceability for compliance in regulated environments. Models are deployable on-premises, in virtual private clouds (VPCs), or across multi-cloud setups, allowing organizations to architect workflows tailored to their operational requirements, data security standards, and regulatory needs. This flexibility supports efficient, compliant hybrid AI systems for clinical NLP and decision support.
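The idea of logging and versioning each workflow step can be illustrated with a generic audit wrapper. This is a sketch only, not the platform's governance tooling: `run_step` and the record fields are hypothetical, and content hashes are used so the log can be retained without storing the clinical text itself.

```python
import hashlib
from datetime import datetime, timezone


def _sha256(text):
    """Hash text so the audit log can reference content without containing it."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def run_step(name, version, func, payload, audit_log):
    """Run one pipeline step on a string payload and append an audit record."""
    result = func(payload)
    audit_log.append({
        "step": name,
        "version": version,
        "input_sha256": _sha256(payload),
        "output_sha256": _sha256(result),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    })
    return result


audit_log = []
text = run_step("normalize", "1.0.0", str.lower, "Patient On METFORMIN", audit_log)
text = run_step("trim", "1.2.0", str.strip, text + "  ", audit_log)
```

Because each record carries the step name, its version, and hashes of its input and output, a reviewer can later confirm which version of which step produced a given result.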

Why is this hybrid approach the path forward?

This hybrid approach is the definitive path forward because it mirrors the dual nature of clinical workflows, combining high-level language understanding with the data structures healthcare requires. Beyond simple alignment with how clinicians think, this architecture provides the essential transparency needed to support safety and regulatory auditability in high-stakes environments. The modular nature of hybrid systems allows them to scale efficiently across diverse teams and legacy platforms, facilitating a smooth, incremental adoption of AI rather than a disruptive “rip-and-replace” transition. Ultimately, the future of the industry does not involve LLMs replacing existing clinical expertise or infrastructure; instead, it relies on LLMs and NLP working in tandem to build robust systems that both clinicians and regulators can truly trust.